Acknowledgments

The authors are grateful for the support of the Ministry of Education and Science of Spain through the project “Enfoque integral y probabilista para la evaluación del riesgo sísmico en España” (Comprehensive and probabilistic approach for the evaluation of seismic risk in Spain), CoPASRE (CGL2011-29063). They also thank Spain’s Ministry of Economy and Competitiveness for its support within the framework of the researcher training program (FPI).

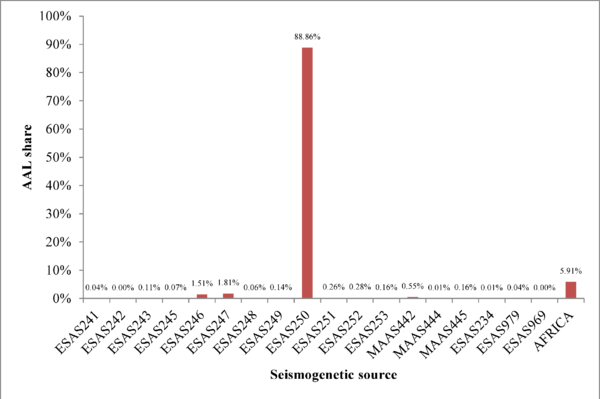

The authors also thank Professor Mario Ordaz, Dr. Gabriel A. Bernal, Dr. Mabel Cristina Marulanda, César Velásquez and Daniela Zuloaga for their contributions and encouragement during this work.

FOREWORD

It has been widely argued that earthquakes are natural but disasters are not. For that reason, efforts in different disciplines have been directed at reducing the damage, losses and casualties associated with these events. Whilst important advances have been made in developed countries, especially in reducing casualties, more than 90% of the deaths caused by natural events still occur in developing countries (UNISDR, 2002; Rasmussen, 2004). Although worrying, this figure also shows that reducing it is not an impossible task in the short to medium term if the right actions are taken at both the technical and political levels, as has happened in most developed countries.

Seismic risk is considered a catastrophic risk because it is associated with events of high impact (in terms of both severity and geographical extent) and low frequency of occurrence. These characteristics, together with the inherent uncertainties (for example, the magnitude of the next earthquake, where it will occur and how buildings will perform when subjected to earthquake forces), mean that hazard and risk must be quantified and assessed differently from traditional actuarial approaches; a fully probabilistic approach is therefore required. Within a probabilistic framework, uncertainties must not only be quantified, considered and included, but also propagated throughout the analysis.

Probabilistic seismic hazard and risk modelling makes it possible to account for losses from events that have not yet occurred but are likely to happen given the hazard environment. This approach is analogous to classical actuarial techniques used for other perils, in which a probability distribution is fitted to historical data and its tail is modelled to account for loss ranges that have not yet been recorded.
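The actuarial idea of fitting a distribution and extrapolating its tail can be sketched in a few lines. This is a minimal illustration, assuming a lognormal model fitted to a hypothetical per-event loss record; the data, the choice of distribution and the function names are assumptions for illustration only and are not part of the methodology used in this monograph.

```python
import math
from statistics import NormalDist, mean, stdev

def fit_lognormal(losses):
    """Fit a lognormal model using the sample mean and standard deviation
    of the log-losses."""
    logs = [math.log(x) for x in losses]
    return mean(logs), stdev(logs)

def prob_loss_exceeds(loss, mu, sigma):
    """P(L > loss) for a single event under the fitted lognormal model."""
    return 1.0 - NormalDist(mu, sigma).cdf(math.log(loss))

# Hypothetical per-event loss record (arbitrary monetary units).
historical = [1.2, 0.8, 3.5, 2.1, 0.5, 5.0, 1.7, 0.9, 2.8, 4.2]
mu, sigma = fit_lognormal(historical)

# The fitted tail gives a non-zero probability to losses never recorded,
# e.g. twice the largest observed loss.
unseen = 2 * max(historical)
print(f"P(L > {unseen}) = {prob_loss_exceeds(unseen, mu, sigma):.4f}")
```

For earthquakes the historical record is usually too short for such a fit to be reliable, which is why the probabilistic approach described here generates the missing events from the hazard model instead.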

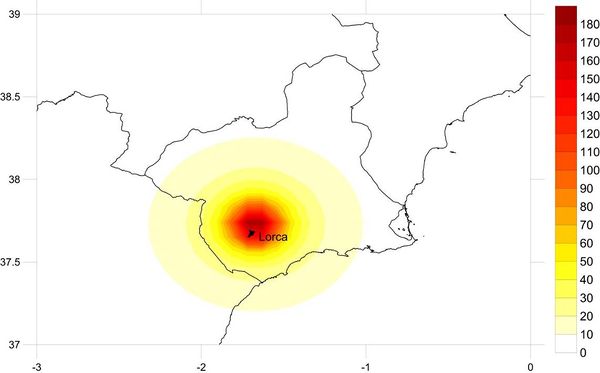

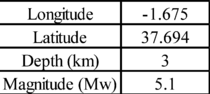

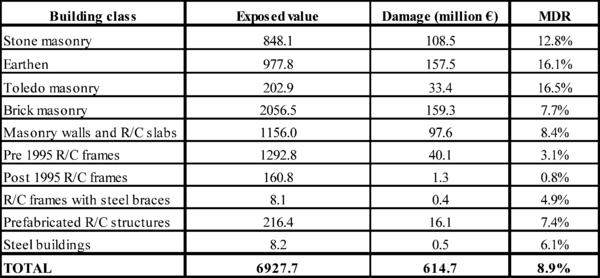

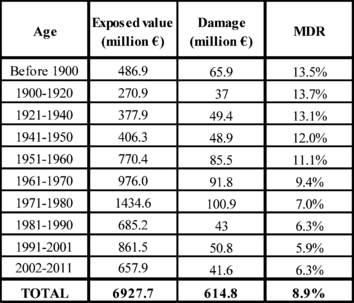

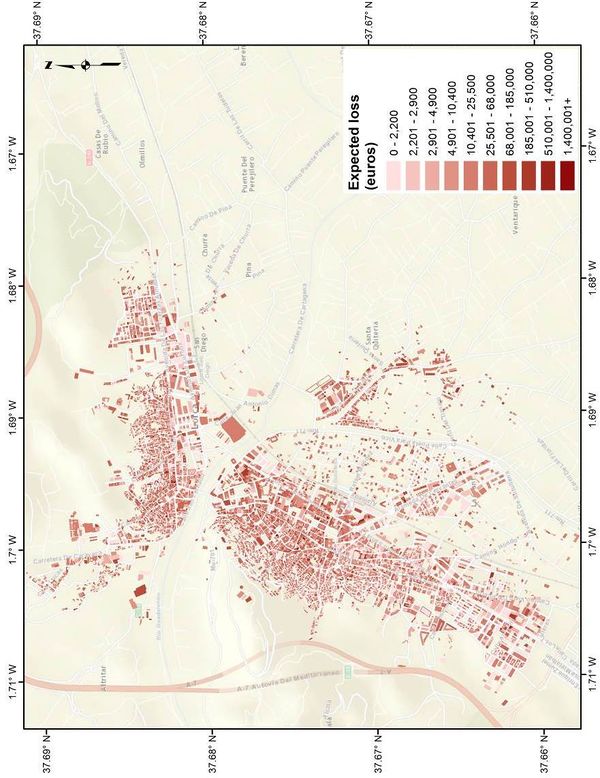

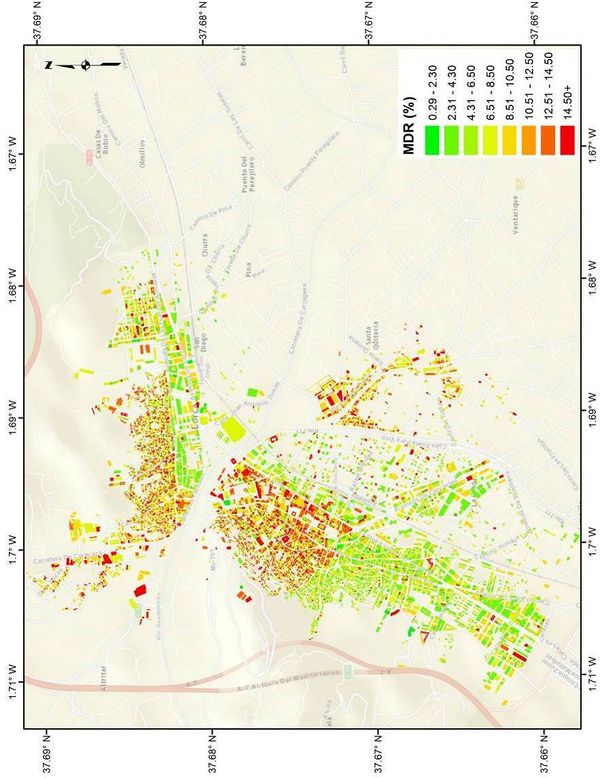

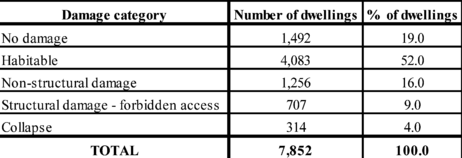

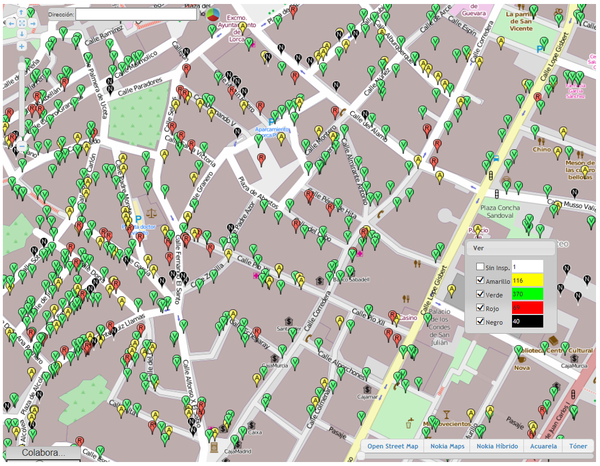

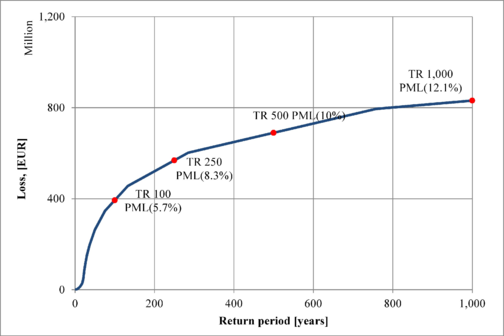

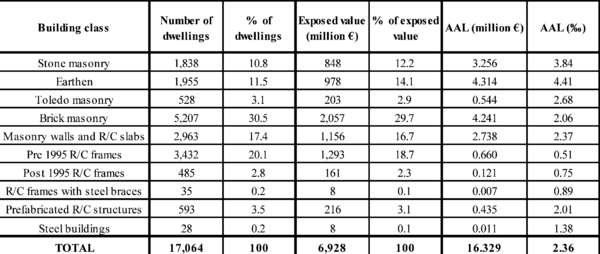

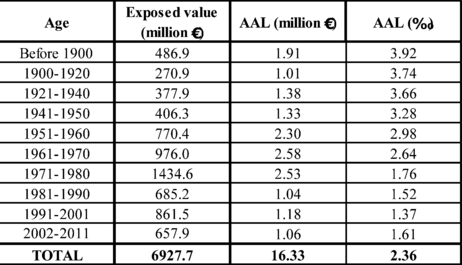

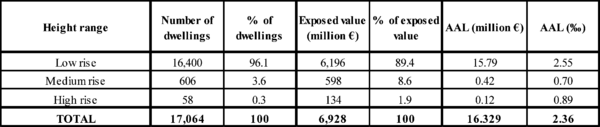

This work explains how probabilistic seismic risk assessments can be performed at different resolution levels using, strictly speaking, the same methodology (or arithmetic), and how to obtain results expressed in the same metrics. It also highlights the differences in the inputs required at each level and the reasons for them (e.g. dynamic soil response effects, which are only relevant in local assessments). First, a country-level assessment, similar to that presented by Cardona et al. (2014), is performed using a coarse-grained exposure database that includes only the building stock in the urban areas of Spain. Second, an urban seismic risk assessment with the level of detail of state-of-the-art studies, such as those developed by Marulanda et al. (2013) and Salgado-Gálvez et al. (2013; 2014a), is performed for Lorca, Murcia. In both cases, the fully probabilistic seismic risk results are expressed in terms of the loss exceedance curve, which is the main output of this type of analysis and from which different probabilistic risk metrics, such as the average annual loss and the probable maximum loss, as well as several other relationships, can be derived (Marulanda et al., 2008; Bernal, 2014). Because damage data are available for the May 2011 Lorca earthquake, the losses observed in the city's building stock were compared with those modelled using an earthquake scenario with similar location, magnitude and spectral accelerations. The comparison is presented in terms of expected losses (in monetary terms) and damage levels, comparing the modelled mean damage ratios with those observed in post-earthquake surveys.
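To illustrate how the average annual loss (AAL) and the probable maximum loss (PML) are read from a loss exceedance curve, the sketch below uses a hypothetical event loss table of (annual frequency, loss) pairs. It assumes, for simplicity, that each event produces a single deterministic (mean) loss; full probabilistic models also integrate the loss uncertainty conditional on each event. The function names and numbers are illustrative assumptions.

```python
def exceedance_rate(event_table, loss):
    """nu(loss): annual rate of events whose loss exceeds `loss`."""
    return sum(freq for freq, event_loss in event_table if event_loss > loss)

def average_annual_loss(event_table):
    """AAL: sum of annual frequency x loss over all events."""
    return sum(freq * event_loss for freq, event_loss in event_table)

def probable_maximum_loss(event_table, return_period):
    """Smallest loss whose exceedance rate is at most 1 / return_period,
    i.e. the loss read off the (step-shaped) loss exceedance curve."""
    target = 1.0 / return_period
    candidates = [0.0] + sorted(event_loss for _, event_loss in event_table)
    for loss in candidates:
        if exceedance_rate(event_table, loss) <= target:
            return loss
    return candidates[-1]  # not reached: the largest loss has rate 0

# Hypothetical event loss table: (annual frequency, loss in millions).
events = [(0.02, 1.0), (0.01, 5.0), (0.004, 20.0), (0.001, 80.0), (0.0005, 150.0)]

print("AAL:", round(average_annual_loss(events), 3))
for tr in (100, 500, 1000):
    print(f"PML({tr} yr):", probable_maximum_loss(events, tr))
```

The return period associated with any loss level is simply the inverse of its exceedance rate, 1 / nu(loss).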

This work aims to present a comprehensive probabilistic seismic risk assessment for Spain in which the different stages of the calculation process are explained and discussed. The stages of this assessment can be summarized as follows:

- Probabilistic seismic hazard assessment

- Assembly of the exposure database

- Seismic vulnerability assessment

- Probabilistic damage and loss calculation

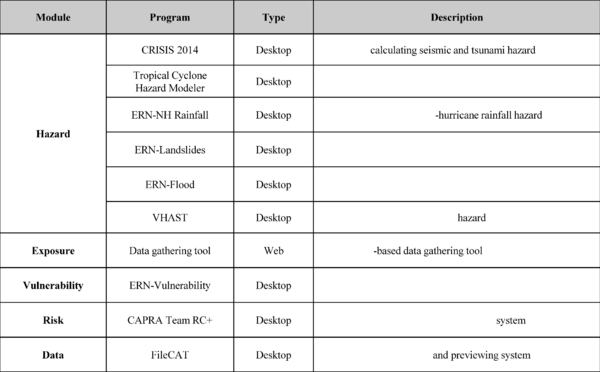

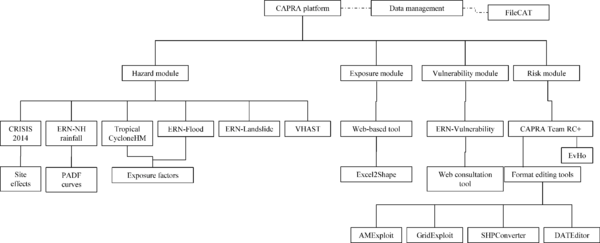

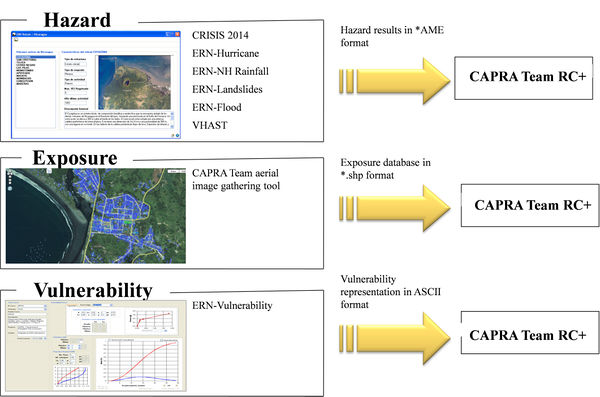

Nowadays, several tools are available to estimate catastrophe risk by means of probabilistic approaches. While most of them share common procedures, their methodologies are either not clearly explained or not available at all to the general public, even though the trend is to promote and use open-source models (GFDRR, 2014a). After reviewing some of the available tools capable of performing at least one of the stages of this study (e.g. hazard, vulnerability) (McGuire, 1967; Bender and Perkins, 1987; Field et al., 2003; Silva et al., 2014), the CAPRA¹ Platform (Cardona et al., 2010; 2012; Velásquez et al., 2014) was chosen because of its flexibility, its compatibility with assessments performed at different resolution levels and its open-source/freeware characteristics. The CAPRA Platform comprises different modules, of which the following have been used in this monograph:

- CRISIS2014 (Ordaz et al., 2014) is the latest version of the seismic hazard module of the CAPRA Platform. It allows the probabilistic estimation of seismic hazard considering several geometrical and seismicity models. Besides calculating intensity exceedance curves and uniform hazard spectra, it can output the hazard results as a set of stochastic scenarios to be used later in a fully probabilistic and comprehensive seismic risk assessment.

- ERN-Vulnerabilidad (ERN-AL Consortium, 2011) is the vulnerability module of the CAPRA Platform. It allows calculating, calibrating and modifying seismic vulnerability functions using several methodologies. The module includes a library of vulnerability functions for several building classes that can be used directly, reviewed and/or modified to capture the characteristics of specific building conditions.

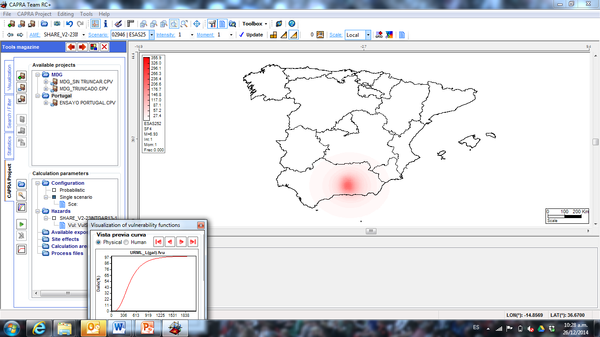

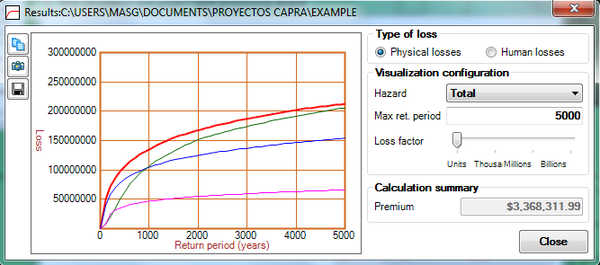

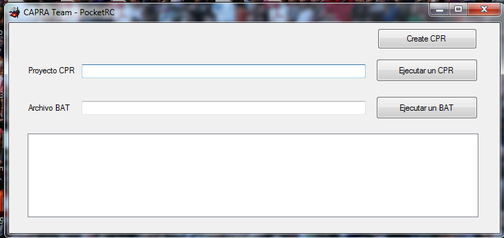

- CAPRA Team RC+ is the latest version of the probabilistic risk calculator of the CAPRA Platform. It performs the full convolution between the hazard and the vulnerability of the exposed assets to obtain physical risk results in terms of the loss exceedance curve. This version differs computationally from earlier ones in that it includes a new parallelization process that can run on most of today’s personal computers.
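The convolution of hazard and vulnerability can be written as nu(l) = sum over events i of Pr(L > l | event i) times the annual frequency of event i. The sketch below is a hypothetical, dependency-free illustration of that expression for a single asset, not the implementation used by CAPRA Team RC+. It assumes, as is common in models of this type, that the damage ratio given the intensity follows a beta distribution defined by its mean and coefficient of variation (i.e. the vulnerability function already evaluated at each event's intensity). Pr(L > l | event i) is estimated here by sampling only to avoid external libraries; a closed-form beta survival function (for example `scipy.stats.beta.sf`) would normally be used instead.

```python
import random

def beta_parameters(mean, cov):
    """Method-of-moments beta parameters (a, b) from a mean damage ratio and
    its coefficient of variation; requires variance < mean * (1 - mean)."""
    var = (cov * mean) ** 2
    common = mean * (1.0 - mean) / var - 1.0
    if common <= 0:
        raise ValueError("variance too large for a beta distribution")
    return mean * common, (1.0 - mean) * common

def exceedance_curve(events, value, thresholds, n_samples=20_000, seed=1):
    """nu(l) = sum_i Pr(L > l | event i) * annual frequency of event i.

    Each event is (annual_frequency, mean_damage_ratio, cov).
    Pr(L > l | event i) is estimated by sampling beta damage ratios."""
    rng = random.Random(seed)
    rates = [0.0] * len(thresholds)
    for freq, mdr, cov in events:
        a, b = beta_parameters(mdr, cov)
        losses = [value * rng.betavariate(a, b) for _ in range(n_samples)]
        for k, threshold in enumerate(thresholds):
            p_exceed = sum(loss > threshold for loss in losses) / n_samples
            rates[k] += freq * p_exceed
    return rates

# Hypothetical single asset (value 1,000,000) and three scenarios.
events = [(0.02, 0.05, 1.0), (0.005, 0.20, 0.6), (0.001, 0.50, 0.4)]
thresholds = [0, 100_000, 300_000, 600_000]
for l, nu in zip(thresholds, exceedance_curve(events, 1_000_000, thresholds)):
    print(f"loss > {l:>9,}: annual exceedance rate {nu:.5f}")
```

Because every event contributes a probability of exceedance rather than a single loss, even moderate scenarios add to the rate of large losses, which is how the loss uncertainty is propagated into the curve.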

Only direct physical losses are considered in this analysis, although indirect and secondary effects can turn an earthquake into a major disaster (Albala-Bertrand, 2006). With that in mind, these results, and some of the inputs used to obtain them, can serve as inputs for further calculations beyond the scope of this analysis, in order to quantify those effects and involve other disciplines (Barbat, 1998; Carreño et al., 2004; 2005; Marulanda et al., 2009).

The risk identification process is the first step of a comprehensive disaster risk management scheme (Cardona, 2009) and may provide an order of magnitude of the budget required for subsequent stages, such as mitigation strategies (structural intervention or retrofitting of existing structures), urban planning regulations, long-term financial protection strategies (Andersen, 2002; Freeman et al., 2003) and emergency planning. Since this approach makes it possible to quantify losses before a disaster occurs (a disaster being understood as the materialization of existing risk conditions), ex-ante measures such as cat-bonds, contingent loans, disaster reserve funds and traditional insurance and reinsurance mechanisms can be considered to cope with the associated costs, instead of the ex-post measures that are usually followed (Marulanda et al., 2008; Marulanda, 2013). Risk assessment has also been identified as a core indicator in the set of priorities of the Hyogo Framework for Action (HFA), and several challenges have recently been identified by UNISDR (2014) as a contribution towards the development of policy indicators for the Post-2015 framework on disaster risk reduction.

Since uncertainties have become an issue of major interest in the different stages of catastrophe risk modelling, their existence, their sources and the way they are considered in this study are discussed for each aspect of the seismic risk modelling. This is not because the topic is new, but because the way uncertainties are dealt with has attracted attention as catastrophe risk models (Cat-Models) are increasingly used. Today the trend has shifted from blindly trusting the results reported by risk modelers to understanding, interacting with and debating what the models do, which aspects they consider and how the different hypotheses introduced throughout the process affect the final results. This monograph is therefore an effort to explain, in a transparent and comprehensive way and through a step-by-step example, how risk can be calculated in probabilistic terms, how the inputs influence each stage, what the obtained results mean and how the outputs of a probabilistic risk assessment can be used as inputs in other areas of disaster risk management.

A full color version of this monograph can be found at: http://www.cimne.com/vpage/2/108/Publications/Monographs

(1) Comprehensive Approach to Probabilistic Risk Assessment (www.ecapra.org)

1. SEISMIC RISK AS A PUBLIC RISK

This section presents the importance of identifying, assessing and quantifying seismic risk within a comprehensive disaster risk management scheme. Because of the characteristics of earthquakes and the short timeframe covered by the records, seismic risk cannot be treated prospectively on the basis of historical records alone; a probabilistic approach is required to consider events that have not yet occurred, together with the different uncertainties associated with hazard and vulnerability. Quantifying seismic risk has recently attracted interest in many fields of earthquake engineering, such as seismic hazard assessment, structural vulnerability and damage and loss estimation, which is one reason why several tools, both commercial/proprietary and open-source, have been developed over the past 25 years. What is new nowadays is not the use of the tools themselves but the interest in understanding them among users who, years ago, only wanted to know the results and trusted the models blindly. This has also raised interest in uncertainties, the way they are considered and their effects on the risk calculation process. Finally, some remarks on defining an acceptable risk level are presented, with the aim not of defining one but of showing the most relevant aspects associated with that concept (not only the technical ones), highlighting their implications and the minimum characteristics such a level should have if it is defined and/or implemented.

1.1 INTRODUCTION

Seismic risk is per se a public risk (May, 2001), since it is centrally produced, widely distributed, has a low frequency of occurrence and, in most cases, is beyond the control of those who can be affected by it. Generally speaking, efforts to reduce it do not hold public attention over long periods, because of the vague and erroneous idea that little, if anything, can be done. Seismic risk is a matter of both public interest and welfare since, when an earthquake occurs, besides the damage to buildings and infrastructure there are also casualties (both deaths and injuries), emergency response costs, business interruption and societal disruption.

Seismic risk has a lot to do with awareness and perception: only in places where events have occurred within the last one or two generations is there collective memory, and there it is easier to find strict building code enforcement and good design and construction practices. Conversely, those same requirements tend to be very lax in places where large events have not yet occurred.

Regardless of the hazards considered, disaster risk management is a fundamental pillar of the sustainability of any system, because ignoring increasing risk (mainly due to newly exposed and vulnerable assets) makes the situation unaffordable (Douglas, 2014). Moreover, although from the structural engineering perspective disasters are assessed in terms of damaged buildings and infrastructure, it is important to realize that every disaster also has a political dimension (Woo, 2011). Understanding disasters, with the main objective of reducing their effects, therefore requires a comprehensive and multidisciplinary approach involving experts from the social and economic sciences, among others (Cardona et al., 2008a; 2008b).

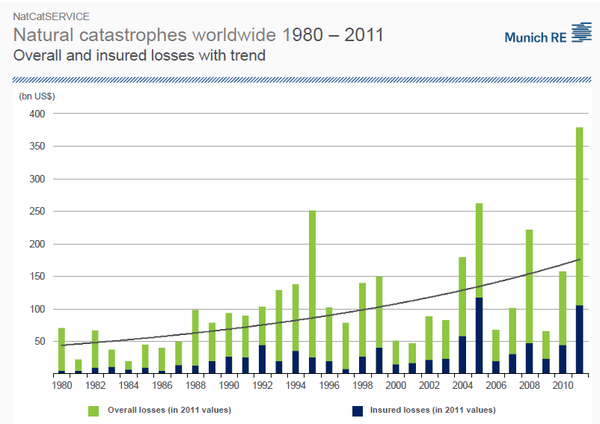

Recently, it has been argued that natural catastrophes are more frequent than before and that the number of events and associated losses show an increasing trend. Annual losses (overall and insured) are commonly presented in plots such as that shown in Figure 1.1 (Münchener Rückversicherungs-Gesellschaft, 2012), where, in absolute values, the increasing trend is indeed present. Nevertheless, it is important to contextualize those losses over time and to understand that, because of normal development processes worldwide, mainly leading to denser and bigger urban settlements, more assets are exposed every day, so the exposed value both increases and becomes more concentrated. Given this, what can be stated is that catastrophic events are now more expensive than before, not necessarily more frequent. Insured losses assessed at global level also need to be contextualized using insurance penetration indexes, which differ greatly from region to region. Useful additional information for contextualizing historical insured losses would therefore be the trend in global insurance premium payments, so that losses can be presented in relative rather than absolute terms.

Source: Munich RE NatCatSERVICE
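The difference between absolute and relative trends can be shown with a small hypothetical series. The sketch below assumes annual losses and an index of exposed value (or of premiums paid) that grow at the same pace; both series are invented for illustration and are not taken from Figure 1.1. The absolute losses show a clear upward trend, while the losses normalized by exposure show none.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

# Hypothetical series: annual losses and an index of exposed value
# (or of premiums paid), both in arbitrary units.
years = [2000, 2001, 2002, 2003, 2004]
losses = [10.0, 12.0, 15.0, 18.0, 22.0]
exposure = [100.0, 120.0, 150.0, 180.0, 220.0]

relative = [l / e for l, e in zip(losses, exposure)]
print("trend in absolute losses:", ols_slope(years, losses))
print("trend in losses relative to exposure:", ols_slope(years, relative))
```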

There is no consensus on the effects of earthquakes on long-term economic performance at country level. While some authors have found them to be important when the lost stock is not replaced or when ground shaking damages critical infrastructure (Auffret, 2003; Benson and Clay, 2003), others have found that losses in the capital stock do not have important consequences for economic growth, highlighting that disasters are a development problem but not a problem for development (Albala-Bertrand, 1993), and that they can even boost other economic sectors.

The effects of a disaster should also be assessed over a timeframe that includes not only the damage and losses caused by the event (direct impact) but also the costs associated with emergency response and reconstruction. Reconstruction can even stimulate some economic sectors, raising their productivity above pre-disaster levels, so that the overall economy ends up with better performance and indicators (Hallegatte and Przyluski, 2010). Disasters can also be seen as opportunities to update and improve the capital stock and, because of that, can even be related to the concept of Schumpeterian creative destruction.

Other authors (Jaramillo, 2009) state that what determines the long-term effects of a disaster is the quality of the reconstruction. That quality is, of course, affected by the level of planning available for it which, in turn, is directly correlated with the knowledge and understanding of risk in the area of interest.

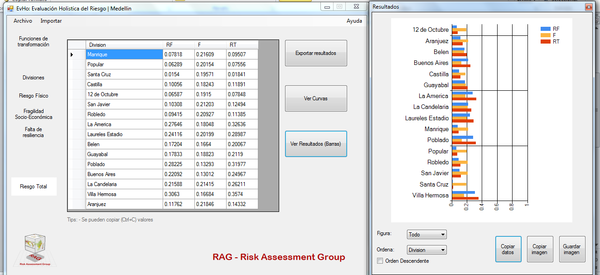

Assessing risk consists of calculating the probabilities of occurrence of specific events, in this case earthquakes, and their potential consequences (Kunreuther, 2002). The outputs are useful for designing ex-ante strategies focused on preparedness instead of ex-post strategies focused on emergency response, a paradigm shift proposed by the HFA ten years ago. Different tools, as presented in the introduction, have been developed to perform seismic risk assessments, most of them in probabilistic terms. Their outputs differ depending on the objective of the analysis, the intended use of the results and the geographical scale of the study. For example, a Minister of Finance may want to know the potential earthquake losses at national level in order to account for them as contingent liabilities (Polackova, 1999) in development plans, whereas the damage distribution at urban level in a secondary city may not be useful information for the same official. Conversely, seismic risk at urban level is of course of interest to a city’s mayor in order to define or update emergency plans, specific structural retrofitting measures or local collective insurance plans (Marulanda et al., 2014).

In most cases, seismic risk results are expressed in terms of economic losses or damage levels but, using the available models, it is also possible to estimate the number of casualties, both deaths and injuries, if an earthquake strikes a city; this additional information is useful for designing emergency plans and for assessing the capacity to cope with the disaster under different conditions.

A risk that is not perceived cannot explicitly be collateralized and, of course, this has several implications in different fields. Probabilistic seismic risk assessments, in addition to quantifying possible future losses, play a fundamental role in the risk awareness process and constitute a powerful tool for risk communication. The fact that an earthquake has not happened recently in a city is better understood as a matter of luck than as a guarantee that the city is safe over time. There are cases where cities with very different historical seismic activity (low and high) have similar hazard levels in the medium to long term (e.g. for 475- and 975-year return periods), and the seismic risk is even higher in the city with lower recent seismic activity than in the more seismically active one (Salgado-Gálvez et al., 2015a).
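The return periods mentioned above can be translated into probabilities of exceedance over a given exposure time. Assuming that events occur as a Poisson process with annual rate 1 / return period (the usual assumption behind these figures), 475 and 975 years correspond to roughly 10% and 5% probability of exceedance in 50 years. The helper names below are illustrative.

```python
import math

def prob_exceedance(return_period, exposure_years):
    """Probability of at least one exceedance in `exposure_years`,
    assuming Poisson occurrence with annual rate 1 / return_period."""
    return 1.0 - math.exp(-exposure_years / return_period)

def return_period_for(prob, exposure_years):
    """Inverse relation: return period that gives probability `prob`
    of at least one exceedance in `exposure_years`."""
    return -exposure_years / math.log(1.0 - prob)

for tr in (475, 975):
    print(f"{tr}-year return period: {prob_exceedance(tr, 50):.3f} in 50 years")
print(f"10% in 50 years: {return_period_for(0.10, 50):.1f}-year return period")
```

This shows that a "rare" event with a long return period still has a non-negligible probability of occurring within the lifetime of a building, which is the point behind the risk communication argument above.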

1.2 CAT-MODELS

The use of catastrophe risk models (Cat-Models) has boomed over the past 25 years, mainly to quantify exposure to catastrophic events and risk accumulation by hazard and by region, to calculate the required monetary reserves and to assess the risk-bearing capacity of companies, insurers and reinsurers, among others. The insurance and reinsurance industry is one of the heaviest users of this kind of model, for activities such as pricing catastrophe risk, controlling risk accumulation, estimating reserves for different loss levels and exploring risk transfer values and mechanisms (Chávez-López and Zolfaghari, 2010). The main objective of Cat-Models should be understood as providing the order of magnitude of the overall loss potential associated with natural hazards (Guy Carpenter, 2011), not exact figures to be compared directly with those recorded after an event. As the British statistician George Box stated, “all models are wrong but some are useful”; it is therefore important to know in advance the capabilities, strengths and limitations of the models to ensure that they are applied in appropriate contexts. Cat-Models are powerful tools that can be very useful for the purposes they were developed for, and their misuse or misunderstanding should not be seen as a limitation or shortcoming of the product.

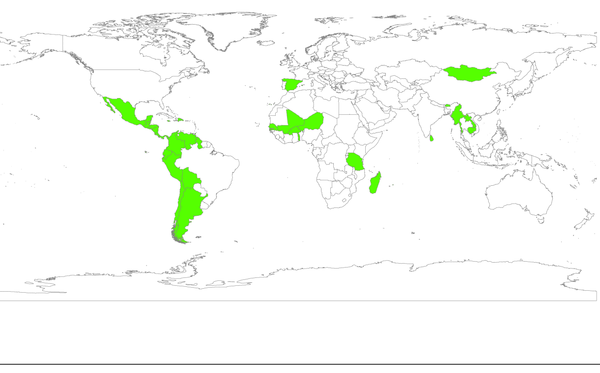

Two types of Cat-Models exist. The first are proprietary models, such as those of Risk Management Solutions (RMS), AIR Worldwide and EQECAT, developed by companies that calculate risk from perils of different origins (e.g. geological, hydrological, terrorism) mainly for the insurance and reinsurance industry. These are licensed tools whose users, despite knowing how to operate them, in some cases do not know the full details of the data they contain (e.g. hazard and vulnerability models). Insurance and reinsurance companies sometimes also have their own proprietary models, developed either for business reasons or for comparison with the models just mentioned. The second type corresponds to open-source initiatives recently promoted by international public organizations such as The World Bank, the Inter-American Development Bank and the United Nations International Strategy for Disaster Risk Reduction (UNISDR), with the aim of giving developing countries access to probabilistic risk assessment tools built with the same rigor as the proprietary ones but with greater transparency in the calculation process. This is the case of the CAPRA Platform (Cardona et al., 2010; 2012). A few years ago, proprietary and open-source models were seen as competitors, but they are now considered complementary, since methodologies such as model blending, explained in detail in Chapter 5, can make use of the best part of each model (e.g. the hazard module).

Generally speaking, the methodology followed by all Cat-Models is very similar. A hazard (peril) is selected and a set of feasible scenarios is generated for it. Then, after defining an exposure database that captures the minimum relevant characteristics of the elements when subjected to the hazard intensity, vulnerability models are assigned to them to calculate the damage caused by the events.
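The generic Cat-Model workflow just described (stochastic scenarios, exposure database, vulnerability assignment, damage per event) can be sketched as follows. Everything here is hypothetical: the saturating vulnerability curve, its scale parameters, the two assets and the three scenarios are illustrative assumptions, not calibrated models or data from this study. The output is an event loss table of (annual frequency, expected portfolio loss) pairs.

```python
import math

def mean_damage_ratio(sa, s0):
    """Hypothetical vulnerability curve: mean damage ratio rising from 0
    towards 1 with spectral acceleration sa (g); s0 sets the curve's scale."""
    return 1.0 - math.exp(-((sa / s0) ** 2))

# Hypothetical vulnerability models: curve scale parameter per building class.
VULNERABILITY = {"masonry": 0.4, "rc_frame": 0.8}

# Exposure database: replacement value and building class of each asset.
EXPOSURE = {"A": (2.0e6, "masonry"), "B": (5.0e6, "rc_frame")}

# Hazard: stochastic scenarios with an annual frequency and the intensity
# (Sa in g) each scenario produces at every asset's location.
SCENARIOS = [
    (0.01, {"A": 0.10, "B": 0.08}),
    (0.002, {"A": 0.35, "B": 0.30}),
    (0.0004, {"A": 0.70, "B": 0.65}),
]

def event_loss_table(scenarios, exposure, vulnerability):
    """(annual frequency, expected portfolio loss) for each scenario."""
    table = []
    for freq, intensity in scenarios:
        loss = sum(
            value * mean_damage_ratio(intensity[asset], vulnerability[cls])
            for asset, (value, cls) in exposure.items()
        )
        table.append((freq, loss))
    return table

for freq, loss in event_loss_table(SCENARIOS, EXPOSURE, VULNERABILITY):
    print(f"annual frequency {freq}: expected loss {loss:,.0f}")
```

Only mean losses are computed here; a fully probabilistic model would also carry the dispersion of the damage ratio for each asset and event.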

Once the overall potential losses are estimated, the figures can be used in different activities, such as risk transfer/retention through classical insurance/reinsurance schemes or alternative risk transfer instruments (Banks, 2004; Marulanda et al., 2008; Cardona, 2009), or the development of emergency plans, building codes and other activities that make it possible to know the potential consequences, damage and losses before an event occurs and therefore to be prepared for it. This study presents two case studies at different resolution levels in Spain to exemplify the differences in the outcomes.

Cat-Models should be integrated comprehensively into disaster risk management, since they are tools for achieving its first stage, risk identification, which is in turn a key stage for risk transfer schemes. For example, risk transfer is of interest to the grantor and the taker only if its price seems reasonable to both parties (Arrow, 1996); reliable, transparent and high-quality assessments are therefore clearly needed to price it correctly.

The results generated by Cat-Models are of interest to different stakeholders and decision-makers, for example:

- Owners of a considerably large number of elements (e.g. governments).

- National and city governments willing to know the potential losses as well as the capacity of emergency services.

- Insurance and reinsurance companies to define exposure concentration and maximum loss levels.

- Development planners at national level willing to account for the cost of contingent liabilities because of natural disasters.

- Academics involved in the development of methodologies related to any of the stages of probabilistic risk assessments.

Cat-Models differ from other tools available to evaluate the seismic risk of a single structure, since the damage calculation is performed for many assets at the same time and the seismic intensities that damage the portfolio are caused by the same event. This requires specific methodologies to account for those differences, and it leads to different kinds of results.
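A small hypothetical example shows why portfolio losses must be aggregated event by event. Two assets located far apart are each damaged heavily by a different event, and both are slightly damaged by a third, more frequent one. Adding the 1000-year PMLs computed separately for each asset does not give the portfolio PML; the losses have to be summed for the same event first and the exceedance curve computed afterwards. The frequencies, losses and function name are illustrative assumptions.

```python
def pml(event_losses, freqs, return_period):
    """Smallest loss whose annual exceedance rate is at most 1/return_period.
    The loop always returns, because the largest loss has exceedance rate 0."""
    target = 1.0 / return_period
    for candidate in [0.0] + sorted(event_losses):
        rate = sum(f for f, loss in zip(freqs, event_losses) if loss > candidate)
        if rate <= target:
            return candidate

# Hypothetical annual frequencies of three events and the loss each event
# causes to two assets located far apart.
freqs = [0.002, 0.002, 0.03]
asset_losses = {
    "A": [100.0, 0.0, 5.0],  # event 1 is close to asset A
    "B": [0.0, 100.0, 5.0],  # event 2 is close to asset B
}

# Portfolio loss per event: add the losses of all assets for the SAME event.
portfolio = [sum(per_event) for per_event in zip(*asset_losses.values())]

tr = 1000
sum_of_asset_pmls = sum(pml(l, freqs, tr) for l in asset_losses.values())
portfolio_pml = pml(portfolio, freqs, tr)
print("sum of asset PMLs:", sum_of_asset_pmls)
print("portfolio PML:", portfolio_pml)
```

In this particular example the sum of asset PMLs (200) overstates the portfolio PML (100), because no single event damages both assets heavily; with other spatial configurations the difference can go either way.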

Of course, probabilistic tools implicitly account for uncertainties, but interest has recently increased in knowing how some related aspects are considered and what their influence on the final results is. Uncertainties will always exist regardless of the scale of the analysis, as discussed further below, and the presence of uncertainties in a model does not make it wrong or unsuitable as long as they are acknowledged. Because many of the uncertainties in seismic hazard and risk assessment may take a long time to be reduced, today's objective is to be as transparent as possible about them. Such transparency has, for example, helped to avoid always using models that produce the results a stakeholder expects and is comfortable with, regardless of their validity (Calder et al., 2012), and to avoid taking advantage of uncertainty to keep low reserve levels in the case of an insurance company (Bohn and Hall, 1999).

Uncertainties are generally classified into two broad categories: aleatory and epistemic. The first are related to the random nature of an event and it is therefore acknowledged, beforehand, that they cannot be reduced. The second category corresponds to uncertainties associated with an incomplete understanding of the phenomena under study, which can be reduced with a larger set of observations. Although, in theory, epistemic uncertainty is always decreasing (Murphy et al., 2011), aleatory uncertainty can be better identified and estimated, even if not reduced (Woo, 2011). Quantifying uncertainty, although desirable, is a very challenging task; unfortunately, it cannot be calculated by subtracting what one does not know from what one does know (Caers, 2011).

What is uncertain, and to which category it belongs, depends on the context (Der Kiureghian and Ditlevsen, 2009), and deciding which uncertainties are aleatory may even become a philosophical debate. Nevertheless, this is a challenge and a decision to be made by the modeler, and there is no formal rule for making that selection.

Unfortunately, although calculating risk by means of Cat-Models seems a fairly simple task once all the ingredients are ready, it should be borne in mind that modelling risk differs from understanding risk (GFDRR, 2014b). In the first case, results can be obtained in terms of damage, casualties and loss values, which are of course very important, but a real and comprehensive understanding requires a broader, multidisciplinary perspective: risk is socially constructed.

1.3 THE “ACCEPTABLE” RISK

Because of the increasing number of available risk assessments and tools to perform them, for both natural and anthropogenic events, defining what an acceptable risk level is has become a field of study in its own right. Although it is not a new concept (Starr, 1969; Fischhoff, 1994; Cardona, 2001), there is still no formal definition of it.

With the available tools it is possible to quantify damage and losses over a very broad range of return periods; however, what constitutes an acceptable loss has not been (explicitly) stated. The acceptable risk level is a decision taken for the people and not by the people and, therefore, adopting any level has important consequences. Not many people would want someone else to decide this kind of issue for them, but it is clearly not a decision that everyone has the capacity to make (Fischhoff, 1994), and to some extent it has been assigned to experts from different fields because of the general public's lack of understanding of its social and private effects.

Public demand for protection increases with per capita income, and acceptable risk decreases as the number of exposed people increases (Starr, 1969). This leads to a main characteristic of acceptable risk: it is not constant. Several factors are involved, such as how controllable the risk is, the costs of reaching a certain acceptable level and the potential benefits of having reached it (Cardona, 2001).

An acceptable risk level, if established, will define a threshold for deciding what is right and what is wrong (May, 2001); the process of defining it should therefore be transparent. Moreover, the selected level should be flexible over time and subject to periodic evaluations (Fischhoff, 1994) that consider several aspects besides physical damage and losses. Even if the technical side of a risk evaluation plays a fundamental role in defining a possible acceptable risk, it is not the only one (Renn, 1992); other contextual aspects related to social, economic and risk aversion characteristics must also be considered.

Building codes used worldwide have implicitly adopted a certain acceptable risk level, since they include related criteria through the following three objectives:

1. A structure should be able to resist minor earthquakes without damage.

2. A structure should be able to resist moderate earthquakes without significant structural damage, although with some non-structural damage.

3. A structure should be able to resist severe earthquakes, with structural and non-structural damage, without collapsing.

Even if the definitions of earthquake size and damage levels are very subjective, the third objective reflects the philosophy behind most current building codes, which focus on protecting life rather than property and wealth (although, by avoiding collapse, they implicitly protect these as well). It also shows that governments have indirectly made a decision on what an acceptable risk level is.

It is also interesting to note that the performance objectives in building codes vary depending on the characteristics of the elements. While the objectives above apply to standard buildings (AIS, 2010), higher requirements are set for critical facilities and for non-building structures such as water storage tanks (AIS, 2013).

Finally, a question that may arise once an acceptable risk level has been defined is: who should pay the cost of mitigating risk when the actual risk conditions exceed the selected threshold? Should mitigation measures be mandatory or voluntary? (Kunreuther and Kleffner, 1992). The answers can be understood in terms of when to pay for the feasible losses, since two options must be weighed: paying today to avoid future losses that may not occur, or paying later for the damage caused by an earthquake, knowing that accessing funds, if not arranged in advance, is slow and costly. "Later" in this context can mean one hour or more than a hundred years.
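One simple way to frame "paying today" versus "paying later" in expected-value terms is to compare an up-front mitigation cost with the present value of the reduction in average annual loss it achieves. The sketch below is a hypothetical illustration of that benefit-cost framing, not a method proposed in this monograph; the retrofit cost, AAL values, discount rate and horizon are all assumed figures.

```python
def annuity_factor(rate, years):
    """Present value of 1 unit received at the end of each year for `years`
    years, discounted at annual `rate` (> 0)."""
    return (1.0 - (1.0 + rate) ** -years) / rate

def benefit_cost_ratio(cost, aal_before, aal_after, rate, years):
    """Present value of the avoided average annual loss divided by the
    up-front mitigation cost."""
    avoided_per_year = aal_before - aal_after
    return avoided_per_year * annuity_factor(rate, years) / cost

# Hypothetical figures for one building: retrofit cost and AAL before/after.
bcr = benefit_cost_ratio(cost=150_000, aal_before=12_000, aal_after=4_000,
                         rate=0.03, years=50)
print(f"benefit-cost ratio: {bcr:.2f}")
```

A ratio above 1 suggests that mitigation pays off on average, but this expected-value view ignores risk aversion and the cost and delay of accessing funds after an event, which are precisely the aspects raised in the questions above.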

2. PROBABILISTIC SEISMIC HAZARD ASSESSMENT FOR SPAIN

This chapter presents the methodology to assess seismic hazard probabilistically, accounting for a full description of the geometry of the seismogenetic sources, whose seismic activity is characterized using instrumental information. Results at national level for Spain are presented as hazard curves for different spectral ordinates, from which probabilistic seismic hazard maps for several return periods and spectral ordinates can be calculated, in addition to the classical uniform hazard spectra. Because soil response influences the ground motion intensities at the free surface in local seismic risk assessments, the procedure to include that information is presented using, as an example, the seismic microzonation of the urban area of Lorca, Spain.

2.1 INTRODUCTION

The hazard that seismic activity poses to regions, cities and human settlements has created the need to establish parameters that define the hazard level, which in turn has led to the development of different methodologies to estimate them. Seismic hazard levels are not related to social, environmental or economic development and, unfortunately, nothing can currently be done to decrease them.

The parameters that define the hazard level in a seismic hazard model are known as strong ground motion parameters. They define the intensity of the ground motion at the site of analysis and are estimated through equations known as ground motion prediction equations (GMPEs), which depend mainly on the distance from the seismogenetic source to the site of analysis, the magnitude of the event and the focal mechanism of the rupture.

There are different approaches to assess seismic hazard, ranging from deterministic models that use a single-scenario approach considering only realistic scenarios (Krinitzsky, 2002) to the now more commonly used probabilistic seismic hazard assessments (PSHA), which are preferred when the decision to be made, that is, the reason the assessment is being performed, is mainly quantitative.

Moreover, assessing seismic hazard for a single site (or even a single building) is not the same as assessing it for a larger zone; in the first case it is common practice to conduct deterministic assessments, whereas in the second the PSHA approach is preferred. In any case, the two approaches should be understood not as independent but as complementary, since a PSHA must include all the feasible deterministic scenarios and a deterministic assessment must be rational enough to be worth including in a PSHA (McGuire, 2001).

The main objective of a PSHA is to quantify the exceedance rate of different ground motion levels at one or several sites of interest, considering the contribution of all possible earthquakes, which in turn can be generated in different seismogenetic sources. There have been several attempts to classify seismic hazard assessments into categories depending on the selected approach, the information used and their outputs. One of the most consistent and complete categorizations is the one proposed by Muir-Wood (1993), which identifies five categories; according to it, the assessment conducted in this study falls into the seismo-tectonic probabilistic category.

2.2 GROUND MOTION PARAMETERS ESTIMATION

One of the main components of a seismic hazard assessment is the study of the GMPEs that characterize strong ground motion, which describe how its amplitude varies as a function of the magnitude and distance of the event. Some issues related to them are discussed next.

2.2.1 Effects of magnitude and distance

Most of the energy of an earthquake is released in the form of stress waves that propagate through the Earth’s crust. Because magnitude is associated with the energy released in the rupture area, the intensity of those waves is related to the magnitude. The main effects of magnitude are an increase in amplitude, a variation in frequency content and an increase in the duration of shaking.

As the waves travel through rock, they are partially and progressively absorbed by the materials they pass through. As a result, energy per unit volume varies as a function of distance.

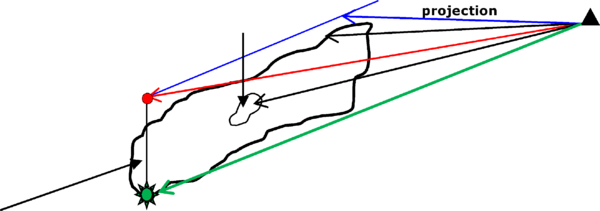

Given that intensity is related to the energy of the waves, it is also related to distance. Many GMPEs relate the intensity, in terms of any strong ground motion parameter, to one of the distances presented in Figure 2.1, each of which characterizes the origin of the vibration in a different manner.

(Adapted from Kramer, 1996)

Distance D1 is the distance from the site to the surface projection of the fault plane, D2 is the distance to the fault surface, D3 is the epicentral distance, D4 is the distance to the zone of the fault surface that released the largest amount of energy (which does not necessarily correspond to the hypocenter) and D5 is the hypocentral distance. Which of these distances is used depends on the parameter to be estimated; for example, D4 is the distance that correlates best with peak ground motion values, given that most of the rupture occurs in that zone.
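The epicentral (D3) and hypocentral (D5) distances are the simplest to obtain from catalogue data. The following Python sketch shows one way to compute them, assuming a spherical Earth of radius 6371 km for the epicentral distance and a flat-Earth approximation when combining it with focal depth; the function names and the coordinates in the example are illustrative only.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (spherical approximation)


def epicentral_distance_km(lat1, lon1, lat2, lon2):
    """Great-circle (haversine) distance between epicenter and site, i.e. D3."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlat = p2 - p1
    dlon = math.radians(lon2 - lon1)
    h = math.sin(dlat / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def hypocentral_distance_km(epicentral_km, depth_km):
    """Straight-line distance to the hypocenter, i.e. D5 (flat-Earth approximation)."""
    return math.hypot(epicentral_km, depth_km)


# Hypothetical epicenter and site, 10 km focal depth
d3 = epicentral_distance_km(37.70, -1.70, 37.60, -1.60)
d5 = hypocentral_distance_km(d3, 10.0)
```

D1, D2 and D4 require the fault geometry, so they cannot be obtained from the epicenter and depth alone.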

2.2.2 Amplitude parameters estimation

The estimation of the amplitude parameters is usually done through regressions performed on historical datasets in areas with good seismic instrumentation. In this section, some of the representative prediction models are presented.

Peak ground acceleration

Peak ground acceleration (PGA) is the parameter most widely used in seismic hazard assessments to represent strong ground motion; because of that, several GMPEs have been proposed for it as a function of distance and of the properties of the transmission medium. As more historical seismic records become available, GMPEs can be refined, which leads to the frequent publication of new and more refined relationships. The level of refinement increases as more advanced processing methods are developed and as more and better strong motion recordings become available.

A large set of GMPEs for PGA has been developed worldwide in the last four decades, given the high relevance of this input in seismic hazard assessments.

Response spectra ordinates

Because of the importance that response spectra have had in earthquake engineering, GMPEs that directly provide intensities for spectral ordinates other than PGA have been developed. This can only be achieved in areas with very good instrumental seismicity records. For example, the GMPE proposed by Ambraseys et al. (2005) allows calculating intensities within the 0.1 s to 2.0 s range, which is considered sufficient for the present study.

Fourier spectra amplitude

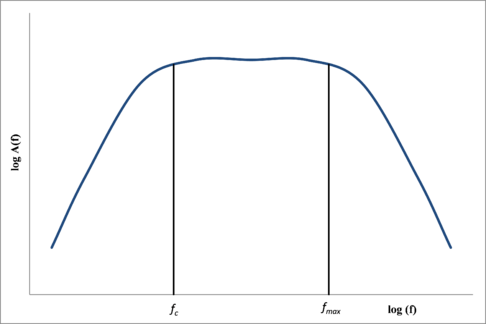

Alternatively, it is possible to calibrate a theoretical model of the physical characteristics of the seismogenetic source, the propagation path and the site response to predict the shape of the Fourier spectrum. Using the solution for instantaneous rupture over a spherical surface in a perfectly elastic body (Brune, 1970), the Fourier amplitudes of distant earthquakes can be estimated with the following relationship (McGuire and Hanks, 1981; Boore, 1983):

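$$|A(f)| = C\,M_o \left[\frac{f^2}{1+\left(f/f_c\right)^2}\right] \left[\frac{1}{\sqrt{1+\left(f/f_{max}\right)^8}}\right] \frac{e^{-\pi f R/\left(Q(f)\,v_s\right)}}{R} \qquad (2.1)$$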

where f is the frequency, R is the distance to the source, fc is the corner frequency, fmax is the maximum frequency, Q(f) is a quality factor, Mo is the seismic moment and C is a constant defined by

$$C = \frac{R_{\theta\Phi}\,F\,V}{4\pi\rho\,v_s^3} \qquad (2.2)$$

where RθΦ is the radiation pattern, F accounts for the free-surface effect, V accounts for the partition of energy into two horizontal components, ρ is the rock density and vs is the shear wave velocity of the rock.
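Equations 2.1 and 2.2 can be evaluated directly. The Python sketch below does so under stated assumptions: the default radiation pattern (0.55), free-surface factor (2.0) and partition factor (1/√2) are commonly used values (Boore, 1983), not values reported in this study; the density and velocity defaults are illustrative; Q(f) is passed as a callable; and the moment helper uses the Hanks and Kanamori (1979) relation log10 M0 = 1.5 Mw + 16.05 (M0 in dyne-cm). The caller must keep units mutually consistent.

```python
import math


def seismic_moment_dyne_cm(mw):
    """Hanks and Kanamori (1979): log10 M0 = 1.5 Mw + 16.05, with M0 in dyne-cm."""
    return 10 ** (1.5 * mw + 16.05)


def fourier_amplitude(f, m0, fc, fmax, r, q, rho=2.8, vs=3.5,
                      rad_pattern=0.55, free_surface=2.0,
                      partition=1 / math.sqrt(2)):
    """Fourier amplitude |A(f)| of Eq. 2.1 with the constant C of Eq. 2.2.

    f, fc and fmax in Hz, r in km, q is a callable returning Q(f).
    Defaults are illustrative assumptions, not values from this study.
    """
    c = rad_pattern * free_surface * partition / (4 * math.pi * rho * vs ** 3)
    source = f ** 2 / (1 + (f / fc) ** 2)                 # Brune omega-squared source term
    high_cut = 1 / math.sqrt(1 + (f / fmax) ** 8)         # fmax high-frequency cut-off
    path = math.exp(-math.pi * f * r / (q(f) * vs)) / r   # anelastic and geometric attenuation
    return c * m0 * source * high_cut * path
```

Well below fc the amplitude grows with f², and above fmax it decays quickly, which is the characteristic shape the model is meant to reproduce.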

Duration

The duration of ground motion increases with the magnitude of the event, and its variation with distance depends on how the parameter is defined. Durations based on absolute acceleration amplitudes, such as the bracketed duration defined by an acceleration threshold, tend to decrease as distance increases because absolute accelerations decrease in the same way. Durations based on relative accelerations increase with distance, leading to very long durations even when amplitudes are very small.

2.3 PROBABILISTIC SEISMIC HAZARD ASSESSMENT FOR SPAIN

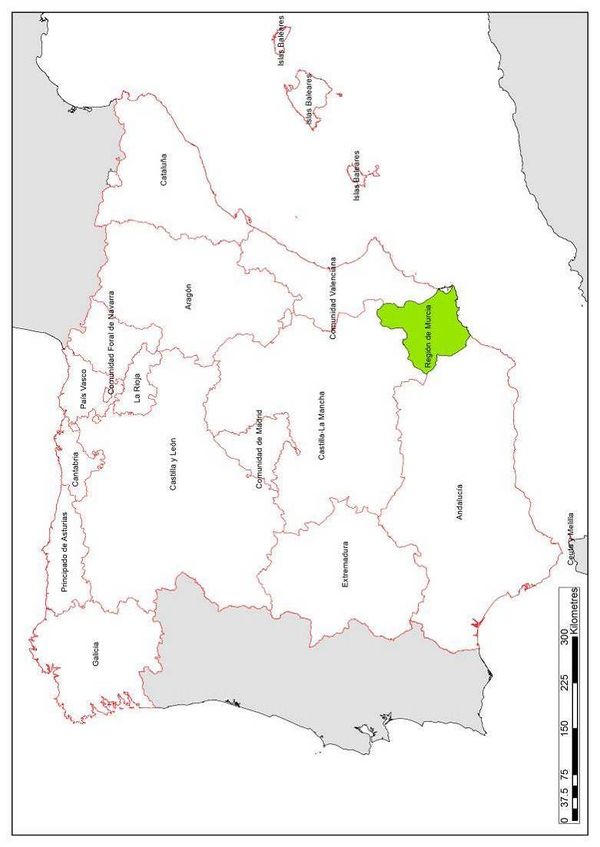

A probabilistic, spectral seismic hazard assessment at national level for Spain, using an area geometrical model based on the most recent available information, is presented. From the seismo-tectonic setting of the region, previous studies and the seismicity recorded in the available earthquake catalogues, a set of seismogenetic sources was defined covering the whole Iberian Peninsula, including Portugal, as well as zones in northern Africa, given that events occurring in those areas contribute to the total seismic hazard level.

2.3.1 Seismo-tectonic setting in Spain

The Iberian Peninsula is located in the convergence zone between the African and Eurasian tectonic plates, which conditions its seismicity. To the west, the boundary between both plates lies in the Azores-Gibraltar fracture zone (IGN and UPM, 2013), and from there four different geodynamic sectors can be identified (Buforn et al., 1988; De Vicente et al., 2004; 2008). Of special interest is the zone close to the contact between Iberia and northern Africa, which acts as a continental convergence zone and concentrates most of the seismic activity of the region. This varied tectonic setting produces different types of faults across the region: for example, reverse faults in the Gulf of Cádiz and northern Africa, strike-slip faults in northern Africa, mainly in Morocco, and normal faults in southern Spain (Buforn and Udías, 2007).

Most of the historically recorded events have occurred at depths of less than 60 kilometers, although in some specific zones in the south earthquakes have occurred at depths of up to 200 kilometers. The shallower events are of greater interest because they have a considerably higher potential to cause catastrophic events with significant damage and losses to infrastructure.

2.3.2 Selected seismogenetic sources

For this study, a compilation of different existing tectonic zonations for the analysis area was made, such as those proposed by Benito and Gaspar-Escribano (2007), Buforn et al. (2004), García-Mayordomo (2005), García-Mayordomo et al. (2007), Grünthal et al. (1999), Jiménez et al. (2001), Jiménez and García-Hernández (1999), Vilanova and Fonseca (2007) and IGN (2013a). In all cases, it was verified that the seismogenetic sources had been identified at national level.

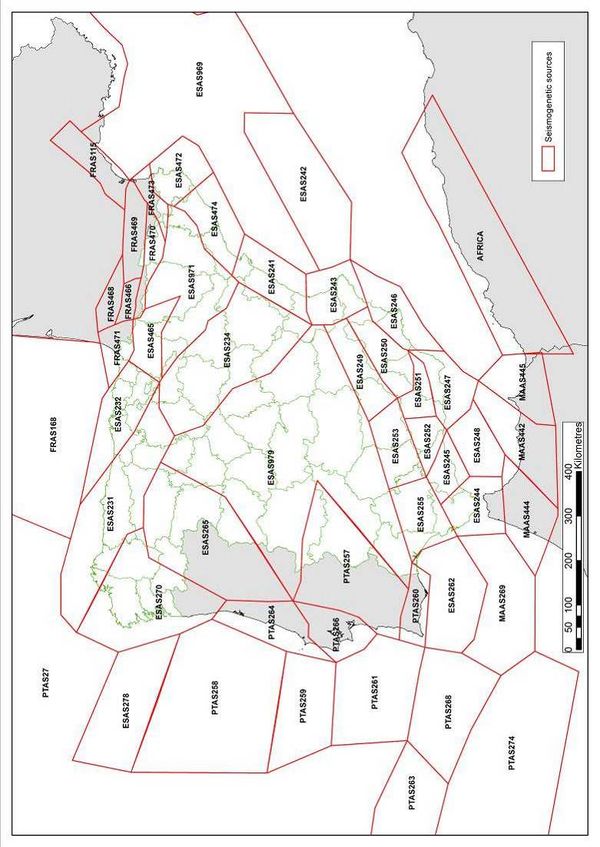

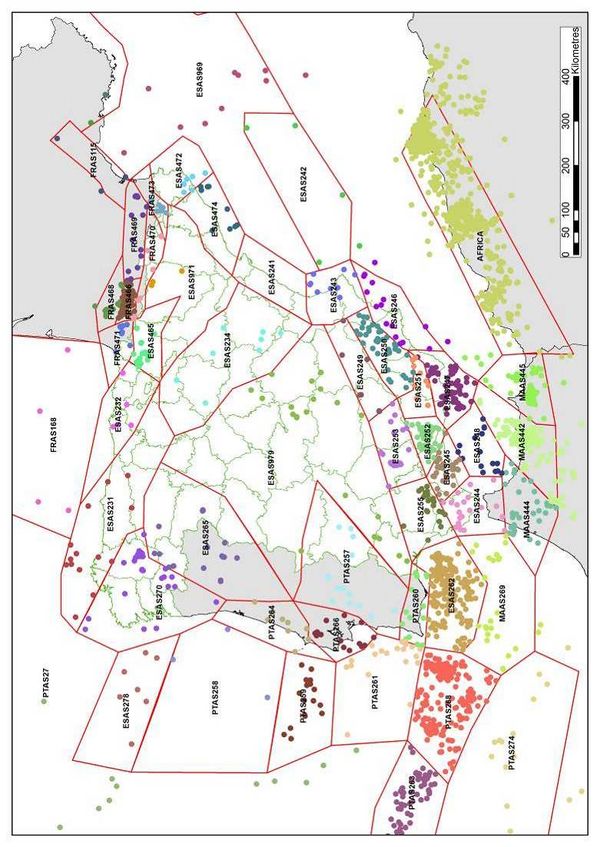

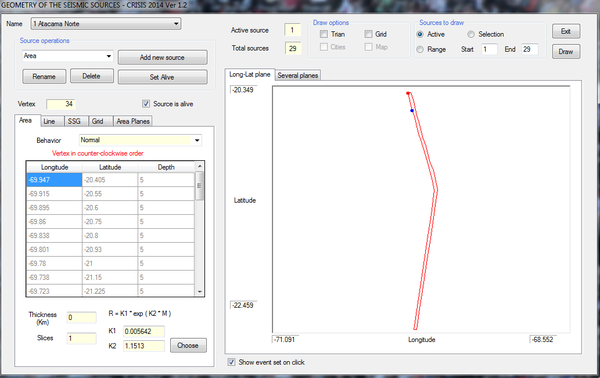

There are numerous similarities in the general procedure followed to define the seismic regions and seismogenetic sources for Spain in recent national and local seismic hazard studies. However, in the framework of the SHARE (Seismic Hazard Harmonization in Europe) project (GRCG, 2010), specialists not only from Spain but also from neighboring countries such as France and Portugal participated with the aim of appropriately considering the seismogenetic sources in border areas. They developed the tectonic zonation used in this work, which can be considered complete and detailed enough for the purpose of this assessment. Additionally, based on the references mentioned above, a seismogenetic source has been included in northern Africa to account for the large number of historical events recorded at that location.

With that information, 52 seismogenetic sources associated with shallow (0-60 km) seismicity were defined. For practical purposes, it is assumed that events occurring at depths greater than 60 km do not contribute to the seismic hazard levels and do not generate relevant strong ground motion intensities that may damage buildings and infrastructure.

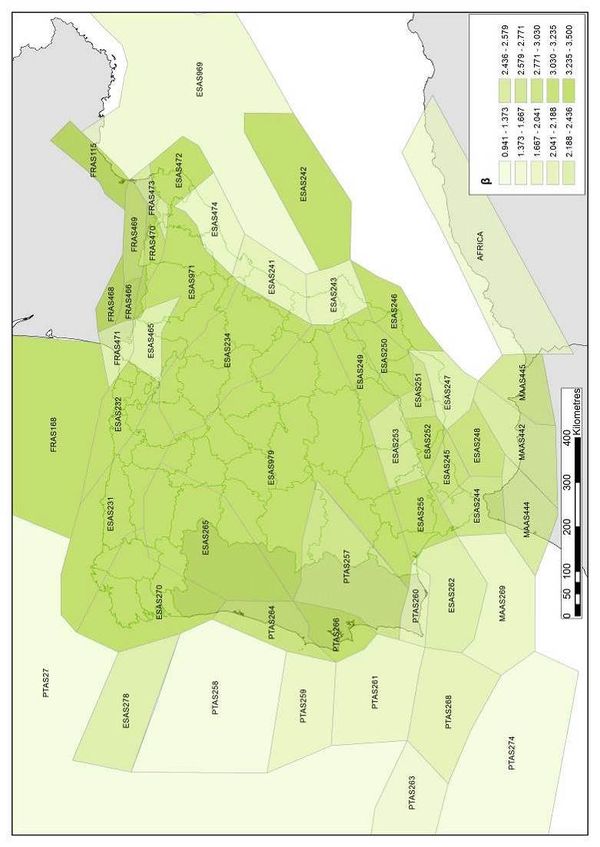

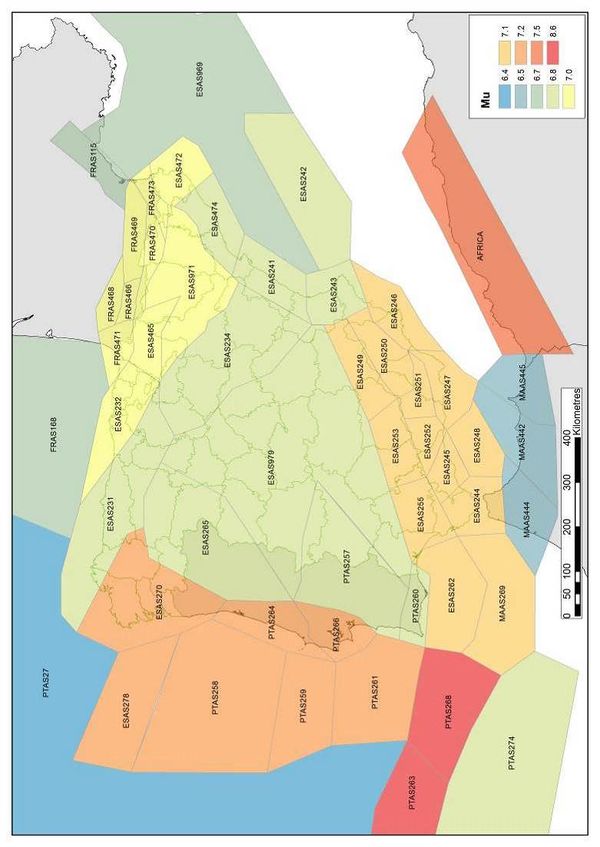

Figure 2.3 shows the geographical location of the considered seismogenetic sources using exactly the same notation assigned in the framework of the SHARE project (GRCG, 2010), with the exception of the additional seismogenetic source located in northern Africa, which has been called “Africa”.

2.3.3 Selection of the analysis model

PSHA has been selected as the general calculation methodology because it allows the definition of earthquake scenarios with associated frequencies of occurrence and an appropriate and comprehensive treatment of the inherent uncertainties in the analysis. The geometrical model used in this study to define the seismogenetic sources is an area model, in which each source is modelled as a planar area within which the probability of occurrence of an earthquake of a given magnitude is assumed to be uniform. This allows the event generation process to be characterized from the calculated and assigned seismicity parameters.

It is important to highlight that the PSHA aims to estimate ground motion intensities at bedrock level together with their associated frequencies of occurrence; the strong ground motion parameters, and their units, are determined by the selected GMPEs.

Methodology

The PSHA methodology allows a comprehensive treatment of the different uncertainties inherent in the calculation process, such as those associated with the definition of the seismogenetic sources, their geometry, the estimation of the seismicity parameters (mainly the maximum magnitude) and the attenuation patterns of seismic waves.

When seismic hazard is assessed probabilistically, results are expressed in terms of the intensity exceedance rate at any site of interest, from which the probability of exceeding a certain intensity value during a given timeframe can be derived (e.g., 7% exceedance probability in 75 years). Although both results present the same information, it is worth highlighting that, when results are expressed as exceedance rates, there is no need to define an arbitrarily selected timeframe to contextualize them. In this study, results are presented in terms of exceedance rates.

The intensity measures and units for which the seismic hazard assessment is performed are those selected for the analysis, for example several spectral ordinates, and they are directly related to the selected GMPEs and their range of applicability.

Once the seismicity and attenuation patterns of all seismogenetic sources are known, seismic hazard can be calculated by summing the effects of all of them, considering the distance between each seismogenetic source and the point of interest. Seismic hazard, expressed in terms of the intensity exceedance rate, ν(a), is calculated as follows (Ordaz et al., 1997; Ordaz, 2000):

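$$\nu(a) = \sum_{i=1}^{N} \int_{M_0}^{M_{Ui}} -\frac{d\lambda_i(M)}{dM}\,\Pr(A>a \mid M, R_i)\,dM \qquad (2.3)$$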

where the sum extends over all N seismogenetic sources and Pr(A>a|M, Ri) is the probability that the intensity exceeds a value a, given the magnitude M of the event and the distance Ri between the source and the site of interest. The λi(M) functions are the activity rates of the seismogenetic sources. The integral is performed from the threshold magnitude M0 to the maximum magnitude MU of each source, which indicates that, for each seismogenetic source, the contribution of all magnitudes is accounted for.

It is worth noting that the previous equation would be exact if the seismogenetic sources were points. In reality, they are volumes, so epicenters do not occur only at the center of the sources but, with equal spatial probability, at any point within the corresponding volume. In the area geometrical model this is accounted for by subdividing the sources into triangles, with the seismicity of each triangle assumed to be concentrated at its centroid. The subdivision is performed recursively until the triangles are small enough to guarantee the precision of the integration in Equation 2.3.
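The recursive subdivision can be sketched as follows. This is a minimal illustration, assuming that each source polygon has already been split into triangles in planar coordinates (km) and using a hypothetical stopping rule (longest edge below a given length); the criteria actually used by CRISIS 2014 may differ. Each sub-triangle receives a share of the source rate proportional to its area, so the seismicity per unit area is preserved.

```python
import math


def _area(a, b, c):
    """Area of triangle abc in planar coordinates (km^2)."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2


def _midpoint(p, q):
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def subdivide(tri, max_edge_km):
    """Recursively split a triangle into four (midpoint subdivision) until every
    edge is at most max_edge_km; return a list of (centroid, area) pairs."""
    a, b, c = tri
    if max(math.dist(a, b), math.dist(b, c), math.dist(c, a)) <= max_edge_km:
        centroid = ((a[0] + b[0] + c[0]) / 3, (a[1] + b[1] + c[1]) / 3)
        return [(centroid, _area(a, b, c))]
    ab, bc, ca = _midpoint(a, b), _midpoint(b, c), _midpoint(c, a)
    pieces = []
    for child in ((a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)):
        pieces.extend(subdivide(child, max_edge_km))
    return pieces


def point_sources(tri, source_rate, max_edge_km):
    """Concentrate the seismicity of each sub-triangle at its centroid, with a
    rate proportional to its area (constant seismicity per unit area)."""
    pieces = subdivide(tri, max_edge_km)
    total_area = sum(area for _, area in pieces)
    return [(centroid, source_rate * area / total_area) for centroid, area in pieces]
```

Halving the edge length quadruples the number of point sources, so in practice the stopping size is a trade-off between integration accuracy and computing time.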

Since, once magnitude and distance are known, the intensity is assumed to follow a lognormal distribution, the probability Pr(A>a|M, Ri) is calculated with the following expression (Ordaz, 2000):

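$$\Pr(A>a \mid M, R_i) = \Phi\left[\frac{1}{\sigma_{\ln a}}\,\ln\frac{\mathrm{MED}(A \mid M, R_i)}{a}\right] \qquad (2.4)$$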

where Φ(·) is the standard normal cumulative distribution function, MED(A|M, Ri) is the median of the intensity given by the associated GMPE for known magnitude and distance, and σLna is the standard deviation of the natural logarithm of the intensity. It is worth noting that the median is not the same as the mean, even though they coincide on a logarithmic scale: since GMPEs provide the mean of the logarithm of the intensity, converting it back to absolute values yields the median of the intensity, not its mean.
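To make Equations 2.3 and 2.4 concrete, the Python sketch below computes an exceedance rate for point sources, using the truncated Gutenberg-Richter rate of Equation 2.8 and midpoint integration over magnitude. The attenuation law `toy_median`, its coefficients and the default σ are hypothetical placeholders, not the Ambraseys et al. (2005) GMPE used in this study, and the spatial integration over the source area is omitted.

```python
import math


def gr_rate_density(m, lambda0, beta, m0, mu):
    """-d(lambda)/dM for the truncated Gutenberg-Richter rate of Eq. 2.8."""
    norm = math.exp(-beta * m0) - math.exp(-beta * mu)
    return lambda0 * beta * math.exp(-beta * m) / norm


def prob_exceed(a, median, sigma_ln):
    """Eq. 2.4: P(A > a | M, R) for a lognormally distributed intensity."""
    z = math.log(median / a) / sigma_ln
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))  # standard normal CDF


def toy_median(m, r_km):
    """HYPOTHETICAL attenuation law (not a published GMPE); intensity in g."""
    return math.exp(-4.0 + 1.0 * m - 1.2 * math.log(math.hypot(r_km, 10.0)))


def exceedance_rate(a, sources, sigma_ln=0.6, n_steps=400):
    """Eq. 2.3 by midpoint integration over magnitude. Each source is a point
    given as (lambda0, beta, m0, mu, distance_km)."""
    nu = 0.0
    for lambda0, beta, m0, mu, r in sources:
        dm = (mu - m0) / n_steps
        for k in range(n_steps):
            m = m0 + (k + 0.5) * dm
            rate = gr_rate_density(m, lambda0, beta, m0, mu)
            nu += rate * prob_exceed(a, toy_median(m, r), sigma_ln) * dm
    return nu
```

A quick sanity check: for a very small intensity value every event exceeds it, so ν(a) tends to the λ0 of the source, and ν(a) decreases as a grows, giving the usual shape of a hazard curve.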

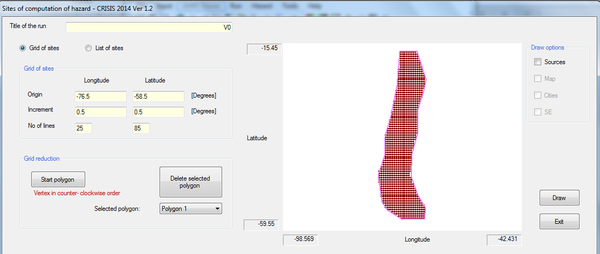

The maximum integration distance has been set to 300 km for this study; this means that, for each node of the grid, only sources (or parts of them) located within that distance are considered in the seismic hazard assessment.

The return period of an intensity is the inverse of its exceedance rate and can be defined as:

$$T_r(a) = \frac{1}{\nu(a)} \qquad (2.5)$$

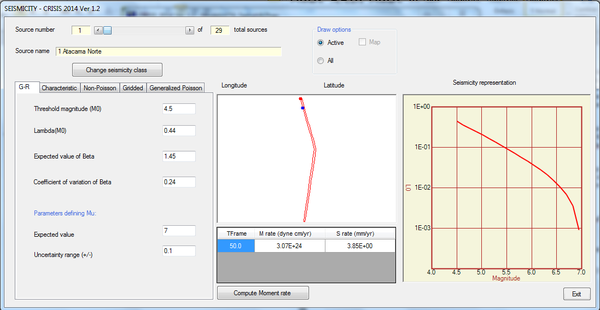

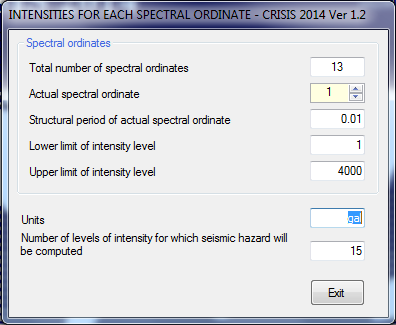

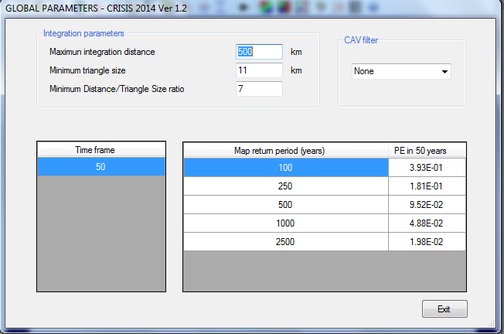

The seismic hazard assessment has been performed using the program CRISIS 2014 V1.2 (Ordaz et al., 2014), which provides seismic hazard results in the metrics and representation required for this analysis. Additionally, given that the results of the PSHA were to be used later in a probabilistic seismic risk assessment, it was necessary to obtain a set of stochastic scenarios that accounts for all feasible events within the analysis area, each characterized by its location, magnitude and annual frequency of occurrence. This methodology has been employed in Colombia to define the official seismic hazard maps included in the earthquake-resistant building codes (Salgado-Gálvez et al., 2011).

The stochastic set has been stored in *.AME format, which is a collection of intensity grids, containing both expected values and dispersion, that can also include several spectral ordinates. All grids are geocoded and have an associated annual frequency of occurrence. In total, 32 spectral ordinates were considered in terms of spectral acceleration for 5% of critical damping.
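Once the stochastic set is available, the exceedance rate at any grid node can be recovered by summing the contribution of every scenario, weighted by its annual frequency. The sketch below assumes a hypothetical in-memory representation (the `Scenario` class is illustrative and does not reproduce the *.AME file layout) together with the lognormal assumption of Equation 2.4.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Scenario:
    """HYPOTHETICAL stand-in for one event of a stochastic set: its annual
    frequency and, per spectral ordinate (period in s), the median intensity
    and the sigma of ln(intensity) at each grid node."""
    annual_frequency: float
    median: dict[float, list[float]]
    sigma_ln: dict[float, list[float]]


def node_exceedance_rate(scenarios, period, node, a):
    """nu(a) at one node: sum over scenarios of annual frequency x P(A > a)."""
    nu = 0.0
    for s in scenarios:
        z = math.log(s.median[period][node] / a) / s.sigma_ln[period][node]
        nu += s.annual_frequency * 0.5 * (1 + math.erf(z / math.sqrt(2)))
    return nu
```

Storing median and dispersion per scenario, rather than a precomputed hazard curve, is what allows the same set to be reused later in a risk assessment.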

2.3.4 Historical earthquakes' catalogue

Some of the seismicity parameters required to characterize the activity of the seismogenetic sources can be calculated by statistical methods from historical records. For this study, the catalogue of the National Geographic Institute (Instituto Geográfico Nacional, IGN) (IGN, 2013b) was used because, in the Spanish context, it is the most reliable one. Additionally, it was complemented with the instrumental earthquake catalogue developed by the International Seismological Centre in the framework of the Global Earthquake Model initiative (Storchak et al., 2013); only events with magnitudes equal to or greater than 5.5 were taken from it. Events without reported depth and/or magnitude were then removed from the assembled catalogue. Also, magnitudes were homogenized to moment magnitude (MW) following the recommendations of IGN and UPM (2013), since they are usually reported in different scales such as body wave (Mb) and surface wave (Ms) magnitude, among others.

The instrumental earthquake query was performed for the area bounded by latitudes 26°N and 45°N and longitudes 11.9°W and 5.9°E. The initial catalogue had 87,686 events; after removing events without reported depth or magnitude, as well as events with magnitudes lower than 3.5 or depths greater than 60 km, 3,643 events were left.
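The filtering steps described above can be sketched as follows, assuming that each event is a dictionary with hypothetical keys (`lat`, `lon`, `depth`, `mw`, with `None` for missing values) and that magnitudes have already been homogenized to MW. The query box and thresholds are the ones stated in the text.

```python
def filter_catalogue(events, lat_min=26.0, lat_max=45.0, lon_min=-11.9,
                     lon_max=5.9, m_min=3.5, depth_max_km=60.0):
    """Keep events inside the query box that report both depth and magnitude,
    with magnitude >= m_min and depth <= depth_max_km."""
    kept = []
    for e in events:
        # Discard events without reported depth or magnitude
        if e.get("depth") is None or e.get("mw") is None:
            continue
        # Discard events outside the query box
        if not (lat_min <= e["lat"] <= lat_max and lon_min <= e["lon"] <= lon_max):
            continue
        # Keep shallow events at or above the threshold magnitude
        if e["mw"] >= m_min and e["depth"] <= depth_max_km:
            kept.append(e)
    return kept
```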

Because a seismicity model that follows a Poisson process has been selected, one of its assumptions is that events are independent. For this reason, foreshocks and aftershocks were removed using a methodology similar to the one proposed by Gardner and Knopoff (1974). After this process, the catalogue contains a total of 2,629 events.
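A window-based declustering in the spirit of Gardner and Knopoff (1974) can be sketched as below. The text does not report the exact windows used in this study; the space and time windows here are a widely used analytical fit to the original Gardner and Knopoff table and should be read as an assumption, as should the event tuple layout `(time_days, lat, lon, mw)`.

```python
import math


def _dist_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km (spherical Earth, R = 6371 km)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    h = (math.sin((p2 - p1) / 2) ** 2
         + math.cos(p1) * math.cos(p2) * math.sin(math.radians(lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def gk_window(mw):
    """Space (km) and time (days) windows: an assumed analytical fit to the
    Gardner and Knopoff (1974) table."""
    dist = 10 ** (0.1238 * mw + 0.983)
    days = 10 ** (0.032 * mw + 2.7389) if mw >= 6.5 else 10 ** (0.5409 * mw - 0.547)
    return dist, days


def decluster(events):
    """Process events from largest to smallest magnitude; smaller events inside
    a larger event's window (before or after it) are flagged as fore- or
    aftershocks. Returns the remaining (independent) events."""
    order = sorted(range(len(events)), key=lambda i: -events[i][3])
    removed = set()
    for i in order:
        if i in removed:
            continue
        t0, lat0, lon0, m0 = events[i]
        d_win, t_win = gk_window(m0)
        for j, (t, lat, lon, m) in enumerate(events):
            if j == i or j in removed or m > m0:
                continue
            if abs(t - t0) <= t_win and _dist_km(lat0, lon0, lat, lon) <= d_win:
                removed.add(j)
    return [e for k, e in enumerate(events) if k not in removed]
```

For an MW 6.0 event these windows are roughly 53 km and 500 days, which gives an idea of how aggressively a large event removes its neighbours.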

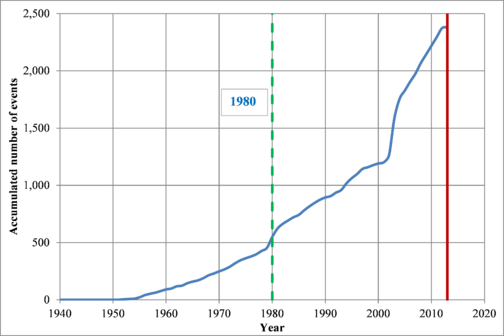

Finally, completeness is verified for the selected threshold magnitude M0 (3.5 in this case) to define the timeframe used in the estimation of the λ0 and β parameters of the seismogenetic sources. This procedure is required because historical earthquake catalogues are incomplete for small and moderate magnitudes in pre-instrumental periods. Following the recommendations of Tinti and Mulargia (1985), the cumulative number of events with magnitude equal to or greater than M0 is plotted over time to identify the part of the plot from which the recorded seismic activity rate is constant. That point indicates the starting (cut-off) year from which the catalogue can be considered complete. Figure 2.4 shows this estimation, from which it can be seen that the catalogue is complete for the selected M0 from 1980 onwards.
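The completeness check is graphical, but a simple numerical stand-in can help reproduce it. The sketch below is a hypothetical heuristic, not the Tinti and Mulargia (1985) procedure itself: it takes the mean annual rate of the most recent years as a reference and extends the window backwards while the mean rate stays within a tolerance of that reference. The reference window length and the tolerance are arbitrary illustrative choices.

```python
from collections import Counter


def cumulative_counts(event_years, first_year, last_year):
    """Cumulative number of events (M >= M0) per year, the curve inspected visually."""
    counts = Counter(event_years)
    total, out = 0, []
    for y in range(first_year, last_year + 1):
        total += counts.get(y, 0)
        out.append((y, total))
    return out


def completeness_year(event_years, first_year, last_year, ref_years=10, tol=0.98):
    """HYPOTHETICAL heuristic: extend a recent reference window backwards while
    the mean annual rate from that year to last_year stays >= tol * reference."""
    counts = Counter(event_years)

    def mean_rate(y0):
        n = sum(counts.get(y, 0) for y in range(y0, last_year + 1))
        return n / (last_year - y0 + 1)

    start = last_year - ref_years + 1
    ref = mean_rate(start)
    y = start - 1
    while y >= first_year and mean_rate(y) >= tol * ref:
        start = y
        y -= 1
    return start
```

With real catalogues the annual counts fluctuate, so the result of such a heuristic should still be checked against the cumulative plot.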

A total of 2,629 events are included in the final catalogue used in the subsequent stages of the analysis.

2.3.5 Assignment of earthquakes to the considered seismogenetic sources

Once the instrumental earthquake catalogue to be used in the analysis has been defined, the events need to be assigned to one of the considered seismogenetic sources according to their geographical and depth parameters. If an event lies outside the boundaries of the modelled seismogenetic sources, it is assigned to the closest source. In this study, no background sources have been considered.
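The assignment rule (the source containing the epicenter or, failing that, the closest one) can be sketched with a ray-casting point-in-polygon test and a distance-to-boundary fallback. The sketch assumes source polygons given in projected planar coordinates (km) and that the depth filter has already been applied; the source names in the check are hypothetical.

```python
import math


def point_in_polygon(x, y, poly):
    """Ray-casting test; poly is a list of (x, y) vertices in order."""
    inside = False
    n = len(poly)
    for k in range(n):
        x1, y1 = poly[k]
        x2, y2 = poly[(k + 1) % n]
        if (y1 > y) != (y2 > y):  # edge crosses the horizontal line through the point
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def _dist_to_segment(px, py, ax, ay, bx, by):
    """Shortest distance from point P to segment AB."""
    dx, dy = bx - ax, by - ay
    seg2 = dx * dx + dy * dy
    t = 0.0 if seg2 == 0 else max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def assign_source(x, y, sources):
    """Name of the source containing (x, y) or, otherwise, the source whose
    boundary is closest. sources maps name -> polygon."""
    for name, poly in sources.items():
        if point_in_polygon(x, y, poly):
            return name

    def boundary_distance(poly):
        return min(_dist_to_segment(x, y, *poly[k], *poly[(k + 1) % len(poly)])
                   for k in range(len(poly)))

    return min(sources, key=lambda name: boundary_distance(sources[name]))
```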

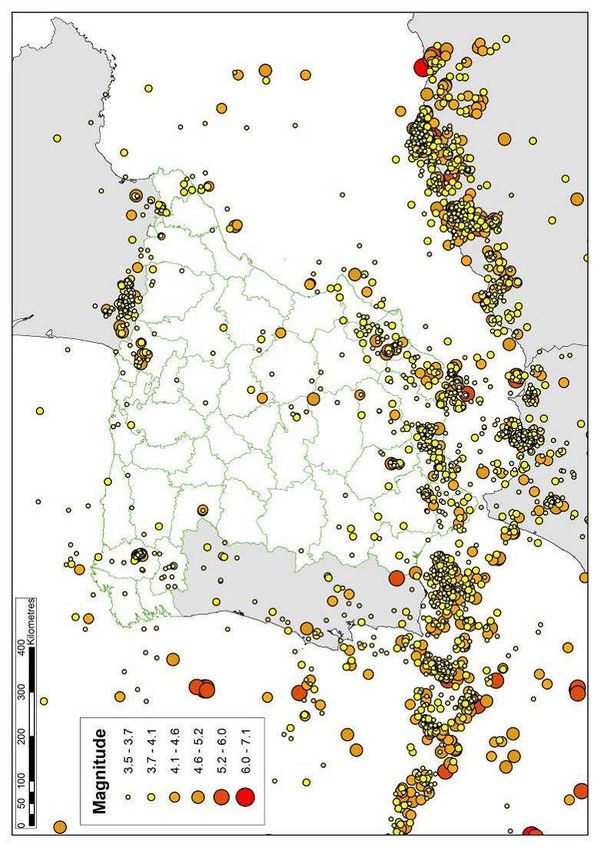

Figure 2.5 shows the location and magnitude of the considered events, while Figure 2.6 shows the events assigned to each seismogenetic source. The latter figure makes it evident that source ESAS241 does not have enough earthquake records to properly calculate its seismicity parameters. In that case, the seismicity per unit area and the β value of the neighboring source (ESAS474) are assigned.

2.3.6 Seismicity parameters of the seismogenetic sources

Although seismic hazard can be calculated using different approaches, such as Markov, semi-Markov and renewal models (Anagnos and Kiremidjian, 1988), in PSHA it is common practice to assume that seismic activity follows a Poisson process, so the probability of exceeding the intensity parameter a at least once within a timeframe t can be related to the annual occurrence frequency, generally denoted by the parameter λ. Accordingly, the probability of an exceedance of the intensity parameter a within an arbitrarily selected timeframe t can be calculated as follows:

$$P(A>a,\ t) = 1 - e^{-\lambda t} \qquad (2.6)$$

Assuming that the exponent λt in Equation 2.6 is small enough, the equation can be simplified to:

$$P(A>a,\ t) \approx \lambda t \qquad (2.7)$$

With this, Poisson seismicity models have the following main characteristics:

- The sequence of events has no memory: the future occurrence of an event is independent of whether a previous event has occurred.

- Events occur randomly in the time, space and magnitude domains.

- To use this approach in PSHA, foreshocks and aftershocks must be removed from the earthquake catalogue.

- For practical purposes, the relationship is truncated at a threshold magnitude and a maximum magnitude (M0 and MU). The latter has an associated degree of uncertainty.
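Equations 2.5 to 2.7 can be checked numerically. The following sketch converts between exceedance rate, exceedance probability and return period, using the 7% in 75 years example mentioned earlier in this section.

```python
import math


def prob_exceedance(rate, years):
    """Eq. 2.6: probability of at least one exceedance in `years` years."""
    return 1.0 - math.exp(-rate * years)


def rate_from_prob(prob, years):
    """Inverse of Eq. 2.6: annual exceedance rate from a probability in `years`."""
    return -math.log(1.0 - prob) / years


def return_period(rate):
    """Eq. 2.5: return period as the inverse of the exceedance rate."""
    return 1.0 / rate


nu = rate_from_prob(0.07, 75)  # 7% exceedance probability in 75 years
tr = return_period(nu)         # about 1033 years
approx = nu * 75               # Eq. 2.7 small-exponent approximation
```

For this example the exact relationship gives a return period of about 1033 years, while the approximation of Equation 2.7 returns about 0.073 instead of 0.07, which illustrates why it is only valid for small values of λt.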

For this analysis, a local Poisson seismicity model has been selected, in which the activity of the ith seismogenetic source is specified by its magnitude exceedance rate, λi(M), a continuous function of magnitude. The magnitude exceedance rate describes how frequently earthquakes with magnitude higher than a given value occur. The λi(M) function is a modified version of the Gutenberg-Richter relationship (1944), and seismicity is thus described by the following equation, using a procedure like the one proposed by Esteva (1967) and Cornell and Vanmarcke (1969):

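$$\lambda_i(M) = \lambda_{0i}\,\frac{e^{-\beta_i M} - e^{-\beta_i M_{Ui}}}{e^{-\beta_i M_0} - e^{-\beta_i M_{Ui}}}, \qquad M_0 \le M \le M_{Ui} \qquad (2.8)$$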

where M0 is the selected threshold magnitude and λ0i, βi and MUi are the seismicity parameters that define the magnitude exceedance rate of each seismogenetic source. These parameters are unique for each source; as mentioned above, the first two are estimated by means of statistical procedures, while for the last one specialized studies combined with expert opinion are usually employed.

Consistently with Equation 2.8, the probability density function of the magnitudes generated by each source is as follows:

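$$p_i(M) = \frac{\beta_i\,e^{-\beta_i M}}{e^{-\beta_i M_0} - e^{-\beta_i M_{Ui}}}, \qquad M_0 \le M \le M_{Ui} \qquad (2.9)$$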

Therefore, the activity of each seismogenetic source is characterized by a set of parameters based on the available information. Those parameters are:

- Earthquake recurrence rate for magnitudes higher than the selected threshold (λ0): the average number of events per year with magnitude higher than the threshold magnitude (3.5 in this case) occurring in a given source.

- β value: the slope of the initial part of the logarithmic regression of the magnitude recurrence plot, expressed in natural logarithms (β = b ln 10, where b is the Gutenberg-Richter b-value). It accounts for the ratio between large and small events in each source.

- Maximum magnitude (MU): the maximum magnitude of the events that can occur within the considered source.
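Equations 2.8 and 2.9 can be implemented as follows. The inverse-CDF sampler is an illustrative choice for generating magnitudes consistent with Equation 2.9, not necessarily how CRISIS 2014 discretizes magnitudes. In the check, λ0 = 82.15 and β = 2.01 are the whole-catalogue values of Table 2.1, while MU = 7.0 is a hypothetical value.

```python
import math
import random


def magnitude_exceedance_rate(m, lambda0, beta, m0, mu):
    """Eq. 2.8: truncated Gutenberg-Richter exceedance rate for m0 <= m <= mu."""
    num = math.exp(-beta * m) - math.exp(-beta * mu)
    den = math.exp(-beta * m0) - math.exp(-beta * mu)
    return lambda0 * num / den


def sample_magnitude(beta, m0, mu, rng):
    """Draw one magnitude from the density of Eq. 2.9 by inverting its CDF,
    F(M) = (1 - exp(-beta (M - m0))) / (1 - exp(-beta (mu - m0)))."""
    u = rng.random()
    return m0 - math.log(1.0 - u * (1.0 - math.exp(-beta * (mu - m0)))) / beta
```

The fraction of sampled magnitudes above a given value should match λ(M)/λ0, which is a convenient consistency check between both equations.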

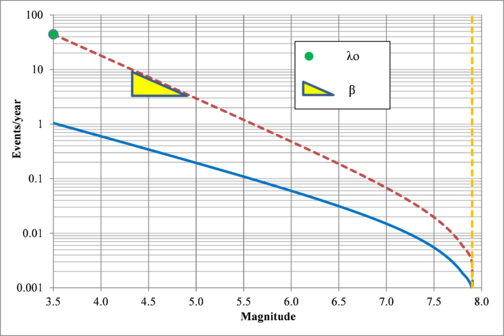

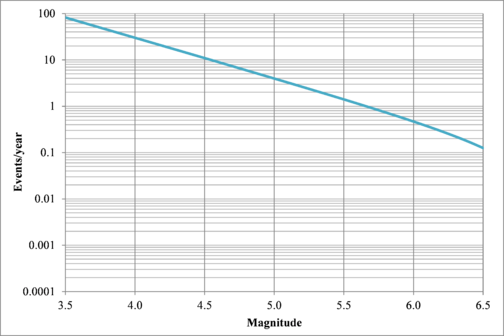

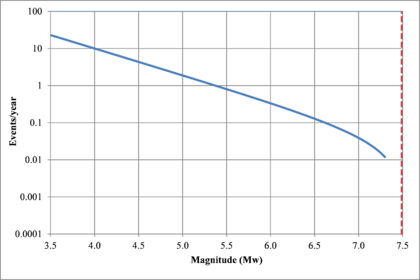

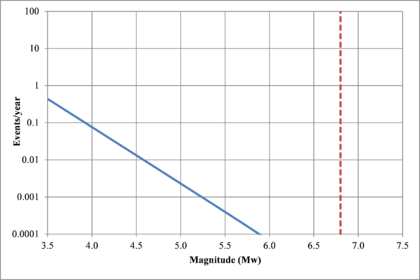

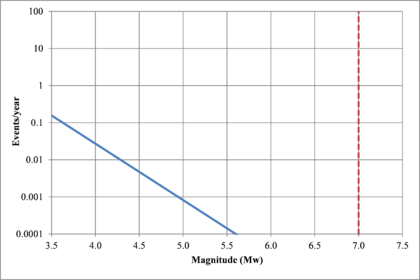

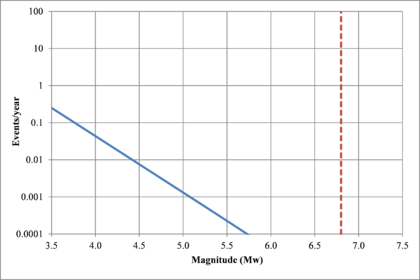

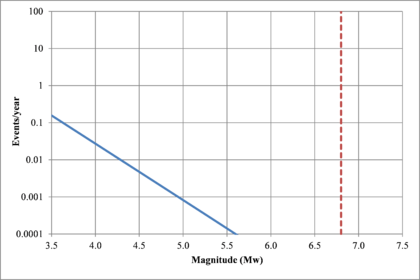

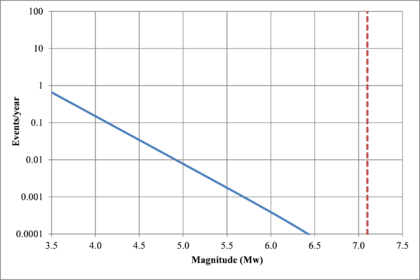

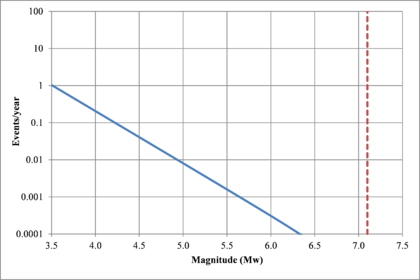

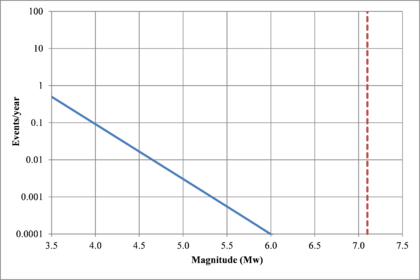

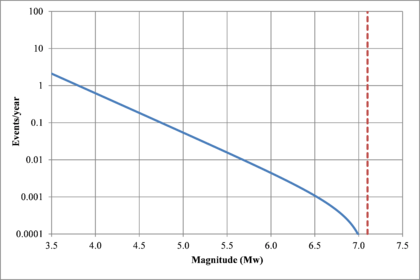

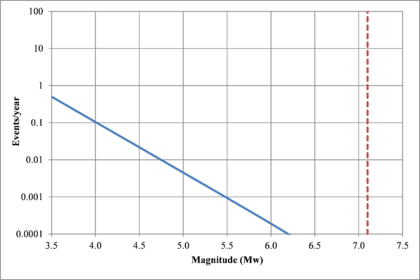

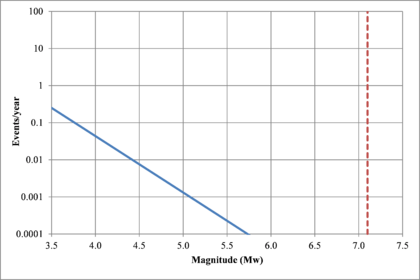

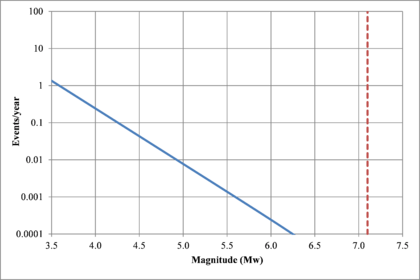

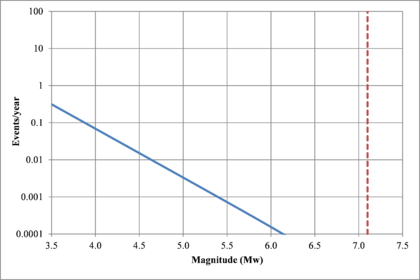

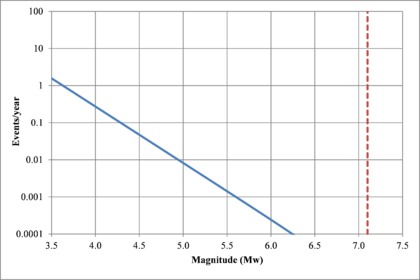

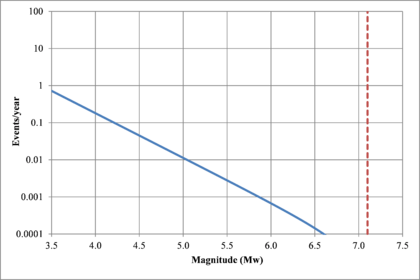

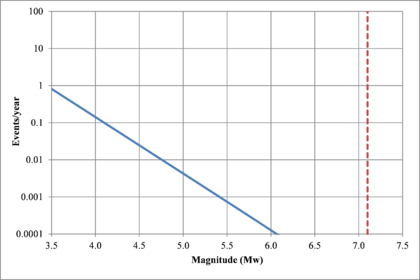

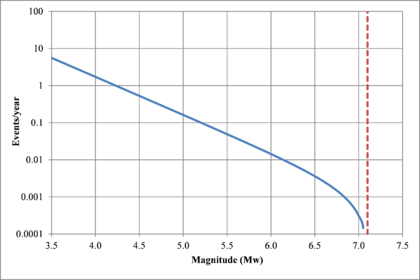

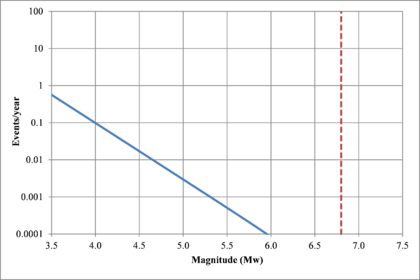

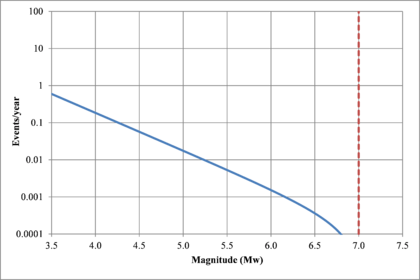

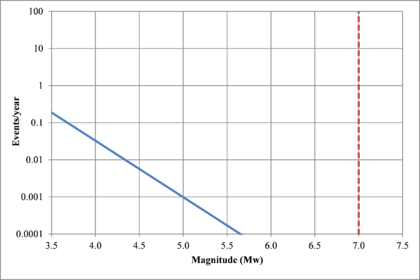

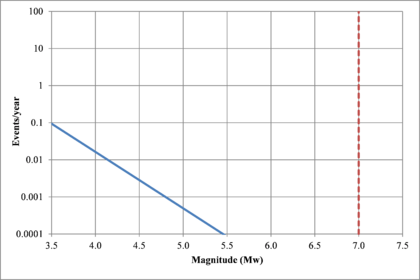

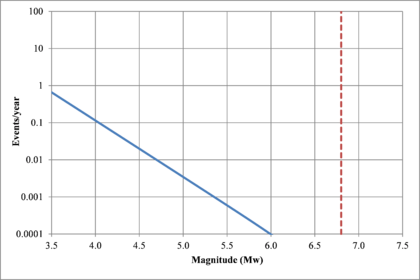

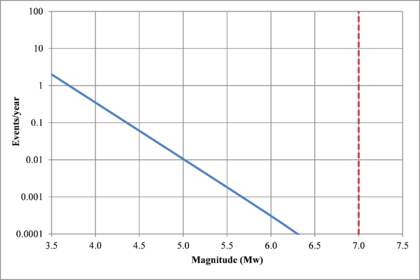

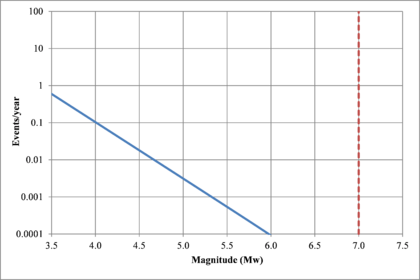

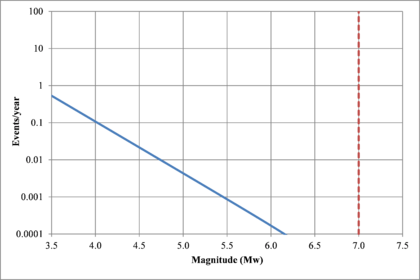

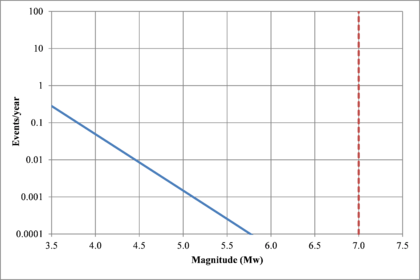

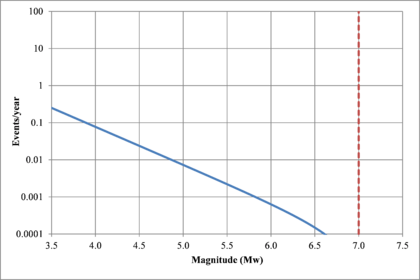

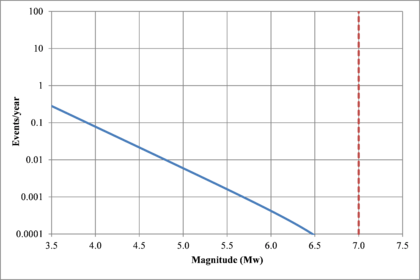

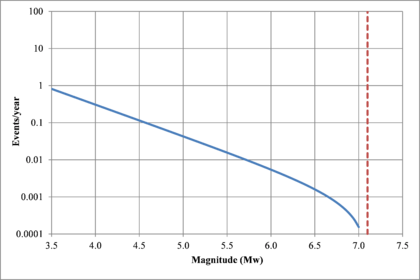

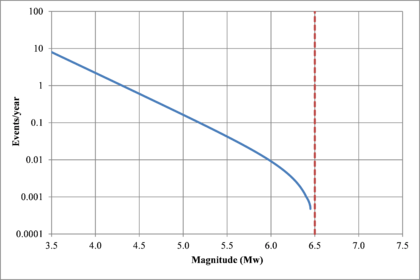

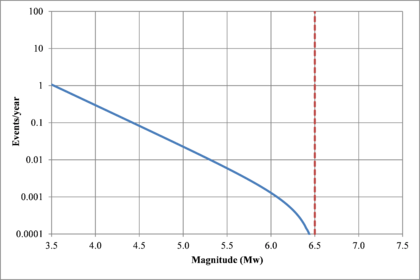

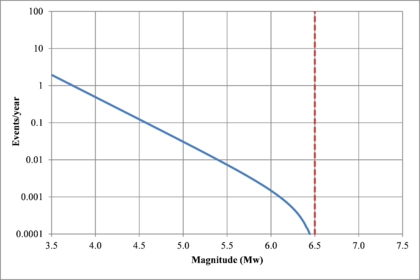

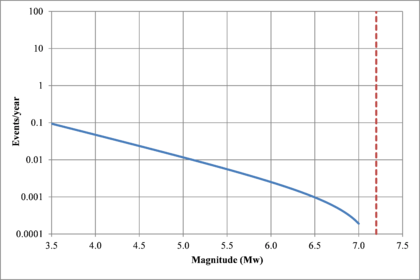

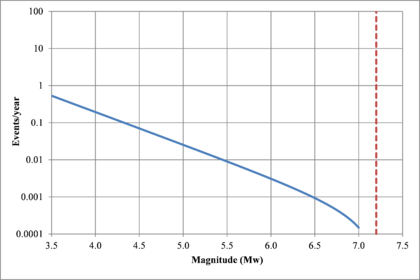

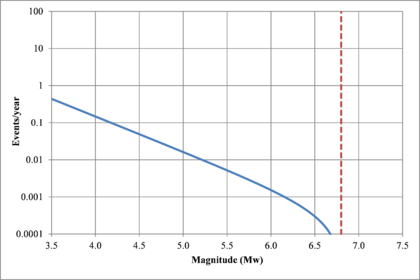

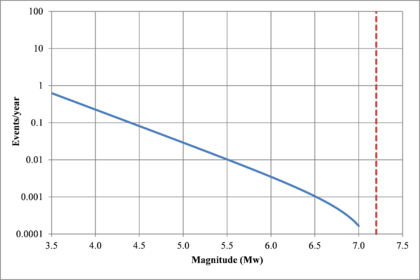

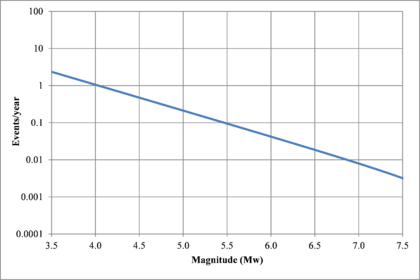

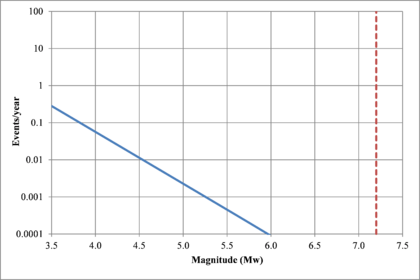

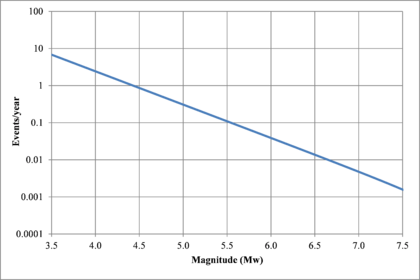

Figure 2.7 shows hypothetical magnitude exceedance rate plots for two seismogenetic sources, where the red line corresponds to a source with higher seismic activity and a higher potential to generate large-magnitude events than the source represented by the blue line. In this example, both sources have M0 equal to 3.5, but whereas λ0 is equal to 1.0 for the source represented by the continuous line, it is approximately 30 for the dotted one.

Once all the historical events in the earthquake catalogue had been assigned to the seismogenetic sources, the seismicity parameters λ0i and βi were calculated using the maximum likelihood method (Bender, 1983; McGuire, 2004). This method has proven to estimate both parameters more reliably than, for example, the linear regression methods previously used in Spain (IGN and UPM, 2013).

The λ0i parameter, being a rate, is calculated as the number of events N associated with each seismogenetic source divided by the observation timeframe t:

$$\lambda_{0i} = \frac{N}{t} \qquad (2.10)$$

This highlights the importance of determining the completeness window for the selected M0. For this study, t is equal to 32 years.

The βi parameters, on the other hand, are calculated by means of

$$\beta_i = \frac{N}{\sum_{j=1}^{N}\left(M_j - M_0\right)} \qquad (2.11)$$

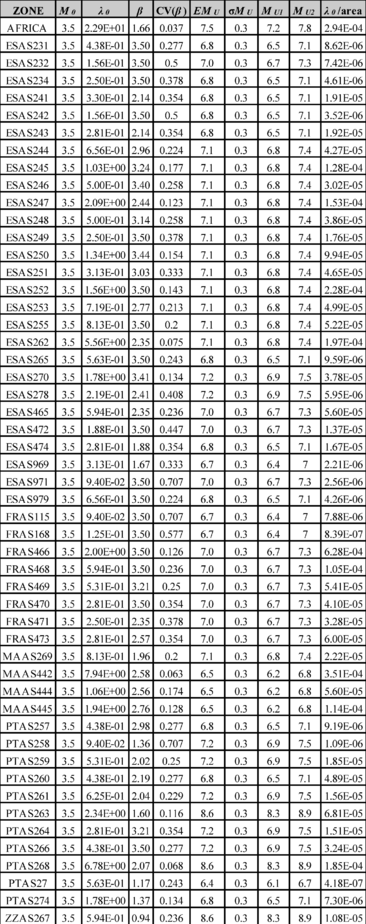

where, again, N is the number of events associated with the source, Mj is the magnitude of each event and M0 is the threshold magnitude of the source. It is worth noting that, although the threshold magnitude can differ from source to source, in this study it has been set to 3.5 for all of them. As a summary, Table 2.1 shows some statistics of the catalogue and its seismicity parameters. For practical purposes, β has been truncated at 3.5.

| Parameter | Value |
|---|---|
| N (events) | 2,629 |
| t (years) | 32 |
| λ0 (events/year) | 82.15 |
| β | 2.01 |

Because the βi parameters are considered random variables, representing a quantity that is not completely defined or understood, it is necessary to calculate their coefficient of variation, CV(β), by dividing the standard deviation by the mean value. After simplifying terms, it reduces to the following equation:

$$CV(\beta_i) = \frac{1}{\sqrt{N}} \qquad (2.12)$$
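The estimators of Equations 2.10 to 2.12 are short enough to write out. This sketch assumes that Equation 2.11 takes the form of Aki's maximum likelihood estimator, N/Σ(Mj − M0), with β in natural-logarithm units, and that Equation 2.12 reduces to 1/√N; with N = 2,629 and t = 32 years it reproduces the λ0 of Table 2.1 (82.156, reported there as 82.15).

```python
import math


def lambda0(n_events, years):
    """Eq. 2.10: mean annual number of events with M >= M0."""
    return n_events / years


def beta_mle(magnitudes, m0):
    """Eq. 2.11, assumed here to be Aki's estimator: N / sum(Mj - M0)."""
    return len(magnitudes) / sum(m - m0 for m in magnitudes)


def cv_beta(n_events):
    """Eq. 2.12, assumed to reduce to 1 / sqrt(N)."""
    return 1.0 / math.sqrt(n_events)
```

Since the coefficient of variation decreases only with the square root of N, sources with few assigned events carry a much larger uncertainty in β, which is one reason why sparsely populated sources such as ESAS241 borrow parameters from a neighbor.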

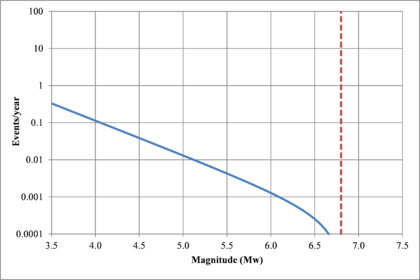

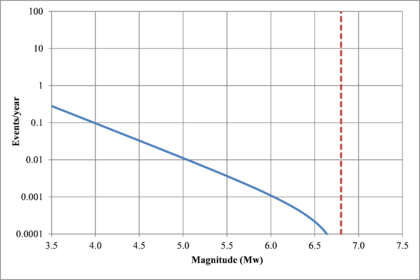

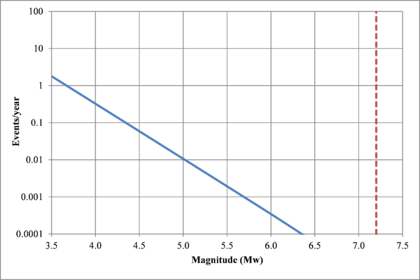

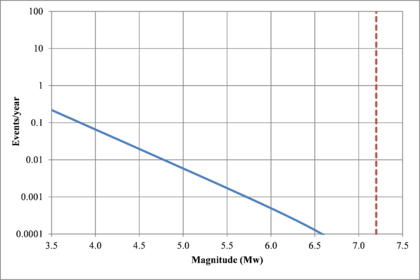

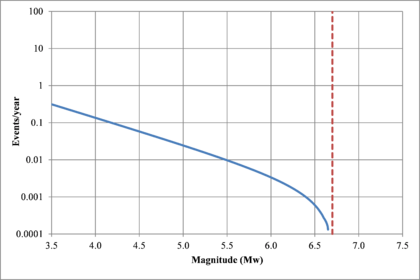

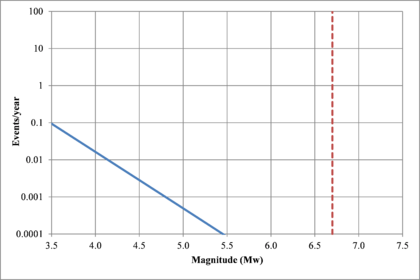

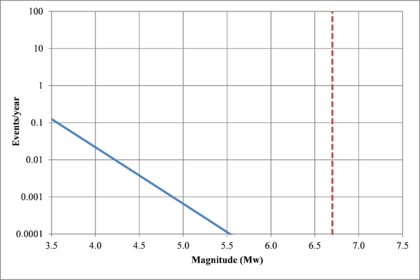

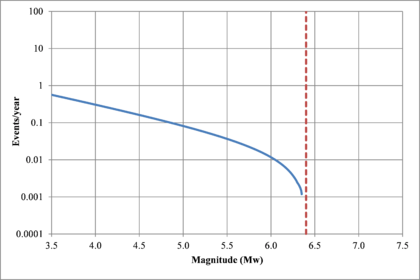

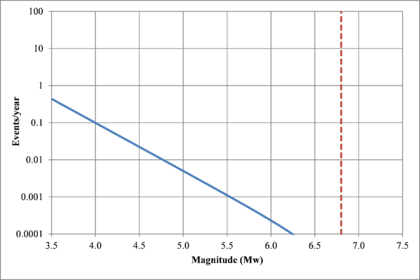

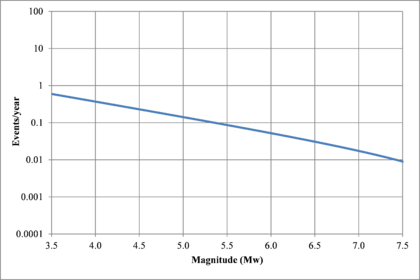

Based on that information, the magnitude exceedance rate plot for the complete catalogue can be calculated for verification purposes; as shown in Figure 2.8, it is plotted up to magnitude 6.5, the largest magnitude included in the catalogue.

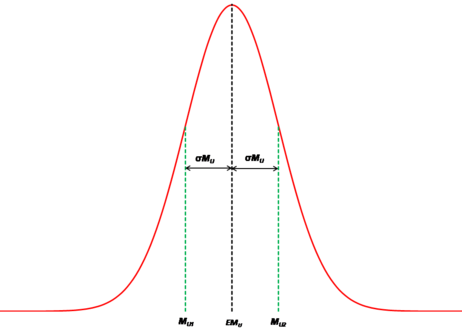

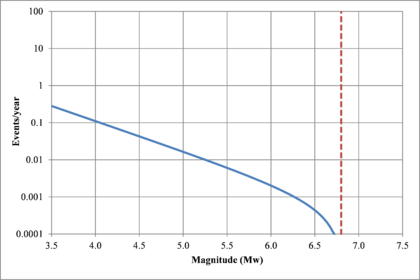

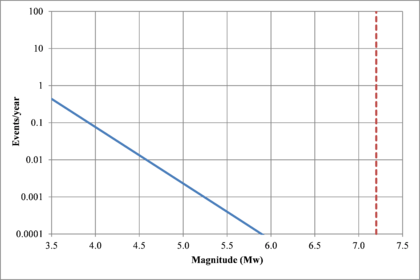

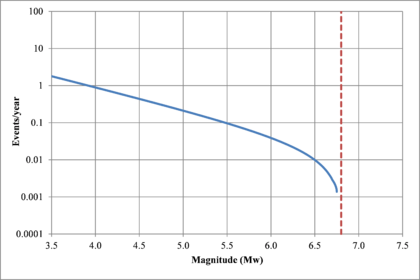

Finally, the maximum magnitude associated with each seismogenetic source must be determined. In this study, that value has been taken from the information reported by the SHARE project (GRCG, 2010). Because this parameter is uncertain, it is not considered a fixed value but a random variable following a normal distribution, defined by its expected value and standard deviation and truncated as shown in Figure 2.9. For this study, a standard deviation of 0.3 has been considered for all sources.
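The uncertainty in MU can be represented by sampling from a truncated normal distribution. The sketch below uses simple rejection sampling; the truncation bounds in the check are hypothetical (the actual ones are those shown in Figure 2.9), while the standard deviation of 0.3 is the value used in this study.

```python
import random


def sample_max_magnitude(expected, sigma, lower, upper, rng):
    """Draw MU from a normal(expected, sigma) distribution truncated to
    [lower, upper] by rejection sampling."""
    while True:
        m = rng.gauss(expected, sigma)
        if lower <= m <= upper:
            return m
```

Rejection sampling is adequate here because, with bounds of a few standard deviations, only a small fraction of draws is discarded.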

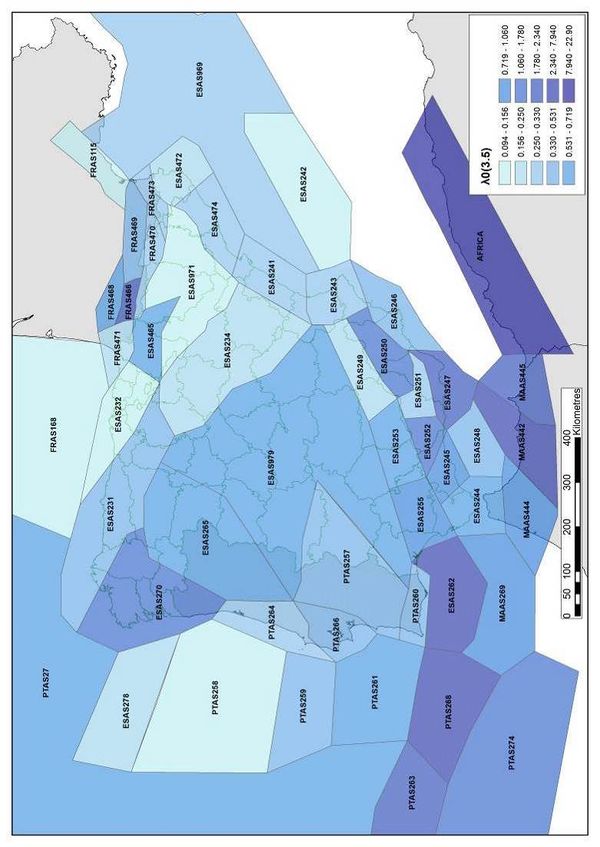

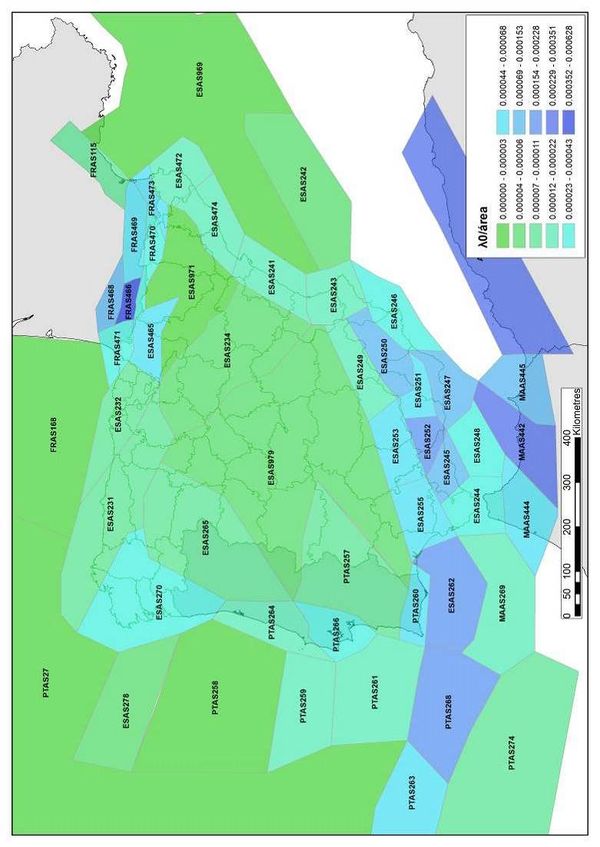

Figure 2.10 shows graphically the distribution of the λ0 value along the Iberian Peninsula whilst Figure 2.11 shows the seismicity per unit area for the same zone. Figure 2.12 shows the geographical distribution of the β value for the seismogenetic sources considered in this study. On the other hand, Figure 2.13 shows the geographical distribution of the expected maximum magnitude MU. Finally, Table 2.2 presents a summary of the seismicity parameters for all considered seismogenetic sources.

Annex A of this monograph shows the magnitude recurrence rate plots for all the seismogenetic sources considered in this study.

2.3.7 Strong ground motion attenuation relationships

Once the activity rate of each seismogenetic source has been defined through its seismicity parameters, it is necessary to evaluate the effects, in terms of physical seismic intensities, that each source generates at any point of interest. For that, it is necessary to know the intensity that would occur at the site of analysis (at this stage, at bedrock level) if an earthquake of known magnitude and distance occurred in the ith seismogenetic source.

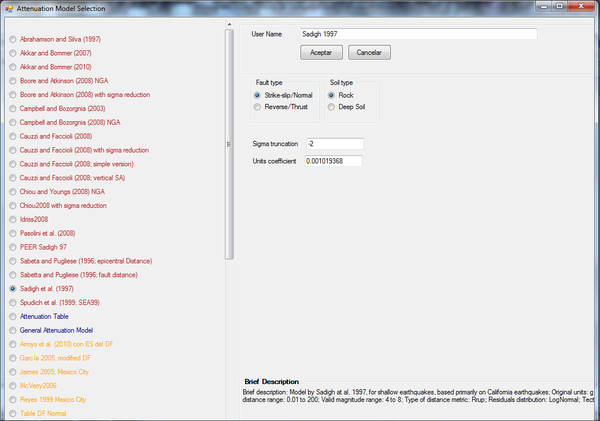

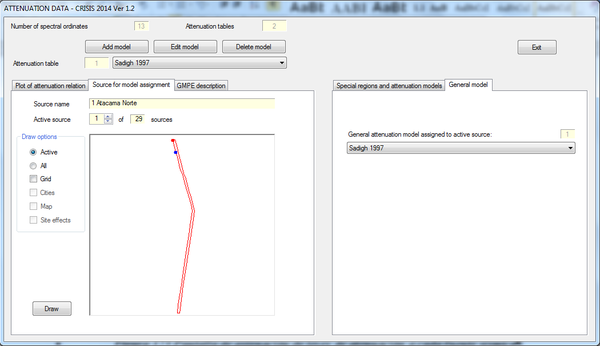

The selection of the GMPEs to be used in the analysis is a fundamental step in the PSHA, since it is through them that the physical parameters of seismic hazard are quantified. Usually, the relative position between the source and the site of analysis is specified by means of the focal distance which, as shown in Figure 2.1, is the distance between the rupture area and the site of analysis. In this study, the relevant seismic intensities are assumed to be the spectral ordinates of the response spectra, quantities that are approximately proportional to the lateral inertial forces generated in structures during earthquakes.

Regardless of the intensity measure selected, seismic intensity is subject to uncertainty, which is why it is assumed to be a lognormally distributed random variable with median given by the GMPE and standard deviation of the natural logarithm equal to σlna. In this study, a spectral GMPE is used to account for the fact that attenuation patterns differ between waves with different frequency content, that is, they depend on the fundamental period. Such GMPEs also allow calculating the response spectrum over the range of spectral ordinates they cover, for a given magnitude and distance. For this study, the GMPE proposed by Ambraseys et al. (2005) has been selected which, besides accounting for magnitude and distance as most GMPEs do, also considers the faulting mechanism and soil conditions:

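$$\log_{10} y = a_1 + a_2 M_w + (a_3 + a_4 M_w)\log_{10}\sqrt{d^2 + a_5^2} + a_6 S_S + a_7 S_A + a_8 F_N + a_9 F_T + a_{10} F_O \qquad (2.13)$$

where $y$ is the ground-motion parameter (PGA or spectral acceleration), $M_w$ is the moment magnitude, $d$ is the Joyner and Boore distance, $S_S$ and $S_A$ are dummy variables for soft and stiff soil, $F_N$, $F_T$ and $F_O$ are dummy variables for normal, thrust and odd faulting mechanisms, and $a_1$ to $a_{10}$ are the regression coefficients.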

This GMPE has been calibrated with an instrumental earthquake database for Europe and the Middle East, using regression analysis that includes a set of weighting factors. It is defined for spectral periods between 0.0 and 2.0 seconds, which is sufficient for the purposes of this assessment. The distance metric used by this GMPE is the Joyner and Boore (1981) distance and, to account for this, the corresponding option has been selected in the CRISIS 2014 software.
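The Joyner and Boore (1981) distance is the shortest horizontal distance from the site to the surface projection of the rupture, and it is zero when the site lies above the rupture. A minimal sketch of that definition follows, assuming the site and the rupture's surface projection (a simple polygon) are given in planar, projected coordinates in km; the function names are hypothetical and this does not reproduce how CRISIS 2014 computes the distance internally.

```python
import math

def _point_segment_distance(px, py, ax, ay, bx, by):
    """Shortest distance from point P to segment AB (planar coordinates, km)."""
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(px - ax, py - ay)
    # Projection parameter of P onto AB, clamped to the segment ends
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def _inside(px, py, poly):
    """Ray-casting point-in-polygon test."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > py) != (y2 > py):
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside

def joyner_boore_distance(site, rupture_projection):
    """R_JB: 0 inside the surface projection, else distance to its boundary."""
    px, py = site
    if _inside(px, py, rupture_projection):
        return 0.0
    n = len(rupture_projection)
    return min(
        _point_segment_distance(px, py, *rupture_projection[i],
                                *rupture_projection[(i + 1) % n])
        for i in range(n)
    )

# Hypothetical 10 km x 5 km rupture projection
rupture = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0), (0.0, 5.0)]
print(joyner_boore_distance((13.0, 9.0), rupture))  # nearest point is the corner (10, 5)
```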

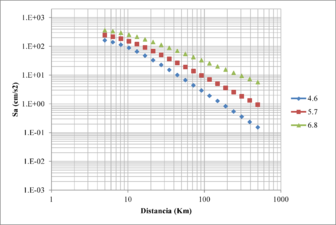

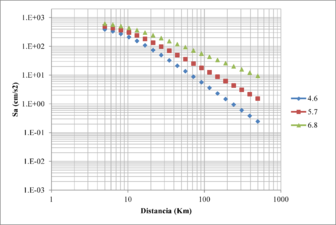

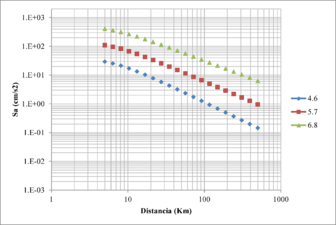

This same GMPE has been used in previous PSHAs in Spain, such as the one recently conducted by IGN and UPM (2013). Figures 2.14 to 2.16 show graphically the expected intensities for different magnitudes and spectral ordinates.

2.3.8 Analysis procedure

The main steps of the selected methodology are as follows:

- 1. Definition and characterization of the main seismogenetic sources: the geometry of the different seismogenetic sources is defined from previous studies and instrumental seismicity.

- 2. Estimation and assignment of the seismicity parameters for the seismogenetic sources: based on the instrumental earthquake catalogue, the seismicity parameters that can be estimated using statistical procedures are calculated for each seismogenetic source. Additionally, the maximum magnitude needs to be estimated for each source, and it can be defined by means of existing studies, expert opinion and morphotectonic information.

- 3. Assignment of GMPEs to the seismogenetic sources: each source must be associated with a GMPE that accounts for the attenuation of the seismic waves between the point of occurrence of the event and the site of analysis.

- 4. Generation of a set of stochastic scenarios: all the scenarios that comprise the stochastic set are compatible with the geometrical and seismicity information of each seismogenetic source. The epicenters are defined by recursively dividing the geometry of each source into simple geometries (triangles) and assigning the seismicity parameters to each segment, weighted by its area, which guarantees that the same seismicity per unit area is assigned in all cases.

- 5. Seismic hazard maps: maps with the spatial distribution of the seismic intensity are generated from the values in the intensity exceedance curves by selecting an arbitrary exceedance rate (or, equivalently, a return period). Also, because a spectral PSHA has been conducted, seismic hazard maps can be obtained for any of the 32 calculated spectral ordinates.

- 6. Amplification of the hazard parameters due to site effects: the dynamic response of soil deposits can modify the characteristics of the ground motion in terms of amplitude, frequency content and duration. The amplification (or de-amplification) of the hazard parameters due to soil deposits can be accounted for by different methodologies, such as including it directly in the GMPE or propagating shear waves through the different soil strata to define spectral transfer functions.
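Step 4 can be illustrated with a simplified sketch. It assumes planar coordinates in km, a hypothetical stopping criterion (split until the longest edge is no longer than `max_size`) and an epicenter at each sub-triangle centroid; for brevity only a single annual rate is distributed, and the subdivision criterion actually used by CRISIS 2014 is not reproduced here. Because each sub-triangle receives a share of the source's annual rate proportional to its area, the seismicity per unit area is the same everywhere in the source.

```python
import math

def tri_area(a, b, c):
    """Area of triangle abc from the cross product (planar coordinates)."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2.0

def midpoint(p, q):
    return ((p[0] + q[0]) / 2.0, (p[1] + q[1]) / 2.0)

def subdivide(a, b, c, max_size):
    """Recursively split a triangle into four via its edge midpoints
    until its longest edge is no longer than max_size."""
    if max(math.dist(a, b), math.dist(b, c), math.dist(c, a)) <= max_size:
        return [(a, b, c)]
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    pieces = []
    for tri in ((a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)):
        pieces.extend(subdivide(*tri, max_size))
    return pieces

def distribute_rate(source_triangles, annual_rate, max_size):
    """Return (epicenter, rate) pairs with rate proportional to sub-triangle area."""
    total_area = sum(tri_area(*t) for t in source_triangles)
    events = []
    for t in source_triangles:
        for sub in subdivide(*t, max_size):
            centroid = (sum(p[0] for p in sub) / 3.0, sum(p[1] for p in sub) / 3.0)
            events.append((centroid, annual_rate * tri_area(*sub) / total_area))
    return events

# Hypothetical source: one triangle with 0.2 events/year, sub-triangles up to 6 km
events = distribute_rate([((0.0, 0.0), (10.0, 0.0), (0.0, 10.0))], 0.2, 6.0)
print(len(events), sum(rate for _, rate in events))
```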

2.4 UNCERTAINTIES IN THE SEISMIC HAZARD ASSESSMENT

There are several uncertainties related to seismic hazard assessment and, to date, different procedures, methodologies and approaches exist to include them in the analysis so that they are reflected in the final results. As explained before, they can be classified into two main categories: epistemic and aleatory. The first relates to aspects whose uncertainty can be reduced or, in other words, aspects that can be better understood with more observations, although reducing epistemic uncertainties in seismic hazard can take generations (Woo, 2011). The second relates to aspects that, on the contrary, cannot be reduced even with a vast set of observations, and is associated with the randomness of the occurrence process.

This section explains how uncertainties have been considered in the different stages of the seismic hazard assessment. First, the aspects subject to uncertainty are classified into the categories mentioned above; then, the way they are considered in the selected methodology is explained. Uncertainty is particularly important for areas of low or moderate seismic activity such as Spain, since hazard assessments in those areas are known to be based on incomplete and imperfect knowledge (Egozcue et al., 1991; Muir-Wood, 1993). Considering uncertainties in the analysis does not increase its accuracy, but it contributes considerably to the transparency of the assessment process. Finally, it is worth noting that PSHA allows uncertainties to be considered only as long as they are quantifiable.

Uncertainty exists due to measurement errors (e.g. the magnitude of an earthquake, the depth of an event, the PGA of an event), interpretation errors (e.g. well-recorded accelerograms can be misinterpreted by the person who handles them) and spatial reasons (even if the data are recorded and interpreted appropriately, they are coarse-grained compared with the resolution level of the analysis) (Caers, 2011). Aleatory uncertainties in ground motion estimation are related, for example, to the rupture of the fault (both location and extent), the wave propagation path and, at local level, the site effects.

PSHA uncertainty can be associated with the following aspects (Grossi et al., 1998; Woo, 2011):

- The number of considered seismogenetic sources: it is common practice to group active faults with similar seismic activity into families; what is considered similar, and how many families are considered in a specific tectonic context, does not follow any formal standard and involves several subjective decisions made by experts.

- Geometry of the seismogenetic sources: this uncertainty exists regardless of the selected geometrical model. Parameters such as the maximum depth, dip angle and surface projection, although mostly based on the best available data, do not exactly represent the location and geometrical characteristics of the faults.

- Maximum magnitude: there are different ways to establish the maximum magnitude, from approaches based on expert opinion to mathematically based ones. It is a very important value since, once it has been assigned to a seismogenetic source, in practice no earthquake with a magnitude higher than the assigned value can occur in that source.

- Magnitude recurrence rate: this rate is calculated from information that may be affected by measurement and interpretation errors (historical and instrumental earthquakes).

- Attenuation of the seismic waves: there will always be dispersion between the recorded seismic intensities and those predicted by the GMPE; moreover, GMPEs are usually developed for specific tectonic environments using local or global ground motion databases.

In PSHA, the aleatory uncertainty is considered by directly integrating over the probability density function of the scatter, while the epistemic uncertainty is usually included by means of logic trees (Spence et al., 2003; Bommer, 2012; Arroyo et al., 2014). Most of the uncertainty in PSHA is aleatory, and it is related to the characteristics of strong ground motion (Bommer and Crowley, 2006).

GMPE models, such as the one considered in this analysis, represent uncertainty through a lognormal distribution, which has proven to represent the variability well, especially in terms of spectral accelerations (Abrahamson, 1988; 2000). That is, in GMPEs the natural logarithm of the seismic intensity follows a normal distribution. In seismic hazard assessments performed at bedrock level, uncertainty is captured by the σ value of the GMPE (Bazzurro and Luco, 2005), which is the standard deviation of the logarithmic residuals. σ therefore represents the aleatory variability of the GMPE, that is, the randomness of the observed motions with respect to the prediction equation. This variability cannot be reduced for a given predictive model; lower values of σ can only be obtained by introducing changes (such as improvements and refinements) to the model itself.
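To make the meaning of σ concrete: it is the standard deviation of the natural-log residuals between observed motions and the GMPE prediction. The sketch below assumes paired lists of observed spectral accelerations and GMPE medians (hypothetical inputs) and ignores any decomposition into inter-event and intra-event components that a particular GMPE may use.

```python
import math
import statistics

def sigma_ln(observed, predicted_median):
    """Sample standard deviation of the residuals ln(observed / predicted)."""
    residuals = [math.log(o / p) for o, p in zip(observed, predicted_median)]
    return statistics.stdev(residuals)

# Hypothetical records whose log residuals are -0.5, 0.0 and +0.5
predicted = [0.10, 0.20, 0.30]
observed = [0.10 * math.exp(-0.5), 0.20, 0.30 * math.exp(0.5)]
print(sigma_ln(observed, predicted))
```

Refining the model so that it explains part of these residuals lowers σ, which is the only way σ can be reduced.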

Logic trees have become common practice to account, in theory, for epistemic uncertainties. For the choice among GMPEs to be classified in that category, it is assumed that all the selected GMPEs are valid and appropriate for the area under analysis. The objective of the logic tree approach is to include the best estimation of both what is known and what is not known in the same analysis. However, doing so does not necessarily mean that uncertainty is considered appropriately. First, it is common practice to use logic trees when assigning GMPEs to different tectonic environments, but then the issue of what weight to assign to each model arises. There are several challenges in the way logic trees are built for PSHA (Bommer, 2012), and there are cases where the modeler at some stage loses control of what the weights mean and represent. Different procedures to assign weights to GMPEs have recently been published, and one with a very robust and consistent methodological basis has been developed by Arroyo et al. (2014).
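One common way to summarize a logic tree is the weighted mean hazard curve: at each intensity level, the exceedance rates of the branches are averaged with the branch weights. The sketch below assumes all branches are evaluated at the same intensity levels; the function name and the numbers are hypothetical, and fractile curves, which are often reported alongside the mean, are not computed.

```python
def mean_hazard_curve(branch_curves, weights, tol=1e-9):
    """Weighted mean of branch exceedance rates at common intensity levels.
    branch_curves[i][k] is the annual exceedance rate of branch i at level k."""
    if abs(sum(weights) - 1.0) > tol:
        raise ValueError("logic-tree branch weights must sum to 1")
    n_levels = len(branch_curves[0])
    return [
        sum(w * curve[k] for w, curve in zip(weights, branch_curves))
        for k in range(n_levels)
    ]

# Two hypothetical GMPE branches evaluated at the same two intensity levels
print(mean_hazard_curve([[0.1, 0.01], [0.2, 0.03]], [0.7, 0.3]))
```

With a single branch of weight 1.0 the curve is returned unchanged, which corresponds to using only one GMPE.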

In this study, only one GMPE has been considered, as previously mentioned, which is equivalent to assigning a weight of 1.0 to that model; in other words, strictly speaking, epistemic uncertainty has not been considered directly. Only one GMPE is used because the main objective of this PSHA is to generate the set of stochastic scenarios that will later be used for the probabilistic risk analysis, and not only to produce hazard maps. Since there are normally important differences among the seismic intensities expected from different GMPEs, combining several of them (with their associated weights) over many magnitude-distance pairs is likely to produce results beyond the modeler's control, which would make it impossible to verify how rational the risk results are.

Which category (epistemic or aleatory) is associated with each aspect of the PSHA depends on the context and is part of the modeler's challenges. In some cases, even deciding which aspects belong to the aleatory category constitutes a philosophical issue. Also, uncertainties that at first are purely scientific eventually become philosophical ones, since decisions made on the basis of them have an impact on society (Caers, 2011).

2.5 SEISMIC HAZARD RESULTS

This section presents the PSHA results for Spain in terms of hazard curves, uniform hazard spectra (UHS) and hazard maps for different return periods and spectral ordinates.

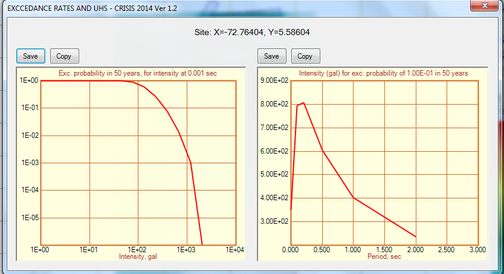

2.5.1 Hazard curves for selected cities

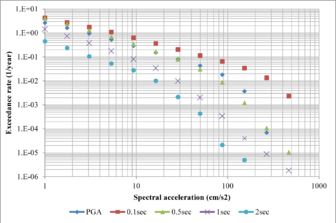

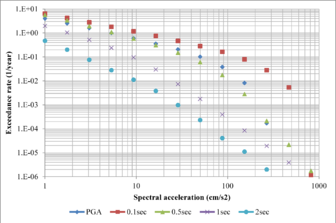

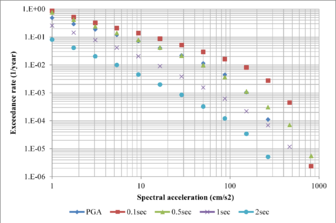

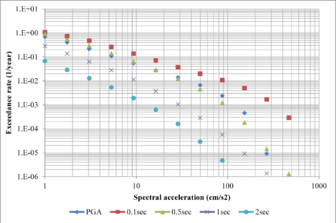

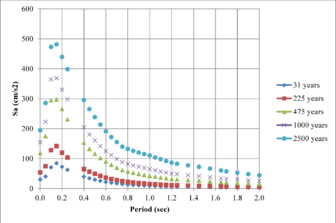

Hazard curves, also known as intensity exceedance curves, can be calculated for any point within the area of analysis and for any spectral ordinate within the range of the GMPE. They relate intensity values (in this case spectral accelerations) to exceedance rates (in this case expressed as the mean number of exceedances per year). Figures 2.17 to 2.20 present the hazard curves for five selected spectral ordinates for Lorca, Granada, Barcelona and Girona.

When interpreting the hazard curves, it is important to bear in mind that they contain information not only about earthquakes occurring at different distances and with different magnitudes, but also about earthquakes occurring in different seismogenetic sources; therefore, validating them against a single observation is completely incorrect.

2.5.2 Uniform hazard spectra for selected cities

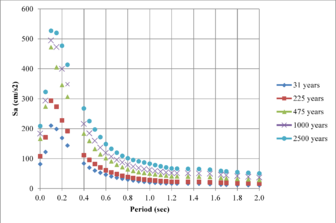

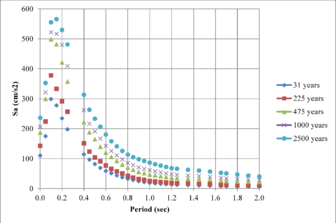

From the hazard curves for different spectral ordinates presented previously, it is possible to obtain the UHS for any return period. In a UHS, the value at every spectral ordinate has the same exceedance rate or, equivalently, the same return period. Figures 2.21 to 2.24 show the UHS for the same four cities mentioned above for the following return periods: 31, 225, 475, 1,000 and 2,500 years.
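The UHS construction can be sketched as follows. The sketch assumes each hazard curve is given as ascending intensity levels with strictly decreasing, positive exceedance rates, and interpolates linearly in log-log space (a common choice, not necessarily the one used in CRISIS 2014); the return period is taken as the inverse of the exceedance rate. Applying the same interpolation at every grid node for a fixed spectral ordinate gives the values mapped in step 5 of Section 2.3.8. The function names and curves are hypothetical.

```python
import math

def intensity_at_rate(intensities, rates, target_rate):
    """Intensity with the target exceedance rate, by log-log interpolation.
    intensities: ascending; rates: strictly decreasing and positive."""
    for k in range(len(rates) - 1):
        r1, r2 = rates[k], rates[k + 1]
        if r1 >= target_rate >= r2:
            a1, a2 = intensities[k], intensities[k + 1]
            f = (math.log(target_rate) - math.log(r1)) / (math.log(r2) - math.log(r1))
            return math.exp(math.log(a1) + f * (math.log(a2) - math.log(a1)))
    raise ValueError("target rate outside the range of the hazard curve")

def uniform_hazard_spectrum(curves, return_period):
    """curves: {period_s: (intensities, rates)} -> {period_s: Sa} at rate 1/return_period."""
    target = 1.0 / return_period
    return {T: intensity_at_rate(a, r, target) for T, (a, r) in sorted(curves.items())}

# Hypothetical hazard curves for PGA (T = 0.0 s) and T = 0.2 s
curves = {
    0.0: ([0.1, 0.2, 0.4], [1e-2, 1e-3, 1e-4]),
    0.2: ([0.2, 0.4, 0.8], [1e-2, 1e-3, 1e-4]),
}
print(uniform_hazard_spectrum(curves, 1000.0))
```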

2.5.3 Seismic hazard maps

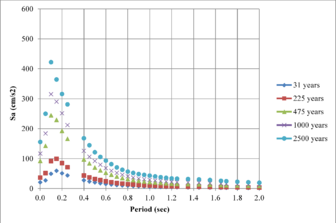

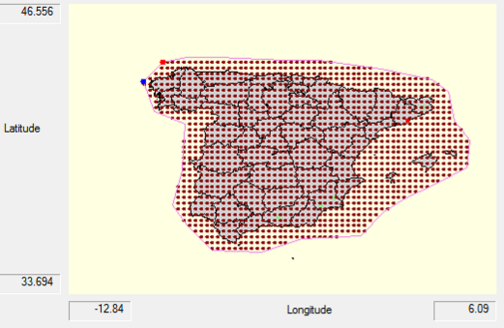

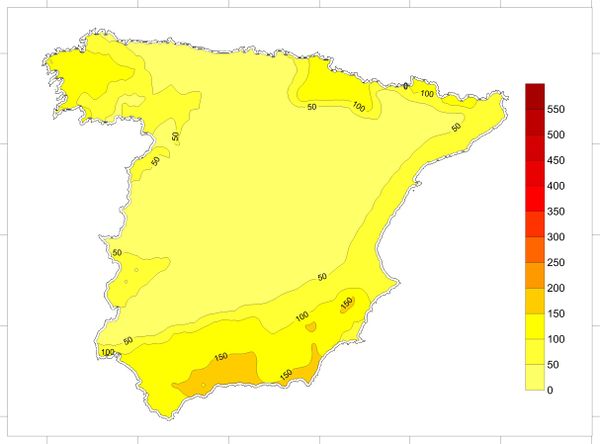

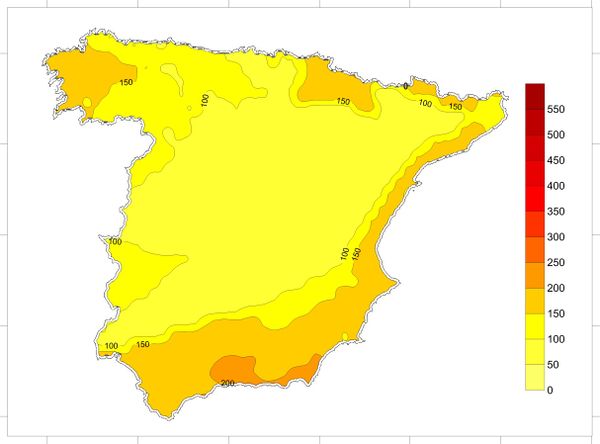

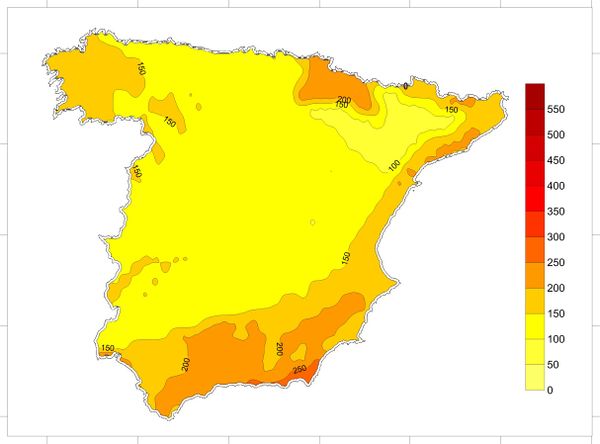

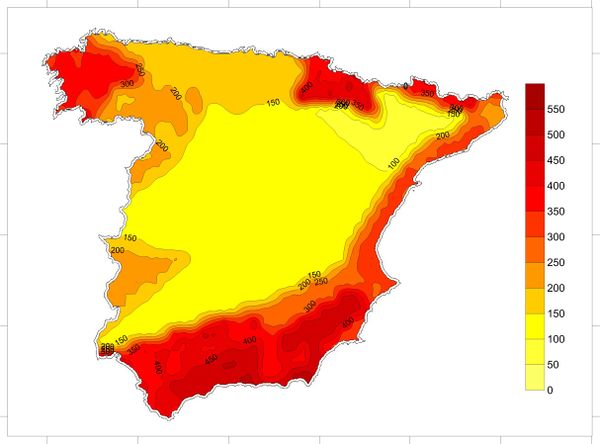

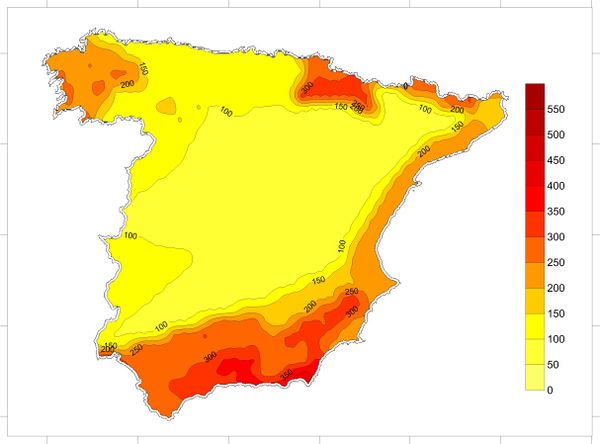

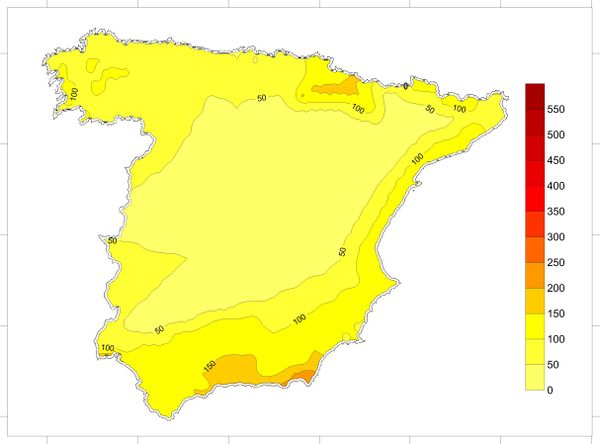

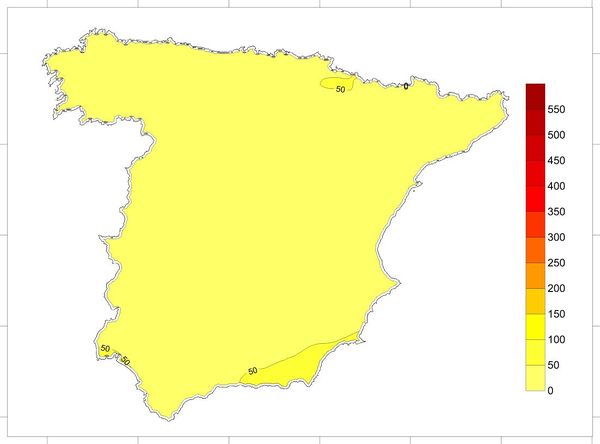

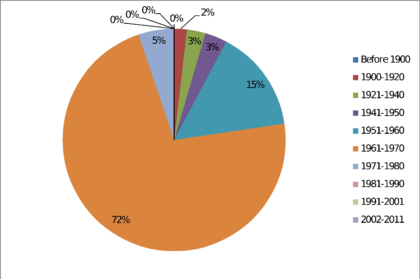

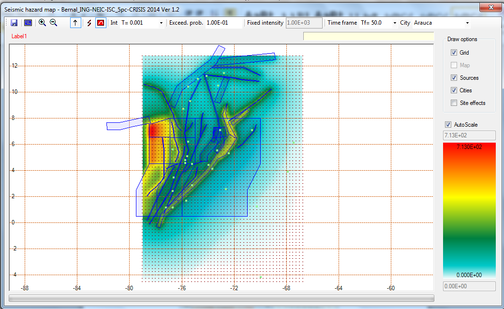

Based on the information contained in the hazard curves, it is possible to generate hazard maps for different return periods and spectral ordinates. In this case, the seismic hazard maps have been calculated on a grid with 0.25° spacing in both orthogonal directions that covers the entire analysis area, as shown in Figure 2.25.

Figure 2.26 shows the seismic hazard map of Spain for PGA and a 475-year return period obtained in this study. Annex B shows the hazard maps obtained for other return periods and spectral ordinates. Seismic hazard maps are very useful tools to communicate PSHA results, but it must always be borne in mind that any map is just a visual guide to reality (Woo, 2011).

Given all these steps, it is evident that the most important output of the PSHA is the hazard curve, from which UHS and maps for different return periods can be generated.

2.5.4 Set of stochastic scenarios

Another important output of this PSHA is a set of stochastic scenarios with a large number of events associated with each of the considered seismogenetic sources, compatible with the seismicity parameters that describe their activity. This output is particularly important for the probabilistic seismic risk assessment to be conducted later in this study at national and local level.

Stochastic earthquakes allow events to be considered where they have not (yet) occurred, and their generation has been common practice in recent assessments (Ordaz, 2000; Grossi, 2000; Liechti et al., 2000; Zolfaghari, 2000). The stochastic scenarios are saved in a *.AME file, a format that stores all the information regarding the expected intensities at ground level in terms of spectral acceleration for 32 fundamental periods, together with the variance and the frequency of occurrence of each scenario. For this assessment, a total of 50,982 events have been generated, which are later used to calculate seismic risk in probabilistic terms.
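A hazard curve at a site can be recovered from such a scenario set by adding, over all scenarios, the annual frequency of each scenario times the probability that its lognormal intensity exceeds a given level. The sketch below assumes that each scenario provides an annual frequency, a median spectral acceleration at the site for one period, and the standard deviation of ln Sa (taken as the square root of the stored variance, assumed here to refer to ln Sa). The `Scenario` fields are hypothetical, and the *.AME file format itself is not parsed.

```python
import math
from dataclasses import dataclass

@dataclass
class Scenario:
    annual_rate: float  # frequency of occurrence (events/year)
    median_sa: float    # median spectral acceleration at the site (g)
    sigma_ln: float     # standard deviation of ln(Sa)

def exceedance_rate(scenarios, a):
    """Annual rate at which Sa exceeds a, summed over all scenarios."""
    total = 0.0
    for s in scenarios:
        z = (math.log(a) - math.log(s.median_sa)) / s.sigma_ln
        # Rate of the scenario times P(Sa > a | scenario), lognormal intensity
        total += s.annual_rate * 0.5 * math.erfc(z / math.sqrt(2.0))
    return total

def hazard_curve(scenarios, levels):
    return [exceedance_rate(scenarios, a) for a in levels]

# Two hypothetical scenarios affecting the same site
scenarios = [Scenario(0.01, 0.2, 0.6), Scenario(0.002, 0.2, 0.5)]
print(hazard_curve(scenarios, [0.05, 0.1, 0.2, 0.4]))
```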

2.5.5 Comparison of the results with the elastic design spectra defined in NCSE-02 and Eurocode-8

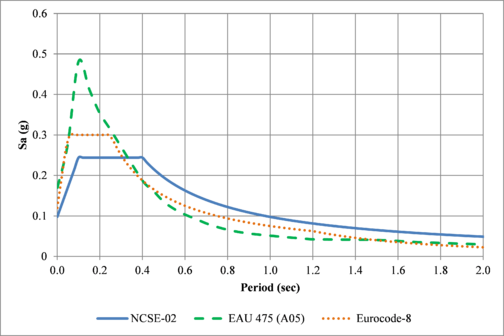

The elastic design spectra of the European earthquake-resistant building code consider the PGA value for an exceedance probability of 10% in 50 years, which is equivalent to a mean return period of 475 years. Figure 2.27 compares the UHS for 475 years at bedrock level in Lorca with the spectrum specified in the Spanish earthquake-resistant building code NCSE-02 (MF, 2009) and with the Type 2 spectrum of Eurocode-8 (ECS, 2004), which applies to Spain.
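The equivalence between 10% in 50 years and 475 years can be checked with a short derivation, assuming that earthquake occurrence follows a Poisson process (the usual assumption behind this correspondence). With exposure time $t$, mean return period $T_R$ and exceedance probability $P$:

$$P = 1 - e^{-t/T_R} \quad\Longrightarrow\quad T_R = -\frac{t}{\ln(1-P)} = -\frac{50}{\ln 0.9} \approx \frac{50}{0.10536} \approx 474.6 \text{ years} \approx 475 \text{ years}$$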

2.6 LOCAL SITE EFFECTS

During an earthquake, there are two main types of local site effects that can modify the free-field ground motion and its consequences. In the first, the soil modifies the frequency content and the amplitude of the seismic waves, making the ground motion more or less severe, with a direct influence on the expected damages and losses. The second has to do with soil failure and rupture, which generate both horizontal and vertical displacements with obviously damaging effects on the infrastructure located on top of them.

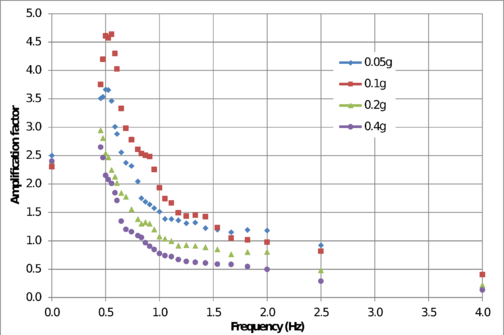

The dynamic behavior of stratified soil deposits is usually modelled by means of spectral transfer functions, which provide the amplification values that modify the spectral accelerations initially estimated at bedrock level. These spectral transfer functions can be defined for different intensities at bedrock level to account for the non-linearity of the soil in terms of stiffness degradation and damping increase. Figure 2.28 shows a typical spectral transfer function for a soft soil deposit, where the highest amplification occurs at low frequencies.

From the calculated spectral transfer functions, the spectral acceleration at ground level, $Sa_{ground}$, is calculated as follows:

$$Sa_{ground} = AF_{PGA} \cdot Sa_{tf} \qquad (2.14)$$

where $AF_{PGA}$ is the amplification factor for a given PGA and $Sa_{tf}$ is the spectral acceleration calculated at bedrock level from the initial seismic hazard model.
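Applying a PGA-dependent transfer function at one spectral ordinate can be sketched as follows. The sketch assumes the amplification factors are tabulated for a few bedrock PGA levels and linearly interpolated between them (clamped outside the tabulated range); the interpolation rule, the function names and the numbers (decreasing with PGA to mimic soil non-linearity) are hypothetical.

```python
import bisect

def amplification_factor(pga_levels, af_by_level, pga):
    """Linear interpolation of AF between tabulated bedrock PGA levels (clamped)."""
    if pga <= pga_levels[0]:
        return af_by_level[0]
    if pga >= pga_levels[-1]:
        return af_by_level[-1]
    k = bisect.bisect_right(pga_levels, pga)
    p1, p2 = pga_levels[k - 1], pga_levels[k]
    f = (pga - p1) / (p2 - p1)
    return af_by_level[k - 1] + f * (af_by_level[k] - af_by_level[k - 1])

def sa_ground(sa_bedrock, pga_bedrock, pga_levels, af_by_level):
    """Sa_ground = AF_PGA * Sa_tf for one spectral ordinate."""
    return amplification_factor(pga_levels, af_by_level, pga_bedrock) * sa_bedrock

# Hypothetical AF at one period for bedrock PGA of 0.1, 0.3 and 0.5 g
print(sa_ground(0.5, 0.2, [0.1, 0.3, 0.5], [2.0, 1.6, 1.2]))
```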

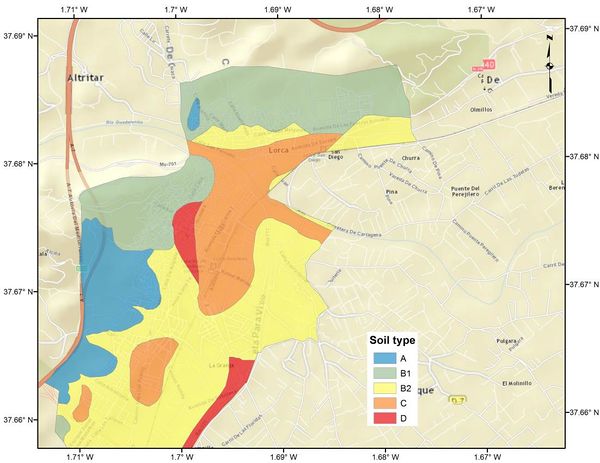

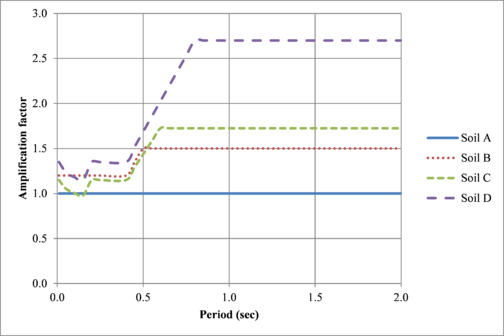

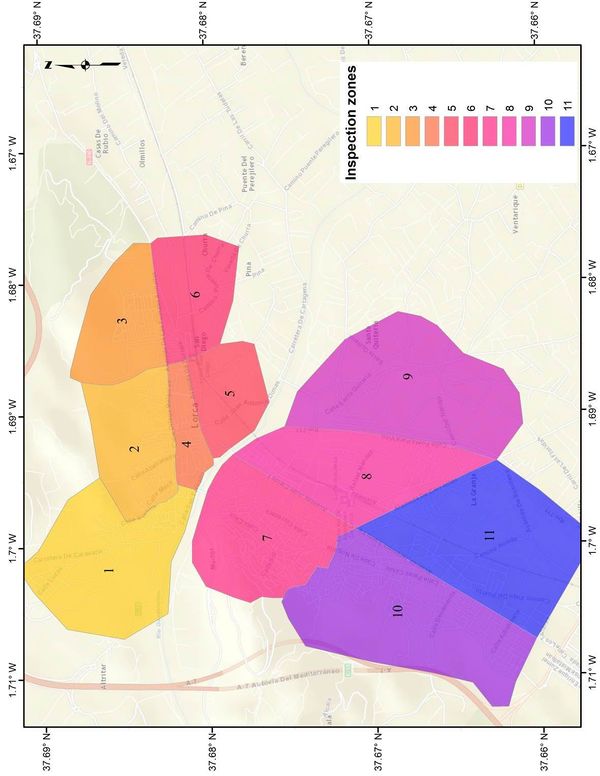

2.6.1 Site-effects in Lorca

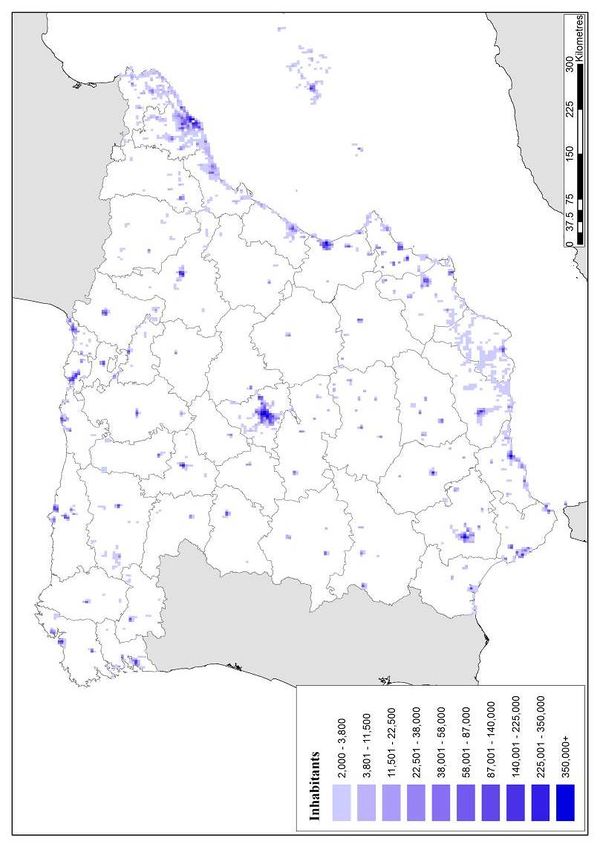

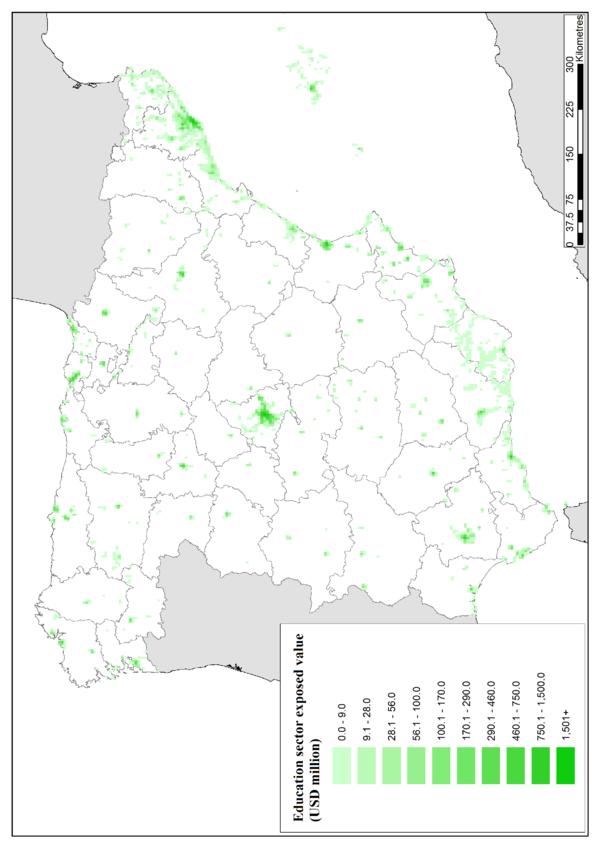

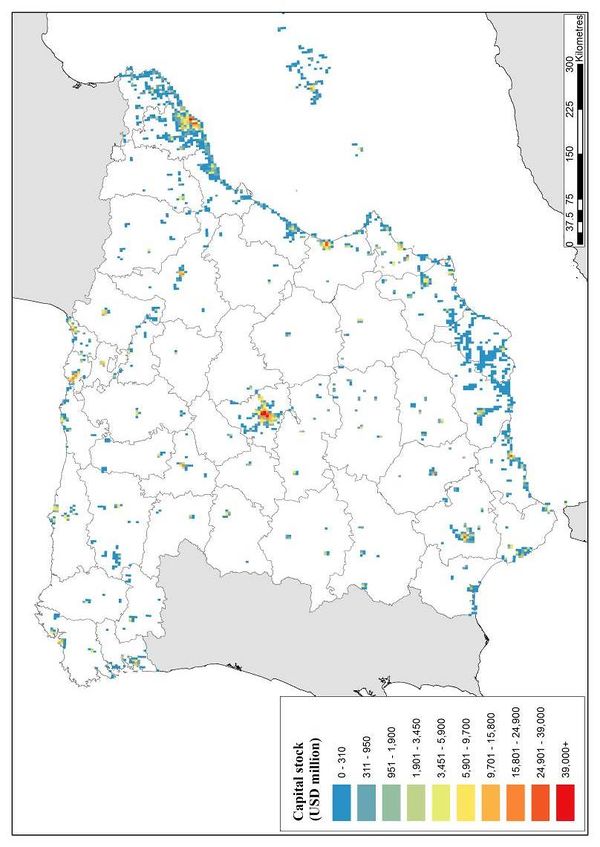

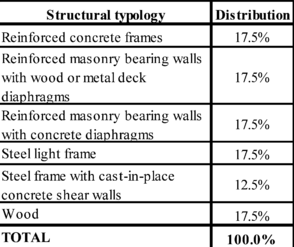

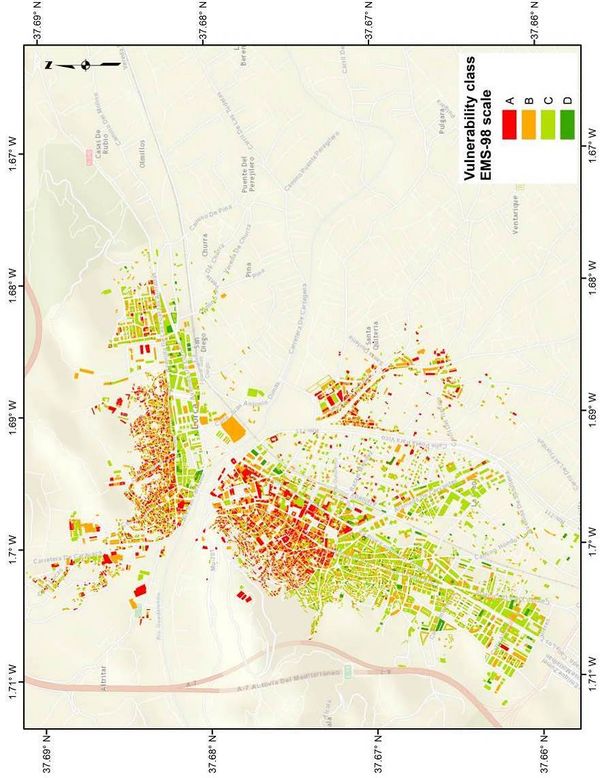

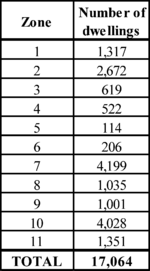

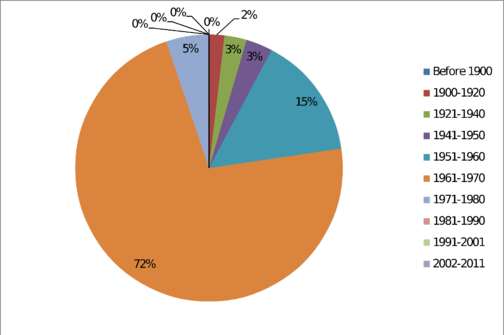

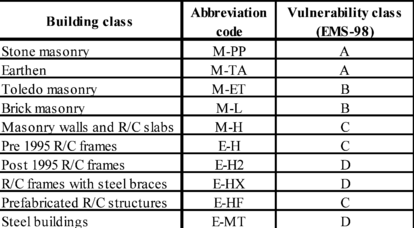

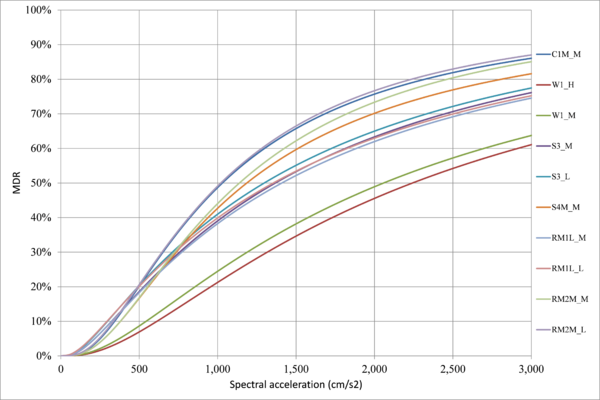

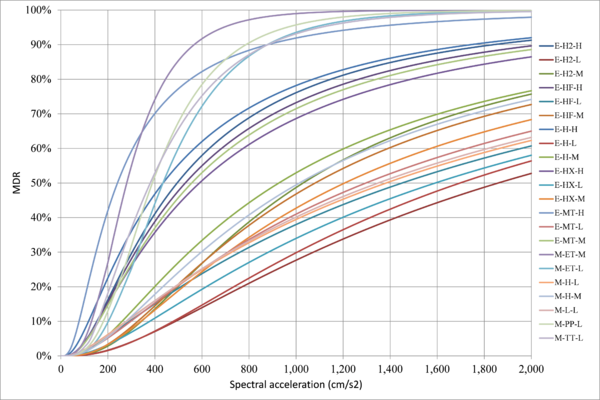

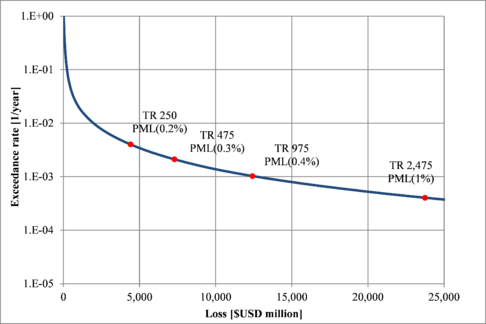

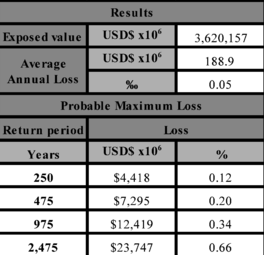

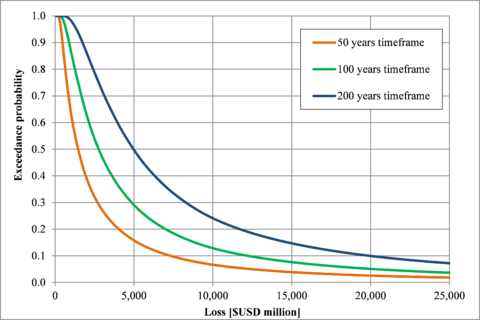

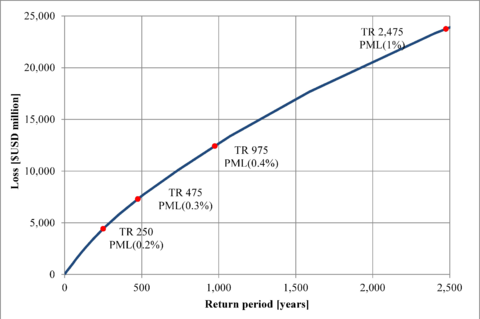

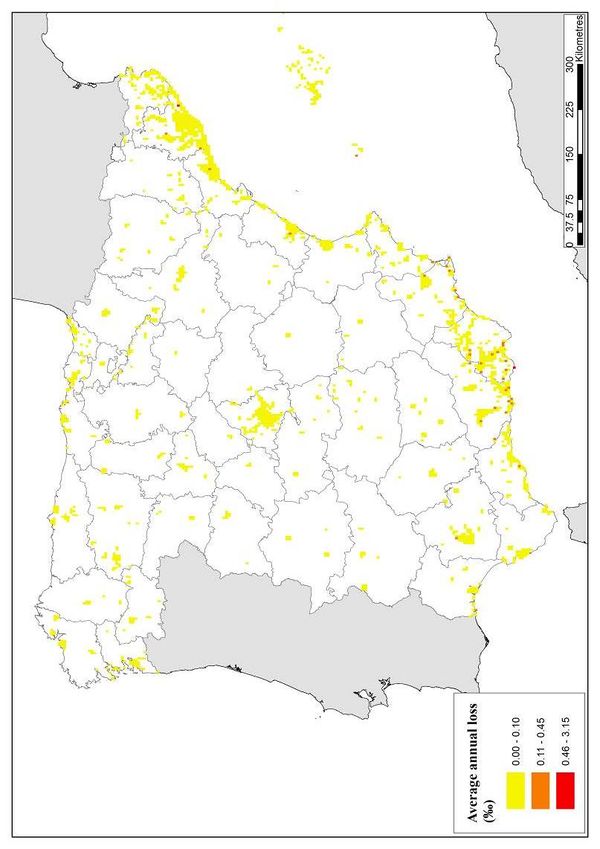

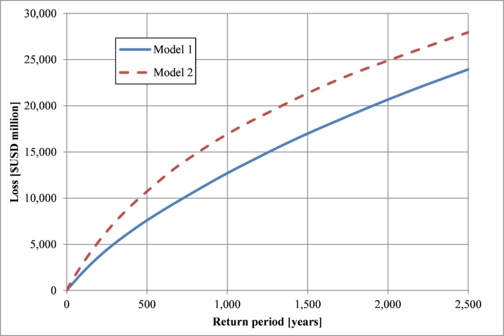

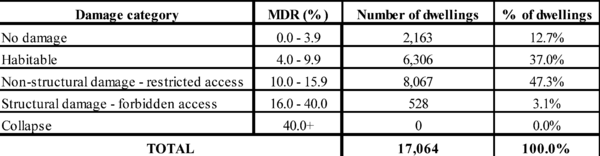

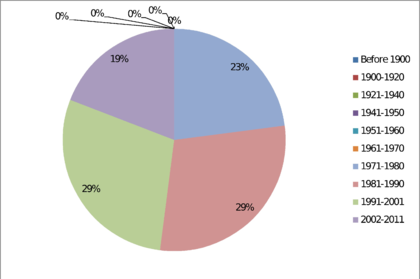

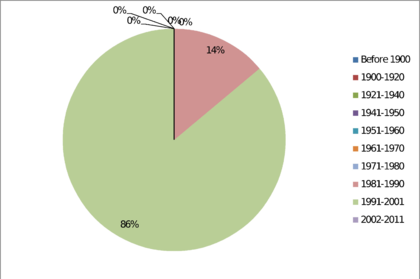

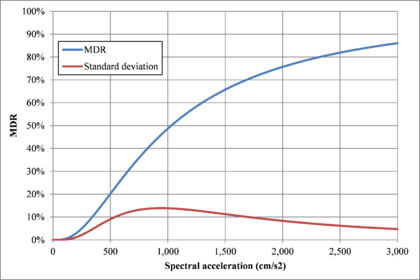

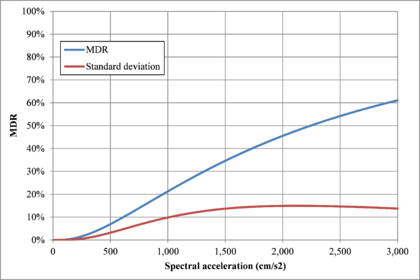

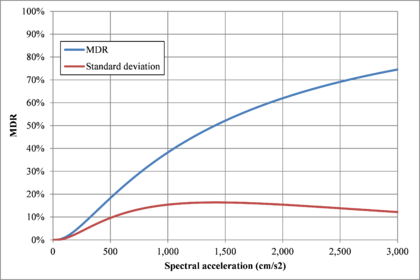

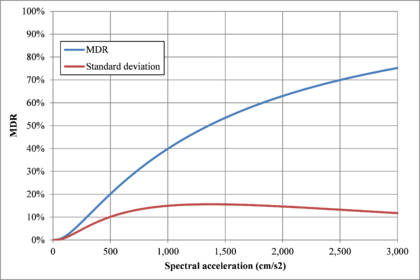

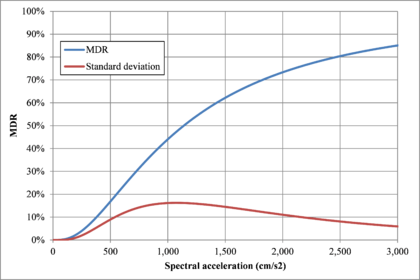

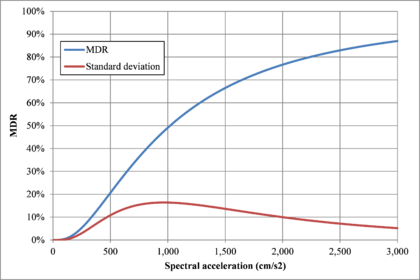

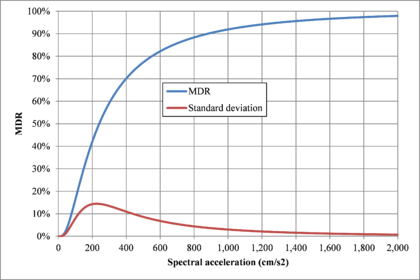

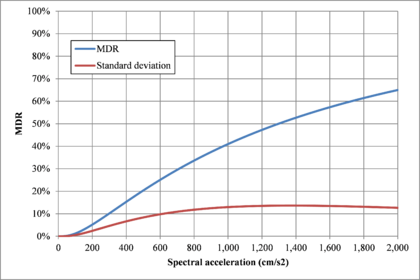

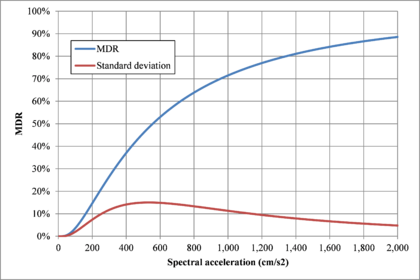

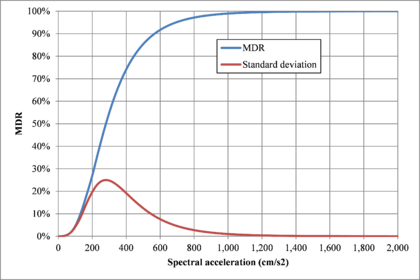

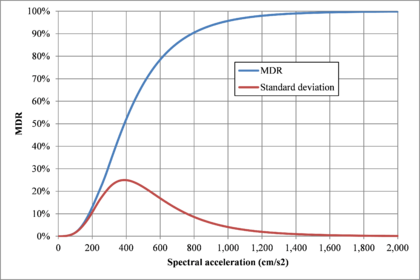

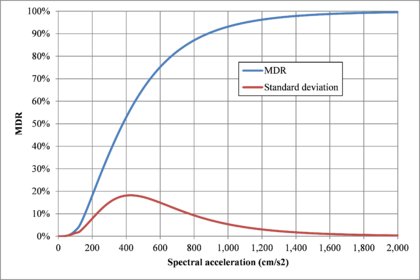

Based on the work developed by Navarro et al. (2014), several homogeneous soil zones can be determined for the urban area of Lorca, as shown in Figure 2.29. Each soil zone has been assigned a soil category according to the Eurocode-8 (ECS, 2004) classification. From that information, a spectral transfer function can be defined for each zone as the ratio between the design spectrum for the identified soil category and the design spectrum for hard soil (rock).
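As an illustration of this ratio, the sketch below uses the normalized Eurocode-8 elastic spectrum shape with 5% damping (η = 1) and Type 2 parameters recalled from EN 1998-1 (Table 3.3). These values should be checked against the code before use, and neither the NCSE-02 spectrum nor any national-annex modification is included. The function names are hypothetical, and the code does not limit periods to the range covered by the standard. Unlike the PGA-dependent transfer functions described above, this ratio does not depend on the bedrock intensity.

```python
# Assumed Type 2 parameters (S, TB, TC, TD in s) per ground type, as recalled
# from EN 1998-1 Table 3.3; verify against the standard before use.
EC8_TYPE2 = {
    "A": (1.00, 0.05, 0.25, 1.2),
    "B": (1.35, 0.05, 0.25, 1.2),
    "C": (1.50, 0.10, 0.25, 1.2),
    "D": (1.80, 0.10, 0.30, 1.2),
    "E": (1.60, 0.05, 0.25, 1.2),
}

def ec8_elastic_shape(T, S, TB, TC, TD, eta=1.0):
    """Normalized horizontal elastic spectrum Se(T)/ag (eta = 1 for 5% damping)."""
    if T <= TB:
        return S * (1.0 + T / TB * (2.5 * eta - 1.0))
    if T <= TC:
        return 2.5 * S * eta
    if T <= TD:
        return 2.5 * S * eta * TC / T
    return 2.5 * S * eta * TC * TD / T ** 2

def spectral_transfer_function(T, soil, rock="A"):
    """Ratio of the soil design spectrum to the rock design spectrum at period T."""
    return ec8_elastic_shape(T, *EC8_TYPE2[soil]) / ec8_elastic_shape(T, *EC8_TYPE2[rock])

# Hypothetical zone classified as ground type C
for T in (0.0, 0.05, 0.5):
    print(T, spectral_transfer_function(T, "C"))
```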