
Abstract

The development of Teacher’s Digital Competence (TDC) should start in initial teacher training (ITT) and continue throughout the following years of practice, with the purpose of using Digital Technologies (DT) to improve teaching and professional development. This paper presents a study focused on diagnosing TDC among final-year ITT students from Chile and Uruguay. A quantitative methodology was designed and implemented with a representative sample of 568 students (n=273 from Chile and n=295 from Uruguay). TDC was also studied and discussed in relation to gender and educational level. Results showed a mostly basic level for the four dimensions of TDC in the sample. Regarding the relationship between these variables and TDC, the dimension “Planning, organization and management of spaces and technological resources” is the only one showing significant differences. In particular, male students achieved a higher TDC level than female students. Furthermore, the proportion of Primary Education students with a basic TDC level was significantly higher than that of other students. In conclusion, teacher training institutions in Chile and Uruguay need to implement policies at different moments and in different areas of the ITT process in order to improve the development of TDC.

Resumen

El desarrollo de la Competencia Digital Docente (CDD) debe iniciarse en la etapa de formación inicial docente (FID) y extenderse durante los años de ejercicio. Todo ello con el propósito de usar las Tecnologías Digitales (TD) de manera que permitan enriquecer la docencia y el propio desarrollo profesional. El presente artículo expone los resultados de un trabajo con estudiantes de último año de FID de Chile y Uruguay para determinar su nivel de CDD. Para realizar el estudio se utilizó una metodología cuantitativa, con una muestra representativa estratificada de 568 estudiantes (n=273, Chile; n=295, Uruguay). Los datos se analizaron en relación al género y nivel educativo. Los resultados mostraron, para las cuatro dimensiones de la CDD, un desarrollo básico. Respecto a la relación entre las variables estudiadas y la CDD, destaca el porcentaje de hombres que alcanza competencias digitales avanzadas para la dimensión de Planificación, organización y gestión de espacios y recursos tecnológicos. También para esta dimensión la proporción de estudiantes de Educación Primaria con un desarrollo de CDD básico es significativamente superior al del resto de estudiantes. Como conclusión destacamos que es necesario que las instituciones formadoras de docentes implementen políticas a diferentes plazos y en diversos ámbitos de la FID como el sistema educativo, la formación y la docencia, para mejorar el nivel de desarrollo de la CDD.

Keywords

ICT standards, digital competence, teacher training, assessment, educational technology, higher education, pedagogy, educational system

Keywords

Estándares TIC, competencia digital, formación de profesores, evaluación, tecnología educativa, educación superior, pedagogía, sistema educativo

Introduction

Digital competence (DC) is one of the key competences of the modern citizen. Over a decade ago, the European Commission (2018) considered that citizens should have some key skills to prepare them for adult life, to enable them to participate actively in society and to continue learning throughout their lives. As one of these skills, DC must be considered broadly across all aspects of educational systems (curricula, resources and support for training, continuous competency updates, teacher training, equity, special needs, educational policies, etc.). In a broader context, UNESCO (2015: 40-47), within the Education 2030 Framework for Action, highlights the potential of digital technology (DT) and the importance of technological skills training as part of programs to enter the labor market. In this reality, teaching staff play a key role in ensuring that future citizens make effective use of digital technologies for their personal and professional development.

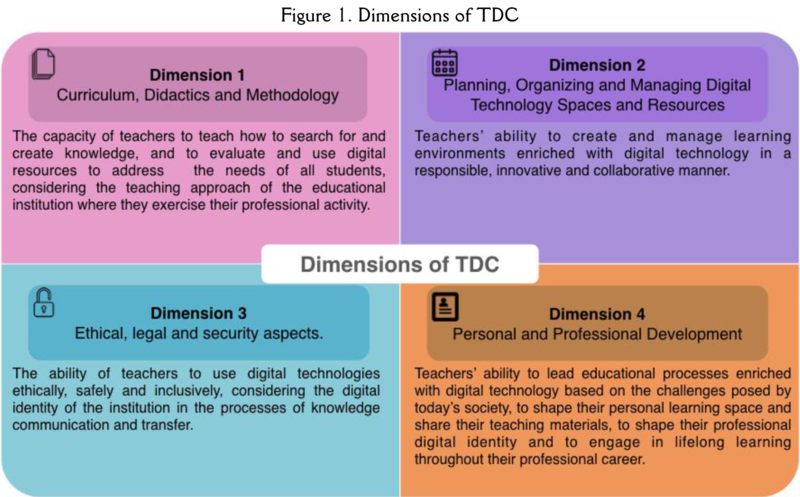

Various international reports highlight the need for educators who are well trained in the use of DT for teaching (INTEF, 2017: 2; Redecker & Punie, 2017: 12; UNESCO, 2015: 55; 2017: 20), that is, teachers with an adequate level of Teacher’s Digital Competence (TDC), understood as the “set of abilities, skills, and attitudes that teachers must develop in order to be able to incorporate digital technologies into their practice and their professional development” (Lázaro, Usart, & Gisbert, 2019: 73). This concept is in line with recent proposals that define TDC and emphasize the need to harness the potential of DT in the learning processes of future citizens of a digital society. Teachers themselves indicate, when reporting their training needs, that TDC is one of their priorities (European Commission, 2015: 11). Specifically, the knowledge, attitudes and skills that make up TDC are defined in different frameworks and standards that serve as references for the training and evaluation of this competency: MINEDUC-Enlaces (2008; 2011), ISTE (2008), UNESCO (2008; 2018), Fraser, Atkins, & Richard (2013), Ministerio Educación Nacional (2013), INTEF (2014; 2017), and DigCompEdu (Redecker & Punie, 2017). If we analyze the dimensions of TDC considered in these frameworks, we can see that the focus is on didactic-pedagogical aspects, teacher professional development, ethical and safety aspects, the search for and management of information, and the creation and communication of content. Most of them are directed toward the TDC of in-service teachers, whereas students pursuing a degree in education or pedagogy could be expected to reach the initial or basic levels as a minimum requirement for completing their university training.

TDC in initial teacher training

In education degree programs, DC has a different hue than in other areas of education. Initial Teacher Training should include digital training for the future teachers, so they are able to use digital technology in their professional activity (Escudero, Martínez-Domínguez, & Nieto, 2018; Papanikolaou, Makri, & Roussos, 2017; Prendes, Castañeda, & Gutiérrez, 2010).

Teacher training is one of the key factors for incorporating DT in pedagogical practices. This aspect takes on greater relevance in ITT, since graduates could then enter the education system with an adequate level of TDC. In this way, future teachers would be able to enrich learning environments through DT and incorporate them naturally into their future professional practice (Castañeda, Esteve, & Adell, 2018). ITT in Latin America has been incorporating DT into its study plans with little or no guidance and support from the ministries of education. In fact, policy has focused on delivering infrastructure and training to teachers already in the educational system, without offering support and guidance to teacher-training institutions. It is necessary to systematize and share experiences involving the inclusion of DT in the ITT curriculum (Brun, 2011), in alignment with international standards (Brushed & Prada, 2012).

In Chile, given the autonomy of teacher-training institutions and the shortage of policies and guidelines for including DT in ITT, a variety of DT-specific subjects are distributed across different semesters of the syllabus. However, they focus more on digital literacy than on teaching with DT (Rodriguez & Silva, 2006). This has not prevented some institutions from developing their own initiatives to guide the development of TDC. These initiatives use some national standards and, at the same time, integrate elements of international frameworks (Cerda, Huete, Molina, Ruminot & Saiz, 2017). In this context, students’ self-perceived level of TDC has also been examined (MINEDUC-Enlaces, 2011). It has been observed that the TDC development of ITT students is based on technical and ethical aspects, rather than on those related to teaching and knowledge management (Badilla, Jiménez, & Careaga, 2013; Ascencio, Garay, & Seguic, 2016). In the case of Uruguay, because a single entity oversees teacher training, there are two ITT subjects, “Information and education” and “Integrating digital technologies”, which cover the TDC of future teachers (Rombys, 2012). In both countries, no specific cross-curricular guidelines are observed to guide the integration of DT in other subjects. This integration depends on the competencies and skills of the teaching staff themselves (Silva & al., 2017).

Evaluation of TDC

Evaluating TDC in ITT presents important challenges that relate to the complexity of evaluating competencies and to the assessment system used. Objective assessment tools are required that are not based only on the perception of the user, but that measure the level of TDC by having the user solve situations or problems in line with the indicators to be evaluated (Villar & Poblete, 2011: 150). Currently, there are TDC self-assessment tools that are based on self-perception (Redecker & Punie, 2017; Tourón, Martín, Navarro, Pradas & Íñigo, 2018). INTEF (2017) presents a proposal that uses a technological solution and also incorporates a portfolio for evaluation. In our view, the challenge is to use an objective, reliable and valid TDC evaluation test that measures the knowledge of the future teacher. For this purpose, this study sets out to analyze the level of TDC development in a sample of final-year ITT students in Chile and Uruguay, through a previously validated instrument (see section 2.2) that allows an assessment aligned with TDC, using the indicators and dimensions proposed by Lázaro and Gisbert (2015) (Figure 1). Using the data obtained, the study also examines the relationship of the TDC level with other key variables.


Objectives and research questions

In order to determine the development level of TDC of the ITT senior students in Chile and Uruguay, the study will present and discuss the results for a representative sample in both countries, through quantitative analysis of the data obtained using the described instruments, and also with regard to the variables of gender and educational level. Specifically:

- O1. Assess the level of TDC in a sample of students from Chile and Uruguay.

- O2. Study the relationship between the level of TDC and the factors of gender and educational level.

The following research questions are established to guide the process and will be used to present and discuss the results:

- Q1. What is the distribution in the four dimensions of TDC of the sample studied?

- Q2. Are there significant differences for TDC in terms of gender?

- Q3. Are there differences in TDC among future teachers of primary and secondary education?

Material and methods

Sample

With the aim of studying the TDC of final-year ITT students in Chile and Uruguay, we selected a representative stratified sample of 568 students from both countries. We performed stratified random sampling with p=5%. The sample was drawn from a population of 2,467 students in Uruguay and an estimated population of 12,928 in Chile, considering the public universities that provide ITT. To build the stratified sample, the relative weight of each institution in the population was taken into account, treating each ITT institution in Uruguay and each public university in Chile as a stratum.

In the case of Uruguay, there are two institutions in the capital city, with one center for each stratum, and two further institutions with 28 centers scattered throughout the rest of the territory. In two of the four strata, a multistage sample was conducted, in which the centers were chosen first and then the students within these centers. Eleven of the 30 centers participated. First, the sample was divided by strata according to the number of students in each center. Then, based on feasibility, students were drawn from the institutions of the capital and from the centers in the rest of the country. Within these centers, the students to be surveyed were drawn by their assigned number in the student lists. An additional 10% of individuals was drawn for substitution, respecting the relative weight of each subsample.


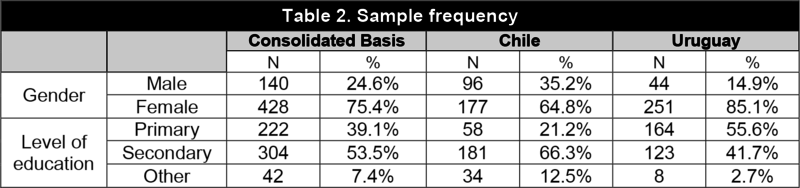

In the Chilean case, after dividing the sample into strata by the number of students in each one, one draw was made per university, and the instrument was applied to full classrooms, with seven of the 16 universities participating. The universe and the samples are shown in Table 1. Table 2 characterizes the sample of 568 students who participated in the study. It is made up of 273 Chilean students (48.1%) and 295 Uruguayan students (51.9%).
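The proportional allocation across strata and the 10% substitution draw described in this section can be sketched as follows. This is only an illustration under stated assumptions, not the authors' procedure: the stratum names and sizes are hypothetical, and the largest-remainder rounding rule is an assumption, since the text only states that the relative weight of each stratum was respected.

```python
def proportional_allocation(stratum_sizes, total_sample):
    """Split total_sample across strata in proportion to their population size."""
    population = sum(stratum_sizes.values())
    # Exact (fractional) share of the sample for each stratum.
    exact = {name: total_sample * size / population
             for name, size in stratum_sizes.items()}
    # Start from the integer part, then give the leftover units to the strata
    # with the largest fractional remainders (assumed rounding rule).
    allocation = {name: int(share) for name, share in exact.items()}
    leftover = total_sample - sum(allocation.values())
    by_remainder = sorted(exact, key=lambda name: exact[name] - allocation[name],
                          reverse=True)
    for name in by_remainder[:leftover]:
        allocation[name] += 1
    return allocation


def substitution_draw(allocation, percent=10):
    """Extra students to draw per stratum as substitutes, rounded up."""
    return {name: -(-count * percent // 100) for name, count in allocation.items()}


# Hypothetical strata: NOT the study's institutions or enrolment figures.
strata = {"institution_A": 1000, "institution_B": 600, "institution_C": 400}
sample = proportional_allocation(strata, total_sample=100)
substitutes = substitution_draw(sample)
```

Rounding the substitution count up guarantees at least one substitute per sampled stratum; a different rounding rule would also be compatible with the description in the text.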


Instruments and procedure

To study the TDC of the sample, a test-type assessment instrument was used that presents problem situations that novice teachers may encounter during their professional practice. This instrument is composed of closed questions with several answer options, whose responses are hierarchical or weighted. The responses were scored according to their level of precision: 1, 0.75, 0.5 or 0.25 points. This differentiation is used because, faced with the same problem situation, there may be several correct answers with different levels of precision, depending on the situation. The instrument was constructed from a matrix of indicators to assess TDC in ITT in the Chilean-Uruguayan context (Silva, Miranda, Gisbert, Moral, & Oneto, 2016), based primarily on the ICT standards for ITT from MINEDUC-Enlaces (2008) and the TDC rubric proposed by Lázaro & Gisbert (2015).

The specifications table was reviewed by a panel of experts, both in Chile and Uruguay. This is a proposal of TDC contextualized to ITT, which is based on different international standards (Fraser, Atkins & Richard, 2013; INTEF, 2014; ISTE, 2008 and UNESCO, 2008), to ensure the construct validity of the instrument. In order to ensure content validity in the evaluation questionnaire, the 56 initial questions were validated through expert judgment which included nine experts in the field of Higher Education linked to ITT in Uruguay, Chile and Spain (3 per country). This process was carried out through validation matrices, where each expert individually answered yes or no to the conditions of validity of each question.

Of the 56 questions, 51 obtained a quality assessment of over 75%, while only six questions were evaluated with scores under 75%, making them unsuitable for the final evaluation instrument. The assessment instrument was made up of the four questions rated highest by the experts for each of the 10 indicators. In this way, the final instrument was composed of 40 questions, distributed in four dimensions: D1. Curriculum, Didactics and Methodology: 16 questions; D2. Planning, Organizing and Managing Digital Technology Spaces and Resources: eight questions; D3. Ethical, Legal and Security Aspects: eight questions; and D4. Personal and Professional Development: eight questions. Each fully correct answer was assigned one point, so the instrument awarded a maximum of 40 points.
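The weighted scoring scheme can be illustrated with a short sketch. It assumes that each item maps its answer options to one of the four weights (1, 0.75, 0.5, 0.25) and that a dimension score is the plain sum of its item scores. The function names and the data layout are hypothetical. The option weights are taken from the sample CIICT item quoted in this section.

```python
# Option weights for one item, taken from the sample CIICT item in the text.
SAMPLE_ITEM_WEIGHTS = {"a": 0.50, "b": 0.75, "c": 1.00, "d": 0.25}

# Number of questions per dimension, as reported for the final instrument.
QUESTIONS_PER_DIMENSION = {"D1": 16, "D2": 8, "D3": 8, "D4": 8}


def score_item(option_weights, chosen_option):
    """Return the weight attached to the option the student selected."""
    return option_weights[chosen_option]


def score_dimension(items, answers):
    """Sum the item scores of one dimension (assumed aggregation rule)."""
    if len(items) != len(answers):
        raise ValueError("one answer is required per item")
    return sum(score_item(weights, answer)
               for weights, answer in zip(items, answers))


def max_total_score():
    """Maximum score when every item is answered with its 1-point option."""
    return sum(QUESTIONS_PER_DIMENSION.values()) * 1.0


# Hypothetical student answering eight copies of the sample item.
d2_items = [SAMPLE_ITEM_WEIGHTS] * QUESTIONS_PER_DIMENSION["D2"]
d2_score = score_dimension(d2_items, list("abcdabcd"))
```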

Below is an example of an item or question: "If you want your students to perform a CIICT (curricular integration of information and communication technologies) activity, which of the following digital technologies do you or would you use: (a) Educational video (0.50); (b) Blog with curricular topic (0.75); (c) Specific software for the subject (1.00); (d) Presentation with curricular contents (0.25)." The internal consistency of the instrument was studied (Silva & al., 2017) and interpreted on the basis of the criterion cited by Cohen, Manion and Morrison (2007). In our case, α=0.60, which indicates "good" internal reliability for scales between 0.6 and 0.8 points.
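For readers unfamiliar with the α statistic, the following sketch shows the standard Cronbach's alpha formula, α = k/(k−1) · (1 − Σ item variances / variance of total scores). The function name and the toy response matrix are hypothetical and are not the study's data. The original analysis was run in SPSS, so this is only an equivalent hand computation.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a matrix with one row per respondent
    and one column per item (sample variances are used throughout)."""
    if len(responses) < 2 or len(responses[0]) < 2:
        raise ValueError("need at least two respondents and two items")
    n_items = len(responses[0])
    # Variance of each item across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Variance of the total score of each respondent.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Toy data: three hypothetical respondents, two items.
toy_alpha = cronbach_alpha([[1.0, 2.0], [2.0, 1.0], [3.0, 3.0]])
```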

The test was administered over a period of two months. The instrument was applied online to the sample of final-year pedagogy students in Chile and Uruguay (see Section 2.1), who could respond from any place and device (tablet, cell phone, computer). Test data were downloaded and saved to a Microsoft Excel (2007) spreadsheet, taking into account the ethical aspects relating to anonymity and consent to data transfer.

Statistical tests

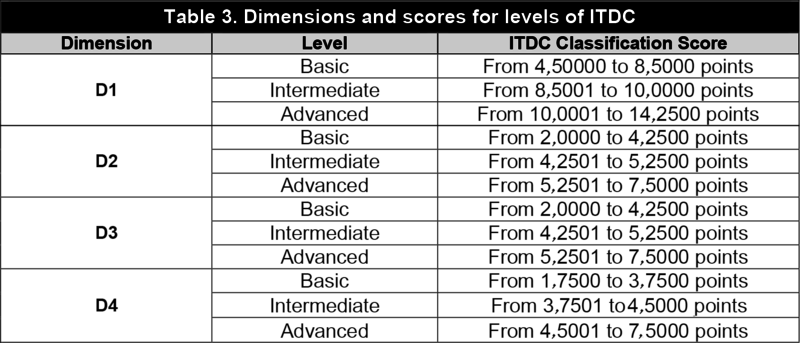

To analyze the results of the instrument and to answer the research questions, a descriptive analysis of the TDC assessment data was performed at the level of dimensions and indicators. Different statistical tests were then applied. In particular, "Indicators of Teacher’s Digital Competences (ITDC)” were created to categorize students in initial teacher training by level of TDC (basic, intermediate or advanced) in each dimension. These levels were cross-tabulated with the variables gender and educational level in order to identify statistically significant differences with the chi-square (χ2) test and the comparison of proportions (Z test). Data were analyzed with SPSS for Windows, Version 24.

For the construction of each indicator, the scores obtained for all of its items were added. The resulting sums were categorized (recoded) according to the actual distribution of the scores obtained: the minimum score, the maximum score, and the scores at positions 33 and 66 when the scores are sorted in ascending order. Considering the scores for each indicator that make up the dimensions mentioned above, the TDC classification indicator was created, as described below in Table 3.
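The recoding into three levels can be sketched as below. The sketch assumes that "positions 33 and 66" refer to the 33rd and 66th percentiles of the sorted scores, computed with a nearest-rank rule, and that scores equal to a cut point fall into the lower level. Both are assumptions, since the exact boundaries are given only in Table 3. All function names are hypothetical.

```python
def tercile_cut_points(scores):
    """Return the scores at the 33rd and 66th percentile positions
    (nearest-rank rule on the ascending sort; assumed convention)."""
    if not scores:
        raise ValueError("scores must not be empty")
    ordered = sorted(scores)
    n = len(ordered)

    def value_at(percent):
        # 1-based rank = ceil(percent * n / 100), computed with integers.
        rank = max(1, -(-percent * n // 100))
        return ordered[rank - 1]

    return value_at(33), value_at(66)


def classify(score, cut_33, cut_66):
    """Map a summed score to a TDC level (boundary handling is assumed)."""
    if score <= cut_33:
        return "basic"
    if score <= cut_66:
        return "intermediate"
    return "advanced"


# Hypothetical summed scores 1..100, used only to show the mechanics.
cut_33, cut_66 = tercile_cut_points(list(range(1, 101)))
levels = [classify(s, cut_33, cut_66) for s in (10, 50, 90)]
```

Because the cut points come from the observed distribution, roughly a third of the sample lands in each level by construction. This is worth keeping in mind when reading the level percentages in the results.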


Results

First, we estimated the overall results of the sample by dimension of TDC. The mean of the four dimensions is between 2.0 and 2.3 points, which is equivalent to 51% to 59% of the total points available. These values have standard deviations between 0.3 and 0.6. For all dimensions, the relatively similar values of the mean, median and mode suggest that the data follow a normal distribution, which was verified through statistical tests. In particular, the results were compared using the Levene test to confirm the normal distribution for each dimension in the total sample. We present the results sorted by research question:

- Q1. What is the distribution in the four dimensions of TDC for the sample studied?

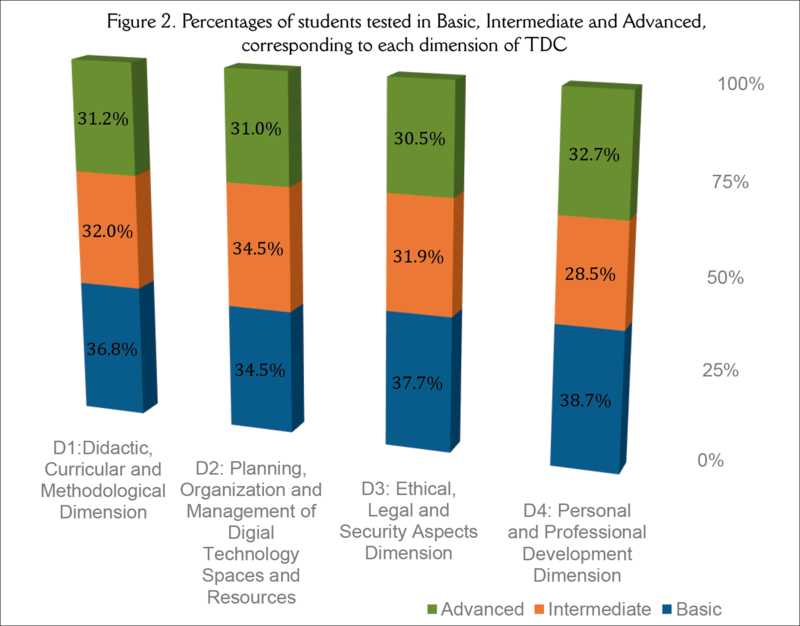

The distribution of our sample by TDC dimension showed that, for the four dimensions, students are mostly at the basic level (Figure 2), although one in every three subjects is at the advanced level.


Potentially significant differences appear only in D4, “Personal and Professional Development” (Figure 2). To determine whether the observed difference between students with intermediate TDC (28.5%) and basic TDC (38.7%) is significant, a Chi-square test was applied to compare the different levels (χ2(2)=10.8, p<0.01). This result leads us to reject the null hypothesis, and we can therefore state that there is a statistically significant difference between these two groups of students. In other words, the proportion of students with a basic level in D4 is significantly higher than that of students with an intermediate level. Having examined the dimensions of TDC in our sample, we now detail the results for each of the questions that relate TDC to the variables of interest:
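One way to read the test above is as a chi-square goodness-of-fit test across the three levels, which has 2 degrees of freedom. That reading is an assumption, as the text does not state the expected frequencies. The sketch below uses equal expected counts and hypothetical observed counts, not the study's data. For 2 degrees of freedom the upper-tail p-value has the closed form exp(−χ²/2), which makes it easy to check that the reported statistic of 10.8 is indeed below the 0.01 threshold.

```python
import math


def chi_square_gof(observed, expected=None):
    """Chi-square goodness-of-fit statistic; equal expected counts by default."""
    total = sum(observed)
    if expected is None:
        expected = [total / len(observed)] * len(observed)
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def p_value_df2(statistic):
    """Upper-tail p-value of a chi-square variable with exactly 2 df."""
    return math.exp(-statistic / 2)


# Hypothetical counts for basic / intermediate / advanced (illustrative only).
toy_statistic = chi_square_gof([60, 40, 50])
toy_p = p_value_df2(toy_statistic)

# Consistency check of the reported value chi2(2) = 10.8.
reported_p = p_value_df2(10.8)
```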

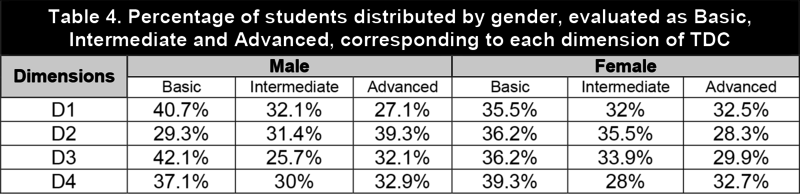

- Q2. "Are there significant differences for TDC in terms of gender?" This question was first examined through a descriptive study of the percentage distributions (Table 4).


The results show that, overall, no significant differences were observed with regard to gender in the four dimensions studied. Even so, the percentage of male students who reach advanced digital competence in D2, “Planning, Organization and Management” (39.3%), is noteworthy compared to female students at that same level (28.3%). To evaluate this difference quantitatively, a Chi-square test was applied, which showed a statistically significant value only between these two groups (χ2(1)=6.61, with p=0.047, <.05). We can therefore say that there is a statistically significant difference between male and female students for D2. In particular, the proportion of male students with an advanced level in this dimension is significantly higher than that of female students.

With regard to the third research question, Q3 (“Are there differences in TDC among future teachers of primary and secondary education?”), Table 5 shows the percentage distribution for each group, separated by level of TDC development.


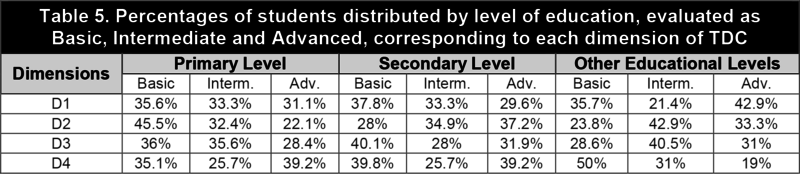

Percentage differences are observed for D1, “Didactic, Curricular and Methodological Dimension”, in which students from educational levels other than Primary and Secondary mostly showed advanced TDC (42.9%). The situation is reversed in D2, “Planning, organization and management of spaces and digital technology resources”, where the percentage of Primary Education students with basic TDC development is noteworthy (45.5%). For D3, “Ethical, Legal and Security Aspects”, 40.1% of Secondary Education students have basic TDC development, and 40.5% of students from other disciplines display intermediate development of TDC. Finally, D4, “Personal and Professional Development”, presents high values for students of Primary and Secondary Education at advanced levels (39.2%), but not for other disciplines, in which half of the students have a basic level of TDC development (50%).

To evaluate these differences, once again a Chi-square test was performed, which shows a significant value between groups in the D2 dimension (χ2(2)=14.28 with p<.01). Specifically, the Z-test indicates that the proportion of Primary Education students with basic development of TDC is significantly higher than that of Secondary and other levels of studies. In turn, the proportion of students of Secondary Education with advanced development of TDC is significantly higher than that of students at the Primary Level. Finally, for Dimension 4 there are no statistically significant differences (p=0.056).
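The two tests used in this section, the chi-square test on a cross-tabulation and the Z test comparing two proportions, can be sketched in plain Python as follows. The counts are hypothetical and the function names are illustrative. The original analysis was run in SPSS, whose exact options, such as any correction for multiple comparisons, are not stated in the text.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df


def two_proportion_z(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion z statistic and its two-sided p-value."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical 2 x 2 table: rows are two groups of students,
# columns are "basic level" versus "any other level".
toy_table = [[10, 20], [20, 10]]
toy_chi2, toy_df = chi_square_independence(toy_table)
toy_z, toy_p = two_proportion_z(10, 30, 20, 30)
```

For a 2 x 2 table the two approaches agree, since the squared z statistic equals the chi-square statistic. With more than two groups, as in the comparison of Primary, Secondary and other levels, the pairwise Z tests show which specific groups differ after the overall chi-square test has flagged a difference.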

Discussion and conclusions

The first general objective of this research was to assess the level of TDC of students in their last year of ITT, for a representative sample in Chile and Uruguay. We now discuss the results presented in the previous section according to the proposed research questions. Starting from the first question, our intention was to analyze the level of TDC in terms of the four dimensions defined, and to study the possible differences between levels of development of this competency. The data on the differences between competence groups have shown a low level of development in general, with a significant difference in dimension D4, “Personal and Professional Development”. In particular, in our sample there are more students with a basic level in this dimension.

The results for the level of TDC in the sample studied differ from those obtained in studies of self-perception of this competence in ITT. In those studies, the level of development appears noticeably higher, since students perceive a greater command of DT than the level they actually reach (Badilla, Jiménez, & Careaga, 2013; Prendes, Castañeda, & Gutiérrez, 2010; Banister & Reinhart, 2014; Tourón & al., 2018; Gutiérrez & Serrano, 2016; Ascencio, Garay, & Seguic, 2016). However, the results are consistent with research that evaluates TDC in pedagogy students based on a test, administered by the Ministry of Education, that used simulated environments. This test, which assesses the technological skills of future teachers of primary and preschool education, showed that only 58% of the graduates had an acceptable level of DT skills, 59% in primary education and 55% in preschool education (Canales & Hain, 2017). These data support the criterion validity of our assessment instrument, which, added to the previous study of construct and content validity (Silva & al., 2017), makes it an applicable instrument for the evaluation of TDC in ITT students in Latin American samples. A perception study by MINEDUC with 3,425 teachers who participated in its ICT training plans, focused on the integration of the pedagogical use of ICT in the classroom and in their own professional development, showed that 0.47% are at an initial level; 21% are at an elementary level; 77% obtained a higher level; and 0.23% are at an advanced level. These results are more optimistic than those observed in this study (MINEDUC, 2016).

The second general objective was to study the relationship between the level of TDC and the factors of gender and educational level. This allows us to analyze the personal factors or variables that may influence the development of TDC. Significant differences have appeared in both variables (gender and educational level). In particular, a higher percentage of male students than female students reach advanced digital competence in D2, "Planning, Organization and Management". This differs from other studies in Chile with students of pedagogy in the humanities, which indicate that there are no significant differences between the groups compared and that students show homogeneous characteristics in their approach toward technology (Ayale & Joo, 2019). However, previous studies (Björk-Gudmundsdottir & Hatlevik, 2018) found, in a sample of teachers in Malta, that men claimed to have more confidence in the use of technology in the classroom than women. Additionally, the study by Wang and Degol (2017) among professionals in the technology sector shows that gender stereotypes are socio-cultural factors that may affect cognitive factors, including the perception of competence. Similarly, greater classroom experience with the use of technologies generates more positive attitudes and self-confidence, specifically in the case of women (Teo, 2008: 420), and a better assessment of their TDC.

In the light of the observed results, it is both a need and a challenge to strengthen the development of TDC in general, and of its didactic-pedagogical aspects in particular, during ITT. To this end, teacher training institutions require guidance that will enable them to achieve improvements in the short, medium and long term in various areas of ITT, such as the educational system, training and teaching, in order to make progress in the level of development of TDC. This guidance must be based on research results, which should feed the diagnosis, evaluation, and accompaniment of TDC development in ITT.

This study provides evidence that students of initial training, one step away from completing their teacher training, do not possess the TDC required to effectively use DT in their future work as teachers. This is of concern because teachers who are not digitally competent will have difficulties in effectively using DT in their daily practice and in teaching digital competence to their students. This low competence on the part of teachers is one of the main barriers to the use of DT in their teaching work and for their own professional development (UNESCO, 2013). We emphasize, in addition, that there is a positive correlation between the quality of pedagogical practices and the use of DT in teaching (INTEF, 2016).

Finally, we consider the instrument a good starting point for assessing TDC in ITT students, because it brings together a set of questions that must be answered in context, and it is applicable to the local scope of both countries. As proposals for the future, we consider it worthwhile to:

- Undertake, in future studies, to improve the instrument, extending the battery of questions for each indicator and incorporating questions for indicators of the original matrix validated by experts that were not addressed in this study. Only 10 of the 14 originally approved were considered.

- Test the assessment instrument in other contexts and with trainee teachers of other educational levels that exist in Latin America, such as preschool or special needs education.

- Perform comparative studies between Latin American and European countries, since both contexts, despite differences in education plans, share the same problems with regard to the insertion of DC in ITT.

- Assess the differences, if any, between the training of teachers in public and in private institutions, within the same country or in comparison with other countries.

References

- Ayale-PérezT., Joo-NagataJ., . 2019.digital culture of students of Pedagogy specialising in the humanities in Santiago de Chile&author=Ayale-Pérez&publication_year= The digital culture of students of Pedagogy specialising in the humanities in Santiago de Chile.Computers & Education 133:1-12

- AscencioP., GarayM., SeguicE., . 2016.Formación Inicial Docente (FID) y Tecnologías de la Información y Comunicación (TIC) en la Universidad de Magallanes – Patagonia Chilena.Digital Education Review 30:123-134

- BadillaM., L.Jiménez, CareagaM., . 2013.Competencias TIC en formación inicial docente: Estudio de caso de seis especialidades en la Universidad Católica de la Santísima Concepción.Aloma 31(1):89-97

- BanisterS., ReinhartR., . 2014.NETS-T performance in teacher candidates: Exploring the Wayfind teacher assessment&author=Banister&publication_year= Assessing NETS-T performance in teacher candidates: Exploring the Wayfind teacher assessment.Journal of Digital Learning in Teacher Education 29(2):59-65

- Björk-GudmundsdottirG., HatlevikO.E., . 2018.qualified teachers’ professional digital competence: Implications for teacher education&author=Björk-Gudmundsdottir&publication_year= Newly qualified teachers’ professional digital competence: Implications for teacher education.European Journal of Teacher Education 41(2):214-231

- Brun, M. (2011). Las tecnologías de la información y las comunicaciones en la formación inicial docente de América Latina. Comisión Económica para América Latina y el Caribe (CEPAL), División de Desarrollo Social, Serie Políticas Sociales.

- Canales, R., & Hain, A. (2017). Política de informática educativa en Chile: Uso, apropiación y desafíos a nivel investigativo. In Contribuciones al estudio de procesos de apropiación de tecnologías. Buenos Aires: GatoGris.

- Castañeda, L., Esteve, F., & Adell, J. (2018). ¿Por qué es necesario repensar la competencia docente para el mundo digital? Revista de Educación a Distancia, 56, 1-20.

- Cohen, L., Manion, L., & Morrison, K. (2007). Research methods in education. London: Routledge.

- Cerda, C., Huete-Nahuel, J., Molina-Sandoval, D., Ruminot-Martel, E., & Saiz, J. (2017). Uso de tecnologías digitales y logro académico en estudiantes de Pedagogía chilenos. Estudios Pedagógicos, 54(3), 119-133.

- Escudero, J.M., Martínez-Domínguez, B., & Nieto, J.M. (2018). Las TIC en la formación continua del profesorado en el contexto español. Revista de Educación, 382, 57-78.

- European Commission (Ed.) (2015). Marco estratégico: Educación y formación, 2020.

- European Commission (Ed.) (2018). Proposal for a council recommendation on key competences for lifelong learning.

- Fraser, J., Atkins, L., & Richard, H. (2013). DigiLit Leicester. Supporting teachers, promoting digital literacy, transforming learning. Leicester City Council.

- Gutiérrez, I., & Serrano, J. (2016). Evaluación y desarrollo de la competencia digital de futuros maestros en la Universidad de Murcia. New Approaches in Educational Research, 5, 53-59.

- INTEF (Ed.) (2014). Marco Común de Competencia Digital Docente. Madrid: Instituto Nacional de Tecnologías Educativas y de Formación del Profesorado.

- INTEF (Ed.) (2016). Resumen Informe. Competencias para un mundo digital.

- INTEF (Ed.) (2017). Marco Común de Competencia Digital Docente.

- ISTE (Ed.) (2008). National educational technology standards for teachers. Washington DC: International Society for Technology in Education.

- Lázaro-Cantabrana, J.L., & Gisbert-Cervera, M. (2015). Elaboración de una rúbrica para evaluar la competencia digital del docente. Universitas Tarraconensis, 1(1), 48-63.

- Lázaro-Cantabrana, J., Usart-Rodríguez, M., & Gisbert-Cervera, M. (2019). Assessing teacher digital competence: The construction of an instrument for measuring the knowledge of pre-service teachers. Journal of New Approaches in Educational Research, 8(1), 73-78.

- Ministerio de Educación Nacional (Ed.) (2013). Competencias TIC para el desarrollo profesional docente.

- Mineduc (Ed.) (2008). Estándares TIC para la formación inicial docente: Una propuesta en el contexto chileno. Santiago de Chile: Ministerio de Educación.

- Mineduc (Ed.) (2011). Actualización de competencias y estándares TIC en la profesión docente. Santiago de Chile: Ministerio de Educación.

- Mineduc (Ed.) (2016). Docentes en Chile: Conocimiento y uso de las TIC 2014. Santiago de Chile: MINEDUC, Serie Evidencias, 32.

- Papanikolaou, K., Makri, K., & Roussos, P. (2017). Learning design as a vehicle for developing TPACK in blended teacher training on technology enhanced learning. International Journal of Educational Technology in Higher Education, 14(1), 34-41.

- Prendes, M.P., Castañeda, L., & Gutiérrez, I. (2010). ICT competences of future teachers. [Competencias para el uso de TIC de los futuros maestros]. Comunicar, 18(35), 175-182.

- Redecker, C., & Punie, Y. (2017). European framework for the digital competence of educators: DigCompEdu. Luxembourg: Publications Office of the European Union.

- Rombys, D. (2012). Integración de las TIC para una buena enseñanza: Opiniones, actitudes y creencias de los docentes en un Instituto de Formación de Formadores. Tesis de Maestría, Instituto de Educación, Universidad ORT Uruguay.

- Rodríguez, J., & Silva, J. (2006). Incorporación de las tecnologías de la información y la comunicación en la formación inicial docente el caso chileno. Innovación Educativa, 6(32), 19-35.

- Rozo, A., & Prada, M. (2012). Panorama de la formación inicial docente y TIC en la región Andina. Educación y Pedagogía, 24(62), 191-204.

- Silva, J., Miranda, P., Gisbert, M., Morales, M., & Onetto, A. (2016). Indicadores para evaluar la competencia digital docente en la formación inicial en el contexto chileno-uruguayo. Revista Latinoamericana de Tecnología Educativa, 15(3), 55-68.

- Silva, J., Rivoir, A., Oneto, A., Morales, M., Miranda, P., Gisbert, M., & Salinas, J. (2017). Informe de investigación. Estudio comparado de las competencias digitales para aprender y enseñar en docentes en formación de Uruguay y Chile.

- Silva, J., Lázaro, J., Miranda, P., & Canales, R. (2018). El desarrollo de la competencia digital docente durante la formación del profesorado. Opción, 34(86), 423-449.

- Teo, T. (2008). Pre-service teachers attitudes towards computer use: A Singapore survey. Australasian Journal of Educational Technology, 24(4), 413-424.

- Tourón, J., Martín, D., Navarro, E., Pradas, S., & Íñigo, V. (2018). Validación de constructo de un instrumento para medir la competencia digital docente de los profesores. Revista Española de Pedagogía, 76(269), 25-54.

- UNESCO (Ed.) (2008). Estándares de competencia en TIC para docentes.

- UNESCO (Ed.) (2015). Educación 2030: Declaración de Incheon y Marco de Acción para la realización del Objetivo de Desarrollo.

- UNESCO (Ed.) (2018). ICT competency framework for teachers.

- Villa-Sánchez, A., & Poblete-Ruiz, M. (2011). Evaluación de competencias genéricas: Principios, oportunidades y limitaciones. Bordón, 63(1), 147-170.

- Wang, M.T., & Degol, J.L. (2017). Gender gap in science, technology, engineering, and mathematics (STEM): Current knowledge, implications for practice, policy, and future directions. Educational Psychology Review, 29(1), 119-140.

Abstract

The development of Teacher’s Digital Competence (TDC) should begin during initial teacher training (ITT) and continue throughout the years of practice, with the purpose of using Digital Technologies (DT) in ways that enrich teaching and teachers’ own professional development. This article presents the results of a study with final-year ITT students in Chile and Uruguay to determine their level of TDC. The study used a quantitative methodology with a representative stratified sample of 568 students (n=273, Chile; n=295, Uruguay). The data were analyzed in relation to gender and educational level. The results showed a basic level of development for the four dimensions of TDC. Regarding the relationship between the variables studied and TDC, the percentage of men who reach advanced digital competences in the dimension of Planning, organization and management of spaces and technological resources stands out. Also for this dimension, the proportion of Primary Education students with a basic level of TDC development is significantly higher than that of the remaining students. In conclusion, teacher training institutions need to implement policies over different time frames and in various areas of ITT, such as the educational system, training and teaching, in order to improve the level of TDC development.

Keywords

ICT standards, digital competence, teacher training, assessment, educational technology, higher education, pedagogy, educational system

Introduction

Digital competence (DC) is one of the key competences of the modern citizen. Over a decade ago, the European Commission (2018) considered that citizens should have a set of key skills to prepare them for adult life, to enable them to participate actively in society, and to continue learning throughout their lives. As one of these skills, DC must be considered broadly across all areas of educational systems (curricula, resources and support for training, continuous competency updates, teacher training, equity, special needs, educational policies, etc.). In a broader context, UNESCO (2015: 40-47), within the Education 2030 Framework for Action, highlights the potential of digital technology (DT) and the importance of technological skills training as part of programs to enter the labor market. In this context, teachers play a key role in ensuring that future citizens make effective use of digital technologies for their personal and professional development.

Various international reports highlight the need for educators who are well trained in the use of DT for teaching (INTEF, 2017: 2; Redecker & Punie, 2017: 12; UNESCO, 2015: 55; 2017: 20), that is, teachers with an adequate level of Teacher’s Digital Competence (TDC), which is understood as the “set of abilities, skills, and attitudes that teachers must develop in order to be able to incorporate digital technologies into their practice and their professional development” (Lázaro, Usart, & Gisbert, 2019: 73). This concept is in line with recent proposals that define TDC and emphasize the need to harness the potential of DT in the learning processes of future citizens of a digital society. Teachers themselves indicate, when reporting their training needs, that TDC is one of their priorities (European Commission, 2015: 11). Specifically, the knowledge, attitudes and skills that make up TDC are defined in different frameworks and standards that serve as references for the training and evaluation of this competency: MINEDUC-Enlaces (2008; 2011), ISTE (2008), UNESCO (2008; 2018), Fraser, Atkins, & Richard (2013), Ministerio de Educación Nacional (2013), INTEF (2014; 2017), and DigCompEdu (Redecker & Punie, 2017). If we analyze the dimensions of TDC considered in these frameworks, we can see that the focus is on didactic-pedagogical aspects, teacher professional development, ethical and safety aspects, the search for and management of information, and the creation and communication of content. Most of them are directed toward the TDC of in-service teachers, although their initial or basic levels can be taken as the minimum that students pursuing a degree in education or pedagogy should attain by the time they complete their university training.

TDC in initial teacher training

In education degree programs, DC takes on a different character than in other areas of education. Initial teacher training (ITT) should include digital training for future teachers, so that they are able to use digital technology in their professional activity (Escudero, Martínez-Domínguez, & Nieto, 2018; Papanikolaou, Makri, & Roussos, 2017; Prendes, Castañeda, & Gutiérrez, 2010).

Teacher training is one of the key factors for incorporating DT in pedagogical practices. This aspect takes on greater relevance in ITT, as graduates could then enter the education system with an adequate level of TDC. In this way, future teachers would be able to enrich learning environments through DT and incorporate them naturally into their future professional practice (Castañeda, Esteve, & Adell, 2018). ITT in Latin America has been incorporating DT into study plans with little or no guidance and support from the ministries of education. In fact, policy has focused on delivering infrastructure and training to teachers already in the educational system, without offering support and guidance to teacher-training institutions. It is necessary to systematize and share experiences involving the inclusion of DT in the ITT curriculum (Brun, 2011), in alignment with international standards (Rozo & Prada, 2012).

In Chile, given the autonomy of the institutions that train teachers and the shortage of policies and guidelines for including DT in ITT, there is a variety of specific subjects on DT distributed across different semesters of the syllabus. However, they are more focused on digital literacy than on teaching with DT (Rodríguez & Silva, 2006). This has not prevented some institutions from developing their own initiatives to guide the development of TDC. They use some national standards and, at the same time, integrate elements of other international frameworks (Cerda, Huete, Molina, Ruminot & Saiz, 2017). In this context, and with regard to students’ self-perceived level of TDC (MINEDUC-Enlaces, 2011), it has been observed that the TDC development of ITT students is based on technical and ethical aspects, rather than on those related to teaching and knowledge management (Badilla, Jiménez, & Careaga, 2013; Ascencio, Garay, & Seguic, 2016). In the case of Uruguay, because a single entity controls teacher training, there are two ITT subjects, “Information and education” and “Integrating digital technologies,” which cover the TDC education of future teachers (Rombys, 2012). In both countries, no specific cross-curricular formulations are observed to guide the integration of DT in other subjects. This integration depends on the competencies and skills of the teaching staff itself (Silva & al., 2017).

Evaluation of TDC

Evaluating TDC in ITT presents important challenges that relate to the complexity of evaluating competencies and to the assessment system used. Objective assessment tools are required that are not based only on the perception of the user, but instead measure the level of TDC by having the user solve situations or problems in line with the indicators to be evaluated (Villa & Poblete, 2011: 150). Currently, there are TDC self-assessment tools that are based on self-perception (Redecker & Punie, 2017; Tourón, Martín, Navarro, Pradas & Íñigo, 2018). INTEF (2017) presents a proposal that uses a technological solution and also incorporates the use of a portfolio for evaluation. In our view, the challenge is to use an objective, reliable and valid TDC evaluation test that measures the knowledge of the future teacher. For this purpose, this study sets out to analyze the development level of TDC in a sample of senior ITT students in Chile and Uruguay, through a previously validated instrument (see section 2.2), which allows us to make an assessment aligned to TDC, using the indicators and dimensions proposed by Lázaro and Gisbert (2015) (Figure 1). At the same time, the data obtained will be used to examine the relationship of the TDC level with other key variables.

Objectives and research questions

In order to determine the development level of TDC of the ITT senior students in Chile and Uruguay, the study will present and discuss the results for a representative sample in both countries, through quantitative analysis of the data obtained using the described instruments, and also with regard to the variables of gender and educational level. Specifically:

- O1. Assess the level of TDC in a sample of students from Chile and Uruguay.

- O2. Study the relationship between the level of TDC and the factors of gender and educational level.

The following research questions are established to guide the process and will be used to present and discuss the results:

- Q1. What is the distribution in the four dimensions of TDC of the sample studied?

- Q2. Are there significant differences for TDC in terms of gender?

- Q3. Are there differences in TDC among future teachers of primary and secondary education?

Material and methods

Sample

With the aim of studying the TDC of senior ITT students in Chile and Uruguay, we chose a representative stratified sample of 568 students from both countries. We performed stratified random sampling with p=5%. The sample was drawn from a population of 2,467 students in Uruguay and an estimated population of 12,928 in Chile, considering the public universities that provide ITT. To build the stratified sample, the relative weight of each institution in the population was taken into account, for the various ITT institutions in Uruguay and the different public universities in Chile, with each institution treated as a stratum.
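As an illustration of allocating a sample in proportion to the relative weight of each stratum, the following is a minimal sketch, not the authors' procedure. The stratum names and sizes are hypothetical (only the Uruguayan population total of 2,467 and the sample of 295 come from the text), and the largest-remainder rounding rule is an assumption.

```python
def proportional_allocation(stratum_sizes, total_sample):
    """Split total_sample across strata in proportion to stratum size.

    Uses largest-remainder rounding so the parts add up to total_sample.
    """
    population = sum(stratum_sizes.values())
    exact = {name: total_sample * size / population
             for name, size in stratum_sizes.items()}
    allocation = {name: int(share) for name, share in exact.items()}
    leftover = total_sample - sum(allocation.values())
    # Give the remaining units to the strata with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda name: exact[name] - allocation[name],
                          reverse=True)
    for name in by_remainder[:leftover]:
        allocation[name] += 1
    return allocation


# Hypothetical stratum sizes that add up to the reported population of 2,467.
uruguay_strata = {"stratum_a": 400, "stratum_b": 300,
                  "stratum_c": 1000, "stratum_d": 767}
allocation = proportional_allocation(uruguay_strata, 295)
print(allocation)
```

Larger strata receive proportionally more of the sample, which is what keeps each institution's relative weight intact.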

In the case of Uruguay, there are four institutions: two in the capital city of the country, with one center for each stratum, and the remaining two with 28 centers scattered throughout the rest of the territory. In 2 of the 4 strata, a multistage sample was conducted, in which the centers were chosen first and then the students within these centers. Eleven centers participated out of a total of 30. First, the sample was divided by strata, according to the number of students present in each center. Then, depending on feasibility decisions, students were drawn in the institutions of the capital and the centers of the rest of the country. Within these centers, the students to be surveyed were drawn by an assigned number in the student lists. When selecting the individuals for the samples, an additional 10% was drawn for substitution, respecting the relative weight of each subsample.

In the Chilean case, after dividing the sample into strata by the number of students present in each one, there was one drawing per university, and the instrument was applied to full classrooms, with the participation of seven universities out of a total of 16. The universe and the samples are shown in Table 1. Table 2 characterizes the sample of 568 students who participated in the study. It is made up of 273 Chilean students (48.1%) and 295 Uruguayan students (51.9%).

Instruments and procedure

To study the TDC of the sample, a test-type assessment instrument was used to present problem situations that novice teachers may encounter during their professional practice. This instrument is composed of closed questions with hierarchical or weighted responses, with several answer options. The responses were scored according to their level of precision: 1, 0.75, 0.5 or 0.25 points. This differentiation is used because, faced with the same problem situation, there may be several correct answers with different levels of precision, depending on the situation. The instrument was constructed from a matrix of indicators to assess TDC in ITT in the Chilean-Uruguayan context (Silva, Miranda, Gisbert, Morales, & Oneto, 2016), based primarily on the ICT standards for ITT from MINEDUC-Enlaces (2008) and the TDC rubric proposed by Lázaro & Gisbert (2015).

The specifications table was reviewed by a panel of experts, both in Chile and Uruguay. This is a proposal of TDC contextualized to ITT, which is based on different international standards (Fraser, Atkins & Richard, 2013; INTEF, 2014; ISTE, 2008 and UNESCO, 2008), to ensure the construct validity of the instrument. In order to ensure content validity in the evaluation questionnaire, the 56 initial questions were validated through expert judgment which included nine experts in the field of Higher Education linked to ITT in Uruguay, Chile and Spain (3 per country). This process was carried out through validation matrices, where each expert individually answered yes or no to the conditions of validity of each question.

Of the 56 questions, 51 obtained a quality assessment of over 75%, while the remaining questions were evaluated with scores under 75%, making them unsuitable for the final evaluation instrument. The assessment instrument was made up of the four questions rated highest by the experts for each of the 10 indicators. In this way, the final instrument was composed of 40 questions, distributed in four dimensions: D1. Curriculum, Didactics and Methodology: 16 questions; D2. Planning, Organizing and Managing Digital Technology Spaces and Resources: eight questions; D3. Ethical, Legal and Security Aspects: eight questions; and D4. Personal and Professional Development: eight questions. Each fully correct answer was assigned one point, so the instrument awarded a maximum of 40 points.
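The scoring scheme can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' scoring code: the question counts per dimension and the four weights come from the text, while the function name and the student's answers are hypothetical.

```python
# Questions per dimension, as reported for the final 40-item instrument.
QUESTIONS_PER_DIMENSION = {"D1": 16, "D2": 8, "D3": 8, "D4": 8}
# Weights a single response can receive, as reported in the text.
ALLOWED_WEIGHTS = {1.0, 0.75, 0.5, 0.25}


def dimension_totals(item_scores):
    """Sum the weighted item scores of one student for each dimension.

    item_scores maps a dimension code to the list of weights earned
    on that dimension's questions.
    """
    totals = {}
    for dimension, n_items in QUESTIONS_PER_DIMENSION.items():
        scores = item_scores[dimension]
        if len(scores) != n_items:
            raise ValueError(f"{dimension}: expected {n_items} scores")
        if any(score not in ALLOWED_WEIGHTS for score in scores):
            raise ValueError(f"{dimension}: unexpected weight")
        totals[dimension] = sum(scores)
    return totals


# Hypothetical answers of a single student (illustrative only).
student = {
    "D1": [1.0] * 8 + [0.5] * 8,
    "D2": [0.75] * 8,
    "D3": [0.25] * 8,
    "D4": [1.0] * 4 + [0.5] * 4,
}
totals = dimension_totals(student)
overall = sum(totals.values())  # out of a maximum of 40 points
print(totals, overall)
```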

Below is an example of an item or question: "If you want your students to perform a CIICT (curricular integration of information and communication technologies) activity, which of the following digital technologies do you or would you use: (a) Educational Video (0.50); (b) Blog with curricular topic (0.75); (c) Specific software for the subject (1.00); (d) Presentation with curricular contents (0.25)". The internal consistency of the instrument was studied (Silva & al., 2017) and interpreted on the basis of the criterion cited by Cohen, Manion and Morrison (2007). In our case, α=0.60, which falls within the range of 0.6 to 0.8 points interpreted as "good" internal reliability.
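For readers unfamiliar with the α coefficient, the sketch below computes Cronbach's alpha from a matrix of item scores using the standard formula α = k/(k−1) · (1 − Σ item variances / variance of total scores). It is offered only as an illustration: the response matrix is hypothetical and the function name is an assumption, not part of the study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])  # number of items
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical scores of five students on four weighted items.
sample_responses = [
    [1.0, 0.75, 0.5, 1.0],
    [0.5, 0.5, 0.25, 0.75],
    [0.25, 0.5, 0.5, 0.25],
    [1.0, 1.0, 0.75, 0.75],
    [0.75, 0.25, 0.5, 0.5],
]
alpha = cronbach_alpha(sample_responses)
print(round(alpha, 2))
```

Values closer to 1 indicate that the items vary together, which is what internal consistency means here.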

The process of administering the test took two months. The instrument was administered to the sample of students in the last year of pedagogy in Chile and Uruguay (see Section 2.1) online, from any place and device (tablet, cell phone, computer). Data from the test was downloaded and saved to a Microsoft Excel (2007) spreadsheet, taking into account the ethical aspects relating to anonymity and conformity of data transfer.

Statistical tests

To analyze the results of applying the instrument and to answer the research questions, a descriptive analysis of the TDC assessment data was performed at the level of dimensions and indicators. Different statistical tests were then applied. In particular, “Indicators of Teacher’s Digital Competences” (ITDC) were created to categorize ITT students by level of TDC (basic, intermediate or advanced) in each dimension. These levels were crossed with the variables sex and educational level in order to identify statistically significant differences, using the chi-square (χ2) test and the comparison of distributions (Z test). Data were analyzed with SPSS for Windows, Version 24.
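The chi-square test of independence on a crossed table can be sketched in a few lines. The study itself used SPSS, so this is only an illustrative reimplementation of the textbook statistic; the counts in the table are hypothetical (chosen to add up to 568) and do not reproduce the study's data.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the row and column variables were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical counts: rows are male/female, columns are basic/intermediate/advanced.
gender_by_level = [
    [60, 50, 70],
    [150, 130, 108],
]
statistic, dof = chi_square_independence(gender_by_level)
print(round(statistic, 2), dof)
```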

For the construction of each indicator, the scores obtained for all of its items were added. The resulting totals were categorized (recoded) according to a theoretical estimate that considers the actual distribution of the scores obtained: the minimum score obtained, the maximum score obtained, and the scores at the 33rd and 66th percentile positions when the scores are sorted in ascending order. Considering the scores for each indicator that make up the dimensions mentioned above, the TDC classification indicator was created, as described below in Table 3.
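The recoding into three levels can be sketched as follows. The nearest-rank percentile rule and the treatment of scores that fall exactly on a cut-off are assumptions made for illustration, since the text does not specify them; the function names are hypothetical.

```python
def tercile_cutoffs(scores):
    """Return the scores at the 33% and 66% positions of the sorted list."""
    ordered = sorted(scores)
    n = len(ordered)

    def score_at(percent):
        # Nearest-rank position, computed with integer ceiling division.
        rank = max(1, (percent * n + 99) // 100)
        return ordered[rank - 1]

    return score_at(33), score_at(66)


def classify(score, low_cut, high_cut):
    """Recode a score as basic, intermediate or advanced."""
    if score <= low_cut:
        return "basic"
    if score <= high_cut:
        return "intermediate"
    return "advanced"


# Hypothetical dimension totals for ten students.
dimension_scores = [2.0, 3.5, 4.0, 4.25, 5.0, 5.5, 6.0, 6.25, 7.0, 7.5]
low_cut, high_cut = tercile_cutoffs(dimension_scores)
levels = [classify(score, low_cut, high_cut) for score in dimension_scores]
print(low_cut, high_cut, levels)
```

Because the cut-offs are taken from the observed distribution, roughly a third of the sample lands in each level unless many students share the same score.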

Results

First, we estimated the overall results of the sample by dimension of TDC. The mean of the four dimensions lies between 2.0 and 2.3 points, which is equivalent to 51% to 59% of the total points available, with standard deviations between 0.3 and 0.6. For all dimensions, the relatively similar values of the mean, median and mode suggest that the data are normally distributed, which was verified through statistical tests. In particular, the results were compared using the Levene test to confirm the normal distribution for each dimension in the total sample. We present the results sorted by research question:

- Q1. What is the distribution in the four dimensions of TDC for the sample studied?

The distribution of our sample by TDC dimension showed that, for the four dimensions, students are mostly at the basic level (Figure 2), although one in every three students is at the advanced level.

Differences that could be statistically significant are found only in D4, “Personal and Professional Development” (Figure 2). To determine whether the differences observed between students with intermediate TDC (28.5%) and basic TDC (38.7%) are significant, a Chi-square test was applied to compare the different levels (χ2(2)=10.8 with p<0.01). This result indicates that we must reject the null hypothesis, and we can therefore state that there is a statistical difference between these two groups of students. In other words, the proportion of students with a basic level in D4 is significantly higher than that of students with an intermediate level. Having examined the dimensions of TDC evaluated in our sample of students, we now detail the results for each of the questions that relate their TDC to the variables of interest:
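The reported statistic can be checked against the stated threshold. For a chi-square distribution with two degrees of freedom, the upper-tail probability has the closed form exp(−x/2), so no statistical library is needed; the function name below is illustrative.

```python
import math


def chi2_upper_tail_df2(statistic):
    """Upper-tail probability of a chi-square variable with 2 degrees of freedom."""
    return math.exp(-statistic / 2)


# Statistic reported in the text for D4: chi-square(2) = 10.8.
p_d4 = chi2_upper_tail_df2(10.8)
print(p_d4 < 0.01)
```

The closed form only holds for two degrees of freedom; other cases require the general chi-square distribution function.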

- Q2. Are there significant differences for TDC in terms of gender?

This question was first examined through a descriptive study of the percentage distributions (Table 4).

Overall, the results show no significant differences with regard to gender across the four dimensions studied. Even so, the percentage of male students who reach advanced digital competences is noteworthy in D2, “Planning, Organization and Management” (39.3%), compared to female students at that same level (28.3%). To evaluate this difference quantitatively, a Chi-square test was applied, which showed a statistically significant value only between these two groups (χ2(1)=6.61, with p=0.047, <.05). We can say that there is a statistical difference between male and female students for D2. In particular, the proportion of male students with an advanced level in this dimension is significantly higher than that of female students. Regarding the third research question (Q3: Are there differences in TDC among future teachers of primary and secondary education?), Table 5 shows the percentage distribution for each group, separated by level of TDC development.

Percentage differences are observed for D1, “Curriculum, Didactics and Methodology”, in which students from educational levels other than Primary and Secondary mostly showed advanced TDC (42.9%). The situation is reversed in D2, “Planning, Organizing and Managing Digital Technology Spaces and Resources”, where the percentage of Primary Education students with basic TDC development is noteworthy (45.5%). For D3, “Ethical, Legal and Security Aspects”, 40.1% of Secondary Education students have basic TDC development, and 40.5% of students from other disciplines display intermediate development. Finally, D4, “Personal and Professional Development”, presents high values at the advanced level for students of Primary and Secondary Education (39.2%), but not for other disciplines, in which half of the students (50%) have a basic level of TDC development.

To evaluate these differences, once again a Chi-square test was performed, which shows a significant value between groups in the D2 dimension (χ2(2)=14.28 with p<.01). Specifically, the Z-test indicates that the proportion of Primary Education students with basic development of TDC is significantly higher than that of Secondary and other levels of studies. In turn, the proportion of students of Secondary Education with advanced development of TDC is significantly higher than that of students at the Primary Level. Finally, for Dimension 4 there are no statistically significant differences (p=0.056).
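A comparison of two proportions of the kind described here can be sketched with a plain pooled two-proportion Z test. This is an assumption about the general technique, not a reproduction of the SPSS procedure used in the study, which may apply corrections for multiple comparisons. The group sizes and counts are hypothetical; only the 45.5% figure echoes the text.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion Z test; returns (z, two-sided p-value)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 91 of 200 Primary students (45.5%) at the basic level,
# compared with 60 of 200 students from another group (30%).
z, p_value = two_proportion_z_test(91, 200, 60, 200)
print(round(z, 2), p_value < 0.01)
```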

Discussion and conclusions

The first general objective of this research was to assess the level of TDC of students in their last year of ITT, for a representative sample in Chile and Uruguay. We now discuss the results presented in the previous section according to the proposed research questions. Starting from the first question, our intention was to analyze the level of TDC in terms of the four dimensions defined, and to study the possible differences between levels of development of this competency. The data on the differences between competence groups show a low level of development in general, with a significant difference in dimension D4, “Personal and Professional Development”. In particular, in our sample there are more students with a low level in this dimension.

The results for the level of TDC in the sample studied differ from those obtained in studies of the perception of this competence in ITT. In those studies, the level of development appears noticeably higher, since students perceive a greater command of DT than the level they actually reach (Badilla, Jiménez, & Careaga, 2013; Prendes, Castañeda, & Gutiérrez, 2010; Banister & Reinhart, 2014; Tourón & al., 2018; Gutiérrez & Serrano, 2016; Ascencio, Garay, & Seguic, 2016). However, the results are consistent with research that evaluates TDC in pedagogy students based on a test administered by the Ministry of Education that used simulated environments. This test, which assesses the technological skills of future teachers of primary and preschool education, showed that only 58% of the graduates had an acceptable level of DT: 59% in primary education and 55% in preschool education (Canales & Hain, 2017). These data support the criterion validity of our assessment instrument, which, added to the previous study of construct and content validity (Silva & al., 2017), makes this an applicable instrument for the evaluation of TDC in ITT students in Latin American samples. A perception study by MINEDUC with 3,425 teachers who participated in its ICT training plans, concerning the integration of the pedagogical use of ICT in the classroom and in their own professional development, showed that 0.47% are at an initial level, 21% are at an elementary level, 77% obtained a higher level, and 0.23% are at an advanced level. Those results are more optimistic than the ones observed in this study (MINEDUC, 2016).

The second general objective was to study the relationship between the level of TDC and two factors: gender and educational level. This allows us to analyze the personal factors or variables that may influence the development of TDC. Significant differences appeared for both variables. In particular, a higher percentage of male students than female students reach an advanced level of digital competence in D2, "Planning, Organization and Management". This differs from another study in Chile with students of pedagogy in the humanities, which found no significant differences between the groups compared and reported that students show homogeneous characteristics in their approach to technology (Ayale-Pérez & Joo-Nagata, 2019). However, a previous study (Björk-Gudmundsdottir & Hatlevik, 2018) found, in a sample of teachers in Malta, that men claimed to have more confidence than women in the use of technology in the classroom. Additionally, the study by Wang and Degol (2017) among professionals in the technology sector shows that gender stereotypes are socio-cultural factors that may affect cognitive factors, including the perception of competence. Similarly, in the specific case of women, greater classroom experience with technology generates more positive attitudes and self-confidence (Teo, 2008: 420), as well as a better assessment of their own TDC.

In light of the observed results, strengthening the development of TDC in general, and of its didactic-pedagogical aspects in particular, during ITT is both a need and a challenge. To this end, teacher training institutions require guidance that will enable them to achieve improvements in the short, medium and long term in various areas of ITT, such as the educational system, training and teaching, in order to raise the level of development of TDC. This guidance must be grounded in research results, which should inform the diagnosis, evaluation, and accompaniment of TDC development in ITT.

This study provides evidence that students in initial training, one step away from completing their teacher training, do not possess the TDC required to use DT effectively in their future work as teachers. This is a concern because teachers who are not digitally competent will have difficulty using DT effectively in their daily practice and teaching digital competence to their students. Low competence on the part of teachers is one of the main barriers to the use of DT in their teaching work and to their own professional development (UNESCO, 2013). We emphasize, in addition, that there is a positive correlation between the quality of pedagogical practices and the use of DT in teaching (INTEF, 2016).

Finally, we consider the instrument a good starting point for assessing TDC in ITT students, because it brings together a set of questions that must be answered in context and it is applicable to the local setting of both countries. As proposals for the future, we consider it worthwhile to:

- Improve the instrument in future studies by extending the battery of questions for each indicator and incorporating questions for the indicators of the original expert-validated matrix that were not addressed in this study. Only 10 of the 14 originally approved indicators were considered.

- Test the assessment instrument in other contexts and with teachers in training for other educational levels that exist in Latin America, such as preschool or special needs education.

- Perform comparative studies between Latin American and European countries, since both contexts, despite differences in their education plans, share the same problems with regard to the integration of digital competence in ITT.

- Assess the differences, if any, between the training of teachers in public and in private institutions, either within the same country or in comparison with other countries.

References

- Ayale-Pérez, T., Joo-Nagata, J. 2019. The digital culture of students of Pedagogy specialising in the humanities in Santiago de Chile. Computers & Education 133:1-12

- Ascencio, P., Garay, M., Seguic, E. 2016. Formación Inicial Docente (FID) y Tecnologías de la Información y Comunicación (TIC) en la Universidad de Magallanes – Patagonia Chilena. Digital Education Review 30:123-134

- Badilla, M., Jiménez, L., Careaga, M. 2013. Competencias TIC en formación inicial docente: Estudio de caso de seis especialidades en la Universidad Católica de la Santísima Concepción. Aloma 31(1):89-97

- Banister, S., Reinhart, R. 2014. Assessing NETS-T performance in teacher candidates: Exploring the Wayfind teacher assessment. Journal of Digital Learning in Teacher Education 29(2):59-65

- Björk-Gudmundsdottir, G., Hatlevik, O.E. 2018. Newly qualified teachers’ professional digital competence: Implications for teacher education. European Journal of Teacher Education 41(2):214-231

- Brun, M. 2011. Las tecnologías de la información y las comunicaciones en la formación inicial docente de América Latina. Comisión Económica para América Latina y el Caribe (CEPAL), División de Desarrollo Social, Serie Políticas Sociales.

- Canales, R., Hain, A. 2017. Política de informática educativa en Chile: Uso, apropiación y desafíos a nivel investigativo. In: Contribuciones al estudio de procesos de apropiación de tecnologías. Buenos Aires: GatoGris.

- Castañeda, L., Esteve, F., Adell, J. 2018. ¿Por qué es necesario repensar la competencia docente para el mundo digital? Revista de Educación a Distancia 56:1-20

- Cohen, L., Manion, L., Morrison, K. 2007. Research methods in education. London: Routledge.

- Cerda, C., Huete-Nahuel, J., Molina-Sandoval, D., Ruminot-Martel, E., Saiz, J. 2017. Uso de tecnologías digitales y logro académico en estudiantes de Pedagogía chilenos. Estudios Pedagógicos 54(3):119-133

- Escudero, J.M., Martínez-Domínguez, B., Nieto, J.M. 2018. Las TIC en la formación continua del profesorado en el contexto español. Revista de Educación 382:57-78

- European Commission (Ed.). 2015. Marco estratégico: Educación y formación, 2020.

- European Commission (Ed.). 2018. Proposal for a council recommendation on key competences for lifelong learning.

- Fraser, J., Atkins, L., Richard, H. 2013. DigiLit Leicester. Supporting teachers, promoting digital literacy, transforming learning. Leicester City Council.

- Gutiérrez, I., Serrano, J. 2016. Evaluación y desarrollo de la competencia digital de futuros maestros en la Universidad de Murcia. New Approaches in Educational Research 5:53-59

- INTEF (Ed.). 2014. Marco Común de Competencia Digital Docente. Madrid: Instituto Nacional de Tecnologías Educativas y de Formación del Profesorado.

- INTEF (Ed.). 2016. Resumen Informe. Competencias para un mundo digital.

- INTEF (Ed.). 2017. Marco Común de Competencia Digital Docente.

- ISTE (Ed.). 2008. National educational technology standards for teachers. Washington DC: International Society for Technology in Education.

- Lázaro-Cantabrana, J.L., Gisbert-Cervera, M. 2015. Elaboración de una rúbrica para evaluar la competencia digital del docente. Universitas Tarraconensis 1(1):48-63

- Lázaro-Cantabrana, J., Usart-Rodríguez, M., Gisbert-Cervera, M. 2019. Assessing teacher digital competence: The construction of an instrument for measuring the knowledge of pre-service teachers. Journal of New Approaches in Educational Research 8(1):73-78

- Ministerio de Educación Nacional (Ed.). 2013. Competencias TIC para el desarrollo profesional docente.

- Mineduc (Ed.). 2008. Estándares TIC para la formación inicial docente: Una propuesta en el contexto chileno. Santiago de Chile: Ministerio de Educación.

- Mineduc (Ed.). 2011. Actualización de competencias y estándares TIC en la profesión docente. Santiago de Chile: Ministerio de Educación.

- Mineduc (Ed.). 2016. Docentes en Chile: Conocimiento y uso de las TIC 2014. Santiago de Chile: MINEDUC, Serie Evidencias, 32.

- Papanikolaou, K., Makri, K., Roussos, P. 2017. Learning design as a vehicle for developing TPACK in blended teacher training on technology enhanced learning. International Journal of Educational Technology in Higher Education 14(1):34-41

- Prendes, M.P., Castañeda, L., Gutiérrez, I. 2010. ICT competences of future teachers. [Competencias para el uso de TIC de los futuros maestros]. Comunicar 18(35):175-182

- Redecker, C., Punie, Y. 2017. European framework for the digital competence of educators: DigCompEdu. Luxembourg: Publications Office of the European Union.

- Rombys, D. 2012. Integración de las TIC para una buena enseñanza: Opiniones, actitudes y creencias de los docentes en un Instituto de Formación de Formadores. Tesis de Maestría, Instituto de Educación. Universidad ORT Uruguay

- Rodríguez, J., Silva, J. 2006. Incorporación de las tecnologías de la información y la comunicación en la formación inicial docente el caso chileno. Innovación Educativa 6(32):19-35

- Rozo, A., Prada, M. 2012. Panorama de la formación inicial docente y TIC en la región Andina. Educación y Pedagogía 24(62):191-204

- Silva, J., Miranda, P., Gisbert, M., Morales, M., Onetto, A. 2016. Indicadores para evaluar la competencia digital docente en la formación inicial en el contexto chileno-uruguayo. Revista Latinoamericana de Tecnología Educativa 15(3):55-68

- Silva, J., Rivoir, A., Oneto, A., Morales, M., Miranda, P., Gisbert, M., Salinas, J. 2017. Informe de investigación. Estudio comparado de las competencias digitales para aprender y enseñar en docentes en formación de Uruguay y Chile.

- Silva, J., Lázaro, J., Miranda, P., Canales, R. 2018. El desarrollo de la competencia digital docente durante la formación del profesorado. Opción 34(86):423-449

- Teo, T. 2008. Pre-service teachers attitudes towards computer use: A Singapore survey. Australasian Journal of Educational Technology 24(4):413-424

- Tourón, J., Martín, D., Navarro, E., Pradas, S., Íñigo, V. 2018. Validación de constructo de un instrumento para medir la competencia digital docente de los profesores. Revista Española de Pedagogía 76(269):25-54

- UNESCO (Ed.). 2008. Estándares de competencia en TIC para docentes.

- UNESCO (Ed.). 2015. Educación 2030: Declaración de Incheon y Marco de Acción para la realización del Objetivo de Desarrollo.

- UNESCO (Ed.). 2018. ICT competency framework for teachers.

- Villa-Sánchez, A., Poblete-Ruiz, M. 2011. Evaluación de competencias genéricas: Principios, oportunidades y limitaciones. Bordón 63(1):147-170