"Non quia difficilia sunt non audemus, sed quia non audemus difficilia sunt."

Seneca (CIV,26)

Acknowledgement

It has been a long and challenging way, but, fortunately, during these years I have met many people, who encouraged me to go ahead and taught me not to give up. I am very grateful for this.

I would like to thank my advisor, Prof. Eugenio Oñate, for giving me the opportunity to study in CIMNE. During the years spent at this research center, I could find many sources of knowledge and inspiration, that I will take with me wherever I will be in the future. A special thanks goes to my co-advisor, Prof. Antonia Larese, for introducing me to the field of computational mechanics and for supporting me in the most important moments of this experience. I would like to thank those colleagues of CIMNE, who with great patience, helped me dedicating part of their time, Riccardo, Stefano, Charlie, Pablo, Lorenzo, Jordi and Alessandro.

I would like to thank Prof. Massimiliano Cremonesi for his supervision and kind welcome during my visit at the Department of Civil and Environmental Engineering, at Politecnico di Milano. I want to express my gratitude to Dr. Fausto Di Muzio for his kindness and warming welcome during my brief stay at Nestlé. This experience allowed me to look at the research world from a different perspective and to recognize how important is to conjugate fundamental research with more practical applied aspects. In this respect, I would like to thank Dr. Julien Dupas for his collaboration and support in providing some experimental data.

I have been enough fortunate to be part of the European project T-MAPPP, which greatly contributed not only to the development of my technical knowledge, but also of my soft skills. I would like to thank all the researchers involved in the project. In particular, Prof. Stefan Luding and Prof. Vanessa Magnanimo, for the valuable discussions and the interest shown in my work; Yousef, Kostas, Kianoosh, Behzad, Somik, Sasha and Niki, because without them it would have not been the same.

A special thank you goes to all the people who made my day with a smile and let me see the positive side in any situation. Thank you Josie, Manu, Edu, Vicente, Nanno, Giulia, Eugenia and Alessandra.

Last but not least, all my gratitude goes to my family: Marta, Gianni and Claudia, for their wholehearted support and love.

The research was supported by the Research Executive Agency through the T-MAPPP project (FP7 PEOPLE 2013 ITN-G.A.n607453).

Resumen

El manejo, el transporte y el procesamiento de materiales granulares y en polvo son operaciones fundamentales en una amplia gama de procesos industriales y de fenómenos geofísicos. Los materiales particulados, que pueden encontrarse en la naturaleza, generalmente están caracterizados por un tamaño de grano, que puede variar entre varios órdenes de magnitud: desde el nanómetro hasta el orden de los metros. En función de las condiciones de fracción volumétrica y de deformación de cortante, los materiales granulares pueden tener un comportamiento diferente y a menudo pueden convertirse en un nuevo estado de materia con propiedades de sólidos, de líquidos y de gases. Como consecuencia, tanto el análisis experimental como la simulación numérica de medios granulares es aún una tarea compleja y la predicción de su comportamiento dinámico representa aun hoy día un desafío muy importante. El principal objetivo de esta monografía es el desarrollo de una estrategia numérica con la finalidad de estudiar el comportamiento macroscópico de los flujos de medios granulares secos en régimen cuasiestático y en régimen dinámico. El problema está definido en el contexto de la mecánica de medios continuos y las leyes de gobierno están resueltas mediante un formalismo Lagrangiano. El Metodo de los Puntos Materiales (MPM), método basado en el concepto de discretización del cuerpo en partículas, se ha elegido por sus características que lo convierten en una técnica apropiada para resolver problemas en grandes deformaciones donde se tienen que utilizar complejas leyes constitutivas. En el marco del MPM se ha implementado una formulación irreducible que usa una ley constitutiva de Mohr-Coulomb y que tiene en cuenta no-linealidades geométricas. La estrategia numérica está verificada y validada con respecto a tests de referencia a resultados experimentales disponibles en la literatura. También, se ha implementado una formulación mixta para resolver los casos de flujo granular en condiciones no drenadas. Por último, la estrategia MPM desarrollada se ha utilizado y evaluado con respecto a un estudio experimental realizado para caracterizar la fluidez de diferentes tipologías de azúcar. Finalmente se presentan unas observaciones y una discusión sobre las capacidades y las limitaciones de esta herramienta numérica y se describen las bases para una investigación futura.

Abstract

Bulk handling, transport and processing of granular materials and powders are fundamental operations in a wide range of industrial processes and geophysical phenomena. Particulate materials, which can be found in nature, are usually characterized by a grain size which can range across several scales: from nanometre to the order of metre. Depending on the volume fraction and on the shear strain conditions, granular materials can have different behaviours and often can be expressed as a new state of matter with properties of solids, liquids and gases. For the above reasons, both the experimental and the numerical analysis of granular media is still a difficult task and the prediction of their dynamic behaviour still represents, nowadays, an important challenge. The main goal of the current monograph is the development of a numerical strategy with the objective of studying the macroscopic behaviour of dry granular flows in quasi-static and dense flow regime. The problem is defined in a continuum mechanics framework and the balance laws, which govern the behaviour of a solid body, are solved by using a Lagrangian formalism. The Material Point Method (MPM), a particle-based method, is chosen due to its features which make it very suitable for the solution of large deformation problems involving complex history-dependent constitutive laws. An irreducible formulation using a Mohr-Coulomb constitutive law, which takes into account geometric non-linearities, is implemented within the MPM framework. The numerical strategy is verified and validated against several benchmark tests and experimental results, available in the literature. Further, a mixed formulation is implemented for the solution of granular flows that undergo undrained conditions. Finally, the developed MPM strategy is used and tested against the experimental study performed for the characterization of the flowability of several types of sucrose. The capabilities and limitations of this numerical strategy are observed and discussed and the bases for future research are outlined.

List of Symbols

| : | time; |

| : | internal friction angle; |

| : | particle diameter; |

| : | particle density; |

| : | shear rate; |

| : | undeformed configuration; |

| or : | deformed configuration at time ; |

| or : | deformed configuration at time ; |

| : | characteristic function in the Generalized Interpolation Material Point Method; |

| : | particle volume; |

| : | current particle domain; |

| : | current domain occupied by the continuum; |

| : | shape function relative to node evaluated at position at position ; |

| : | gradient shape function relative to node evaluated at position at position ; |

| : | gradient shape function from node-based calculation; |

| : | gradient shape function evaluated in Dual Domain Material Point Method; |

| : | relative to the material point ; |

| : | relative to the node ; |

| : | relative to the node at time ; |

| : | relative to the node at time and iteration ; |

| : | displacement vector; |

| : | increment displacement vector; |

| : | velocity vector; |

| : | acceleration vector; |

| : | pressure; |

| : | momentum vector; |

| : | inertia vector; |

| : | mass; |

| : | stability parameters of Bossak method; |

| : | vector of unknown incremental displacement; |

| : | tangent matrix of the linearised system of equation; |

| : | residual vector of the linearised system of equation; |

| : | local coordinates relative to a material point; |

| : | on-negative locality coefficient in Local Maximum Entropy technique; |

| : | measure of nodal spacing in Local Maximum Entropy technique; |

| : | dimensionless parameter in Local Maximum Entropy technique; |

| : | total deformation gradient; |

| : | determinant of the total deformation gradient; |

| : | incremental deformation gradient; |

| : | First Piola-Kirchhoff stress tensor; |

| : | Second Piola-Kirchhoff stress tensor; |

| : | Kirchhoff stress tensor; |

| : | Cauchy stress tensor; |

| : | specific strain energy function; |

| : | rotation tensor; |

| : | rotation tensor from polar decomposition of ; |

| : | right stretch tensor; |

| : | left stretch tensor; |

| : | right Cauchy-Green tensor; |

| : | first principal invariant of ; |

| : | first invariant of ; |

| : | left Cauchy-Green tensor; |

| : | Lame constant; |

| : | bulk modulus; |

| : | shear modulus; |

| : | material incremental constitutive tensor; |

| : | spatial incremental constitutive tensor; |

| : | symmetric second order unit; |

| : | fourth order identity tensor; |

| : | fourth order symmetric identity tensor; |

| : | fourth order deviatoric projector tensor; |

| : | symmetrical spatial velocity gradient; |

| : | rate of deformation tensor; |

| : | spin tensor; |

| : | velocity gradient tensor; |

| : | volumetric part of ; |

| : | deviatoric part of ; |

| : | deviatoric part of ; |

| : | elastic part of ; |

| : | plastic part of ; |

| : | volume preserving part of ; |

| : | flow stress; |

| : | isotropic hardening; |

| : | hardening parameter; |

| : | plastic multiplier; |

| : | unit vector of ; |

| : | in trial state; |

| : | trace of ; |

| : | metric tensor in current configuration; |

| : | spatial algorithmic elastoplastic moduli; |

| : | cohesion; |

| : | dilation angle; |

| : | normal stress; |

| : | principal Hencky strain; |

| : | Hencky elastic constitutive tensor; |

| : | elasto-plastic fourth order constitutive tensor; |

| : | consistent elasto-plastic tangent; |

| : | eigenvalues vector of ; |

| : | eigenvector vector of ; |

| : | eigenbases vector of ; |

| : | eigenvector vector of ; |

| : | eigenbases vector of ; |

| : | body; |

| : | 3D Euclidean space; |

| : | real coordinate space in 3D; |

| : | mass density; |

| : | mass density in underformed configuration; |

| : | body force; |

| : | gravity; |

| : | Neumann boundary in deformed configuration; |

| : | Dirichlet boundary in deformed configuration; |

| : | prescribed normal tension on Neumann boundary; |

| : | prescribed displacement on Dirichlet boundary; |

| : | displacement weight function; |

| : | pressure weight function; |

| : | displacement space; |

| : | displacement finite element space; |

| : | in the finite element space; |

| : | geometrical representation of ; |

| : | finite volume assigned to a material point; |

| : | Hilbert space; |

| : | differential volume in deformed configuration; |

| : | differential boundary surface in deformed configuration; |

| : | differential volume in undeformed configuration; |

| : | material gradient operator; |

| : | spatial gradient operator; |

| : | symmatric part of gradient; |

| : | fourth order incremental constitutive tensor relative to ; |

| : | fourth order incremental constitutive tensor relative to ; |

| : | fourth order incremental constitutive tensor relative to ; |

| : | matrix form of ; |

| : | deformation matrix; |

| : | geometric stiffness matrix; |

| : | material stiffness matrix; |

| : | static part of ; |

| : | dynamic part of ; |

| : | pressure space; |

| : | vector of unknown pressure; |

| : | geometric stiffness matrix in mixed formulation; |

| : | material stiffness matrix in mixed formulation; |

| : | residual vector relative to the momentum balance equation; |

| : | residual vector relative to the pressure continuity equation; |

| : | residual vector relative to the stabilization term; |

| : | mass matrix; |

| : | mass matrix relative to the stabilization term; |

| : | residual vector relative to the stabilization term; |

| : | stabilization parameter; |

| : | mixed terms in the mixed formulation |

| : | diameter at which 10% of the sample's mass is comprised of particles with a diameter less than this value; |

| : | diameter at which 50% of the sample's mass is comprised of particles with a diameter less than this value; |

| : | diameter at which 90% of the sample's mass is comprised of particles with a diameter less than this value; |

| : | matrix form of in axisymmetric case; |

| : | tangent stiffness matrix in axisymmetric case; |

| : | geometric stiffness matrix in axisymmetric case; |

| : | material stiffness matrix in axisymmetric case; |

1 Introduction

Bulk handling, transport and processing of particulate materials, such as, granular materials and powders, are fundamental operations in a wide range of industrial processes [1] or geophysical phenomena and hazards, such as, landslides, debris flows, etc. [2]. Particulate systems are difficult to handle and they can show an unpredictable behaviour, representing a great challenge in the industrial production, concerning both design and functionality of unit operations in plants, but also in the research community of Powders and Grains [3,4]. Granular materials and powders consist of discrete particles such as, e.g., separate sand-grains, agglomerates (made of several primary particles), natural solid materials like sandstone, ceramics, metals or polymers sintered during additive manufacturing. The primary particles can be as small as nano-metres, micro-metres, or millimetres [5] covering multiple scales in size and a variety of mechanical and other interaction mechanisms, such as, friction and cohesion [6], which become more and more important the smaller the particles are. All those particle systems have a particulate, usually disordered, possibly inhomogeneous and often anisotropic micro-structure; nowadays, the research community is working actively in order to have a deeper understanding and aware knowledge of bulk behaviour affected by micro-scale parameters. Indeed, particle systems as bulk show a completely different behaviour as one would expect from the individual particles. Collectively, particles either flow like a fluid or rest static like a solid. In the former case, for rapid flows, granular materials are collisional, inertia dominated and compressible similar to a gas. In the latter case, particle aggregates are solid-like and, thus, can form, e.g., sand piles or slopes that do not move for long time. Between these two extremes, there is a third flow regime, dense and slow, characterized by the transitions (i) from static to flowing (failure, yield) or vice-versa (ii) from fluid to solid (jamming). At the particle and contact scale, the most important property of particle systems is their dissipative, frictional, and possibly cohesive nature. In this context, dissipation shall be understood as kinetic energy, at the particle scale, which converts into heat, for instance, due to plastic deformations. The transition from fluid to solid can be caused by dissipation alone, which tends to slow down motion. The transition from solid to fluid (start of flow) is due to failure and instability when dissipation is not strong enough and the solid yields and transits to a flowing regime.

In this Chapter, the granular flow theory is presented more in detail and the main attempts, available in the literature, for the modelling of granular matter behaviour in the different regimes are discussed. Afterwards, objectives and layout of the current monograph are presented.

1.1 The granular flows

The heavy involvement of particle materials in many different industrial processes makes the granular matter, nowadays, a remarkable object of study. Particulate materials exist in large quantity in nature and it is established that most of the industrial processes, such as, pharmaceutical, agricultural, chemical, just to cite a few, deal with materials that are particulate in structure. In the industrial field, dealing with processes at large scales and huge quantities of raw material, any issue, encountered in the production line, may cause losses in terms of productivity, and, thus, of money. During the last decades, it has been documented that also in processes of granular matters, a lack of knowledge implies non-optimal production quality. In older industrial surveys, Merrow [7] found that the main factor causing long start-up delays in chemical plants is represented by the processing granular materials, especially due to the lack of reliable predictive models and simulations, while Ennis et al. [8] reported that 40 of the capacity of industrial plants is wasted because of granular solid phenomena. More recently, Feise [9] analysed the changes in chemical industry and predicted an increase in particulate solids usage along with new challenges due to new concepts like versatile multi-purpose plants, and fields like nano and bio-technology. For these reasons, it is clear that it is fundamental to have a better understanding of particulate materials behaviour under different conditions and to be able to improve the production quality through experimental campaigns and numerical modelling works. However, due to the wide variety of intrinsic properties of particulate materials a unified constitutive description, under any condition, has not been established yet.

With granular flow, we refer to motions where the particle-particle interactions play an important role in determining the flow properties and the flow patterns which are quite different from those of conventional fluids. The most evident differences between granular systems and simple fluids affecting the macroscopic properties of the flow, as pointed out in [10], are:

- The size of grains is typically approximately more massive and voluminous than a water molecule. As both fluid and particle motions can be studied according to the laws of classical mechanics, this is not a fundamental difference, but could represent an important factor while evaluating the applicability of continuum hypothesis, as explained below;

- In granular systems, when granules collide, a loss of kinetic energy converted in true heat is observed. This difference determines the main feature which deeply distinguishes the granular flows modelling from the fluid flows one;

- In nature, grains are not identical: particle shape, particle roughness and solid density are only some of the particle properties which characterize a grain from the other. As real particles are not exactly spherical and typically the surface is rough, in grain-grain interactions frictional forces and torque are created and grains rotate during the collision.

The above comparison is useful to emphasise that the main assumptions on the basis of fluid flow modelling can not coincide with those of granular flow modelling. Further, this comparison can also be useful to set the bounds of the continuum assumptions enforceability. Three length scales have to be considered for the definition of these hypotheses. The first one is related to the particle size. Typically the value of density in grain systems is much smaller than in molecular fluids; this means that, for instance, in a cubic mm of fluids the number of molecules is much higher than the number of grains. If a macroscopic quantity changes significantly over a 1 mm of length, the variation over the molecules is small, but in the granular materials, if the number of particles in a 1 mm is low, a bigger variation is registered falling out of the continuum assumptions. The second length is related to the container confining the system and the third one to the inelasticity in grain-grain collisions. The latter can be defined as the radius of the pulse, related to a degradation of factor of the total kinetic energy in a system of grains after a localized input of energy. If the inelasticity is not small this length covers just a few particles. This implies substantial changes in macroscopic quantities over distances measured over a small number of grain diameters, which do not allow to respect the continuum assumptions.

When one wants to study the flow of granular materials has to bear in mind that the bulk in motion is represented by an assembly of discrete solid particles interacting with each other. Depending on the intrinsic properties of the grains and the macroscopic characteristic of the system (i.e. geometry, density, velocity gradient), the internal forces can be transmitted in different ways within the granular material. Depending on this, three main flow patterns can be observed experimentally and numerically [11]. At large solids concentration and low shear rate, the stresses are not evenly distributed, but are concentrated along networks of particles, called force chains. The force chains are dynamic structures, which rotate, become unstable and, finally, collapse as a result of the shear motion. When granular material fails it is observed that the failure occurs along narrow planes, within the material, which have not infinitesimal thickness, but are zones of the order of ten particles across called shear bands. Within the shear bands, the stresses are still distributed along the force chains and the shear and normal stresses are related in non-cohesive material as

|

|

(1.1) |

where is the internal friction angle. Observing Equation 1.1, it is noted that depends on the geometry of the force chains; thus, the friction-like response of the bulk is a result of the internal structure of force chains, as well.

This flow pattern takes the name of Quasi-static regime because the rate of formation of the force chain divided by their lifetime is independent on the shear rate and with this also the generated stresses. If on one hand, the shear rate does not affect the response of the system, on the other hand, it is worth highlighting that the inter-particle stiffness k plays an important role, as the stresses within the force chains show a linear dependence with k. The inter-particle stiffness, in turn, can be expressed as a linear function of the Young modulus E of the material and also depends on the local radius of curvature, thus, on the geometry of the contact. Bathurst and Rothenburg [12] have derived an expression for the bulk elastic modulus K, which linearly depends on the stiffness and can be used in the definition of the sound speed in a static granular material. As shown in the data of Goddard [13] and Duffy Mindlin [14], the wave velocity is dependent on the pressure applied to the bulk assembly. Thus, increasing the confining pressure, the elastic bulk modulus increases along with the inter-particle stiffness k and the force chains lifetime.

Until the shear rates are kept low, the inertia effects are small and force chains are the only mechanism available to balance the applied load. Increasing the shear rate, the particles are still locked in force chains, but the forces generated have to take into account the inertia introduced in the system. Further increasing , the inertial component of the internal forces linearly increases with the shear rate and when these are comparable with the static forces the flow transition to the Inertial regime takes place. The Inertial regime encompasses flows where force chains cannot form and the momentum is transported largely by particle inertia. In this regime, the shear stresses are independent of the stiffness, but dependent on the second power of the shear rate, as expressed in the Bagnold scaling [15], where and are the particle diameter and particle density, respectively. Even if force chains are not present, multiple simultaneous contacts between the particles still coexist allowing longer contact period . By defining with the binary contact time, i.e., the duration of a contact between two freely colliding particles, the ratio . If this ratio has the value of 1, the dominant particle collisions are binary and instantaneous and the flow is defined with the name of Rapid Granular Flow [16], which can be considered as an asymptotic case of the Inertial Regime. This flow path is controlled by the property of granular temperature, which represents a measure of the unsteady components of velocity. The granular temperature is generated by the shear work and it drives the transport rate in two principal modes of internal (momentum) transport: a collisional and a streaming mode. In the first case, the granular temperature provides the relative velocity that drives particle to collide; while, in the second case, it generates a random velocity that makes the particles move relatively to the velocity gradient. In the Rapid Granular Flow the coexistence of contact and streaming stresses can be observed; obviously, the collisional mode dominates at high concentrations, while the streaming mode at low concentrations. It is generally assumed that, at small shear rates, a flow behaves quasi-statically, and that by increasing the shear rate, one will eventually end up in the Rapid Flow regime. As pointed out by Campbell [11], the transition through the regimes is regulated by the volume fraction and the shear rate. However, by fixing the first or the second field, the transition may take place in a different way.

1.2 Granular flow modelling

Despite the prevalence of granular materials in most of the industrial applications, there is still a large discrepancy between results predicted by analytical or numerical solutions and their real behaviour [17]. Thus, structures and facilities for dealing with particulate material handling are not functioning efficiently and there is always a probability of structural failure and an unexpected arrest of the production line. Due to the intrinsic nature of granular materials, the prediction of their dynamic behaviour represents nowadays an important challenge for two main reasons. Firstly, the characteristic grain size has an excessively wide span: from nanoscale powders (such as colloids with a typical size of nanometre) to large blocks of coal extracted from mines. This feature gives rise to some difficulties in defining a unique model able to properly work across many scales. Secondly, although these materials are solid in nature, they behave differently in various circumstances and often changes in a new state of matter with properties of solids, liquids and gases [17]. Indeed, as with solids, they can withstand deformation and form heap; as with liquids, they can flow; as with gases, they can exhibit compressibility. This second aspect makes the modelling of granular matter even more difficult to define, as the macroscopic behaviour is affected by a set of microscopic parameters which often are not directly measurable from laboratory tests. Nevertheless, these challenges encouraged the research community to work actively in the particle technology field, developing and improving several numerical and experimental techniques for the characterization of granular materials.

As explained in Section 1.1, a granular flow can undergo three main regimes in different domains of volume fraction and shear rate. When the grains have very little kinetic energy, the assembly of the particle is dense, and if the structure is dominated by the force chains the response of the bulk is independent on the shear rate. In this case, the flow pattern is known as Quasi-static regime and the behaviour is well described by classical models used in soil mechanics [18]. On the other hand, if a lot of energy is brought to the grains, the system is dilute, granular materials are collisional, inertia-dominated and compressible similar to a gas. In this case, the stresses vary as the square of the shear rate [15] and the flow falls under the Rapid granular flow theory. The principal approach, provided in the literature, for modelling granular flow under these conditions is represented by the Kinetic Theory of granular gases [16]. In the definition of such a model, the formalism of gas kinetic theory is used with the constraint to consider the particles perfectly rigid and the kinetic theory formalism leads to a set of Navier-Stokes equations. Between these two regimes, we can find the dense and slow flow regime, characterized by the presence of multiple particles contact, but also by the absence of force chains. For the modelling of granular flows under this regime, in [19] the constitutive relation of a viscoplastic fluid is proposed, commonly known with the name of rheology. The idea comes from the analogy observed with Bingham fluids, characterized by a yield criterion and a complex dependence on the shear rate. By assuming the particles perfectly rigid and a homogeneous and steady flow, a set of Navier-Stokes equations is provided. Despite it has been demonstrated that the model can successfully reproduce the results of some experimental tests [19], the model can only qualitatively predict the basic features of granular flows. In fact, some phenomena, such as, the formation of shear bands, flow intermittence and hysteresis in the transition solid to fluid and vice-versa, cannot be modelled through the law.

Even if there are models able to predict the flow behaviour in single regimes, a comprehensive rheology, able to gather together all three regimes, is still missing in the literature. Many attempts have been done during the last years. To cite a few of them, it is worth mentioning the contribution of Vescovi and Luding [20] where a homogeneous steady shear flow of soft frictionless particles is investigated; both fluid and solid regimes are considered and merged into a continuous and differentiable phenomenological constitutive relation, with a focus on the volume fraction close to the jamming value. Also Chialvo and coworkers [21] turn the attention to the interface between the quasi-static and the inertial regime in the context of a jamming transition, still neglecting any time dependency (under the assumption of steady-state flow), but considering soft friction particles. Other proposals are based on the relaxation of some hypotheses at the base of the rheology, such as, for instance, the constitutive laws provided by Kamrin et al. [22] where the non-local effects are considered and by Singh et al. [23] where the particle stiffness influence is included in the model.

In order to perform a numerical investigation of the granular flow problem, one has to keep in mind that not only a constitutive model is needed for its accomplishment, but also a numerical technique used to solve the system of algebraic equations which govern the problem. Numerical methods can be distinguished according to the kinematic description adopted and to the spatial and time scales that balance laws are based on. As previously observed, particulate materials can be studied at different scales and depending on this the selection of the numerical technique may change.

Granular materials have a discrete nature and it is of paramount importance to have a clear description at the microscale for a deep understanding of the physics behind the bulk behaviour. In recent decades, the most common and used numerical tool for such investigation is represented by the Discrete Element Method (DEM) [24], which considers a finite number of discrete interacting particles, whose displacement is described by the Newton's equations related to translational and rotational motions.

In the research community, granular materials are studied by using a continuum approach, as well. For instance, all the aforementioned constitutive models, proposed under the condition which range between the Quasi-static regime and Rapid granular flow, are based on a continuous description of the granular matter behaviour. On one hand these approaches, obviously, do not allow to predict the material response on the point, where two particles collide, i.e., at the microscale, but are able to provide a mesoscale response, constant on a representative elemental volume (REV), where the continuum assumptions are still valid [10].

Other methods, available in the literature, are used to scale-up from the micro-level. In this regard, it is worth mentioning the Population Balance Method (PBM) [25], able to describe the evolution of a population of particles from the analysis of single particle in local conditions. For instance, PBM is widely used to track the change of particle size distribution during processes where agglomeration or breakage of particles are involved, by using information, such as, impact velocity distribution, provided by DEM analysis [26,27].

1.3 Objectives

In the present work, we focus on the macroscale analysis of granular flows. More specifically, the current monograph aims at providing a verified and validated numerical model able to predict the behaviour of highly deforming bulk of granular materials in their real scale systems. Some examples, objects of this study, are represented by hopper flows, the collapse of granular columns and measurement of bearing capacity of a soil undergoing the movement of a rigid strip footing. These tests are characterized by some common features which are essential for the definition of the numerical tool to be developed. All the examples of granular flow mentioned above are densely packed with solid concentrations well above 50% of the volume and they can be considered dense granular flow where forces are largely generated by inter-particle contacts. This implies that a collisional state of the matter, where the principal mechanism of momentum transport is based on binary particle contacts, will never be reached. Moreover, in all cases an elastic/quasi-static regime usually coexists with a plastic/flowing regime. In order to be able to model the quasi-static and the flowing behaviours simultaneously in different parts of the material domain, a constitutive law which accounts for both the elastic and plastic regime is needed. The use of viscous fluid materials, as those described by the rheology and its different versions, can predict the granular flow behaviour under the inertial regime. However, the good reliability of these models is still limited to the steady case and to volume fractions whose values never exceed the jamming point. Further, if a viscous material is chosen, some difficulties might be encountered in the evaluation of internal forces where a zero strain rate is present. With the picture described above, the numerical model is conceived in a continuum mechanics framework in order to optimize the high, not to say prohibitive, computational cost which might be induced by the high value of density in the grain system, if a discrete technique is, then, selected. Moreover, the transition between the solid-like and fluid-like behaviour induces large displacement and huge deformation of the continuum which, from the numerical viewpoint, is well established to be tough to handle with standard techniques, such as the well-known Finite Element Method (FEM). Last but not least, not only geometric, but also material non-linearities should be considered. To address to this concern, the choice falls on those elasto-plastic laws, defined within the solid mechanics framework, whose stress response depends on the total strain history and historical parameters characteristic of the material model. The numerical model to adopt has to be based not only on a continuum mechanics framework, but also has to be able to track with high accuracy the huge deformation of the medium and the spatial and time evolution of its own material properties. After a search focused on the numerical model which closest fits with the features outlined above, it is found that the Material Point Method (MPM) [28], a continuum-based particle method, might be a good candidate in solving granular flows problems under multi-regime conditions and an optimal platform for the numerical implementation of new constitutive laws which attempt to include a bridge between different scales (from the particle-particle contact (micro) to the bulk (macro) scale). In the current work, an implicit MPM is developed by the author in the multi-disciplinary Finite Element codes framework Kratos Multiphysics [29,30,31]. Unlike most MPM codes, which make use of explicit time integration, in this monograph it is decided to adopt an implicit integration scheme. The choice is made with the aim of analysing cases characterized by a low-frequency motion and providing results with a higher stability and better convergence properties. Two formulations are implemented within the MPM framework by taking into account the geometric non-linearity, which allows to treat problems of finite deformation, usually not considered in many MPM codes that one can find in the literature. Firstly, an irreducible formulation and a Mohr-Coulomb constitutive law are developed. Further, a mixed formulation is proposed for the analysis of granular flows under undrained conditions, which represents, to the knowledge of the author, an original solution in the context of the MPM technique. The MPM strategy, with both the formulations, is validated by using experimental results or solutions of other studies, available in the literature. Last but not least, as final objective of this monograph, the developed MPM numerical tool is successfully tested in an industrial framework, in the context of a collaboration with Nestlé. A comparison is performed against an unpublished experimental study conducted for the characterization of flowability of several types of sucrose. Advantages and limitations of the numerical strategy provided are observed and discussed.

1.4 T-MAPPP project

The current work has been funded by the T-MAPPP (Training in Multiscale Analysis of MultiPhase Particulate Processes and Systems, FP7 PEOPLE 2013 ITN-G.A. n60) project. This project has been conceived in order to bring together European organizations leading in their respective fields of production, handling and use of particulate systems. T-MAPPP is an Initial Training Network funded by FP7 Marie Curie Actions with 10 full partners and 6 associate partners. The role of the network is to train the next generation of researchers who can support and develop the emerging inter- and supra-disciplinary community of Multiscale Analysis (MA) of multi Phase Particulate Processes. The goal is to develop skills to progress the field in both academia and industry, by devising new multiscale technologies, improving existing designs and optimising dry, wet, or multiphase operating conditions. One aim of the project is to train researchers who can transform multiscale analysis and modelling from an exciting scientific tool into a widely adopted industrial method; in other words, the establishment of an avenue able to increasingly link academic to real world challenges.

1.5 Layout of the monograph

The layout of the document is as follows: in Chapter 2, after a brief review of the state of the art in particle methods, the focus is put on those methods which are more consistently used for the prediction of granular flows behaviour, such as, the Discrete Element Method (DEM), the Particle Finite Element Method (PFEM), the Galerkin Meshless Methods (GMM) and the Material Point Method (MPM). The latter is the chosen approach, used and developed in this monograph. The choice is discussed and the details of the proposed formulations are provided. In Chapter 3 the theory of constitutive laws used in the current work is presented with their implementations under the assumption of finite strains. In Chapter 4 and Chapter 5 an irreducible and a mixed stabilized formulation, respectively, are presented and verified with solid mechanics benchmark examples. Then, in Chapter 6 the numerical model of MPM, presented in the previous chapters, is applied and validated (with experimental and numerical results available in the literature) against granular flow examples, such as, the granular column collapse and the rigid strip footing test. In Chapter 7 the MPM strategy is applied in an industrial framework. The numerical results are compared against experimental measurements performed for the assessment of the flowability performance of different types of sucrose. Finally, in Chapter 8 some conclusions are drawn, where observations and limitations of the numerical strategy are provided, and the bases for future research are outlined.

2 Particle Methods

Computer modelling and simulation are now an indispensable tool for resolving a multitude of scientific and challenging problems in science and engineering. During the last decades the importance of computer-based science has exponentially grown in the engineering field and, nowadays, it is widely adopted in the study of different processes because of its advantages of low cost, safety and efficiency over the experimental modelling. The numerical simulation of solid mechanics problems involving history-dependent materials and large deformations has historically represented one of the most important topics in computational mechanics. Depending on the way deformation and motion are described, existing spatial discretisation methods can be classified into Lagrangian, Eulerian and hybrid ones. Both Lagrangian and Eulerian methods have been widely used to tackle different examples characterized by extreme deformations. In this chapter, firstly, the most common numerical techniques used in the modelling of granular flows are presented. Then, the focus is put on the Material Point Method, which is the object of the present study.

2.1 Lagrangian and Eulerian approaches

In continuum mechanics two fundamental descriptions of the kinematic and the material properties of the body, under analysis, are possible. The first one is represented by the Lagrangian approach. In this case the description is made as the observer were attached to a material point forming part of the continuum. Lagrangian algorithms, traditionally employed in structural mechanics, make use of a moving deforming mesh dependent on the motion of the body and are distinguished by the ease with which the material interfaces can be tracked and the boundary conditions can be imposed. According to [32] three Lagrangian formulations can be defined:

- the Total Lagrangian formulation, where all the variables are written with reference to the undeformed configuration at the initial time

- the Updated Lagrangian formulation, where all the variables are written with reference to the deformed configuration at the previous time

- the Updated Lagrangian formulation, where all the variables are written with reference to the deformed configuration at the current time

Moreover, history-dependent constitutive laws can be readily implemented and, since there is not advection between the grid and the material, no advection term appears in the governing equations. In this regard, Lagrangian methods are more simple and more efficient than Eulerian methods. The greatest drawback of this approach is represented by the high distortion of the mesh and element entanglement when the material undergoes really large deformation, which makes more difficult to obtain a stable solution with an explicit integration scheme. The second approach lies on an Eulerian description, i.e., the observer is located at a fixed spatial point. Thus, Eulerian techniques, mostly employed in fluid mechanics, are characterized by the use of a fixed grid and no mesh distortion or element entanglement are observed neither in the case of very large deformation. On the other hand, due to its intrinsic nature, it is difficult to identify the material interfaces and the definition of history-dependent behaviour is computationally intensive if compared with Lagrangian methods. As can be seen, each of the two approaches has advantages and drawbacks; thus, depending on the problem to solve, one technique is preferable over the second one.

In the framework of granular flow modelling the Lagrangian viewpoint presents, in this context, a rather obliged choice, since the adoption of such a framework greatly simplifies the constitutive modelling and the tracking of the entire deformation process. In the case of mesh-based methods, the natural limitation of the Lagrangian approach is related to the deformation of the underlying discretisation, which tends to get tangled as the deformation increases. Massive remeshing procedures have proved to be capable of further extending the realm of applicability of Lagrangian approaches, effectively extending the limits of the approach well beyond its original boundaries. Nevertheless, while, on one hand, it is possible to alleviate the distortion of the mesh, on the other hand, additional numerical errors arise from the remeshing and the mapping of state variables from the old to the new mesh. In this regard, the Arbitrary Lagrangian–Eulerian method (ALE) [32], a generalization of the two approaches described earlier, has been developed in the attempt to overcome the limitation of the Total Lagrangian (TL) and Updated Lagrangian (UL) techniques when severe mesh distortion occurs by making the mesh independent of the material, so that the mesh distortion can be minimized. However, for very large deformation severe computational errors are introduced by the distorted mesh. Furthermore, the convective transport effects can lead to spurious oscillations that need to be stabilized by artificial diffusion or by other stabilization techniques. Such disadvantages make the ALE methods less suitable than other techniques which can be found in the literature.

In the current work, the Lagrangian framework is considered, but the focus is on the so-called particle methods, a series of techniques which represent a natural choice for the solution of granular flow problems involving large displacement, large deformation and history-dependent materials. The next section introduces a brief state of the art of the most common particle methods with their distinguished features and fields of application.

2.2 Particle methods. A review of the state of the art

Particle methods are techniques which have in common the discretisation of the continuum by only a set of nodal points or particles. According to [33], they can be classified based on two different criteria: physical principles or computational formulations. For those methods classified according to physical principles a further distinction is made if the model is deterministic or probabilistic; while according to the computational formulations, the particle methods can be distinguished in two subcategories, those serving as approximations of the strong forms of the governing partial differential equations (PDEs), and those serving as approximations of their weak forms. In Tables 2.1 and 2.2 the classification is graphically shown with a list of the main approaches which fall under each category.

| Physical principles | |

| Deterministic models | Probabilistic models |

| Discrete Element Method (DEM) | Molecular Dynamics |

| Monte Carlo methods | |

| Lattice Boltzmann Equation method | |

| Computational formulations | |

| Approximations of the strong form | Approximations of the weak form |

| Smooth Particle Hydrodynamics | Meshfree Galerkin Method: |

| Vortex Method | - Diffusive Element Method |

| Generalized finite Difference Method | - Element Free Galerkin Method |

| Finite Volume PIC | - Reproducing Kernel Method |

| - h-p Cloud Method | |

| - Partition of Unity Method | |

| - Meshless Local Petrov-Galerkin Method | |

| - Free Mesh Method | |

| Mesh-based Galerkin Method | |

| - Material Point Method | |

| - Particle Finite Element Method | |

In the following sections, a bibliographic review of the most common and widely used particle methods in granular flow modelling is presented. The first method to be presented is the Discrete Element Method. Then, the Smooth Particle Hydrodynamics, the Meshfree Galerkin Method, the Particle Finite Element Method and the Particle Finite Element Method 2 are briefly introduced. For each of those advantages and disadvantages are discussed. Finally, the Material Point Method and a meshless variation of it and their algorithms are extensively described.

2.2.1 The Discrete Element Method (DEM)

The numerical approach which considers the problem domain as a conglomeration of independent units is known as Discrete Element Method (DEM), developed by Cundall and Strack in 1979 [24]. DEM was initially used for studying of rock mechanics problems using deformable polygonal-shaped blocks. Later, it has been widely utilized to study geomechanics, powder technology and fluid mechanics problems. Each particle is identified separately having its own mass, velocity and contact properties and, during the calculation, it is possible to track the displacement of particles and evaluate the magnitude and direction of forces acting on them. The main distinction between DEM and continuum approaches is the assumption on material representation; in DEM every particles represents a physical entity, e.g., the single grains in the granular system, while in a continuum method particles take the place of material points, which have instead just a numerical purpose in the computation of the solution.

According to [24] the time step must be chosen in a way that disturbances from an individual particle cannot propagate further than their neighbours. Usually, in order to avoid significant instability in the granular system the time step should be smaller than a critical time step, called the Rayleigh time step.

DEM is a good example of numerical technique that treats the bulk solid as a system of distinct interacting bodies. Thus, with DEM it is possible to simulate interaction at the particle level (at a spatial scale which ranges from to , depending on the size of the grains) and, at the same time, to obtain an insight into overall response, bulk properties such as stresses and mean velocities [34]. Therefore, it can provide a clear explanation on particle-scale behaviour of granular solids to characterize bulk mechanical responses, as it is done in several contributions [35,5]. Moreover, this technique is really useful and interesting in the research field of granular matter since DEM can be seen as a tool for performing numerical experiments that allow contact-less measurements of microscopic quantities that are usually impossible to quantify using physical experiments. Discrete element modelling has been also used extensively to analyse various handling and processing systems that deals with multiple bulk solids [36,37,38]. However, the extremely high computational cost, proportional to the number of particles, leads to the limitation of considering relatively small system sizes and idealized geometries.

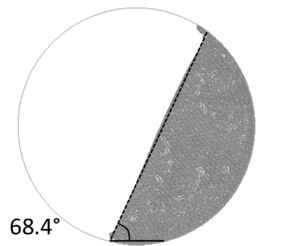

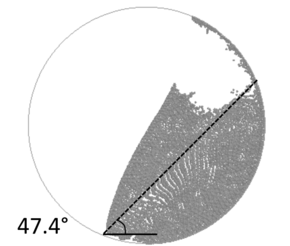

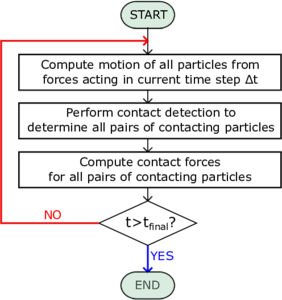

The schematic flowchart, which has to be followed in order to execute a DEM calculation [39] at each time step, is displayed in Figure 2.1a. Even if the algorithm looks to be straightforward to run and easy to implement, the computational cost is proportional to the number of discrete elements and to their shapes. Thus, simplified assumptions have been made in the mathematical models in order to reduce the computational efforts. The primary idealized factor in DEM simulations is the shape of particles which is considered as spheres to simplify the contact detection process, which is the most time consuming step in DEM simulations. Among diverse physical properties of individual particles in particulate materials, the shape and morphology play important roles in shear strength and flowability of the bulk. In order to improve this aspect several approaches have been utilized in DEM, such as, clumped spheres [40], polyhedral shapes [41], super-quadric function [42]. However, in order to obtain accurate results the computational cost may arise significantly. Another important step in DEM is to realistically simulate the physical impact between particles. This is usually approximated by defining spring and dashpots between contacting surfaces, as it is done in the linear-spring dash-pot models [43] or the Hertzian visco-elastic models. In the literature other contact models can be found, such as meso-scale models [35,44,45] or realistic contact models [46,47,48], which can provide a high accuracy both at the particle and bulk level, but valid only for the limited class of materials they are particularly designed for.

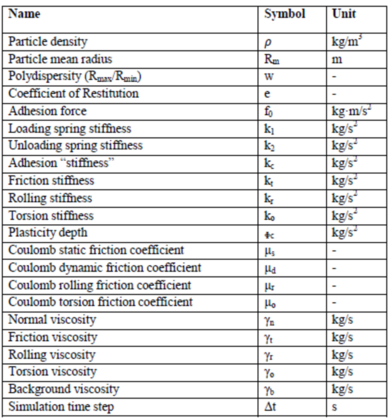

Moreover, for a proper understanding of a process and to study realistic behaviour through DEM simulations, the input parameters, listed in Figure 2.1b, play a vital role. The input parameters are often assumed without careful assessment or calibration which often leads to unrealistic behaviours and erroneous results. Designing of equipment or of a process route with an un-calibrated DEM model may lead to serious handling and processing operations such as segregations, unexpected wear, irregular density of products, flow blockages and etc. Thus, a correct definition of the input parameters by experimental characterization and/or calibration, using particle-level tests, directly affect the reliability of the final response at the bulk level. However, this might result in an extremely time and cost consuming procedure, that not always it is possible to perform for a lack of time and/or money.

In conclusion, DEM is a valid and useful numerical tool for research in the granular matter field and development of new contact models. However, the drawbacks aforementioned in the current section make the DEM still not an easy and limited tool to use in the engineering and industrial framework, mainly when real scale systems are under study.

| |

| (a) | |

| |

| (b) | |

| Figure 2.1: Dem algorithm (a), with the time instant and the time interval, and input parameters in DEM model (b). | |

2.2.2 The Smoothed Particle Hydrodynamics

Among the methods which serve as approximations of the strong of PDEs, the Smoothed Particle Hydrodynamics (SPH) [49,50] is one of the earliest particle methods in computational mechanics. It was initially designed for solving hydrodynamics problems [51,52], such as astrophysical applications [53,54,55]; later, SPH has been also applied to solid mechanics problems involving impact, penetration and large deformation of geomaterials [56,57,58,59] or compressible and incompressible flow problems [60,61]. The PDE is usually discretized by a specific collocation technique: the essence of the method is to choose a smooth kernel which not only smoothly discretized a PDE, but also furnishes an interpolant scheme on a set of moving particles. Even if the method is widely used by the computational mechanics community, it is well established in the literature that the SPH suffers of some pathologies such as tensile instability [62,63], lack of interpolation consistency [64,65], zero-energy mode [66] and difficulty in enforcing essential boundary condition [67,68]. To solve the aforementioned fundamental issues several improvements have been provided through the years. To mitigate the tensile instability and the zero-mode issues the stress point approach [63,69] has been proposed. To correct completeness, or consistency, closely related to convergence, the use of corrective kernels are considered; for instance Liu et al. [64] proposed new interpolants named the Reproducing Kernel Particle Method and many other approaches can be found in the literature [70,71,72] addressing this shortcoming. To enforce essential boundary conditions it is worth mentioning the contribute of Randles and Libersky [67], where the so-called ghost particle approach is proposed.

2.2.3 The Meshfree Galerkin Methods

The Meshfree Galerkin Methods, unlike the SPH, were mainly developed only in the early of 1990s. The first meshless methods appeared in the literature are represented by the Diffusive Element Method [73], where moving least square (MLS) interpolants [74] are employed, and the Reproducing Kernel Particle Method (RKPM) [64,75], defined in the attempt to provide a corrective SPH. Later, other techniques were proposed, such as the Element Free Galerkin Method (EFGM) [76], in which the MLS interpolants are for the first time used in a Galerkin procedure, or the Partition of Unity Method [77], where a partition of unity is taken and multiplied by any independent basis. Usually most meshfree interpolants do not satisfy the Kronecker delta property 1 and the impossibility of a correct imposition of the essential boundary conditions represents one of the principal bottleneck of these approaches. Some remedies for the enforcement of the EBCs are given by the Lagrange Multipliers and Penalty method [78], the Transformation method [79], the Boundary singular kernel method [74] and the Coupled finite element and particle approach [80]. Most Meshfree Galerkin Methods make use of background grid to locate the quadrature points to integrate the weak form. From this aspect some problems in terms of accuracy as well as invertibility of the stiffness matrix may arise, due to the arbitrariness in locating the Gauss quadrature points. If these points are not enough in a compact support or are not evenly distributed spurious modes may also occur. In order to completely eliminate quadrature points some approaches have been proposed in the literature, e.g., the one proposed by Chen et al. [81] based on a stabilized nodal integration method. Despite the typical drawbacks of Meshfree Galerkin Methods, e.g., the aforementioned issue of quadrature integration and the higher computation cost in comparison with standard FEM, during the last decades meshless methods have been increasingly used to solve applied mechanics problem due to some key advantages which distinguish them from other techniques. For instance to mention some of them, in these methods the connectivity changes with time as they do not have a fixed topological data structure, the accuracy can be controlled easily given a h-adaptivity procedure and the meshfree discretisation can provide accurate representation of geometric object. Initially, meshfree methods have been used to address the challenging field of computational fracture mechanics. In this regards, the EFGM and the Partition of Unity Methods have been applied to crack growth and propagation problems [82,83,84]. The great advantage of not using a remeshing procedure has been also exploited in the application of large deformation problems; in particular, it is worth mentioning the use of the RPKM to metal forming, extrusion [85] and soil mechanics problems [86,87]. Meshfree methods have been also extensively applied to simulation of strain localization problems [88] since meshfree interpolants can successfully reduce the mesh alignment sensitivity in the formation of the shear bands.

(1) Let us define the Lagrange polynomials of degree , . satisfy the Kronecker delta property if

|

|

(2.1) |

i.e., . With regards to the shape functions, these ones lack of the Kronecker delta property when the weight function associated with the nodal points is not zero at the location of nodal point of interest .

2.2.4 The Particle Finite Element Method

The Particle Finite Element Method (PFEM) is a particle method which falls under the category of mesh-based Galerkin approaches. In PFEM the domain is modelled using an Updated Lagrangian formulation and the continuum equations are solved by means of a FEM approach on the mesh built up from the underlying node, also called . The main feature of the method is based on the employment of a fast remeshing procedure to relieve the typical issue of high distortion of the mesh and a boundary recognition method, i.e., the alpha-shape technique [89], needed to define the free surfaces and the boundaries of the material domain. Given an initial mesh, the remeshing procedure can be used arbitrarily at every time step [90] or when the mesh starts affecting the accuracy of the numerical solution, as in the case of explicit formulations [91]. This Lagrangian technique was first developed for the simulation of free surface flows and breaking waves [92,93], and then successfully adapted for structural mechanics problems involving large deformations [94,95], for the simulation of viscoplastic materials [96,91,97,98,99,100], in geomechanics [101,102] and Fluid-Structure Interaction (FSI) applications [103,104,105]. Although the method has broad capabilities, some disadvantages come from the use of remeshing procedures [106,107]. In the practice, although possible, the application of PFEM in problems with elastic or elasto-plastic behaviour faces difficulties related to the storage of historical variables, since information on the integration points is not preserved and needs to be remapped at the moment of remeshing. Moreover the alpha-shape technique, and the remeshing itself, lead to intrinsic conservation problems related to the arbitrariness of the reconnection patterns [108]. Last but not least, the characteristics of the remeshing approach at the base of the PFEM make it very hard to parallelize, thus, limiting the possibility of the method in terms of computational efficiency.

2.2.5 The Particle Finite Element Method 2

A second generation of PFEM (PFEM2) has been recently introduced [109,110,111,112], which tries to repair to the shortcomings observed in the previous version. PFEM2 is a hybrid particle method, which exploits the combination of both the Eulerian and Lagrangian approaches, as in the Material Point Method. The method has been tested in the analysis of interaction between different materials, such as, incompressible multifluids and fluid-structure interaction [113], and simulation of landslides and granular flows problems [114]. This technique is based on the use of a set of Lagrangian particles, in order to track properties of the continuum, and by a fixed finite element mesh, employed for the solution of the governing equations. Moreover, a projection of the data between the two spaces is performed during the calculation of a time step in order to keep updated the kinematic information between the particles and the nodes of the Eulerian mesh. One of the main differences which distinguishes PFEM2 from the Material Point Method lies on the definition of Lagrangian particle itself. In the case of PFEM2, the particles represent material points without a fixed amount of mass; in order to guarantee a good particle distribution in the computational domain, the number of particle might change during the simulation time. These Lagrangian entities do not represent integration points, but are just used with the purpose of convecting all the historical material properties and kinematic information through the simulation. This feature makes PFEM2 particularly adapted for the modelling of incompressible fluids, with Newtonian and non-Newtonian rheology and FSI problems.

2.3 The Materal Point Method

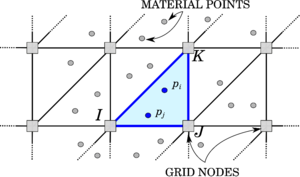

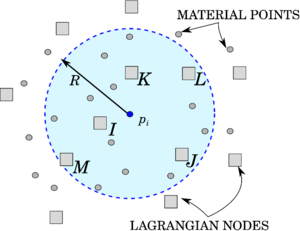

An alternative particle method proposed in the literature is represented by the Material Point Method (MPM) [115,28], which is object of study of this monograph. The Material Point Method (MPM) is a particle-based method, whose origin goes back to the paper of [116], where the particle-in-cell method (PIC), a technique for the solution of fluid flow problems, was proposed for the first time. Some decades after, in the works of [115,28], the PIC method is redefined within the solid mechanics framework, and after that, it is known to the computational community with the name of Material Point Method. MPM combines a Lagrangian description of the body under analysis, which is represented by a set of particles, the so-called material points, with the use of a computational mesh, named background grid, as can be observed in Figure 2.2.

|

| Figure 2.2: MPM. The shape functions on the material point are evaluated using FE shape function of element I-J-K. |

This distinctive feature allows one to track the deformation of the body and retrieve the history-dependent material information at each time instant of the simulation, without committing mapping information errors, typical of methods which make use of remeshing techniques. This makes the method particularly attractive for the solution of problems, characterized by very large deformations and by the use of complex constitutive laws [117,118]. For instance, the method has been extensively used for geotechnical problems [119,120,118] for its capabilities in tracking extremely large deformation while preserving material properties of the material points.

In the key works of Sulsky and co-workers [115,28], the MPM has been applied for the first time in the solid mechanics framework. Even if through the original MPM it was possible to solve complex problems involving, for instance, contact [121], interaction between different materials [122,123] and the use of history-dependent material laws [115], it was observed that the first version of MPM suffers from some intrinsic shortcomings. Indeed, due to the use of piece-wise linear shape functions, the latter are only locally defined and their gradients are discontinuous. This implies that a material point on the cell boundary would not be covered by the local shape functions defined within the respective cells around the particle. This would produce a noise in the numerical solution, which is called cell-crossing error. Recently, many improvements to the original MPM have been provided to alleviate the cell-crossing noise and to have a more efficient and algorithmically straightforward evaluation of grid node integrals in the weak formulation. The Generalized Interpolation Material Point method (GIMP) [124] represents the first attempt to provide an improved version of the original MPM. The essence of this method is based on the definition of a characteristic function which has to satisfy the partition of unity criterion, i.e.,

|

|

(2.2) |

The particle characteristic function defines the spatial volume occupied by the particle as

|

|

(2.3) |

where and are the current particle domain and the current domain occupied by the continuum, respectively. Moreover, since a material property can be approximated by its particle value as

|

|

(2.4) |

acts as a smoothing of the particle properties and it determines the smoothness of the spatial variation. The full version of GIMP requires integration over the current support of , which deforms and rotates according to the deformation of the background grid. To do that, a tracking of the particle shape is mandatory, but in a multi-dimensional problem this could be very difficult to accomplish. Thus, an alternative version of the GIMP is represented by the uniform GIMP (uGIMP), where shear deformation and rotation of the particles are neglected. The uGIMP assumes that the sizes of particles are fixed during the material deformation. In this way, the particle characteristic function, whose support may overlap or leave gaps for very large deformation, is no longer able to satisfy the partition of unity criterion, and, thus, the ability of computing rigid body motion is lost. Therefore, the uGIMP is unable to completely eliminate the cell-crossing error.

In the attempt to improve the issues left by the GIMP, the Convected Particle Domain Interpolation technique (CPDI) [125] is proposed. In the CPDI the particle has an initial parallelogram shape and a constant deformation gradient is assumed over the particle domain. This technique is a first-order accurate approximation of the particle domain . Even if in the CPDI a more accurate approximation of is obtained, the issues of overlaps and gaps are not overcome. Only with the second-order Convected Particle Domain Interpolation (CPDI2) [126], an enhanced CPDI, which provides a second-order approximation of the particle domain, these issues are totally corrected. It is also worth mentioning the Dual Domain Material Point Method (DDMPM) [127], an alternative technique which is able to definitely eliminate the cell-crossing error. Unlike the GIMP or CPDI, the DDMPM does not make use of particle characteristic functions and the issue of tracking the particle domain through the whole simulation is avoided. The essence of this technique relies on the use of modified gradient of the shape function, defined as follows

|

|

(2.5) |

where is the gradient of the shape function evaluated as in the original MPM, is the gradient from the node-based calculation as used in FLIP ( FLuid-Implicit Particle)[128].

Most MPM codes make use of explicit time integration, due to the ease of the formulation and implementation [115,129,130]. Explicit methods are preferable to solve transient problems, such as impact or blast, where high frequencies are excited in the system [131,132,121]. However, when only the low-frequency motion is of interest, the adoption of an implicit time scheme may reduce the computational cost in comparison to the employment of an explicit time scheme [133]. Some implicit versions of MPM can be found in the literature. For instance, Guilkey [134] exploits the similarities between MPM and FEM in an implicit solution strategy. Beuth [135] proposes an implicit MPM formulation for quasi-static problems using high order elements and a special integration procedure for partially filled boundary elements. Sanchez [136] presented an implicit MPM for quasi-static problems using a Jacobian free algorithm and in [137] a GIMP method is used together with an implicit formulation.

In order to assess the features of MPM, as reference for a comparison, a standard Lagrangian FEM is chosen. In Table 2.3, the two methods are compared and a list of differences is made, according to the basic formulation, computational efficiency and computational accuracy. It is observed that in the small deformation range the MPM has a lower accuracy and efficiency than a Lagrangian FEM. Nevertheless, the FEM procedure shows its advantageous use only in a narrow range of strain magnitude, established by a critical deformed configuration for which the element quality is seriously compromised, which may cause a drastic deterioration of accuracy or even the end of the computation. In this regard, it is evident that MPM finds its natural field of application in large deformation problems. However, it is important to highlight that an extra computational cost is expected in MPM compared to FEM. This is due to additional steps in the MPM algorithmic procedure, in order to be able to track the kinematic and historical variables through the deformation process, and to a number of material points higher than the number of Gauss points normally employed in a FEM simulation.

| STANDARD LAGRANGIAN FEM | MPM |

| BASIC FORMULATION | |

| Gauss quadrature | Particle quadrature |

| The Lagrangian computational mesh is attached to the continuum during the whole solution process | No fixed mesh connectivity is required |

| Higher accuracy and efficiency for small deformations | Lower accuracy and efficiency of the MPM for small deformations |

| For large deformations accuracy rapidly deteriorates and computational cost increases dramatically due to mesh distortion and the need for remeshing | It naturally deals with large deformation problems |

| Contact between different bodies can be modelled only by applying a contact technique | Unphysical material interpenetration and non-slip contact constraint are inherent in the MPM |

| COMPUTATIONAL EFFICIENCY | |

| Mass and momentum are carried by the mesh nodes and they are calculated only at the beginning of the analysis. Further Gauss points move according to the mesh such that it is not necessary to update their positions and velocities | Additional steps have to be performed such as mapping of particle info (mass and momentum) on the grid and the update of particle info at the end of the Lagrangian step |

| Less Gauss points per element | Minimum number of particles per element higher than number of Gauss points per element |

| In explicit time scheme, the critical time step decreases with the element deformation | In MPM the characteristic element length does not change, thus, the critical time step size in MPM is constant |

| COMPUTATIONAL ACCURACY | |

| The Gauss quadrature can integrate accurately the weak form | The particle quadrature is less accurate than the Gauss one for integrating the weak form |

| For large deformations, in order to avoid element entanglement, a remeshing technique has to be adopted. However, this can lead to conservation issues of mass, momentum and energy. Further, the remapping of material properties of history-dependent material will result in significative errors | The original MPM suffers from a cell crossing instability |

As earlier discussed, the MPM is a particle method, whose advantages are evident in applications at large strain and displacement regime. Moreover, the MPM is characterized by some features which make this technique able to overcome all the typical disadvantages of other particle methods, listed in the previous sections. MPM does not employ any kind of remeshing procedure, the calculation is performed always at a local level, allowing an easy adaptation of the code to parallel computation and a good conservation of the properties. A conservation of the mass is also guaranteed during the whole simulation time, as the total mass is distributed between the material points representing the volume of the entire continuum under study. A remapping of the state variables is avoided and the employment of complex time dependent constitutive laws can be used without committing any mapping error. In addition, since this technique is a grid-based method all the issues, related to the meshless methods, such as, lack of interpolation consistency and difficulties in enforcing the Essential Boundary conditions are avoided. Last but not least, MPM is a technique defined in the continuum mechanics framework, thus, it can be easily applied to real scale problems at a not prohibitive computational cost. Given the fulfillment of the aforementioned features, MPM represents a suitable choice for the solution of real scale large deformation problems and particularly attractive for the modelling of granular flow problems.

2.4 The Material Point Method in Kratos Multiphysics

In the current work, an implicit MPM is developed in the multi-disciplinary Finite Element codes framework Kratos Multiphysics [29,30,31]. Two formulations are investigated: a displacement-based [138] and a mixed displacement-pressure (u-p) [139,140] formulations, presented in Chapters 4 and 5, respectively. In both the numerical strategies, the original version of the MPM is implemented, i.e., a particle integration is adopted, where the particle mass is assumed to be concentrated only on one spatial point, the particle position. As earlier discussed, this may lead to the so-called cell-crossing error; however, it is demonstrated that GIMP method is not able to definitely fix this issue and only other more computationally expensive techniques, such as, the CPDI2 and the DDMPM can remarkably mitigate this inherent error. In this work, it is made the choice to focus on the capabilities of the method in the modelling of granular flows under large deformation and large displacement regime and to leave, as future work, the exploration and investigation of other versions of MPM, which can improve the accuracy of the numerical results. The MPM in Kratos Multiphysics is developed in an Updated Lagrangian finite deformation framework and the matrix system to be solved is built-up from taking into account the contribution of each material point, to be considered as integration point, as well. In the initialization of the solving process, the initial position of the material points is chosen to coincide with the Gauss points of a FE grid and the mass, which remains constant during the simulation, is equally distributed between the material points, falling, initially, in the same element. At each time step, the governing equations are solved on the computational nodes, while history dependent variables and material information are saved on the particles during the entire deformation process. Thus, in the MPM the material points shall be understood as the integration points of the calculation, each carrying information about the material and kinematic response. Each material point represents a computational element with one single integration point (the material point itself), whose connectivity is defined by the nodes of the elements in which it falls. In Figure 2.2, for instance, the case of the i-th material point, which falls in a triangular element with a connectivity of nodes and , is depicted. In the evaluation of the FEM integrals, the shape functions are evaluated at the material point location on the basis of the grid element the material point falls into (Figure 2.2). At the end of every time step, in order to prevent mesh distortion, the undeformed background grid is recovered, i.e., the nodal solution is deleted.

In what follows, the algorithm of MPM for an implicit time scheme discretization is presented in detail.

2.4.1 MPM Algorithm

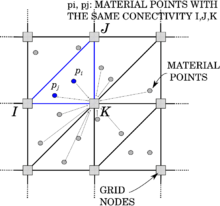

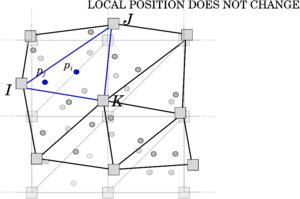

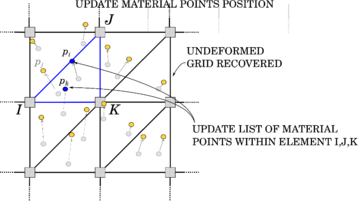

Traditionally, the MPM algorithm is composed of three different phases [28], as graphically represented in Figure 2.3 and below described:

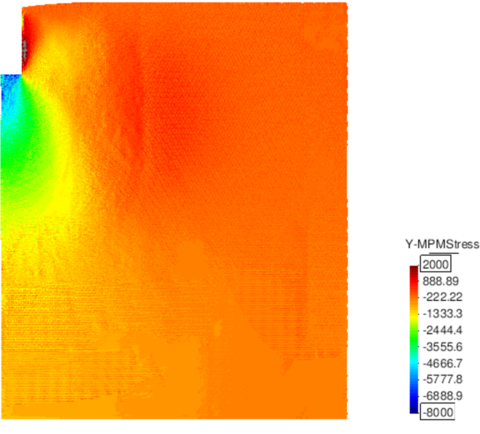

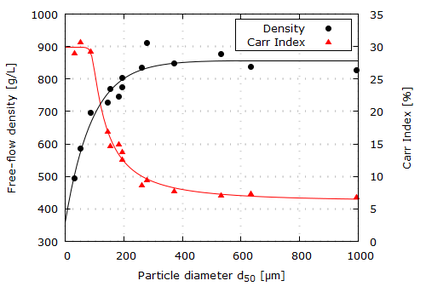

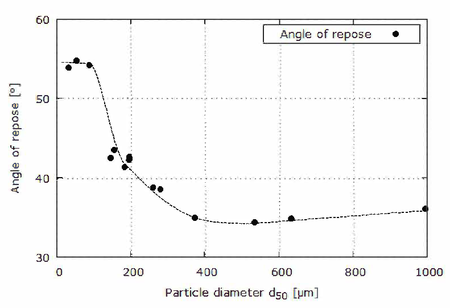

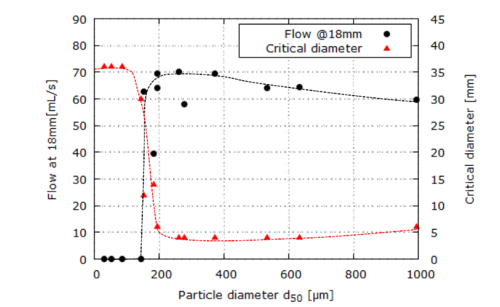

- Initialization phase (Fig.2.3a): at the beginning of the time step the connectivity is defined for each material points and the initial conditions on the FE grid nodes are created by means of a projection of material points information obtained at the previous time step ;