Abstract

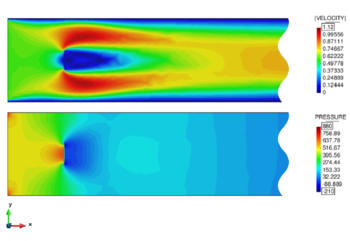

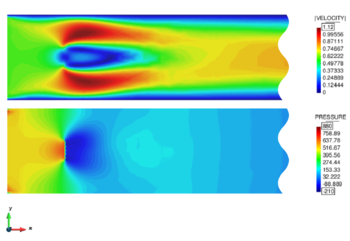

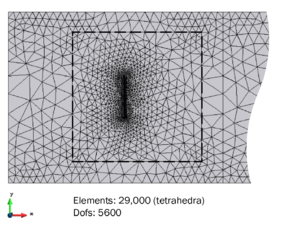

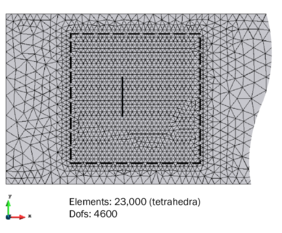

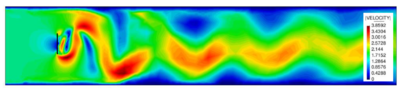

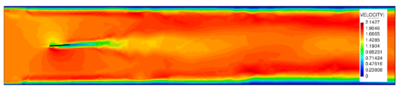

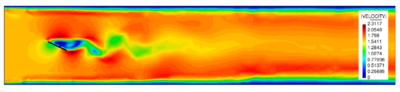

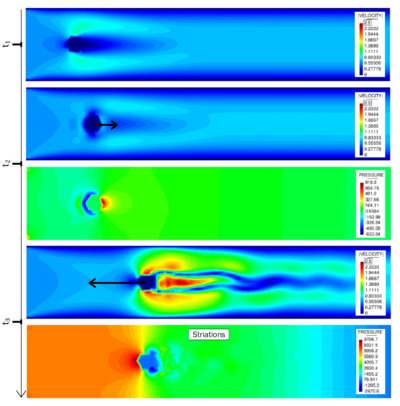

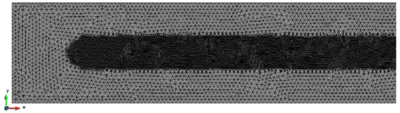

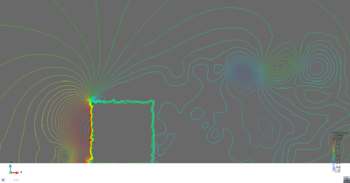

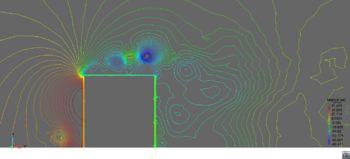

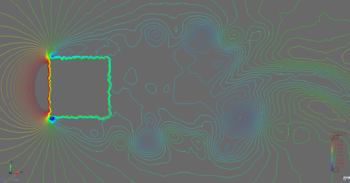

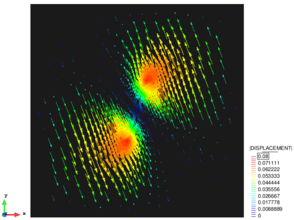

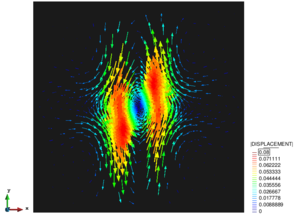

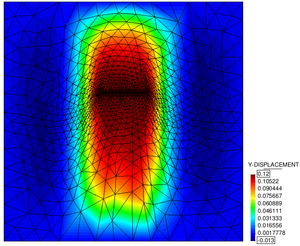

Designing large ultra-lightweight structures within a fluid flow, such as inflatable hangars in an atmospheric environment, requires an analysis of the naturally occurring fluid-structure interaction (FSI). To this end, multidisciplinary simulation techniques may be used. These, however, must be capable of dealing with complex shapes and large deformations as well as with challenging phenomena such as wrinkling or folding of the structure. To overcome such problems, the method of embedded domains may be used. In this work we discuss a new solution procedure for FSI analyses based on the method of embedded domains. In particular, we address the following questions: How can the interface be tracked in the embedded approach, what does the subsequent solution procedure look like, and how do both compare to the well-known Arbitrary Lagrangian-Eulerian (ALE) approach? In this context, a level set technique as well as different mapping and mesh-updating strategies are developed and evaluated. Furthermore, the solution procedure of a completely embedded FSI analysis is established and tested using different small- and large-scale examples. All results are finally compared to results from an ALE approach. It is shown that the embedded approach offers a powerful and robust alternative for the FSI analysis of ultra-lightweight structures with complex shapes and large deformations. With regard to solution accuracy, however, clear limitations are identified.

Acknowledgements

This monograph was written at the International Center for Numerical Methods in Engineering (CIMNE, Barcelona) based on a joint research project with the Technical University Munich (TUM) during the period from May to December 2013. During that time, we gained vast experience in numerical methods, software development and their practical application to solve complex engineering problems within a dynamic and innovative research team. The project, however, would not have been possible without the tremendous support of Riccardo Rossi (CIMNE) and Roland Wüchner (TUM). Both of them contributed decisively to the success of this work and are responsible for a challenging but exciting topic branching into the world of FSI simulations.

Roland Wüchner dedicated himself to the subject with great interest, which he showed during numerous intercontinental sessions in which he often sacrificed his valuable evenings for in-depth discussions. He reserved many hours to shape the subject in detail in order to guarantee a benefit for all contributors. Moreover, he constantly motivated us by guiding and refining all our ideas. The same is true for Riccardo Rossi, who offered fantastic technical and personal support in all questions that arose. Many of the ideas presented here were driven by his support or initiated by him. Thank you both very much!

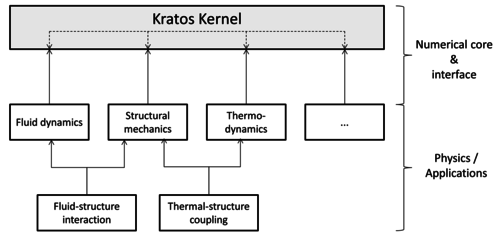

Furthermore, we want to thank Pooyan Dadvand and Jordi Cotela, who provided us with the implementation of many functionalities in Kratos. Whatever problem we had with Kratos, they patiently investigated it until it was solved. In general, we acknowledge the support of every single member of the Kratos and GiD teams.

Finally, all the authors wish to thank the ERC for the support under the projects uLites FP7-SME-2012 GA n.314891 and NUMEXAS FP7-ICT-611636.

1 Introduction

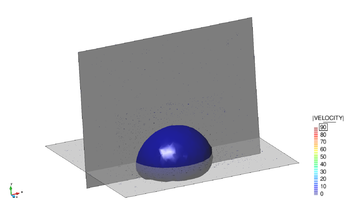

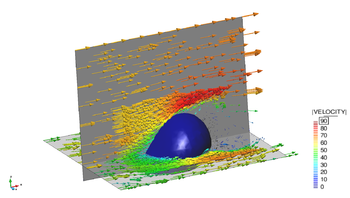

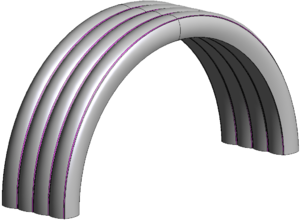

Objects of interest within the scope of this work are inflatable, mobile, lightweight hangars for applications in the aerospace industry (see Figure 1). The purpose of such a hangar is to shelter aircraft ranging from smaller propeller machines up to large passenger aircraft, in both civil and military service. The advantage of such a structure is obvious: it offers the possibility to build up and position a hangar flexibly and quickly without permanently occupying expensive and scarce space. This allows a fast reaction to current needs, such as protecting single aircraft from the weather or setting up a provisional operating base that is shielded from external surveillance.

Figure 1: Example of an inflatable hangar (adopted from [1])

In order to ensure functionality and safety, prior tests of the structure's behavior within the surrounding fluid, i.e. an air flow, are essential. Here the investigation of the respective fluid-structure interaction is of particular importance, because the lightweight concept is strongly affected by loads such as wind. Physical tests in this regard are, however, very costly, since the lightweight concept typically does not allow the model to be scaled down. This means that a physical test always requires a very cost-intensive full-scale model. That is why there is particular interest in a powerful as well as resource- and cost-efficient virtual design analysis, which in this case means a computational analysis of the fluid-structure interaction (FSI).

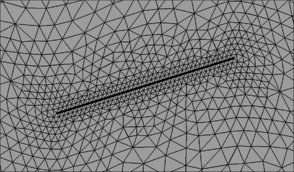

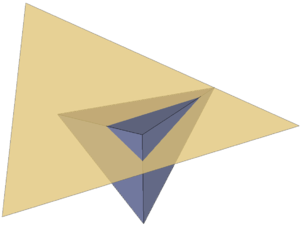

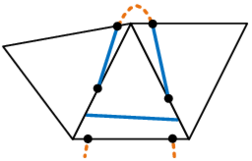

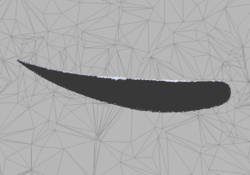

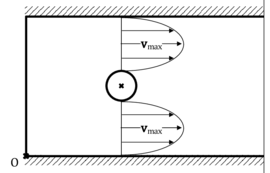

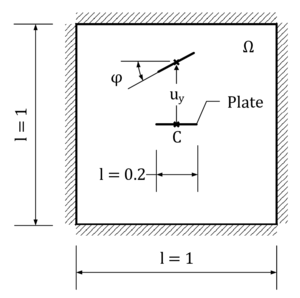

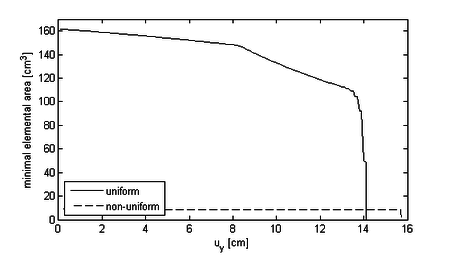

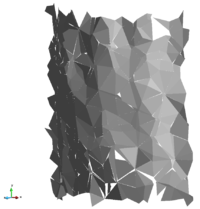

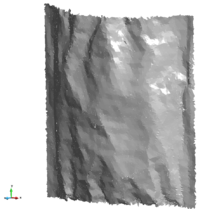

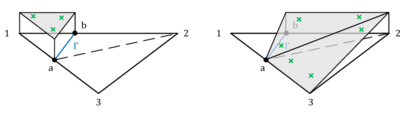

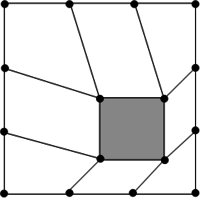

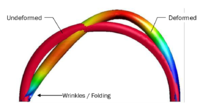

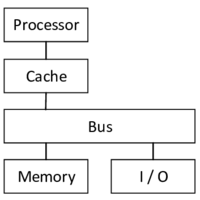

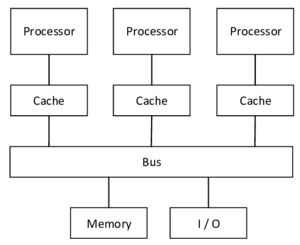

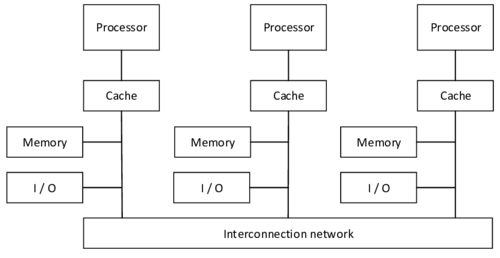

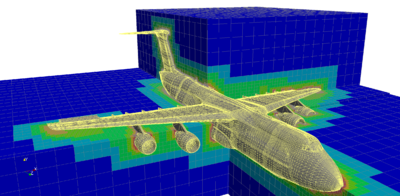

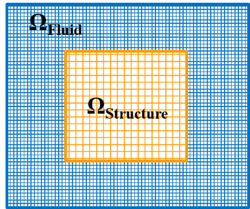

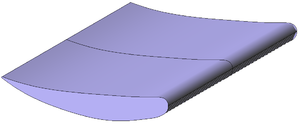

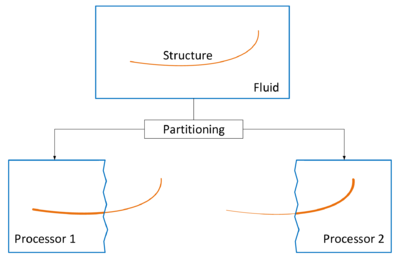

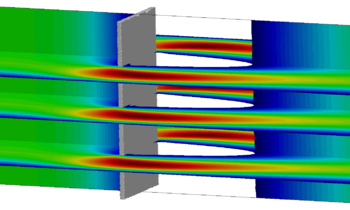

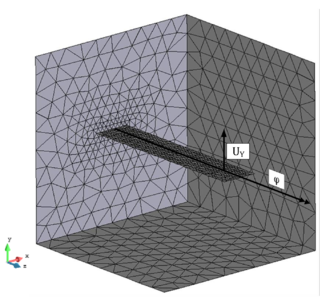

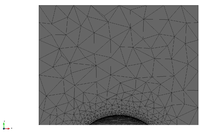

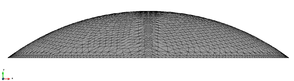

A requirement for the corresponding coupled simulation, though, is the ability to deal with large deformations as well as with wrinkling or folding. To this end, a simulation technology based on the method of embedded domains(1) has been developed at the International Center for Numerical Methods in Engineering (CIMNE). The method of embedded domains is an alternative methodology for the solution of partial differential equations. As such, it offers the interesting advantage of dealing efficiently with complex boundaries and large deformations, whereas a body-fitted technique such as the Arbitrary Lagrangian-Eulerian method (ALE) relies on an often expensive moving discretization of the domain (“moving mesh”). Particularly in the scope of fluid-structure interaction analysis, the embedded method allows the different physical entities to be handled separately without having to account for a specific interface model. Instead, different overlapping discretizations (“embedded meshes”) are used. See Figure 2 for an illustration of the different approaches.

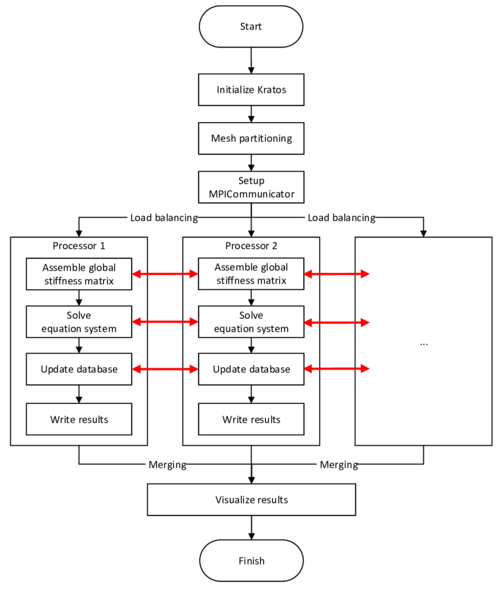

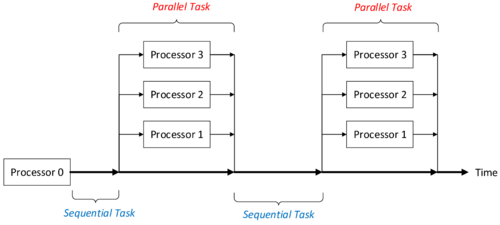

In this context, the goals of the present monograph are 1) the further development of the method of embedded domains developed at CIMNE, such that it may be used for large-scale CFD and FSI problems, and 2) the evaluation of the method in comparison with the well-known ALE approach. To this end, the work was split into two key topics: first, the interface tracking in the embedded case, and second, the setup and comparison of the FSI solution procedure in both cases. For the interface tracking in the embedded method, level set techniques were to be implemented and verified. In terms of the solution procedures, the goal was to develop and implement different mesh-updating strategies for the ALE case as well as mapping techniques for the embedded method. Subsequently, different test scenarios of coupled fluid-structure problems were to be developed, set up and simulated in order to finally compare the methods. For the sake of software modularity, the focus was set on partitioned solution techniques. Furthermore, in order to keep the computational costs of the coupled analyses as low as possible, parallelization techniques were to be applied throughout the entire implementation phase.

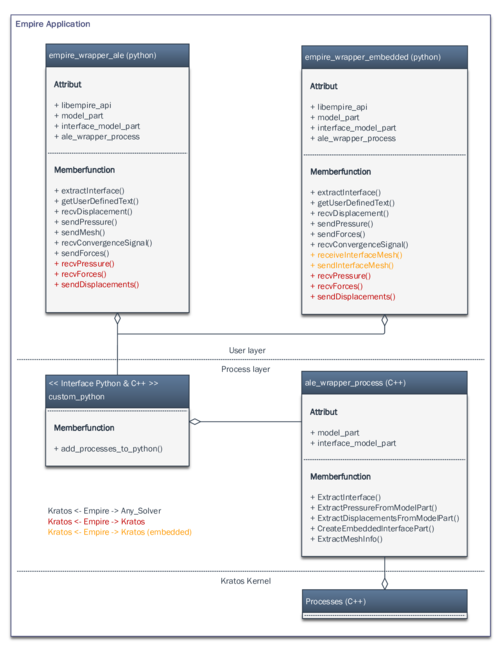

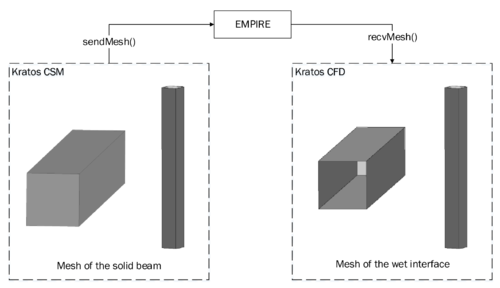

The software environment was given by the CIMNE in-house multiphysics finite element solver “Kratos”. For the partitioned analysis, the simulation environment EMPIRE (“Enhanced Multi Physics Interface Research Engine”, Technical University Munich) was to be used in addition. An additional requirement arising from this context was therefore to set up an interface between both software frameworks, in order to be able to use the full functionality of EMPIRE together with all features of Kratos. In the long term, the goal is to use Kratos via EMPIRE in a common partitioned FSI environment together with the structural solver “Carat++”, developed at the Technical University Munich (TUM). This shall allow the capabilities of both software packages, and hence the knowledge of both research groups involved, to be combined and consolidated.

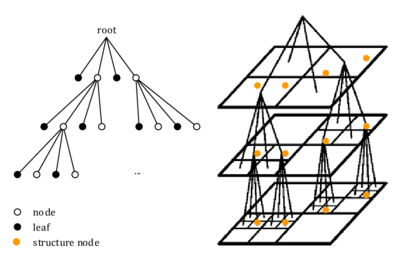

Based on all the aforementioned goals, the present monograph is organized as follows. The first section (chapters 2 to 4) gives the theoretical background for the analysis of coupled fluid-structure problems. It starts with a discussion of the single-field problems, which are subsequently extended and merged into the fundamentals of coupled fluid-structure analyses. In both cases, the method of embedded domains stated above and newly implemented in Kratos is introduced in detail. Part of the theoretical framework is also a discussion of how the computational efficiency may be improved in both approaches. This includes the presentation of parallelization techniques as well as of spatial search algorithms applied specifically in the embedded method.

The second section (chapters 5 and 6) then describes the different software packages used as well as the corresponding software interface. The contents are presented in a very application-oriented way in order to provide documentation for future users.

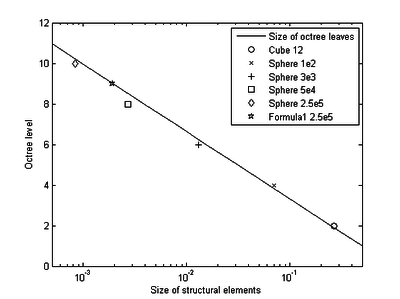

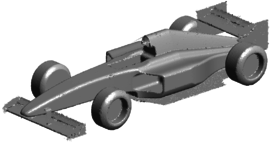

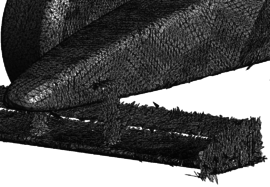

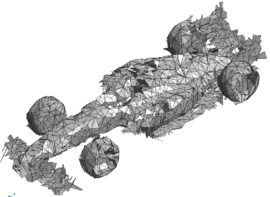

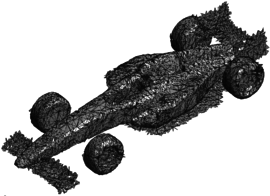

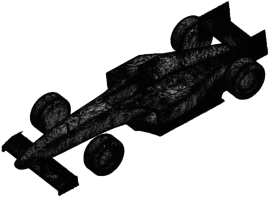

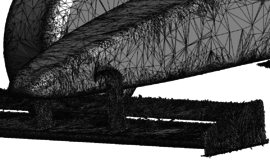

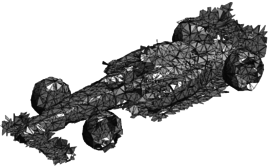

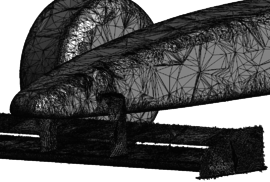

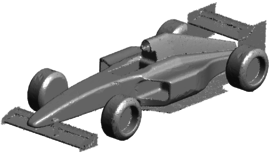

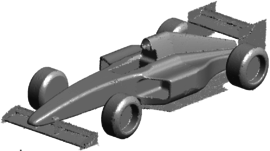

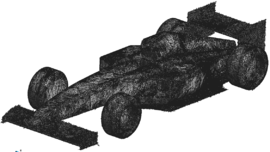

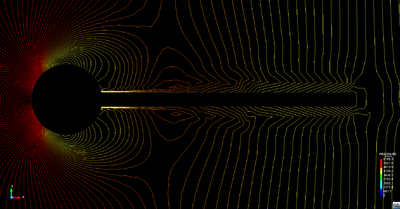

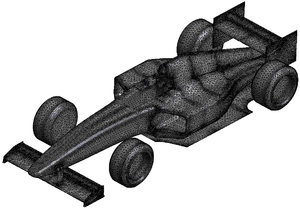

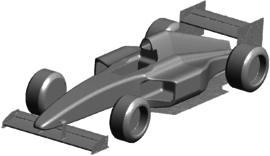

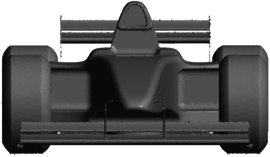

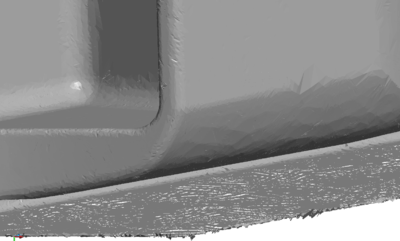

The third section (chapter 7) elaborates the first key topic of the present monograph, i.e. the interface tracking in the scope of the embedded method. Here we answer the questions of how to track the interface with two overlapping meshes and how this affects the corresponding solution quality. To this end, different geometries, ranging from a generic structure to a large-scale Formula One car, are investigated. Furthermore, in this context different fluid problems are simulated with the embedded method and subsequently evaluated.

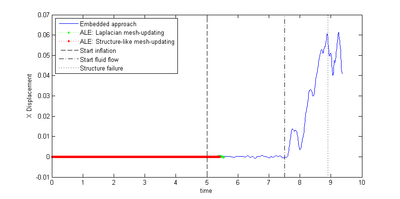

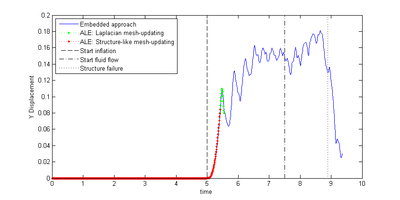

Having discussed how to track the interface in the embedded case, and knowing the situation in a body-fitted approach, the fourth section (chapter 8) is dedicated to the second key topic of this monograph, i.e. the actual solution procedure for fluid-structure simulations using either of the aforementioned methods. Here the different process steps that were developed are elaborated and evaluated in detail, finally giving a complete overview of the entire solution process in both cases. With all implementations at hand, two examples of fully coupled problems are then presented, which eventually allow a comparison of the two approaches.

Finally all the results are briefly summarized and contrasted to the above stated goals.

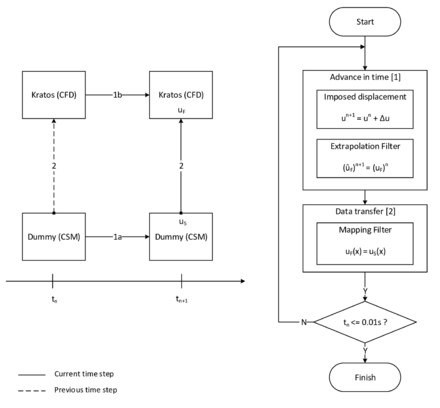

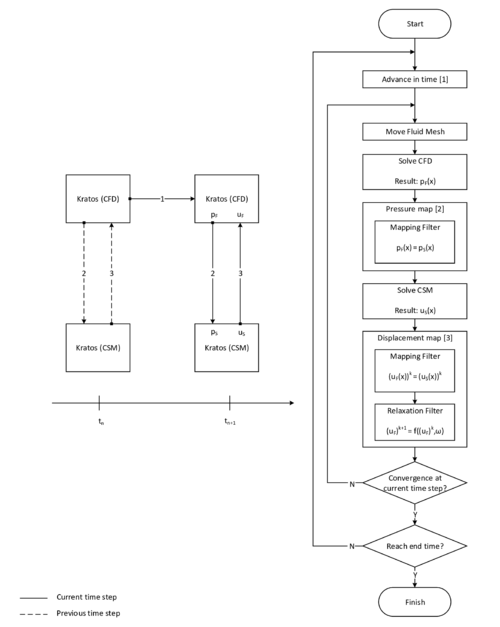

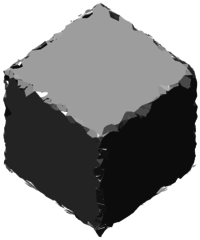

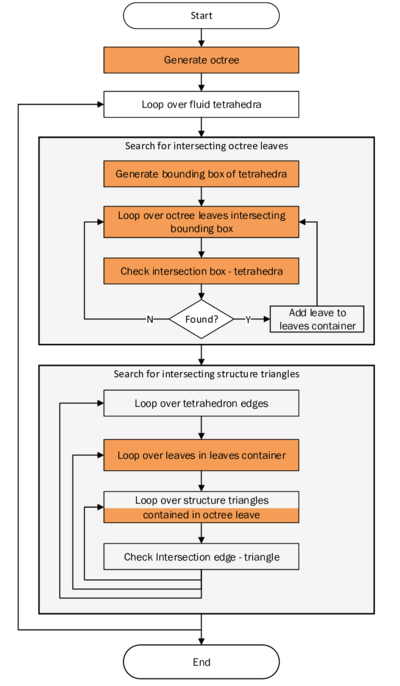

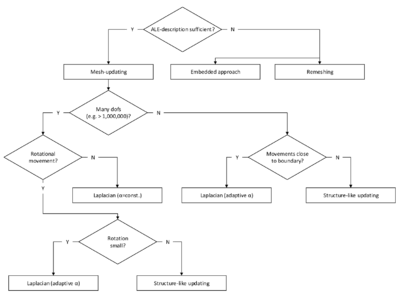

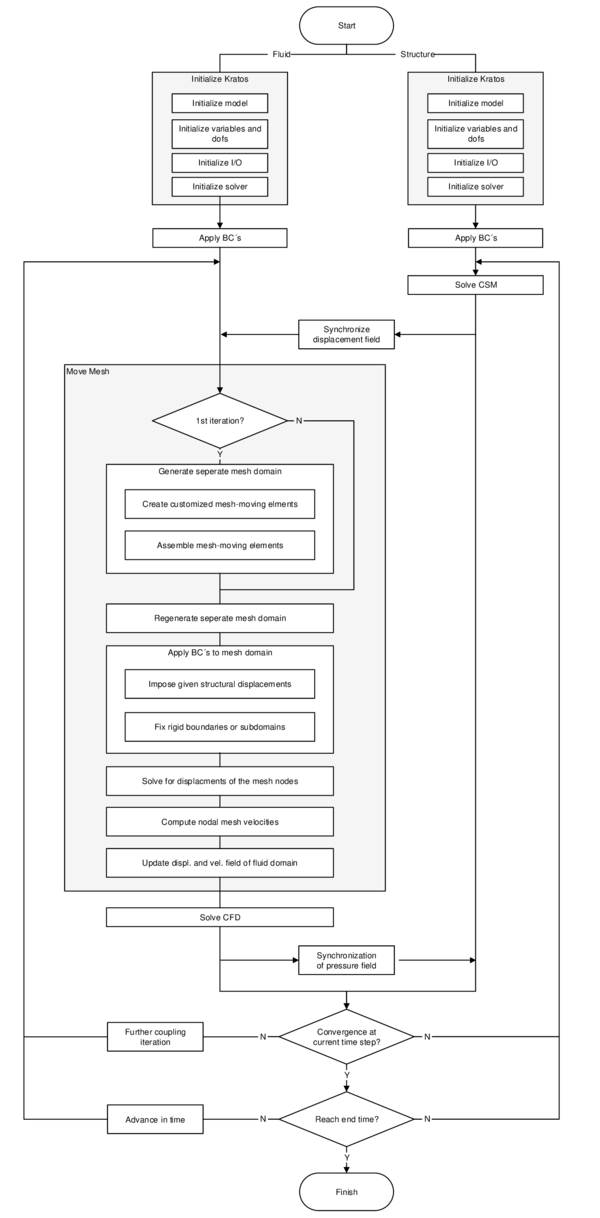

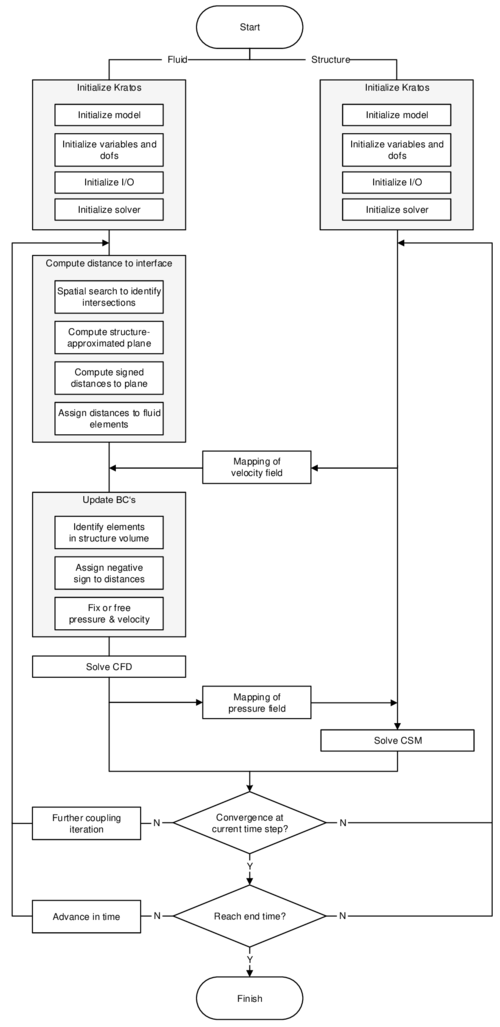

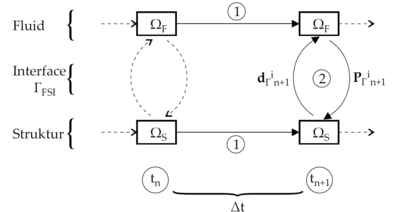

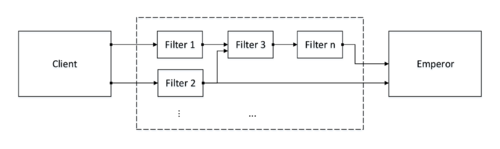

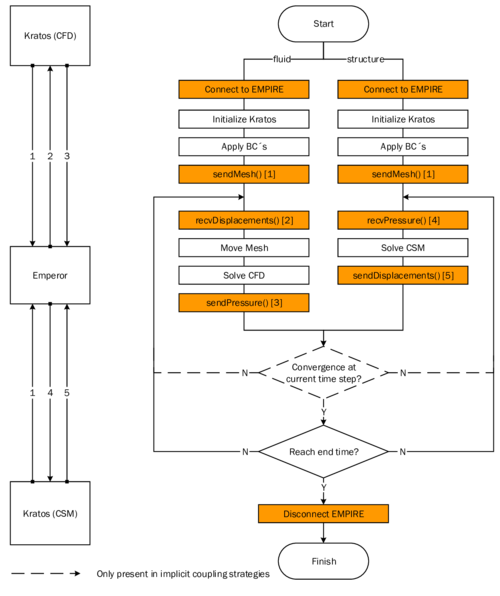

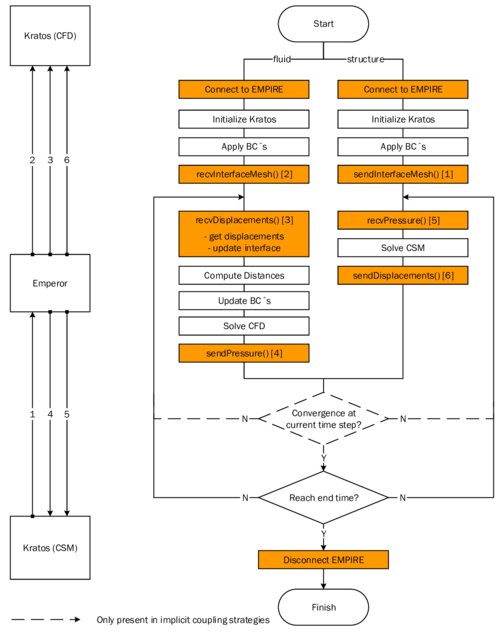

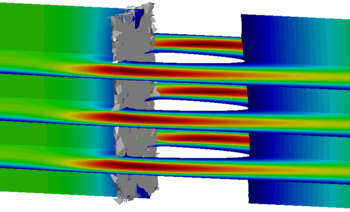

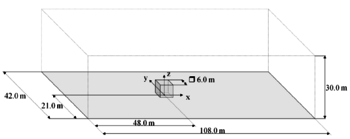

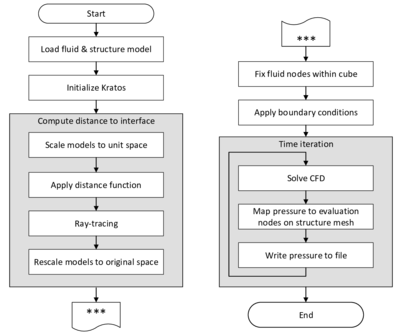

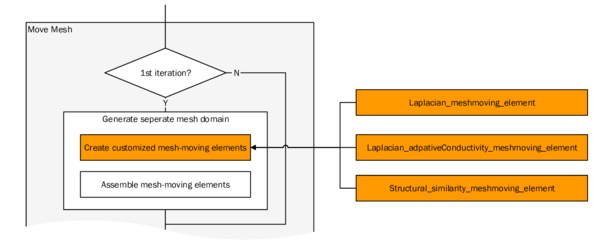

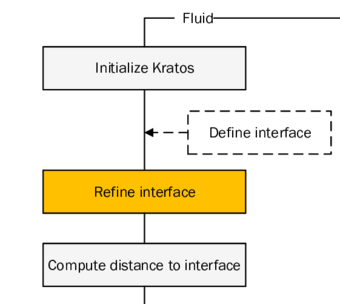

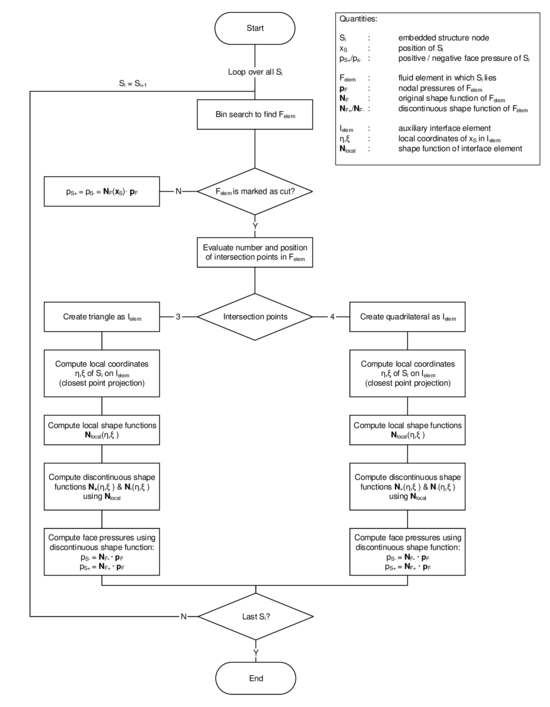

In order to facilitate the understanding of the developments presented later, the two process flows corresponding to the two solution approaches, as they were established in Kratos, are outlined here in advance (Figures 3 and 4)(2). For now, they are only meant to give an idea of the steps necessary to establish a coupled fluid-structure simulation using either of the approaches mentioned above. Later in this monograph, whenever a feature is discussed or developed, its integration into the overall process is illustrated by means of these two charts. This allows the importance and impact of individual developments to be seen in a more general context.

Figure 3: Partitioned FSI simulation using the ALE approach

Figure 4: Partitioned FSI simulation using the embedded approach

(1) In the literature also called “immersed” or “fixed-grid” methods

(2) Note that both of the depicted processes show a partitioned analysis

2 Fluid and structure as uncoupled fields

In the following the mechanical fundamentals of fluids and structures shall be discussed together with their numerical treatment, i.e. their spatial discretization via FEM, their time discretization using different time integration schemes and their solution by some selected procedures. Both fluid and structure will be regarded as a continuum. Consequently their formulation will be similar and based on classical continuum mechanics. Given this assumption, the chapter will start with a brief introduction into the general description of motion according to basic continuum mechanics. Actual differences between structures and fluids from the point of view of their mechanical description will be elaborated in the later course of the chapter. Afterwards the method of embedded domains will be introduced into the context of classical fluid mechanics. Particularly the relevant element formulation will be of interest here. A discussion of how to impose corresponding boundary conditions will finally close the chapter.

2.1 Lagrangian and Eulerian description of motion

In continuum mechanics there are different ways to describe motion. In this context we will focus on the most established ones for the structure and the fluid, respectively. For detailed information, the reader is referred to the classical textbooks of Malvern [2], Donea [3] and Cengel [4].

In the referential description, the motion is described with respect to a reference configuration in which a particle of the continuum is located at the position $\mathbf{X}$ at a reference time $t_0$. It is called the Lagrangian description when the reference configuration coincides with the initial configuration at $t = 0$. In elasticity theory, the initial configuration is typically chosen to be the unstressed state. Within the Lagrangian viewpoint, one keeps track of the motion of an individual material particle by recording its position $\mathbf{x}$ at time $t$. This links its material coordinates $\mathbf{X}$ to the spatial coordinates $\mathbf{x}$ via the mapping $\boldsymbol{\varphi}$:

$$\mathbf{x} = \boldsymbol{\varphi}(\mathbf{X}, t) \tag{2.1}$$

Computationally, this means that each node of a discretized domain remains permanently attached to the same material particle, as shown in Figure 5. When the motion results in large deformations and therefore large distortions of the computational mesh, this method reaches its limits and may even fail due to excessively distorted finite elements, since these are tied to the material particles.

![Lagrangian viewpoint - The computational grid follows the material particles in the course of their motion (adapted from [3]).](/wd/images/thumb/7/71/Draft_Samper_908356597-monograph-J01_LagrangianConfiguration.png/400px-Draft_Samper_908356597-monograph-J01_LagrangianConfiguration.png)

Figure 5: Lagrangian viewpoint: the computational grid follows the material particles in the course of their motion (adapted from [3]).

By contrast, the spatial description, also called the Eulerian description, avoids such difficulties by considering a control volume fixed in space. In that case, the continuum moves and deforms relative to the discretized domain of the control volume. We do not keep track of the motion of individual material particles. Instead, we observe how the flow field at the fixed nodes of the computational mesh changes over time by introducing field variables within the control volume. For example, the spatial description of the velocity field can be defined as a field function of the spatial coordinates $\mathbf{x}$ and the time instant $t$:

$$\mathbf{u} = \mathbf{u}(\mathbf{x}, t) \tag{2.2}$$

This equation makes clear that there is no link to the initial configuration or to the material coordinates $\mathbf{X}$. Instead, the velocity at a given node of the computational grid is the velocity of the material particle that currently coincides with that node. It is nevertheless possible to infer, from the flow field at given nodes, the total rate of change of a field quantity experienced by a particle that moves through the fluid domain. This is done via the material derivative, which links the Eulerian and the Lagrangian description. Applied to a pressure field, the relation reads:

$$\frac{Dp}{Dt} = \frac{\partial p}{\partial t} + \mathbf{u}\cdot\nabla p \tag{2.3}$$

or correspondingly for the velocity field, it reads:

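$$\frac{D\mathbf{u}}{Dt} = \frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\,\mathbf{u} \tag{2.4}$$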

The first part on the right hand side is called the local or unsteady term describing the local rate of change and the second part is the convective term constituting the rate of change of a particle when it moves to a region with different pressure or velocity.
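To make the link between the two viewpoints concrete, the short Python sketch that follows evaluates the material derivative 2.3 in two ways for a hypothetical two-dimensional example. The Eulerian evaluation combines the local and convective rates at a fixed point. The Lagrangian evaluation differences the pressure recorded along the path of a single particle. The velocity field (a rigid rotation), the pressure field and all function names are assumptions chosen for illustration only. Both results should agree up to finite-difference error.

```python
import math


def velocity(x, y):
    """Hypothetical steady velocity field u = (-y, x), a rigid rotation about the origin."""
    return (-y, x)


def pressure(x, y, t):
    """Hypothetical unsteady pressure field p(x, y, t), chosen only for illustration."""
    return math.sin(t) * x + y * y


def material_derivative(x, y, t, h=1e-6):
    """Eulerian view: Dp/Dt = dp/dt + u . grad(p) at a fixed point (central differences)."""
    ux, uy = velocity(x, y)
    dp_dt = (pressure(x, y, t + h) - pressure(x, y, t - h)) / (2.0 * h)
    dp_dx = (pressure(x + h, y, t) - pressure(x - h, y, t)) / (2.0 * h)
    dp_dy = (pressure(x, y + h, t) - pressure(x, y - h, t)) / (2.0 * h)
    return dp_dt + ux * dp_dx + uy * dp_dy


def lagrangian_rate(x0, y0, t, h=1e-6):
    """Lagrangian view: rate of change of p seen by the particle located at (x0, y0) at time t."""

    def position(s):
        # Exact particle path of the rotation field through (x0, y0) at time t
        c, sn = math.cos(s - t), math.sin(s - t)
        return (c * x0 - sn * y0, sn * x0 + c * y0)

    xp, yp = position(t + h)
    xm, ym = position(t - h)
    return (pressure(xp, yp, t + h) - pressure(xm, ym, t - h)) / (2.0 * h)


eulerian = material_derivative(1.0, 2.0, 0.5)
lagrangian = lagrangian_rate(1.0, 2.0, 0.5)
print(eulerian, lagrangian)  # both approximately 3.9187
```

The analytical value here is $\cos(0.5) - 2\sin(0.5) + 4$. The Eulerian route needs only data at a fixed point, which is why it suits a mesh that is fixed in space.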

Table 1 contrasts the most significant advantages and disadvantages of the two descriptions of motion. In order to combine the advantages of both the Lagrangian and the Eulerian description of motion, the so-called Arbitrary Lagrangian-Eulerian (ALE) method has been developed. This method will be treated in chapter 3.2.2.

| Lagrangian description | Eulerian description |
|---|---|
| Allows tracking of history-dependent material behavior and back-referencing any material particle from the current to the initial configuration. | Does not permit conclusions from the current configuration to the initial configuration and the material coordinates $\mathbf{X}$. |
| Since material particles and computational grid coincide, the convective term in the material derivative (2.4) drops out, leaving a simple time derivative. | The computational mesh is decoupled from the motion of the material particles, resulting in a convective term (see equation 2.4). The numerical handling of this convective term is difficult due to its nonsymmetric character. |
| Following the motion of the particles may lead to excessive distortion of the finite elements if no remeshing is applied, which in turn can cause numerical problems during the simulation. | Due to the fixed computational mesh there is no distortion of finite elements, so large motions and deformations of the continuum can be analyzed. |
| Mostly used in structural mechanics, free surface flows and simulations involving moving interfaces between different materials (e.g. FSI). | Applied especially in fluid mechanics (e.g. simulation of vortices). Tracking free surfaces and interfaces between different materials requires a larger numerical effort. |

2.2 Computational fluid mechanics

This chapter introduces the fundamental formulation of the fluid mechanical problem that is applied throughout this monograph. We will first discuss the governing equations, their numerical discretization in space and time, and an established solution procedure. Unless stated otherwise, we will restrict ourselves to an Eulerian description of motion. Furthermore, we will focus on finite element techniques as well as on the fractional step solution method, both of which are later applied to problems related to fluid-structure interaction. Throughout, we will closely follow the theoretical basics given in [3], the implementation- and solver-related details from chapter 3 in [6], and specific research results on the finite element method for fluid analysis elaborated in [7,8].

In the second part, a newly developed embedded approach is introduced, which significantly eases simulations involving highly deforming structures in a CFD context. Based on the finite element method, we explain both the new modeling technique and its inherent assumptions. It is of particular importance here that these assumptions are introduced critically and, where necessary, linked to the corresponding investigations later in this monograph. All explanations are furthermore kept general, such that they can easily be transferred from a pure CFD analysis to a fully coupled FSI analysis.

2.2.1 Governing equations

The first step in describing the mechanics of a material, including fluids, is an assumption about the underlying material model. In the following we rely on the continuum assumption, which models the material as a continuous mass rather than, for instance, as discrete particles(1). Based on this assumption, any material is governed by the conservation of linear momentum:

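$$\frac{\partial(\rho\,\mathbf{u})}{\partial t} + \nabla\cdot(\rho\,\mathbf{u}\otimes\mathbf{u}) = \nabla\cdot\boldsymbol{\sigma} + \rho\,\mathbf{b} \tag{2.5}$$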

the conservation of mass:

$$\frac{\partial\rho}{\partial t} + \nabla\cdot(\rho\,\mathbf{u}) = 0 \tag{2.6}$$

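and the conservation of energy:

$$\frac{\partial(\rho E)}{\partial t} + \nabla\cdot(\rho E\,\mathbf{u}) = -\nabla\cdot(p\,\mathbf{u}) - \nabla\cdot\mathbf{q} + \rho\,\mathbf{b}\cdot\mathbf{u} + Q + \Phi \tag{2.7}$$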

where $\mathbf{u}$ is the velocity vector, $\rho$ the density, $p$ the physical pressure, $\boldsymbol{\sigma}$ the Cauchy stress tensor (specified below), $\mathbf{b}$ the body force vector, $E$ the sum of internal and kinetic energies (per unit mass), $\mathbf{q}$ the heat flux vector, and $Q$ and $\Phi$ the internal heat generation and the internal heat dissipation function, respectively. The equations are written in conservative (divergence) form. That is, the rate of change of each conserved quantity within a fixed volume is balanced exactly by the flux of that quantity across the volume's boundary and by the sources acting inside it. Note that the system is fully coupled: no equation can be solved without taking all the others into account.

Now we will introduce the following assumptions: 1) All state variables are continuous in space so their derivatives exist, 2) the fluid is considered to be incompressible, which yields

$$\rho = \text{const} \quad\Rightarrow\quad \nabla\cdot\mathbf{u} = 0 \tag{2.8}$$

and 3) the fluid is considered Newtonian, with constant viscosity, where

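$$\boldsymbol{\sigma} = -p\,\mathbf{I} + 2\mu\,\boldsymbol{\varepsilon}(\mathbf{u}), \qquad \boldsymbol{\varepsilon}(\mathbf{u}) = \tfrac{1}{2}\left(\nabla\mathbf{u} + \nabla\mathbf{u}^{T}\right) \tag{2.9}$$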

Here, $\boldsymbol{\sigma}$ describes the Cauchy stress, $\mu$ the dynamic viscosity, $\boldsymbol{\varepsilon}(\mathbf{u})$ the symmetric part of the velocity gradient (the strain rate tensor) and $\mathbf{I}$ the identity matrix. In fact, this assumption about the material constitution represents the only major difference between the description of a fluid, as given here, and the description of a structure. The constitutive relation of a structure is typically given in a form such as $\boldsymbol{\sigma} = \mathbb{C} : \boldsymbol{\varepsilon}$ for linear elasticity. Note that the latter is based on the actual strains $\boldsymbol{\varepsilon}$ rather than on the strain rates implied in 2.9.

A consequence of these assumptions is that the energy equation is no longer needed to describe the mechanical system: for constant density and viscosity it decouples from momentum and mass conservation. Thus, for an incompressible flow, instead of four governing equations (conservation of momentum, mass and energy as well as the constitutive equation) we only have two simplified partial differential equations with the two independent state variables $\mathbf{u}$ and $p$(2). These two remaining equations, with all of the above assumptions included, are known as the incompressible Navier-Stokes equations (NSE). They are typically given in non-conservative form:

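$$\frac{\partial\mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\,\mathbf{u} - \nu\,\Delta\mathbf{u} + \nabla p = \mathbf{b} \quad \text{in } \Omega \tag{2.10.a}$$

$$\nabla\cdot\mathbf{u} = 0 \quad \text{in } \Omega \tag{2.10.b}$$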

Here $\nu = \mu/\rho$ is the kinematic viscosity and $p$ now denotes the kinematic pressure, i.e. the physical pressure divided by the density. Note that the NSE describe a nonlinear, coupled dynamic system.
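As a short derivation sketch, assuming constant density $\rho$ and constant dynamic viscosity $\mu$, the non-conservative momentum equation 2.10.a follows from 2.5, 2.6, 2.8 and 2.9 as shown next. Here $p$ denotes the physical pressure until the last line, where $p/\rho$ becomes the kinematic pressure.

```latex
\begin{aligned}
\frac{\partial(\rho\mathbf{u})}{\partial t} + \nabla\cdot(\rho\,\mathbf{u}\otimes\mathbf{u})
  &= \rho\left(\frac{\partial\mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\mathbf{u}\right)
   + \mathbf{u}\left(\frac{\partial\rho}{\partial t} + \nabla\cdot(\rho\mathbf{u})\right)
   = \rho\,\frac{D\mathbf{u}}{Dt} \\
\nabla\cdot\boldsymbol{\sigma}
  &= -\nabla p + \mu\,\nabla\cdot\left(\nabla\mathbf{u} + \nabla\mathbf{u}^{T}\right)
   = -\nabla p + \mu\left(\Delta\mathbf{u} + \nabla(\nabla\cdot\mathbf{u})\right)
   = -\nabla p + \mu\,\Delta\mathbf{u} \\
\Rightarrow\quad \frac{\partial\mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\mathbf{u}
  &= -\nabla\!\left(\frac{p}{\rho}\right) + \nu\,\Delta\mathbf{u} + \mathbf{b},
  \qquad \nu = \frac{\mu}{\rho}
\end{aligned}
```

Each line relies on a different ingredient:

- The first line uses mass conservation 2.6, which makes the second bracket vanish.
- The second line uses the identity $\nabla\cdot(\nabla\mathbf{u}^{T}) = \nabla(\nabla\cdot\mathbf{u})$ together with the incompressibility condition 2.8.
- The last line divides the momentum balance by $\rho$.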

Finally, we have the choice to express $\mathbf{u}$ either with respect to a stationary coordinate system or with respect to a moving one. In the stationary case (Eulerian approach), $\mathbf{u}$ corresponds to the physical velocity at a given fixed point in space. In the moving case (Lagrangian approach), $\mathbf{u}$ is determined from the point of view of the moving fluid particle. These approaches can also be combined in a generalized formulation in order to resolve the dynamics of the different domains in an FSI context, as we will see later.

To ensure that the system has a unique solution and that the problem is well posed, it is finally necessary to prescribe exactly one boundary condition at every point of the boundary $\Gamma = \Gamma_D \cup \Gamma_N$, i.e. a Dirichlet condition on $\Gamma_D$ and a Neumann condition on $\Gamma_N$. The NSE hence pose an initial boundary value problem, where a strong imposition of these boundary conditions reads:

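$$\mathbf{u} = \bar{\mathbf{u}} \quad \text{on } \Gamma_D \tag{2.11.a}$$

$$\nu\,\nabla\mathbf{u}\cdot\mathbf{n} - p\,\mathbf{n} = \bar{\mathbf{t}} \quad \text{on } \Gamma_N \tag{2.11.b}$$

with the prescribed velocity $\bar{\mathbf{u}}$, the prescribed traction $\bar{\mathbf{t}}$ and the outward unit normal $\mathbf{n}$.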

The assumption of initial conditions in the form

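$$\mathbf{u}(\mathbf{x}, t_0) = \mathbf{u}_0(\mathbf{x}) \quad \text{in } \Omega \tag{2.12.a}$$

$$p(\mathbf{x}, t_0) = p_0(\mathbf{x}) \quad \text{in } \Omega \tag{2.12.b}$$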

completes the problem formulation. Having formalized the underlying physics mathematically, we now have to discretize the NSE in order to solve them numerically.

(1) A famous particle-based model in computational fluid dynamics is e.g. the Lattice-Boltzmann-Method.

(2) Note that the velocity is a three-dimensional vector actually resulting in four independent state variables. The number of equations within the NSE increases accordingly.

2.2.2 Discretization

The NSE can be discretized in various ways both in time and space. All solutions throughout this monograph, though, were computed based on a finite element discretization in space and finite difference schemes in time which is why the latter techniques shall be introduced in the following. We will thereby follow an idea which in the literature is called the method of lines.

The method of lines is common practice in the finite element analysis of time-dependent problems. Here we first discretize with respect to the spatial variables, which yields a system of coupled first-order ordinary differential equations in time, the so-called semi-discrete system. To complete the discretization of the original PDE, we then integrate this first-order system forward in time to trace the temporal evolution of the solution, starting from the initial time $t_0$.
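The two steps of the method of lines can be sketched in Python for a model problem simpler than the NSE: the 1D diffusion equation $u_t = \nu u_{xx}$ on $[0, 1]$ with $u = 0$ at both ends. Two simplifying assumptions are made for brevity. The spatial step uses the central-difference stencil, which for this problem coincides with linear finite elements and a lumped mass matrix. The time integration uses explicit Euler rather than the BDF-2 scheme discussed later. All function names are hypothetical.

```python
import math


def semi_discrete_rhs(u, nu, h):
    """Right-hand side of the semi-discrete system du/dt = nu * (u[i-1] - 2 u[i] + u[i+1]) / h^2.

    u holds the interior nodal values; the homogeneous Dirichlet values at x = 0 and x = 1
    are implied as zeros."""
    n = len(u)
    rhs = [0.0] * n
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        rhs[i] = nu * (left - 2.0 * u[i] + right) / h**2
    return rhs


def method_of_lines(n_intervals=20, nu=1.0, t_end=0.05):
    h = 1.0 / n_intervals
    dt = 0.2 * h**2 / nu  # below the explicit stability limit h^2 / (2 nu)
    n_steps = round(t_end / dt)
    # Step 1 (space): nodal unknowns at the interior nodes, initial condition sin(pi x)
    u = [math.sin(math.pi * (i + 1) * h) for i in range(n_intervals - 1)]
    # Step 2 (time): march the ODE system forward, here with explicit Euler for brevity
    for _ in range(n_steps):
        f = semi_discrete_rhs(u, nu, h)
        u = [u_i + dt * f_i for u_i, f_i in zip(u, f)]
    return u, n_steps * dt


u, t = method_of_lines()
print(u[9], math.exp(-math.pi**2 * t))  # node at x = 0.5 vs. exact decay of the sine mode
```

For the initial mode $\sin(\pi x)$ the exact solution decays as $e^{-\nu\pi^2 t}$, which the midpoint value reproduces closely. The step size has to stay below the explicit stability limit $\Delta t \le h^2/(2\nu)$. An A-stable implicit scheme such as BDF-2 does not have this restriction.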

2.2.2.1 Spatial discretization

Within this section it is assumed that the reader is familiar with the basic concepts of the finite element method using variational calculus.

Given the NSE, their weak form is obtained by multiplying 2.10.a and 2.10.b with the test functions $\mathbf{w}$ and $q$, respectively, and integrating over the domain $\Omega$. The corresponding weighted residual formulation reads:

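$$\int_\Omega \mathbf{w}\cdot\left(\frac{\partial\mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\,\mathbf{u} - \nu\,\Delta\mathbf{u} + \nabla p - \mathbf{b}\right)d\Omega = 0 \tag{2.13.a}$$

$$\int_\Omega q\,\nabla\cdot\mathbf{u}\,d\Omega = 0 \tag{2.13.b}$$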

By integrating the viscous term and the pressure gradient term of the momentum balance by parts and applying the divergence theorem to each, the final weak form of the NSE is obtained as:

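$$\int_\Omega \mathbf{w}\cdot\frac{\partial\mathbf{u}}{\partial t}\,d\Omega + \int_\Omega \mathbf{w}\cdot(\mathbf{u}\cdot\nabla)\,\mathbf{u}\,d\Omega + \int_\Omega \nu\,\nabla\mathbf{w}:\nabla\mathbf{u}\,d\Omega - \int_\Omega (\nabla\cdot\mathbf{w})\,p\,d\Omega = \int_\Omega \mathbf{w}\cdot\mathbf{b}\,d\Omega + \int_{\Gamma_N}\mathbf{w}\cdot\bar{\mathbf{t}}\,d\Gamma \tag{2.14.a}$$

$$\int_\Omega q\,\nabla\cdot\mathbf{u}\,d\Omega = 0 \tag{2.14.b}$$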

It can be seen from the equation, that by this approach we have naturally produced a Neumann boundary which is now given in a weak formulation as:

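$$\bar{\mathbf{t}} = \nu\,\nabla\mathbf{u}\cdot\mathbf{n} - p\,\mathbf{n} \quad \text{on } \Gamma_N \tag{2.15}$$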

where $\mathbf{n}$ describes the outward boundary normal. In this weak formulation, the boundary terms on the Dirichlet boundary vanish, since the velocity test function $\mathbf{w}$ is by definition zero on $\Gamma_D$. The Dirichlet boundary conditions are instead imposed strongly by requiring the trial velocity $\mathbf{u}$ to satisfy 2.11.a on $\Gamma_D$, so they are automatically fulfilled by any solution. One important practical example of a Dirichlet boundary condition is the no-slip condition on walls.

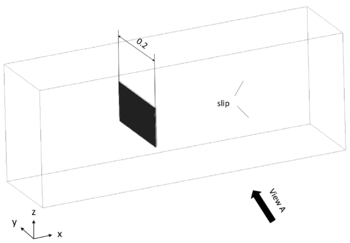

At this point it should be mentioned that, for the imposition of slip boundary conditions, we may instead integrate 2.13.b by parts, such that we get:

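$$-\int_\Omega \nabla q\cdot\mathbf{u}\,d\Omega + \int_\Gamma q\,\mathbf{u}\cdot\mathbf{n}\,d\Gamma = 0 \tag{2.16}$$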

Then we split the boundary term in parts where we want to enforce slip-conditions and parts where we do not. This may read:

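$$-\int_\Omega \nabla q\cdot\mathbf{u}\,d\Omega + \int_{\Gamma\setminus\Gamma_S} q\,\mathbf{u}\cdot\mathbf{n}\,d\Gamma + \int_{\Gamma_S} q\,\mathbf{u}\cdot\mathbf{n}\,d\Gamma = 0 \tag{2.17}$$

where $\Gamma_S$ denotes the part of the boundary on which slip conditions are to be enforced.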

By simply omitting the computation of the slip-boundary integral during the simulation, i.e.

$$\int_{\Gamma_S} q\,\mathbf{u}\cdot\mathbf{n}\,d\Gamma = 0 \tag{2.18}$$

we imply that the velocities along these parts of the boundary are, in a weak sense, perpendicular to the local boundary normals $\mathbf{n}$, i.e. $\mathbf{u}\cdot\mathbf{n} = 0$. This reflects a tangential sliding of the fluid along the respective walls and hence the imposition of a slip boundary condition.

To complete the spatial discretization, we introduce linear shape functions $N_I$ in order to approximate $\mathbf{u}$ and $p$ as well as $\mathbf{w}$ and $q$. A Galerkin formulation is used, i.e. shape functions and test functions are of the same kind. Using Einstein's summation convention over the node index $I$, the approximation reads:

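$$\mathbf{u} \approx N_I\,\mathbf{u}_I \tag{2.19}$$

$$p \approx N_I\,p_I \tag{2.20}$$

$$\mathbf{w} \approx N_I\,\mathbf{w}_I \tag{2.21}$$

$$q \approx N_I\,q_I \tag{2.22}$$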
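For illustration, the interpolation 2.19 to 2.22 can be sketched for a single linear triangle, a 2D simplification of the elements used in practice. The reference-element coordinates and the function names are assumptions. Since a Galerkin formulation uses the same functions for the test functions $\mathbf{w}$ and $q$, the same routine would serve for them.

```python
def triangle_shape_functions(xi, eta):
    """Linear (P1) shape functions N_I on the reference triangle (0,0), (1,0), (0,1)."""
    return (1.0 - xi - eta, xi, eta)


def interpolate(nodal_values, xi, eta):
    """Galerkin interpolation u_h(xi, eta) = N_I(xi, eta) * u_I, summed over the nodes I."""
    return sum(N_I * u_I for N_I, u_I in zip(triangle_shape_functions(xi, eta), nodal_values))


# Hypothetical nodal pressures p_I on one element
p_nodes = (1.0, 2.0, 3.0)
print(interpolate(p_nodes, 1.0, 0.0))           # 2.0: node 2 is reproduced at its vertex
print(interpolate(p_nodes, 1 / 3, 1 / 3))       # about 2.0: the nodal average at the centroid
print(sum(triangle_shape_functions(0.2, 0.5)))  # about 1.0: partition of unity
```

The two checked properties, interpolation of nodal values and partition of unity, are what make the nodal unknowns $\mathbf{u}_I$ and $p_I$ directly interpretable as field values at the nodes.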

Plugging these interpolations into the weak form of the NSE given by equation 2.14.a and 2.14.b we obtain the following semi-discrete form of the incompressible NSE:

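$$\mathbf{M}\,\dot{\mathbf{u}} + \mathbf{C}(\mathbf{u})\,\mathbf{u} + \mathbf{K}\,\mathbf{u} + \mathbf{G}\,\mathbf{p} = \mathbf{f}, \qquad \mathbf{D}\,\mathbf{u} = \mathbf{0} \tag{2.23}$$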

where on elemental level

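$$\mathbf{M}_{IJ} = \int_{\Omega_e} N_I\,N_J\,d\Omega \tag{2.24.a}$$

$$\mathbf{C}_{IJ}(\mathbf{u}) = \int_{\Omega_e} N_I\,(\mathbf{u}\cdot\nabla N_J)\,d\Omega \tag{2.24.b}$$

$$\mathbf{K}_{IJ} = \int_{\Omega_e} \nu\,\nabla N_I\cdot\nabla N_J\,d\Omega \tag{2.24.c}$$

$$\mathbf{G}_{IJ} = -\int_{\Omega_e} \nabla N_I\,N_J\,d\Omega \tag{2.24.d}$$

$$\mathbf{D}_{IJ} = \int_{\Omega_e} N_I\,(\nabla N_J)^{T}\,d\Omega \tag{2.24.e}$$

$$\mathbf{f}_{I} = \int_{\Omega_e} N_I\,\mathbf{b}\,d\Omega + \int_{\Gamma_{N,e}} N_I\,\bar{\mathbf{t}}\,d\Gamma \tag{2.24.f}$$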

Note that the problem is still time dependent, i.e. we still need to introduce a time discretization scheme in order to assemble the local contributions into a global system that can be solved. Note also that $\mathbf{u}$ and $p$ here denote the approximated (discrete) velocity and pressure. Since this will be the case for the remainder of the monograph, we omit an additional index for the sake of readability. For the actual computation in an FEM framework, numerical integration methods (such as Gauss quadrature) are necessary to evaluate the integrals in 2.24.
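As a minimal sketch of such Gauss integration, the following Python function evaluates the 1D analogues of the mass and viscous (diffusion) terms of 2.24 for a single linear element with a two-point Gauss rule. The 1D setting, the function name and the argument names are assumptions for illustration. For linear shape functions the two-point rule integrates both terms exactly, so the results can be checked against the closed forms $\frac{h}{6}\begin{bmatrix}2&1\\1&2\end{bmatrix}$ and $\frac{\nu}{h}\begin{bmatrix}1&-1\\-1&1\end{bmatrix}$.

```python
import math

# Two-point Gauss rule on the reference interval [-1, 1] (both weights equal 1)
GAUSS_POINTS = (-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0))
GAUSS_WEIGHTS = (1.0, 1.0)


def element_matrices(x1, x2, nu):
    """Mass matrix M_IJ = int N_I N_J dx and viscous matrix K_IJ = int nu N_I' N_J' dx
    of a 1D linear element [x1, x2], integrated numerically with Gauss quadrature."""
    h = x2 - x1
    jac = h / 2.0  # dx/dxi of the map from [-1, 1] to [x1, x2]
    dN_dx = (-1.0 / h, 1.0 / h)  # constant derivatives of linear shape functions
    M = [[0.0, 0.0], [0.0, 0.0]]
    K = [[0.0, 0.0], [0.0, 0.0]]
    for xi, weight in zip(GAUSS_POINTS, GAUSS_WEIGHTS):
        N = (0.5 * (1.0 - xi), 0.5 * (1.0 + xi))
        for I in range(2):
            for J in range(2):
                M[I][J] += weight * N[I] * N[J] * jac
                K[I][J] += weight * nu * dN_dx[I] * dN_dx[J] * jac
    return M, K


M, K = element_matrices(0.0, 0.5, nu=2.0)
print(M)  # approximately [[h/3, h/6], [h/6, h/3]] with h = 0.5
print(K)  # approximately [[4, -4], [-4, 4]], i.e. nu/h * [[1, -1], [-1, 1]]
```

In a real solver the same loop runs over the Gauss points of each element, and the resulting local matrices are then assembled into the global system.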

At the end of this section, two basic problems that arise with this form of the discretization shall be mentioned. The first problem occurs where the convective term is dominant, for example in high-Reynolds-number or turbulent flows; in these cases the standard Galerkin approach becomes unstable. Another numerical difficulty arises from the incompressibility constraint. In incompressible flows the pressure acts as a Lagrange multiplier that forces the velocity field to satisfy mass conservation exactly. The role of the pressure variable is thus to adjust itself instantaneously to the given velocity field such that mass conservation holds. This leads to a saddle-point coupling between the velocity and pressure unknowns. Unless the velocity and pressure interpolations satisfy the inf-sup (LBB) compatibility condition, this coupling causes the system in 2.23 to become ill-conditioned or even singular. Equal-order linear interpolation of velocity and pressure, as chosen above, does not satisfy this condition.

Both problems imply that, in an FEM formulation of a flow problem, compliance with certain numerical conditions or the application of stabilization techniques is inevitable.
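The first of these problems can be reproduced with a classical 1D model problem standing in for the convective and viscous terms of the NSE: steady convection-diffusion, $a u' - \nu u'' = 0$ on $[0, 1]$ with $u(0) = 0$ and $u(1) = 1$. The Python sketch assembles the standard Galerkin equations for linear elements on a uniform mesh and solves them with the Thomas algorithm. The function names and parameter values are assumptions. Whenever the element Péclet number $Pe = a h / (2\nu)$ exceeds one, the discrete solution oscillates, although the exact solution is monotone.

```python
def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system; lower[0] and upper[-1] are not used."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def galerkin_convection_diffusion(a, nu, n_elements):
    """Standard Galerkin (linear FE, uniform mesh) nodal solution of
    a u' - nu u'' = 0 on [0, 1] with u(0) = 0 and u(1) = 1."""
    h = 1.0 / n_elements
    n = n_elements - 1              # number of interior unknowns
    c_left = -0.5 * a - nu / h      # coefficient of u[i-1]
    c_mid = 2.0 * nu / h            # coefficient of u[i]
    c_right = 0.5 * a - nu / h      # coefficient of u[i+1]
    rhs = [0.0] * n
    rhs[-1] = -c_right * 1.0        # known boundary value u(1) = 1 moved to the right-hand side
    interior = thomas([c_left] * n, [c_mid] * n, [c_right] * n, rhs)
    return [0.0] + interior + [1.0]


oscillating = galerkin_convection_diffusion(a=1.0, nu=0.01, n_elements=10)  # Pe = 5
smooth = galerkin_convection_diffusion(a=1.0, nu=0.1, n_elements=10)        # Pe = 0.5
print([round(v, 3) for v in oscillating])
print([round(v, 3) for v in smooth])
```

With $Pe = 5$ the interior nodal values alternate in sign, whereas $Pe = 0.5$ yields a monotone profile. Stabilization techniques or sufficiently fine meshes are the usual remedies.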

2.2.2.2 Time discretization

Given the first-order, time-dependent, semi-discrete NSE in 2.23, we may use a variety of methods to integrate in time and hence compute the numerical solution of the NSE. Depending on the application, we may use either single-step or multistep methods in an explicit or implicit formulation. Well-known classes of integration schemes based on a single time step are the Runge-Kutta methods and the integrators of the Newmark family. A vast source of detailed information, in particular regarding order of accuracy and stability, can be found in [9]. For a quick reference on the principal idea of time integration, chapter 3.4.1 in [3] is recommended.

By contrast, a famous class of integration schemes based on multi-step procedures is given by the different schemes of the Backward Differentiation Formula (BDF). In fact the BDF scheme of second order, i.e. BDF-2, is used by the fractional step solver, with which the solutions throughout this monograph were generated.

In the BDF-2 scheme, as in all BDF schemes, the time derivative itself is approximated by differentiating a polynomial that interpolates the solution at the new time step $t^{n+1}$ and at the two previous time steps $t^{n}$ and $t^{n-1}$. This differs from the Adams family of multistep methods, which approximate the integral of the right-hand side instead. Being implicit and A-stable, the method is typically sufficiently accurate and stable also for numerically stiff problems. The discretization of the time derivative in BDF-2 reads:

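$$\left.\frac{\partial \mathbf{u}}{\partial t}\right|^{n+1} \approx \alpha_0\,\mathbf{u}^{n+1} + \alpha_1\,\mathbf{u}^{n} + \alpha_2\,\mathbf{u}^{n-1} \qquad (2.25)$$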

with the coefficients

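$$\alpha_0 = \frac{3}{2\,\Delta t} \qquad (2.26)$$

$$\alpha_1 = -\frac{2}{\Delta t} \qquad (2.27)$$

$$\alpha_2 = \frac{1}{2\,\Delta t} \qquad (2.28)$$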

Introducing the time discretization into the semi-discrete local systems, i.e. inserting 2.25 into 2.23, and replacing the continuous velocity and pressure by their time-discrete counterparts at the new time step $t^{n+1}$, we obtain the fully discrete local system in block-matrix form:

(2.29)

Finally, the elemental contributions are assembled into a global discrete system, which may be solved iteratively using the fractional step method. The combination of the BDF-2 scheme with a fractional step solution procedure represents an established compromise between accuracy, stability and computational cost in an FEM environment.
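The BDF-2 derivative can be checked numerically with a minimal Python sketch. It assumes a constant time step $\Delta t$ with the standard coefficients $\alpha_0 = 3/(2\Delta t)$, $\alpha_1 = -2/\Delta t$, $\alpha_2 = 1/(2\Delta t)$; a solver with variable time steps would use step-size-dependent coefficients instead. The function names are illustrative only. BDF-2 reproduces the derivative of a quadratic function exactly, whereas the BDF-1 (implicit Euler) difference quotient does not.

```python
def bdf2_coefficients(dt):
    """BDF-2 coefficients (alpha_0, alpha_1, alpha_2), assuming a constant time step dt."""
    return 3.0 / (2.0 * dt), -2.0 / dt, 1.0 / (2.0 * dt)

def bdf2_derivative(u_new, u_old, u_older, dt):
    """Approximate du/dt at t^{n+1} from u^{n+1}, u^n and u^{n-1}."""
    a0, a1, a2 = bdf2_coefficients(dt)
    return a0 * u_new + a1 * u_old + a2 * u_older

def bdf1_derivative(u_new, u_old, dt):
    """BDF-1 (implicit Euler) difference quotient, for comparison."""
    return (u_new - u_old) / dt

# u(t) = t**2 has du/dt = 2 at t = 1.
dt = 0.1
u = lambda t: t ** 2
print(bdf2_derivative(u(1.0), u(0.9), u(0.8), dt))   # approximately 2.0 (exact up to round-off)
print(bdf1_derivative(u(1.0), u(0.9), dt))           # approximately 1.9 (first-order error)
```

Note that the coefficients sum to zero, as required for a consistent approximation of a derivative.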

2.2.3 Fractional step solution

Given the discrete system 2.29, we want to solve for the velocity and the pressure at the new time step. In practical examples this system is typically very large, comprising up to several million degrees of freedom. This renders accurate and robust direct solution techniques very inefficient or even impossible to apply. Iterative solvers, by contrast, are known to be efficient for large problems, which is why they are preferable here. Iterative solvers, however, tend to suffer from severe robustness problems when the system is badly conditioned. The critical conditioning that may arise from the convective term and the incompressibility constraint, as described above, hence requires specialized iterative techniques such as the fractional step method. Its idea is sketched briefly in the following; for a detailed derivation, the reader is referred to chapter 3.8 in [6].

The idea of the fractional step method is to split the monolithic solution of 2.29 into several steps, such that each step contains a well-conditioned subsystem that can be solved more efficiently. To this end, an estimate of the velocity field is introduced, with which the convective term is approximated as

(2.30)

which is an assumption that causes the fractional step method to deliver only an approximate solution of the monolithic system. The approximation, however, converges to the exact solution as the time step size $\Delta t$ tends to zero and is hence valid for small time steps.

Using these assumptions and introducing a time integration scheme as described in the previous section, the following solution steps can be derived [7]:

Step 1:

(2.31.a)

Step 2:

(2.31.b)

Step 3:

(2.31.c)

where $\gamma$ is a numerical parameter whose values of interest are $\gamma = 0$ and $\gamma = 1$. To keep the equations simple, a BDF-1 time discretization¹ has been chosen here instead of the BDF-2 scheme discussed above, where

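$$\left.\frac{\partial \mathbf{u}}{\partial t}\right|^{n+1} \approx \frac{\mathbf{u}^{n+1} - \mathbf{u}^{n}}{\Delta t} \qquad (2.32)$$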

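Given the steps above, the fractional step iteration reads:

- Given the velocity and the pressure of the previous time step, solve 2.31.a for the velocity estimate.

- Using the velocity estimate and the previous pressure, solve 2.31.b for the new pressure.

- Using the velocity estimate together with the new and the previous pressure, compute the approximate velocity at the new time step from 2.31.c. If convergence is not achieved, start again at the first step.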
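The structure of the three steps can be illustrated with a minimal algebraic pressure-correction sketch in Python/NumPy. It is not the solver used in this monograph, and it rests on the following assumptions:

- a linear operator `K` instead of the convective term, so no outer iteration is needed;
- a lumped (diagonal) mass matrix;
- BDF-1 in time;
- the discrete divergence given by `G.T`;
- the algebraic operator G^T M^{-1} G in the pressure step, rather than the continuous Laplacian used in [7], which is where the latter obtains its pressure stabilization.

The parameter `gamma` (a name chosen here) weights the old pressure, as in the incremental variants of the method.

```python
import numpy as np

def fractional_step(M_diag, K, G, f, u_n, p_n, dt, gamma=1.0):
    """One time step of an algebraic pressure-correction scheme for
        M (u^{n+1} - u^n) / dt + K u^{n+1} + G p^{n+1} = f,   G^T u^{n+1} = 0
    with BDF-1 in time, a lumped (diagonal) mass matrix and a linear operator K."""
    M = np.diag(M_diag)
    Minv_G = G / M_diag[:, None]                     # M^{-1} G for a diagonal M
    # Step 1: velocity estimate, using the old pressure weighted by gamma
    u_tilde = np.linalg.solve(M / dt + K, f + M @ u_n / dt - gamma * (G @ p_n))
    # Step 2: pressure increment from the discrete pressure Poisson problem
    dp = np.linalg.solve(dt * (G.T @ Minv_G), G.T @ u_tilde)
    p_new = gamma * p_n + dp
    # Step 3: correct the estimate so that G^T u^{n+1} = 0 holds
    u_new = u_tilde - dt * (Minv_G @ dp)
    return u_new, p_new

rng = np.random.default_rng(0)
n_u, n_p = 6, 2                                      # toy sizes (hypothetical)
M_diag = np.full(n_u, 2.0)
K = 0.1 * np.eye(n_u)
G = rng.standard_normal((n_u, n_p))
f = rng.standard_normal(n_u)
u, p = np.zeros(n_u), np.zeros(n_p)
for _ in range(5):                                   # a few time steps
    u, p = fractional_step(M_diag, K, G, f, u, p, dt=0.01)
print(np.abs(G.T @ u).max())                         # close to machine precision
```

Each step only requires solving a smaller, better-conditioned system than the monolithic saddle-point problem. The splitting error stems from evaluating `K` at the estimate instead of the final velocity and vanishes as `dt` tends to zero.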

The fractional step method as described here has several properties that make it attractive for solving incompressible flows in an FEM-based framework. One important property is its improved stability for badly conditioned systems. This is a consequence of solving several well-conditioned steps instead of one monolithic ill-conditioned system. Notably, this holds even without any explicitly implemented stabilization terms. Another important property is the reduced computational effort, since the individual steps typically converge much faster than the overall monolithic system. Finally, the fractional step method allows a very natural introduction of the structural contribution during the solution of an FSI problem. As will be shown later, this also holds for the embedded approach.

(1) Note that the BDF-1 discretization is identical to the first-order implicit (backward) Euler scheme.

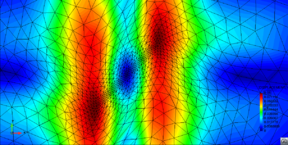

2.2.4 Embedded formulation

This section discusses the fundamentals of a new embedded formulation. All explanations are kept general, so that the extension of the method to an FSI scenario is easy to follow. In this way we want to emphasize the capabilities of the model for both a pure CFD analysis and a fully coupled simulation of fluid-structure interaction.

The section is organized along the successive tasks necessary to set up an embedded environment. The first part discusses how different types of structures may be embedded, and hence approximated, within a background fluid mesh. Of particular interest here is how a voluminous body differs from other types of structures. Having approximated the embedded model, a new element formulation is introduced that is capable of dealing with the embedded boundaries, in particular with the discontinuity that arises there. Finally, it is shown how the boundary conditions resulting from the presence of the structure are imposed on the fluid.

An important aspect throughout all subsections is the clear presentation of the fundamental approximations on which the embedded formulation is based. Their impact is investigated in chapters 7 and 8.

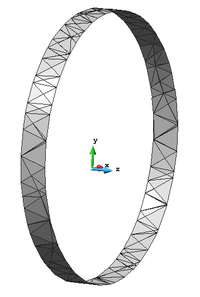

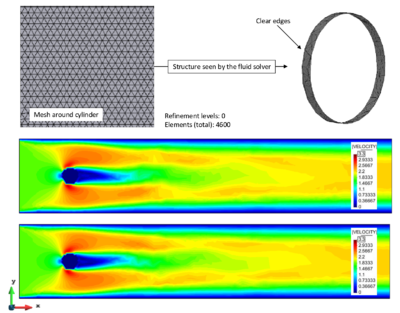

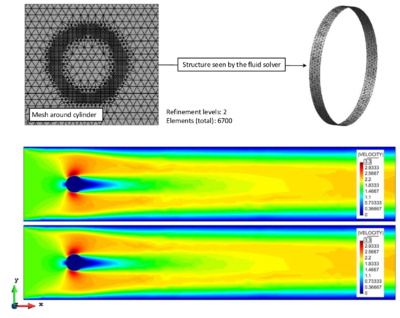

2.2.4.1 Embedding open and closed structures

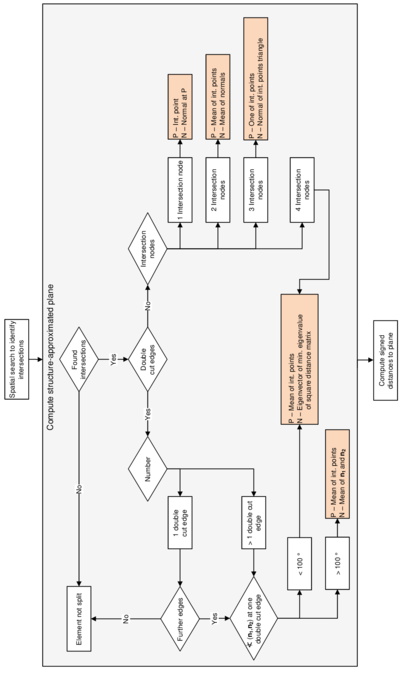

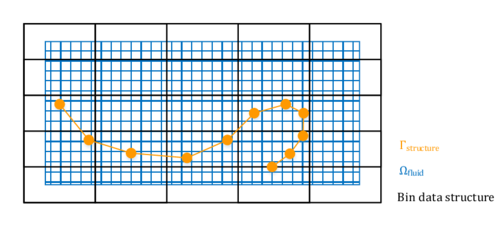

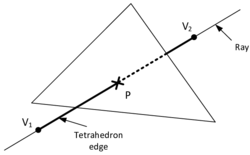

Embedding a structure into a fluid domain requires a mathematical description of the former relative to the latter. This is typically done using what the literature refers to as level set methods. The embedded approach followed here is a level set method in the sense that it tracks the motion of an arbitrary interface within a surrounding domain by representing the interface as the zero level set of a distance function. In our case, the surrounding domain is described by a fluid model, whereas the interface corresponds to the physical connection between the fluid and an embedded structure.

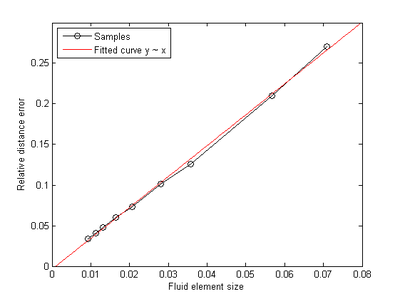

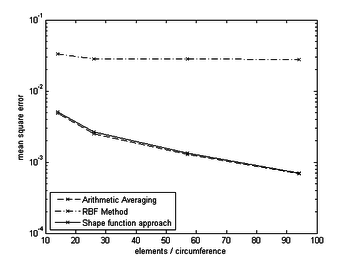

All embedded approaches of this kind approximate the embedded domain by means of some distance function. The embedded domain itself is typically given in discrete form: when embedding a structure into a fluid mesh, we classically use the discrete description of the structure, i.e. its FE mesh. Consequently, the distance function approximates not the actual structure but its discretization. This always introduces an additional level of approximation that is not present in a body-fitted formulation. It is important to note that this additional level leads to an inherent error that can only be reduced, not avoided. A detailed evaluation of this error follows later in this monograph.

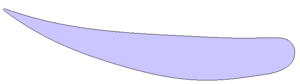

As already indicated, embedding the structure into a background fluid mesh classically involves a distance function that describes the spatial distance between points of both domains, such that the common interface can be identified. The development of such a distance function is one focus of this monograph and is elaborated in detail in chapter 7. In this section we are rather interested in a more general question. It concerns how to embed into a given domain two kinds of structures. The first are open structures, such as membranes or shells, around which there is only an exterior flow. The second are closed or voluminous structures, such as bluff bodies or the inflatable hangar mentioned at the beginning, for which the interior and exterior parts of the fluid need to be treated differently.

To embed both types of structures, the idea of the level set method first needs to be generalized. To this end, two distance functions are used instead of one. Conceptually, there is

- a discontinuous distance function in order to identify and keep track of the position of an embedded interface within a background fluid mesh and

- a continuous distance function that allows classifying fluid nodes as either “inside” or “outside” the embedded structure.

Note that the latter distinction is only necessary if the embedded structure is voluminous.

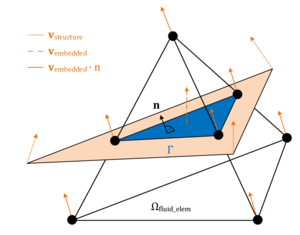

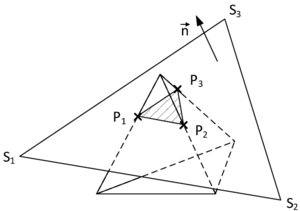

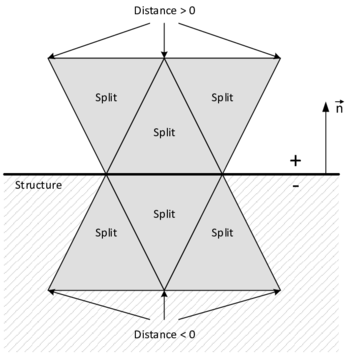

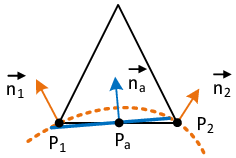

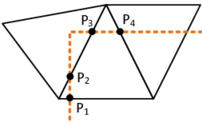

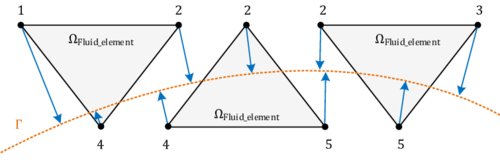

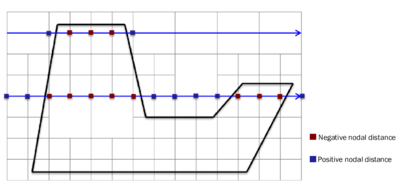

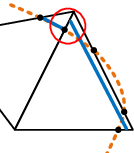

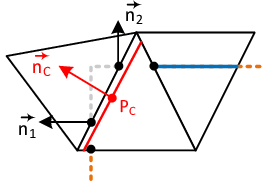

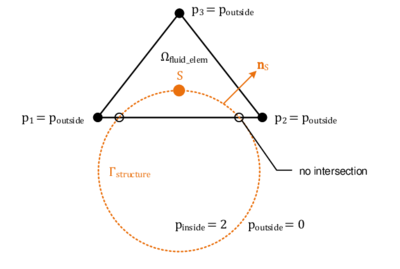

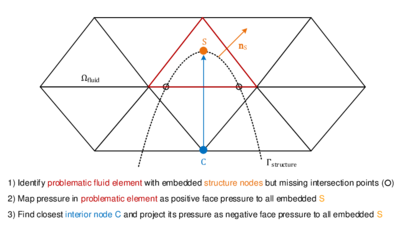

In the first case, i.e. for identifying the embedded interface, the idea is to use a signed distance function that assigns to each node of a cut fluid element a signed distance to the embedded structure. The sign is chosen according to the normal orientation of the structure, which allows us to distinguish numerically between the positive and the negative side of the structure. Figure 6 illustrates the concept for a 2D example. Given the signed distances at all nodes of a cut fluid element, the intersection points can be reconstructed and the actual embedded interface can be approximated by means of techniques introduced later.
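A minimal Python/NumPy sketch of this elemental step for a 2D triangle follows. It makes two simplifying assumptions that are not necessarily those of the implementation described in chapter 7. First, the distance is measured to the infinite line through one structure segment `a`-`b`. Second, the sign follows the segment normal obtained by rotating its tangent by 90 degrees. The intersection points are then recovered as the zero crossings of the linearly interpolated distance along each edge whose end nodes have opposite signs. Nodes with exactly zero distance are not treated specially here.

```python
import numpy as np

def elemental_signed_distances(nodes, a, b):
    """Signed distances of the element nodes to the line through the structure
    segment a-b; the sign follows the segment normal n = (-t_y, t_x)."""
    nodes, a, b = (np.asarray(v, dtype=float) for v in (nodes, a, b))
    t = b - a
    n = np.array([-t[1], t[0]]) / np.linalg.norm(t)
    return (nodes - a) @ n

def intersection_points(nodes, dist):
    """Zero crossings of the linearly interpolated distance along the triangle edges."""
    nodes = np.asarray(nodes, dtype=float)
    points = []
    for i, j in ((0, 1), (1, 2), (2, 0)):
        if dist[i] * dist[j] < 0.0:                  # sign change: the edge is cut
            s = dist[i] / (dist[i] - dist[j])
            points.append(nodes[i] + s * (nodes[j] - nodes[i]))
    return points

triangle = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
d = elemental_signed_distances(triangle, (-1.0, 0.25), (2.0, 0.25))
print(d)                                   # approximately [-0.25, -0.25, 0.75]
print(intersection_points(triangle, d))    # cut points (0.75, 0.25) and (0.0, 0.25)
```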

Figure 6: Concept of a distance function within a level set approach

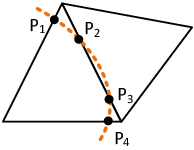

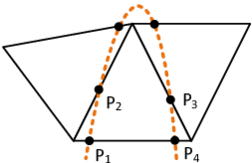

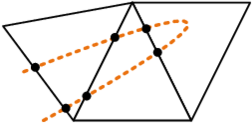

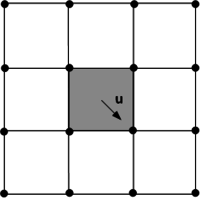

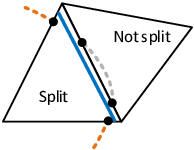

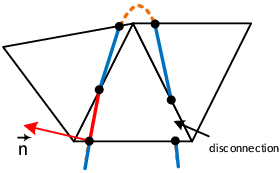

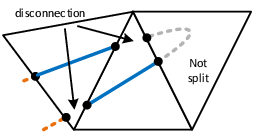

This procedure of computing signed distances and tagging the nodes of the cut element with the corresponding values is performed for each cut fluid element independently, as indicated in figure 7. That is, the signed distances of the first distance function are not nodal quantities like velocities or displacements, but elemental ones. Due to this modification of the original level set method, the approximation of the embedded interface becomes a purely local operation. Besides some computational advantages, this localization leads to an important characteristic of the first distance function. Since the distances are elemental quantities, different distances may be assigned to one physical node. In turn, the representation of the embedded structure is not necessarily continuous, as can be seen in figure 7.

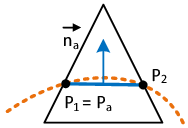

What might look very rough at first glance in fact has several advantages. The most important is the possibility to deal with several discontinuous structures within one fluid mesh, as illustrated in figure 8.

The figure shows a node that should have a positive distance with respect to the structure part in the red triangle but a negative one with respect to the part in the blue triangle. If the distance function were continuous and the distances were nodal quantities, this node could not describe both structure parts at once, although this is obviously necessary for a correct representation. With a discontinuous distance function, by contrast, the embedded structure in each of the highlighted triangles can be reconstructed exactly. This is because the distances are not stored at the nodes but belong to the individual elements. This makes the discontinuous approach very powerful: in principle, it is not restricted in terms of the intersection patterns that may arise across several elements, which is what makes it a truly embedded approach. Moreover, the computational advantages mentioned above stem precisely from this purely local operation. In such a framework, parallelizing the computation is straightforward and very effective, as we will see later.
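The difference between nodal and elemental storage can be sketched in Python with a plain dictionary keyed by element. The element names and node numbers below are hypothetical, modelled loosely on figure 8. A shared node simply carries one distance per element that contains it, so it may be positive in one element and negative in another. A single nodal value could not represent both.

```python
from collections import defaultdict

# Hypothetical data: two cut elements share node 2, but each element stores its
# own signed distances (element id -> {node id: signed distance}).
elemental_distances = {
    "red":  {0: -0.3, 1: -0.2, 2: +0.4},
    "blue": {2: -0.1, 3: +0.5, 4: +0.2},
}

def distances_per_node(elemental):
    """Collect, for every node, the distances stored by each element containing it."""
    per_node = defaultdict(list)
    for element, distances in elemental.items():
        for node, d in distances.items():
            per_node[node].append((element, d))
    return dict(per_node)

print(distances_per_node(elemental_distances)[2])   # [('red', 0.4), ('blue', -0.1)]
```

Because every element only reads its own entries, the elements can be processed independently, which is what makes the parallelization mentioned above straightforward.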

Figure 7: Elemental computation of distances

In a nutshell, the discontinuous distance function is needed to identify the embedded interface without any restriction to particular intersection patterns. For a voluminous structure, however, it may not be enough to “only” know the embedded interface. Typically it is also of interest which nodes of the fluid mesh lie inside the structure and which lie outside. This is particularly the case for inflatable structures, for which we may want to treat the enclosed fluid in the interior differently from the surrounding fluid. Therefore, another indicator distinguishing “inside” from “outside” is needed.

Figure 8: Need for discontinuity in an embedded approach

An obvious indicator for this distinction is again the sign of the computed distances. Unlike before, however, a nodal distance to the structure is needed, since each node is to be classified as either inside or outside. This is why a continuous rather than a discontinuous distance function is needed here. Details of its implementation follow in chapter 7. For now it is only important that this distance function computes a distance to the embedded structure for each node. It assigns the sign automatically by a technique based on what in computer graphics is called “ray tracing”. Following this terminology, the process of assigning the indicator to the individual nodes is typically referred to as “coloring”.

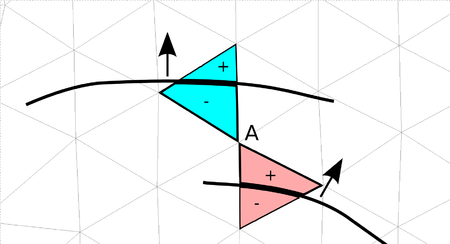

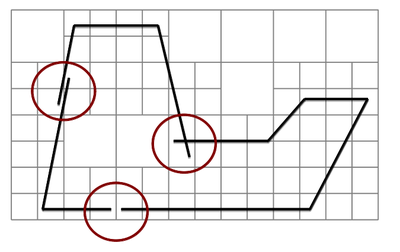

The underlying concept of this type of coloring is straightforward. In a chosen sequence, rays are “shot” through the fluid domain such that they start and end at nodes that by definition lie outside. Along their way, the rays assign every node they pass the indication “outside”, which we chose to be a positive sign of the respective nodal distance. Whenever a ray crosses a structure boundary, it switches its status and henceforth assigns the opposite indication. Thus, once a newly started ray has crossed a structure boundary, the nodes it passes are tagged with a negative sign, indicating that they belong to the interior. This is repeated until every fluid node has been touched by at least one ray. As a result, a fluid node has a negative distance to the structure if and only if it belongs to the interior of the structure, and a positive distance otherwise. Figure 9 illustrates the concept.
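For a simple 2D case, the coloring idea can be sketched in Python as follows. The sketch makes several assumptions of its own:

- the structure boundary is a closed polygon;
- one ray per row of nodes is shot in the +x direction, starting outside;
- no node lies exactly on the boundary.

The ray flips its status at every boundary crossing and only assigns the sign (+1 outside, -1 inside), not the distance magnitude. The robustness measures needed for the difficult cases of figure 10 are deliberately left out.

```python
def crossings(polygon, y):
    """Sorted x-coordinates where the horizontal ray at height y crosses the closed polygon."""
    xs = []
    n = len(polygon)
    for k in range(n):
        (x1, y1), (x2, y2) = polygon[k], polygon[(k + 1) % n]
        if (y1 > y) != (y2 > y):          # half-open rule: a vertex is counted only once
            xs.append(x1 + (y - y1) * (x2 - x1) / (y2 - y1))
    return sorted(xs)

def color_nodes(nodes, polygon):
    """Return +1 (outside) or -1 (inside) for each node by shooting one ray in +x
    direction along every row of nodes; each ray starts outside the structure."""
    rows = {}
    for x, y in nodes:
        rows.setdefault(y, []).append(x)
    colors = {}
    for y, xs in rows.items():
        cuts = crossings(polygon, y)
        inside, k = False, 0
        for x in sorted(xs):
            while k < len(cuts) and cuts[k] < x:
                inside = not inside       # crossing a structure boundary flips the status
                k += 1
            colors[(x, y)] = -1 if inside else +1
    return colors

square = [(1.0, 1.0), (3.0, 1.0), (3.0, 3.0), (1.0, 3.0)]
grid = [(x, y) for x in (0.5, 1.5, 2.5, 3.5) for y in (0.5, 1.5, 2.5, 3.5)]
colors = color_nodes(grid, square)
print(sorted(node for node, c in colors.items() if c < 0))
# [(1.5, 1.5), (1.5, 2.5), (2.5, 1.5), (2.5, 2.5)]
```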

Figure 9: Coloring by means of ray tracing

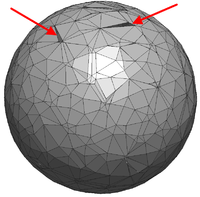

Unfortunately, the algorithm in its basic form only works for simple cases without model defects or similar challenges such as those shown in figure 10. A robust automatic coloring required additional implementations, which are not detailed here.

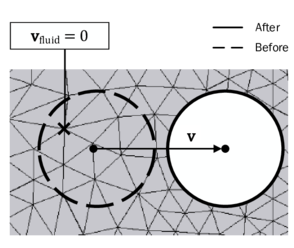

Given a robust coloring technique, we can finally apply arbitrary model assumptions to the different fluid domains, which are identified point-wise by positive or negative distances. Since in many applications the interior flow is not of interest, we simply deactivate the corresponding degrees of freedom by setting all velocities and pressures at nodes with negative distances to zero. In this way, these degrees of freedom are effectively excluded from the overall fluid solution.
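A minimal Python/NumPy sketch of this deactivation step is shown below, assuming the coloring is available as one sign per node. The function name and data are illustrative. It zeroes the velocity and pressure at interior nodes and returns a mask of the nodes that remain active. In an actual solver, the deactivated degrees of freedom would additionally have to be fixed or removed so that they do not enter the system of equations.

```python
import numpy as np

def deactivate_interior(colors, velocity, pressure):
    """Zero the velocity and pressure at interior nodes (negative color) and return
    a mask of the active nodes that remain in the fluid solution."""
    active = np.asarray(colors) > 0
    velocity = np.where(active[:, None], velocity, 0.0)
    pressure = np.where(active, pressure, 0.0)
    return velocity, pressure, active

colors = [+1, -1, +1]                          # hypothetical coloring of three nodes
v, p, active = deactivate_interior(colors, np.ones((3, 2)), np.array([1.0, 2.0, 3.0]))
print(p)                                       # [1. 0. 3.]
```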

In conclusion, using two different distance functions in combination with a robust coloring technique allows both voluminous structures and membrane or shell structures to be taken into account in an embedded environment. This holds irrespective of whether the embedded approach is applied in a CFD or an FSI context.

Figure 10: Challenges in coloring by means of ray tracing

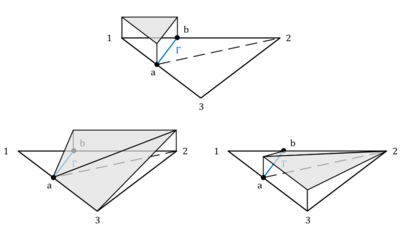

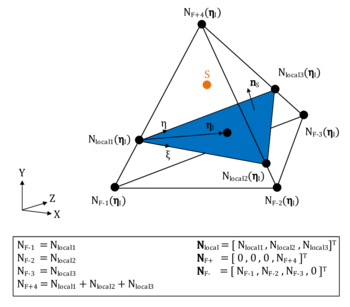

2.2.4.2 Element technology

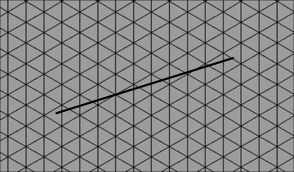

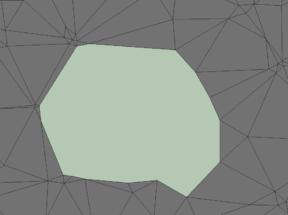

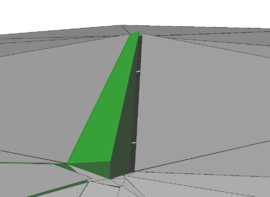

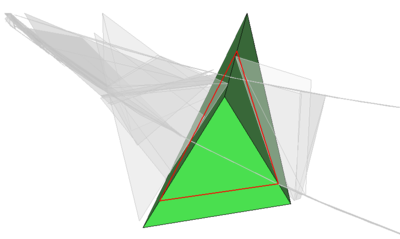

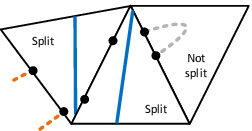

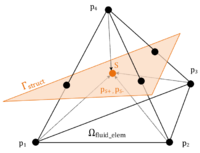

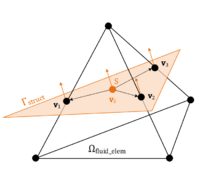

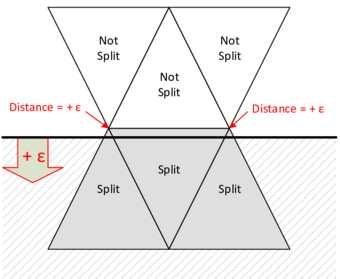

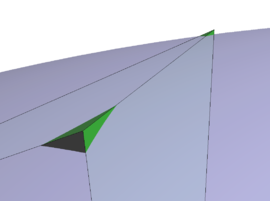

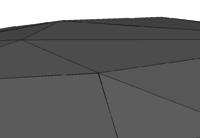

Given that the embedded structure is properly represented within the fluid domain by the techniques introduced in the previous section, we have to take care of the discontinuities at the embedded interface. To this end, the element formulation is modified for those elements that intersect the structure. Conceptually, the idea is to add nodes at the interface and to perform a local subdivision, as illustrated in figure 11.

To finally introduce the discontinuity, an obvious and easy approach would be to impose Dirichlet boundary conditions on the newly added nodes. Unfortunately, such an approach is far from robust, since the resulting mesh may become arbitrarily bad. Very small and distorted elements may appear, which can lead to severe ill-conditioning of the system.

Figure 11: Virtual subdivision of split element

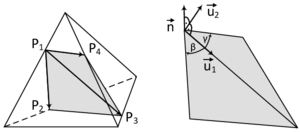

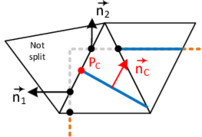

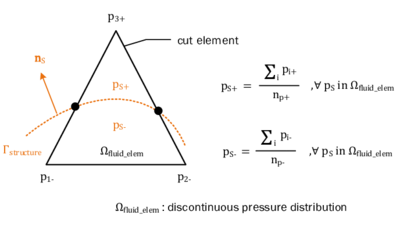

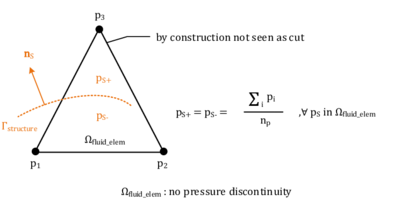

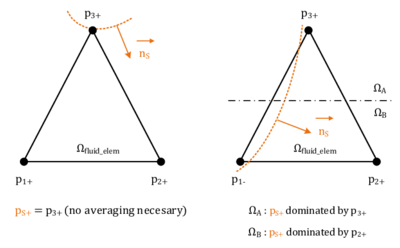

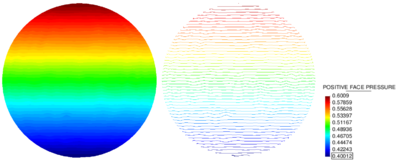

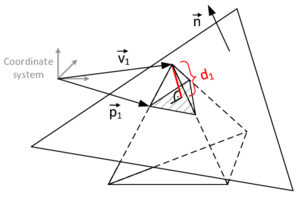

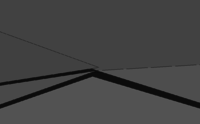

Therefore, a different approach was chosen. We first duplicate the nodes at the interface and divide the domain into two virtual blocks, each with two virtual nodes, as shown in figure 12. With the later application in mind, the two virtual blocks are referred to as the positive and the negative side of the split fluid element. As a result, quantities can be described independently on the positive side (such as a positive face pressure) and on the negative side (negative face pressure).

The key idea for solving the conditioning problem is not to give full freedom to the virtual nodes. Instead, the degrees of freedom associated with them are expressed as functions of the degrees of freedom of the actual fluid nodes on the respective side of the virtual domain. In our case, for instance, we impose that the variables on the interface satisfy the following constraints:

(2.33)

and

(2.34)

where the constrained variable stands for any degree of freedom and the arguments correspond to the node numbers. Figure 13 illustrates these constraints graphically.

Figure 12: Separation of domain by duplication of nodes

Figure 13: Constraints on virtual nodes in a discontinuous element formulation

As might be expected, the approach described above would require additional constraints to be introduced after the fluid domain has been discretized with finite elements. A better way is to incorporate these constraints by construction. This can be achieved with modified shape functions that span exactly the same space as standard finite element shape functions on the subdivided element subject to the constraints 2.33 and 2.34. In other words, the new nodes and constraints are accounted for directly.

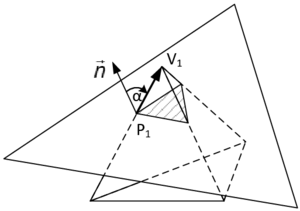

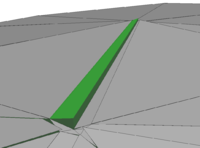

Functions with these characteristics were developed by Ausas et al. [10] for a different purpose, namely the representation of discontinuous pressures. The basic idea is as simple as it is effective. The shape functions of the split fluid element are constructed in the same way as for standard finite elements, but with two major differences: 1) each is defined on only one of the two separate virtual domains, and 2) each has a vanishing gradient along cut edges in 2D or intersected faces in 3D. An illustration is given in figure 14.
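The vertex values of such modified shape functions for a cut 2D triangle can be sketched in Python/NumPy. The sketch rests on an assumed Ausas-type constraint: a virtual node on a cut edge copies the value of the real node of that edge lying on the same side. The exact form of 2.33 and 2.34 is not reproduced here. For every vertex of the positive and negative sub-domains, the function returns the values of the three modified shape functions; inside each sub-triangle they would be interpolated linearly. Along a cut edge, a shape function then takes equal values at the real node and at its virtual copy, i.e. its gradient along that edge vanishes, while the values at every vertex still sum to one.

```python
import numpy as np

EDGES = ((0, 1), (1, 2), (2, 0))

def modified_vertex_values(coords, dist):
    """Vertices of the positive (+1) and negative (-1) sub-domains of a cut triangle,
    each paired with the values of the three modified shape functions there.
    Assumption: a virtual node on a cut edge takes the value of the real node of
    that edge lying on the same side."""
    coords = np.asarray(coords, dtype=float)
    side = [1 if d > 0 else -1 for d in dist]
    unit = np.eye(3)
    sides = {1: [], -1: []}
    for i in range(3):                                 # real nodes
        sides[side[i]].append((coords[i], unit[i]))
    for i, j in EDGES:                                 # duplicated (virtual) nodes
        if dist[i] * dist[j] < 0.0:
            s = dist[i] / (dist[i] - dist[j])
            x = coords[i] + s * (coords[j] - coords[i])
            sides[side[i]].append((x, unit[i]))        # copy of node i on its own side
            sides[side[j]].append((x, unit[j]))        # copy of node j on its own side
    return sides

sides = modified_vertex_values([(0, 0), (1, 0), (0, 1)], [-0.25, -0.25, 0.75])
for point, values in sides[1]:
    print(point, values)          # every vertex on the positive side carries [0, 0, 1]
```

In this example node 2 is isolated on the positive side, so its modified shape function is constant there and the other two vanish. This is the element-local discontinuity described above.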

Figure 14: Discontinuous shape functions

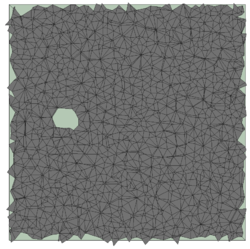

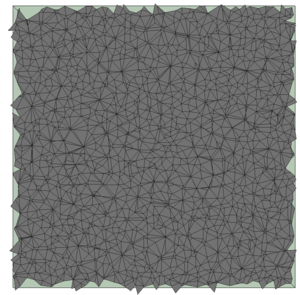

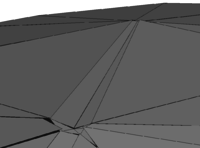

The numerical integration over the split fluid element is finally performed with standard Gauss integration. Separate Gauss points are introduced on each sub-triangle, and each sub-triangle is integrated independently, as highlighted in figure 15.
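A minimal Python/NumPy sketch of this sub-triangle integration follows. It uses the classical three-point Gauss rule on triangles (exact up to polynomial degree 2) on each sub-triangle and sums the contributions. The subdivision of a unit triangle cut at y = 0.25 is hypothetical example data: one triangle on the positive side, and a quadrilateral split into two triangles on the negative side. Since the sub-triangles tile the element exactly, the sum reproduces the exact integral of a quadratic function.

```python
import numpy as np

# Three-point Gauss rule on a triangle (exact up to polynomial degree 2): barycentric
# points (2/3, 1/6, 1/6) and permutations, each weighted with area / 3.
BARYCENTRIC = np.array([[2/3, 1/6, 1/6], [1/6, 2/3, 1/6], [1/6, 1/6, 2/3]])

def triangle_area(tri):
    (x0, y0), (x1, y1), (x2, y2) = tri
    return 0.5 * abs((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0))

def integrate_split_element(subtriangles, f):
    """Integrate f over a split element by separate Gauss integration on each sub-triangle."""
    total = 0.0
    for tri in subtriangles:
        tri = np.asarray(tri, dtype=float)
        gauss_points = BARYCENTRIC @ tri              # physical coordinates of the Gauss points
        weight = triangle_area(tri) / 3.0
        total += weight * sum(f(x, y) for x, y in gauss_points)
    return total

# Unit triangle cut at y = 0.25; the exact integral of x*y over the unit triangle is 1/24.
positive = [[(0.0, 0.25), (0.75, 0.25), (0.0, 1.0)]]
negative = [[(0.0, 0.0), (1.0, 0.0), (0.75, 0.25)], [(0.0, 0.0), (0.75, 0.25), (0.0, 0.25)]]
print(integrate_split_element(positive + negative, lambda x, y: x * y), 1 / 24)
```

Integrating the positive and negative lists separately gives the contributions of the two sides of the split element, which is what the modified shape functions require.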

Note that this is a purely local approach: the auxiliary nodes introduced on the interface are not needed, and are not even visible, in the computation of the overall system. In fact, with such a formulation all operations of the embedded approach are purely local, which is very advantageous for high-performance computing.

Note also that, with these modified shape functions, the finite element solution along edges or faces that are not cut remains unchanged. This is important because it allows these elements to be used together with standard finite elements in one model. In fact, the only thing that changes in the elements cut by the interface is the kinematic description used to reconstruct the different fields of interest.

Finally, by applying this modified shape function approach within an element, we have constructed an element that does not allow any flux across the embedded interface. This is exactly the discontinuity we wanted to implement.

Apart from this discontinuity, the modified shape functions are generally continuous across element boundaries. As elaborated later in this monograph, however, there are “intersection patterns” for which this no longer holds. The error introduced in these cases may nevertheless be an acceptable approximation.

Figure 15: Gauss integration in an embedded approach - The figure shows a separate integration for each sub-triangle as well as each side of the split fluid element

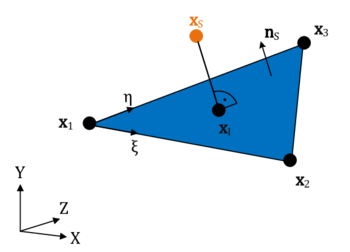

2.2.4.3 Velocity boundary conditions

Because of the constraints imposed on the virtual nodes, the shape functions described above have a zero gradient in the direction of each edge or face intersected by the structure. While this solves the ill-conditioning problem, it implies that the gradient of the shape functions normal to the embedded interface vanishes. Consequently, if this modified space is used to describe the velocities, the only suitable boundary condition, at least initially, is “slip”. This section explains how slip is imposed in an embedded setting. At its end, possibilities for introducing stick behavior at embedded walls are sketched briefly.

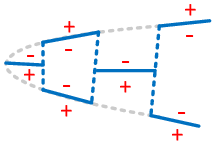

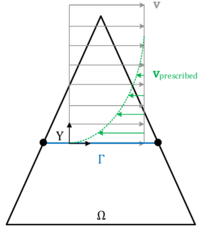

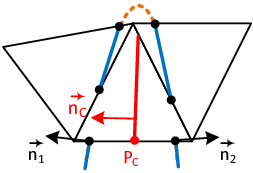

At the elemental level, velocities at the embedded interface are imposed weakly by integrating the weighted mass conservation equation 2.14.b by parts. Since the positive and negative sides of the cut fluid element have to be treated independently, we first split the integration domain into the positive and negative virtual subdomains and then perform the integration by parts. Furthermore, the resulting boundary terms are split into contributions from the “standard” element boundaries and from the embedded boundary, which together form the complete boundary of the cut fluid element:

(2.35)

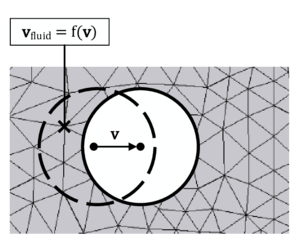

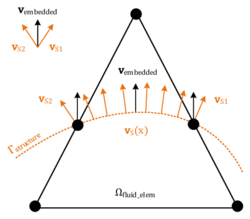

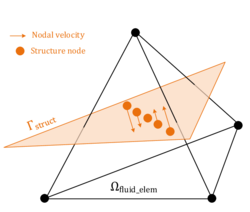

The volume integrals are evaluated using the Gauss integration for discontinuous fluid elements described in section 2.2.4.2. The embedded boundary, by contrast, represents the contact with the embedded structure and is consequently constrained by the structure's velocity. As we will see in chapter 8.3.3, however, it is difficult in practice to introduce a corresponding constraint into the fluid formulation, since the velocity of the structure is in general not constant along the embedded boundary. To significantly simplify the computation of the boundary integrals in 2.35, we therefore assume that the velocity is constant along the interface within a split fluid element. This constant velocity is referred to in the following as the “embedded velocity”. It is obtained by averaging or interpolating the given structural velocities inside the respective fluid element (see chapter 8.3.3 for details). Furthermore, values along intersected edges of the fluid element are constant, as explained in the previous section. Hence the embedded velocity is constant throughout the entire cut fluid element and acts equally at each fluid node. See figure 16 for an illustration.

Introducing this embedded velocity and having constant values along cut edges obviously makes a proper representation of the boundary layer very difficult. On the other hand, it has important advantages, for example when mapping physical quantities between the different domains, as we will see later. The embedded method discussed here was not initially designed for highly turbulent flows dominated by boundary layers. Moreover, in other cases the boundary layer is often not resolved anyway but modeled using wall functions. We therefore consider this a valid assumption.

Introducing the aforementioned assumptions into 2.35, we obtain for the embedded boundary of the fluid:

(2.36)

which means that inside the cut fluid element the fluid is free to slip along the embedded boundary. Its velocity normal to the embedded boundary, however, is prescribed by the velocity of the structure in the same direction¹. This corresponds exactly to the weak form of a slip boundary condition in a CFD simulation. Note that with this approach only the normal component of the embedded velocity is taken into account in the CFD solution.

The assumption of a constant embedded velocity can now be used to simplify the final computation of the integral in 2.36. The idea is similar to Gauss integration: each intersection node between structure and fluid (see figure 16) is assigned a part of the area of the embedded interface. Since the velocity of the structure is assumed to be constant along the embedded interface, the computation of the integral reduces to a simple multiplication of the form:

(2.37)

where each area weight describes the fraction of the overall area of the embedded boundary assigned to the respective intersection node. These weights are obtained by distributing the overall interface area among the intersection nodes based on simple geometric considerations. As already pointed out, this way of computing the integral is only valid under the assumption of a constant embedded velocity.
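For a straight interface segment in 2D, this area-weighted evaluation can be sketched in Python/NumPy. The sketch assumes that each of the two intersection nodes receives half of the segment length as its weight, and that the test function varies linearly along the segment. Under these assumptions the weighted sum equals the exact integral (it is the trapezoidal rule). Because the embedded velocity is constant, only its normal component enters the result. The function name and the example data are hypothetical.

```python
import numpy as np

def embedded_boundary_term(c1, c2, normal, u_emb, q1, q2):
    """Approximate the integral of q * (u_emb . n) over a straight 2D interface
    segment c1-c2: each intersection node gets half of the segment length as its
    weight, and the constant embedded velocity enters only through u_emb . n."""
    length = np.linalg.norm(np.asarray(c2, dtype=float) - np.asarray(c1, dtype=float))
    weights = (0.5 * length, 0.5 * length)
    u_n = float(np.dot(u_emb, normal))
    return (weights[0] * q1 + weights[1] * q2) * u_n

# Interface y = 0.25 of a cut unit triangle, normal (0, 1), linear test function q = 1 + 2x.
q = lambda x, y: 1.0 + 2.0 * x
c1, c2 = (0.0, 0.25), (0.75, 0.25)
value = embedded_boundary_term(c1, c2, (0.0, 1.0), (0.3, 2.0), q(*c1), q(*c2))
print(value)   # 2.625, the exact integral of (1 + 2x) * 2 for x in [0, 0.75]
```

Changing the tangential component of `u_emb` leaves the result unchanged, which reflects the slip character of the condition.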

At this point, the two major approximations introduced in this section are briefly recapped:

- By construction, the embedded approach so far assumes a slip boundary condition along the interface of the embedded structure.

- The velocity of the structure is considered constant within a single cut fluid element.

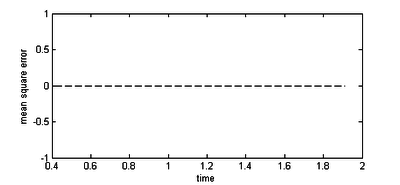

Both assumptions clearly lead to approximation errors, whose actual influence has to be tested. First investigations to this end follow in subsequent chapters. Here it shall only be emphasized that the errors due to the second assumption become negligibly small as the background fluid mesh is refined, whereas errors due to the restriction to slip conditions do not. An implementation of stick conditions in the given embedded approach is still part of ongoing development. For this reason, only the principal idea of the main approach is sketched at the end of this section.

A stick boundary condition may be introduced in the form:

(2.38)

where is the orthogonal distance to the wall. The latter may be computed from geometric considerations that can be done once the embedded structure is mathematically captured by the distance function. Given that , a simple wall law might read:

(2.39)

where a pseudo viscosity is introduced in the sense that a prescribed velocity is applied in the direction opposite to the given velocity field at the embedded boundary. The concept is illustrated in figure 17. Introducing wall laws within cut elements in this way is very promising and might be exploited further in future developments. The objective should be to enrich the embedded approach with wall laws capable of representing the boundary layer sufficiently well.

Figure 17: Introduction of a wall law to allow for stick boundary conditions on an embedded interface

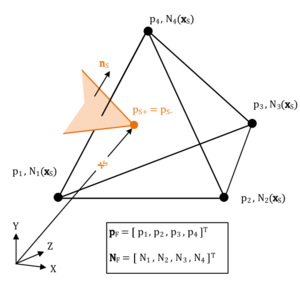

2.2.4.4 Pressure boundary conditions

Having applied a Dirichlet-type velocity boundary condition at the embedded interface within a cut fluid element to incorporate the motion of the structure, the fluid at the interface reacts immediately. It adjusts the pressure on the interface such that there is no flux through it, i.e. the discontinuity requirement is maintained. This pressure change in turn has to be applied as a Neumann boundary condition on the remaining fluid domain, which classically can be done in either a strong or a weak form. To see this, we recall the pressure term of the weighted NSE given in equation 2.13.a:

$$\int_{\Omega} \mathbf{w} \cdot \nabla p \, d\Omega \qquad (2.40)$$

Partial integration of this term yields:

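$$\int_{\Omega} \mathbf{w} \cdot \nabla p \, d\Omega = -\int_{\Omega} p \, \nabla \cdot \mathbf{w} \, d\Omega + \int_{\Gamma} p \, \mathbf{w} \cdot \mathbf{n} \, d\Gamma \qquad (2.41)$$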

In principle, this equation offers two possibilities. Either we prescribe the pressure in strong form by inserting it into the left-hand side of the equation and use the latter in the computation of the NSE. Or we use the integrated-by-parts formulation on the right-hand side and impose the pressure in weak form by introducing a traction in the corresponding boundary term, such that we obtain in total

(2.42)

where the boundary integral is taken over the Neumann boundary. Note that this changes the continuity requirements on the pressure. While the pressure needs to be continuous in 2.40, it can be discontinuous in 2.42, which clearly relaxes the requirements on the solution space. When computing the NSE, we therefore have to choose between the strong formulation 2.40 and the weak formulation 2.42 with its relaxed requirements.

Since the fractional step method used here is generally based on a strong imposition of pressure boundary conditions, we chose to impose the pressure boundary conditions at the embedded interface in strong form as well. This increases the accuracy² while at the same time lowering the computational effort³. It should nevertheless be emphasized that this win-win situation only arises with the fractional step method. It may look different in other cases, in which a weak imposition of the pressure boundary conditions might be inevitable. Within the given embedded method, pressures at embedded interfaces are thus imposed strongly in the form of 2.40.
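What "strong imposition" means algebraically can be illustrated with a deliberately simple Python/NumPy toy example. It is a 1D pressure Poisson problem with central differences, not the embedded fractional step solver. The boundary rows of the system are replaced by identity rows, so that each prescribed pressure is satisfied exactly at its node. No additional boundary integrals have to be evaluated, which corresponds to the two footnoted advantages. The function name and problem setup are assumptions made for illustration.

```python
import numpy as np

def solve_pressure_poisson(n, p_left, p_right):
    """Solve -p'' = 0 on n equidistant nodes (central differences) with the boundary
    pressures imposed strongly, i.e. by replacing the boundary rows with p_i = value."""
    A = np.zeros((n, n))
    b = np.zeros(n)
    for i in range(1, n - 1):
        A[i, i - 1:i + 2] = [-1.0, 2.0, -1.0]
    for i, value in ((0, p_left), (n - 1, p_right)):
        A[i, i] = 1.0               # the row now reads p_i = value
        b[i] = value
    return np.linalg.solve(A, b)

p = solve_pressure_poisson(5, 1.0, 3.0)
print(p)    # linear profile from 1.0 to 3.0
```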

(1) Note in this context the scalar product of the embedded velocity with the boundary or structure normal.

(2) Since the pressure boundary condition is fulfilled point-wise.

(3) Since no additional terms, as they occur in a weak formulation, need to be computed.

2.3 Computational structure mechanics

This section gives a basic but general overview of the differential equations of an elastic solid and their discretization in space and time. Extensive introductions to the topic are given by Malvern [2], Holzapfel [11] and Belytschko [12]. Another highly recommended work in this context is the classical FEM textbook by Zienkiewicz [13].

2.3.1 Governing equations

As already mentioned in table 1, the structure is mainly described by a Lagrangian description of motion. Based on this approach, the main equations are discussed in the following, which finally leads to the initial boundary-value problem of elasticity theory.

Kinematics

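Consider a deformed body in the current configuration, expressed by the coordinates $\mathbf{x}$. At any time $t$, it can be related to the reference configuration, expressed by the coordinates $\mathbf{X}$, by means of the displacement field $\mathbf{u}$:

$$
\mathbf{x}(\mathbf{X}, t) = \mathbf{X} + \mathbf{u}(\mathbf{X}, t) \tag{2.43}
$$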

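Accordingly, the velocity field $\mathbf{v}$ of the material particles follows as

$$
\mathbf{v}(\mathbf{X}, t) = \frac{\partial \mathbf{x}(\mathbf{X}, t)}{\partial t} = \frac{\partial \mathbf{u}(\mathbf{X}, t)}{\partial t} = \dot{\mathbf{u}} \tag{2.44}
$$

as well as the acceleration field $\mathbf{a}$

$$
\mathbf{a}(\mathbf{X}, t) = \frac{\partial \mathbf{v}(\mathbf{X}, t)}{\partial t} = \frac{\partial^{2} \mathbf{u}(\mathbf{X}, t)}{\partial t^{2}} = \ddot{\mathbf{u}} \tag{2.45}
$$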

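To describe the relation between both configurations, the deformation gradient $\mathbf{F}$ is introduced as a fundamental measure in continuum mechanics

$$
\mathbf{F} = \frac{\partial \mathbf{x}}{\partial \mathbf{X}}, \qquad \mathrm{d}\mathbf{x} = \mathbf{F}\,\mathrm{d}\mathbf{X} \tag{2.46}
$$

It thus maps a line element $\mathrm{d}\mathbf{X}$ of the reference configuration onto the corresponding line element $\mathrm{d}\mathbf{x}$ of the current configuration. The deformation gradient is not suitable as a strain measure, because it contains rigid-body rotations and equals the unit tensor rather than zero in the undeformed state. Therefore the non-linear Green-Lagrange strain tensor $\mathbf{E}$ is introduced, which is applicable to large deformations and vanishes in the undeformed state

$$
\mathbf{E} = \frac{1}{2}\left(\mathbf{F}^{T}\mathbf{F} - \mathbf{I}\right) \tag{2.47}
$$

where $\mathbf{I}$ denotes the unit tensor. The equation shows that the Green-Lagrange strain tensor refers to the undeformed configuration. There are also other strain measures, such as the Euler-Almansi strain tensor, which refers to the deformed configuration and contains the inverse of $\mathbf{F}$.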
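A quick numerical check of 2.47 shows why $\mathbf{F}$ itself is unsuitable as a strain measure. For a pure rigid-body rotation $\mathbf{F} \neq \mathbf{I}$, whereas $\mathbf{E}$ vanishes. The sketch below stores 3x3 tensors as plain nested lists. The helper names and the chosen rotation angle and stretch are illustrative assumptions.

```python
import math


def matmul(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(A):
    return [[A[j][i] for j in range(3)] for i in range(3)]


def green_lagrange(F):
    """Green-Lagrange strain E = 1/2 (F^T F - I), eq. 2.47."""
    C = matmul(transpose(F), F)  # right Cauchy-Green tensor
    return [[0.5 * (C[i][j] - (1.0 if i == j else 0.0)) for j in range(3)] for i in range(3)]


def rotation_z(theta):
    """Deformation gradient of a rigid-body rotation about the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


F_rot = rotation_z(math.radians(60.0))
E_rot = green_lagrange(F_rot)
print(max(abs(e) for row in E_rot for e in row) < 1e-12)  # True: no strain

F_stretch = [[1.1, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # 10 % uniaxial stretch
E_stretch = green_lagrange(F_stretch)
print(round(E_stretch[0][0], 6))  # 0.5 * (1.1**2 - 1) = 0.105
```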

Balance equations

The inertia forces, internal forces and external forces acting on a body in the current configuration are in equilibrium according to Cauchy's first equation of motion (balance of linear momentum):

$$
\rho\,\ddot{\mathbf{u}} = \operatorname{div}\boldsymbol{\sigma} + \rho\,\mathbf{b} \tag{2.48}
$$

Here, $\rho$ denotes the material density in the current configuration, $\boldsymbol{\sigma}$ the Cauchy stress tensor and $\mathbf{b}$ an acceleration field characterizing the external body force. This field equation is stated in strong or local form, indicating that it is fulfilled at every point of the current domain $\Omega$. Since we want to refer the set of equations to the reference configuration in the manner of the Total-Lagrangian formulation, the equilibrium equation is transformed to the reference configuration. To this end, the Cauchy stress tensor has to be rewritten with regard to the reference configuration, resulting in the second Piola-Kirchhoff stress tensor $\mathbf{S}$:

$$
\mathbf{S} = J\,\mathbf{F}^{-1}\,\boldsymbol{\sigma}\,\mathbf{F}^{-T}, \qquad J = \det\mathbf{F} \tag{2.49}
$$

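Then, the balance of linear momentum w.r.t. the reference configuration can be formulated as

$$
\rho_0\,\ddot{\mathbf{u}} = \operatorname{Div}\left(\mathbf{F}\mathbf{S}\right) + \rho_0\,\mathbf{b}_0 \tag{2.50}
$$

in which $\rho_0$ denotes the material density in the reference configuration and $\operatorname{Div}$ the divergence with respect to $\mathbf{X}$. The external body force $\mathbf{b}_0$ is now considered a function of the reference configuration

$$
\mathbf{b}_0(\mathbf{X}, t) = \mathbf{b}\left(\mathbf{x}(\mathbf{X}, t), t\right) \tag{2.51}
$$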

The symmetry of the second Piola-Kirchhoff stress tensor is expressed by Cauchy's second equation of motion (balance of angular momentum)

$$
\mathbf{S} = \mathbf{S}^{T} \tag{2.52}
$$

which likewise holds for the Cauchy stress tensor

$$
\boldsymbol{\sigma} = \boldsymbol{\sigma}^{T} \tag{2.53}
$$

For completeness, we also state the mass balance equation

$$
\rho_0 = J\,\rho, \qquad J = \det\mathbf{F} \tag{2.54}
$$

where the Jacobian determinant $J$ measures the ratio of an infinitesimally small volume element in the deformed configuration to the corresponding volume element in the undeformed configuration.
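The pull-back 2.49 and the mass balance 2.54 can be evaluated directly. The sketch below again uses nested lists and illustrative helper names. It computes $\mathbf{S} = J\,\mathbf{F}^{-1}\boldsymbol{\sigma}\,\mathbf{F}^{-T}$ and the reference density $\rho_0 = J\rho$ for an assumed uniaxial stretch, stress state and density. A symmetric $\boldsymbol{\sigma}$ yields a symmetric $\mathbf{S}$, in line with 2.52 and 2.53.

```python
def matmul(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(A):
    return [[A[j][i] for j in range(3)] for i in range(3)]


def cofactors(A):
    """Cofactor matrix of a 3x3 matrix (the cyclic index form already contains the signs)."""
    return [[A[(i + 1) % 3][(j + 1) % 3] * A[(i + 2) % 3][(j + 2) % 3]
             - A[(i + 1) % 3][(j + 2) % 3] * A[(i + 2) % 3][(j + 1) % 3]
             for j in range(3)] for i in range(3)]


def det3(A):
    cof = cofactors(A)
    return sum(A[0][j] * cof[0][j] for j in range(3))


def inv3(A):
    """Inverse = adjugate / determinant, where the adjugate is the transposed cofactor matrix."""
    d = det3(A)
    cof = cofactors(A)
    return [[cof[j][i] / d for j in range(3)] for i in range(3)]


def second_piola_kirchhoff(F, sigma):
    """Pull-back of the Cauchy stress: S = J F^-1 sigma F^-T with J = det F (eq. 2.49)."""
    J = det3(F)
    F_inv = inv3(F)
    S = matmul(matmul(F_inv, sigma), transpose(F_inv))
    return [[J * s for s in row] for row in S]


F = [[1.1, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]      # uniaxial stretch (assumed)
sigma = [[2.0, 0.5, 0.0], [0.5, 1.0, 0.0], [0.0, 0.0, 0.0]]  # symmetric Cauchy stress (assumed)
S = second_piola_kirchhoff(F, sigma)
rho = 1000.0               # current density (assumed value)
rho_0 = det3(F) * rho      # mass balance, eq. 2.54
```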

Constitutive equations

The constitutive equations establish a relation between the stress and the strain measure, thereby linking the reaction of the material to the applied loads. In this work we use materials that undergo large displacements but small strains, which suggests the St. Venant-Kirchhoff material model. It results in a linear relationship between the Green-Lagrange strain tensor and the second Piola-Kirchhoff stress tensor

$$
\mathbf{S} = \mathbb{C} : \mathbf{E} \tag{2.55}
$$

where $\mathbb{C}$ denotes the fourth-order elasticity tensor. Further assuming an isotropic elastic material, the stress-strain relationship reduces to

$$
\mathbf{S} = \lambda\,\operatorname{tr}(\mathbf{E})\,\mathbf{I} + 2\mu\,\mathbf{E} \tag{2.56}
$$

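where $\lambda$ and $\mu$ are the Lamé constants, which depend on the material-specific Young's modulus $E$ and Poisson's ratio $\nu$. In problems with small deformations, the difference between the deformed and the undeformed configuration can be neglected, so that the constitutive equation reduces to

$$
\boldsymbol{\sigma} = \lambda\,\operatorname{tr}(\boldsymbol{\varepsilon})\,\mathbf{I} + 2\mu\,\boldsymbol{\varepsilon}, \qquad \boldsymbol{\varepsilon} = \frac{1}{2}\left(\nabla\mathbf{u} + (\nabla\mathbf{u})^{T}\right) \tag{2.57}
$$

which describes linear elastic material behavior. Therein, $\boldsymbol{\varepsilon}$ denotes the linearized (infinitesimal) strain tensor.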
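The isotropic laws 2.56 and 2.57 have the same structure and differ only in the strain measure. The sketch below computes the Lamé constants from $E$ and $\nu$ with the standard relations $\lambda = E\nu/((1+\nu)(1-2\nu))$ and $\mu = E/(2(1+\nu))$. It then compares the St. Venant-Kirchhoff stress with the linear elastic stress for a small displacement gradient $\mathbf{H} = \partial\mathbf{u}/\partial\mathbf{X}$. The material values and $\mathbf{H}$ are assumptions. Since $\mathbf{E} = \boldsymbol{\varepsilon} + \frac{1}{2}\mathbf{H}^{T}\mathbf{H}$ for $\mathbf{F} = \mathbf{I} + \mathbf{H}$, the two stresses differ only by a term that is quadratic in $\mathbf{H}$.

```python
def lame_constants(young, nu):
    """Lame constants from Young's modulus and Poisson's ratio."""
    lam = young * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))
    mu = young / (2.0 * (1.0 + nu))
    return lam, mu


def isotropic_stress(strain, young, nu):
    """lam * tr(strain) * I + 2 * mu * strain (structure of eqs. 2.56 and 2.57)."""
    lam, mu = lame_constants(young, nu)
    tr = strain[0][0] + strain[1][1] + strain[2][2]
    return [[lam * tr * (1.0 if i == j else 0.0) + 2.0 * mu * strain[i][j]
             for j in range(3)] for i in range(3)]


def strains_from_gradient(H):
    """Return (linearized strain, Green-Lagrange strain) for F = I + H."""
    eps = [[0.5 * (H[i][j] + H[j][i]) for j in range(3)] for i in range(3)]
    HtH = [[sum(H[k][i] * H[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
    E = [[eps[i][j] + 0.5 * HtH[i][j] for j in range(3)] for i in range(3)]
    return eps, E


young, nu = 210e9, 0.3  # steel-like values, assumed for illustration
H = [[1e-4, 2e-4, 0.0], [0.0, -5e-5, 0.0], [0.0, 0.0, 0.0]]  # small displacement gradient
eps, E = strains_from_gradient(H)
sigma_lin = isotropic_stress(eps, young, nu)  # linear elastic, eq. 2.57
S_svk = isotropic_stress(E, young, nu)        # St. Venant-Kirchhoff, eq. 2.56
max_diff = max(abs(S_svk[i][j] - sigma_lin[i][j]) for i in range(3) for j in range(3))
rel_diff = max_diff / max(abs(s) for row in sigma_lin for s in row)
print(rel_diff < 1e-2)  # True: both laws nearly coincide for small strains
```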

Initial boundary value problem

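The kinematic relation 2.47, the balance of momentum 2.50 and the constitutive equation 2.55 hold throughout the entire domain $\Omega_0$, whose initial state is defined by a prescribed displacement field $\hat{\mathbf{u}}_0$ and velocity field $\hat{\mathbf{v}}_0$

$$
\mathbf{u}(\mathbf{X}, t_0) = \hat{\mathbf{u}}_0(\mathbf{X}), \qquad \dot{\mathbf{u}}(\mathbf{X}, t_0) = \hat{\mathbf{v}}_0(\mathbf{X}) \qquad \text{in } \Omega_0 \tag{2.58}
$$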

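The domain is bounded by the boundary $\Gamma_0$, along which boundary conditions have to be defined at all times. At every point of the boundary, either the state variables themselves (Dirichlet boundary conditions) or their derivatives (Neumann boundary conditions) have to be prescribed

$$
\mathbf{u} = \hat{\mathbf{u}} \qquad \text{on } \Gamma_D \tag{2.59}
$$

$$
(\mathbf{F}\mathbf{S})\,\mathbf{n}_0 = \hat{\mathbf{t}}_0 \qquad \text{on } \Gamma_N \tag{2.60}
$$

Here, $\mathbf{n}_0$ denotes the outward unit normal on the Neumann boundary. $\Gamma_D$ and $\Gamma_N$ do not overlap and jointly cover the complete boundary, i.e. $\Gamma_D \cap \Gamma_N = \emptyset$ and $\Gamma_D \cup \Gamma_N = \Gamma_0$.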

2.3.2 Discretization

In general, the strong form of the momentum balance cannot be solved analytically, so discretization techniques are required to find an approximate solution. This section discusses the methods applied for the discretization in space and time.

2.3.2.1 Spatial discretization

The Finite Element Method is used for the spatial discretization. The idea is to introduce a finite number of nodes throughout the domain at which the displacement field is approximated. Between the nodes, the field is interpolated by means of shape functions, e.g. Lagrange polynomials. The Finite Element Method does not solve the strong form but the weak formulation of the differential equation, which can be derived from integral principles, more precisely the principle of virtual work. This principle states that if a domain in equilibrium is subjected to an admissible, infinitesimally small virtual displacement $\delta\mathbf{u}$, the generated virtual work has to vanish (i.e. $\delta W = 0$).
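The interpolation by shape functions can be illustrated in one dimension. The sketch below evaluates Lagrange shape functions of arbitrary order in a natural coordinate $\xi \in [-1, 1]$ and interpolates nodal values with them. The node positions and the test field are assumptions chosen for the example. For any set of distinct nodes, the shape functions satisfy the Kronecker-delta property $N_i(\xi_j) = \delta_{ij}$ and the partition of unity $\sum_i N_i = 1$.

```python
def lagrange_shape_functions(xi, nodes):
    """Values N_i(xi) of the 1D Lagrange polynomials through the given nodes."""
    values = []
    for i, xi_i in enumerate(nodes):
        n_i = 1.0
        for j, xi_j in enumerate(nodes):
            if j != i:
                n_i *= (xi - xi_j) / (xi_i - xi_j)
        values.append(n_i)
    return values


def interpolate(xi, nodes, nodal_values):
    """Field value u(xi) = sum_i N_i(xi) * u_i."""
    return sum(n * u for n, u in zip(lagrange_shape_functions(xi, nodes), nodal_values))


quadratic_nodes = [-1.0, 0.0, 1.0]         # 3-node (quadratic) element
u_nodes = [x**2 for x in quadratic_nodes]  # nodal values of the field u = xi^2
print(interpolate(0.5, quadratic_nodes, u_nodes))  # 0.25, reproduced exactly
```

A quadratic element reproduces any quadratic field exactly. A linear (2-node) element would only be exact for linear fields.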

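Applying the principle of virtual work to the strong form 2.50 leads to the following equation in the Total-Lagrangian formulation, in which the structure is considered w.r.t. the reference configuration (characterized by the undeformed domain $\Omega_0$ and the undeformed boundary $\Gamma_0$)

$$
\delta W = \delta W_{\mathrm{dyn}} + \delta W_{\mathrm{int}} + \delta W_{\mathrm{ext}} = 0 \tag{2.61}
$$

with

$$
\delta W_{\mathrm{dyn}} = \int_{\Omega_0} \rho_0\,\ddot{\mathbf{u}} \cdot \delta\mathbf{u}\,\mathrm{d}\Omega_0 \tag{2.62}
$$

$$
\delta W_{\mathrm{int}} = \int_{\Omega_0} \mathbf{S} : \delta\mathbf{E}\,\mathrm{d}\Omega_0 \tag{2.63}
$$

$$
\delta W_{\mathrm{ext}} = -\int_{\Omega_0} \rho_0\,\mathbf{b}_0 \cdot \delta\mathbf{u}\,\mathrm{d}\Omega_0 - \int_{\Gamma_N} \hat{\mathbf{t}}_0 \cdot \delta\mathbf{u}\,\mathrm{d}\Gamma_0 \tag{2.64}
$$

This set of equations expresses that the sum of the virtual work of the inertia forces $\delta W_{\mathrm{dyn}}$, the internal forces $\delta W_{\mathrm{int}}$ and the external forces $\delta W_{\mathrm{ext}}$ vanishes.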

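Introducing the concept of Finite Elements, the displacement $\mathbf{u}$ as well as the material coordinate $\mathbf{X}$ at any location within an element can be described with the matrix of shape functions $\mathbf{N}$, based on the nodal displacements $\mathbf{d}$ and the nodal coordinates $\mathbf{X}_e$, respectively:

$$
\mathbf{u} \approx \mathbf{N}\,\mathbf{d}, \qquad \delta\mathbf{u} \approx \mathbf{N}\,\delta\mathbf{d}, \qquad \mathbf{X} = \mathbf{N}\,\mathbf{X}_e \tag{2.65}
$$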

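Based on this approximation and assuming small deformations and rotations, the strain-displacement relation (derived from equation 2.47) can be written in Voigt notation as

$$
\boldsymbol{\varepsilon} = \mathbf{L}\,\mathbf{u} \approx \mathbf{L}\,\mathbf{N}\,\mathbf{d} = \mathbf{B}\,\mathbf{d} \tag{2.66}
$$

where $\mathbf{L}$ is the differential operator mapping displacements to strains, which can be reviewed in the recommended literature, and $\mathbf{B} = \mathbf{L}\mathbf{N}$ denotes the strain-displacement matrix. Substituting these equations into the weak form, we finally obtain the element mass matrix $\mathbf{M}_e$ and the element force vectors $\mathbf{f}_{\mathrm{int}}^{e}$ and $\mathbf{f}_{\mathrm{ext}}^{e}$ for an arbitrary finite element $e$ in the Total-Lagrangian formulation, with $\mathbf{S}$ written in Voigt notation:

$$
\mathbf{M}_e = \int_{\Omega_0^e} \rho_0\,\mathbf{N}^{T}\mathbf{N}\,\mathrm{d}\Omega_0, \qquad \mathbf{f}_{\mathrm{int}}^{e} = \int_{\Omega_0^e} \mathbf{B}^{T}\mathbf{S}\,\mathrm{d}\Omega_0 \tag{2.67}
$$

$$
\mathbf{f}_{\mathrm{ext}}^{e} = \int_{\Omega_0^e} \rho_0\,\mathbf{N}^{T}\mathbf{b}_0\,\mathrm{d}\Omega_0 + \int_{\Gamma_N^e} \mathbf{N}^{T}\hat{\mathbf{t}}_0\,\mathrm{d}\Gamma_0 \tag{2.68}
$$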

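In an Updated-Lagrangian formulation, which considers the system in the deformed state, the shape functions are functions of the current configuration $\mathbf{x}$. Further, the integration has to be performed over the current domain $\Omega$ and the current boundary $\Gamma$, and the Cauchy stress $\boldsymbol{\sigma}$ takes the place of $\mathbf{S}$:

$$
\mathbf{M}_e = \int_{\Omega^e} \rho\,\mathbf{N}^{T}(\mathbf{x})\,\mathbf{N}(\mathbf{x})\,\mathrm{d}\Omega, \qquad \mathbf{f}_{\mathrm{int}}^{e} = \int_{\Omega^e} \mathbf{B}^{T}(\mathbf{x})\,\boldsymbol{\sigma}\,\mathrm{d}\Omega \tag{2.69}
$$

$$
\mathbf{f}_{\mathrm{ext}}^{e} = \int_{\Omega^e} \rho\,\mathbf{N}^{T}(\mathbf{x})\,\mathbf{b}\,\mathrm{d}\Omega + \int_{\Gamma_N^e} \mathbf{N}^{T}(\mathbf{x})\,\hat{\mathbf{t}}\,\mathrm{d}\Gamma \tag{2.70}
$$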

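The integrals on element level are usually approximated by Gaussian quadrature (see e.g. [13]). Assembling the contributions of all elements, we end up with the semi-discrete problem [13]

$$
\mathbf{M}\,\ddot{\mathbf{d}} + \mathbf{f}_{\mathrm{int}}(\mathbf{d}) = \mathbf{f}_{\mathrm{ext}} \tag{2.71}
$$

with

$$
\mathbf{M} = \mathop{\mathsf{A}}_{e=1}^{n_{\mathrm{el}}} \mathbf{M}_e, \qquad \mathbf{f}_{\mathrm{int}} = \mathop{\mathsf{A}}_{e=1}^{n_{\mathrm{el}}} \mathbf{f}_{\mathrm{int}}^{e}, \qquad \mathbf{f}_{\mathrm{ext}} = \mathop{\mathsf{A}}_{e=1}^{n_{\mathrm{el}}} \mathbf{f}_{\mathrm{ext}}^{e} \tag{2.72}
$$

where $\mathsf{A}$ denotes the assembly operator over all $n_{\mathrm{el}}$ elements, $\mathbf{M}$ is the square, symmetric and sparse mass matrix, $\mathbf{f}_{\mathrm{int}}$ the internal force vector and $\mathbf{f}_{\mathrm{ext}}$ the external force vector.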
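To make the element integrals and the assembly concrete, the sketch below treats the simplest possible case: an assumed 1D linear elastic bar discretized with two-node elements. For this bar the small-deformation internal force reduces to $\mathbf{f}_{\mathrm{int}} = \mathbf{K}\mathbf{d}$. The element mass and stiffness matrices are integrated with two-point Gaussian quadrature, which is exact here because the integrands are at most quadratic in $\xi$. Dense lists keep the example short; a real implementation would store $\mathbf{M}$ and $\mathbf{K}$ in a sparse format. All names and values are illustrative.

```python
import math

# two-point Gauss rule on [-1, 1]: (point, weight)
GAUSS_2 = ((-1.0 / math.sqrt(3.0), 1.0), (1.0 / math.sqrt(3.0), 1.0))


def bar_element_matrices(x1, x2, rho, area, young):
    """Consistent mass and stiffness matrices of a linear 2-node bar element."""
    h = x2 - x1
    jac = h / 2.0  # dx/dxi
    Me = [[0.0, 0.0], [0.0, 0.0]]
    Ke = [[0.0, 0.0], [0.0, 0.0]]
    for xi, w in GAUSS_2:
        N = (0.5 * (1.0 - xi), 0.5 * (1.0 + xi))  # shape functions
        B = (-1.0 / h, 1.0 / h)                   # dN/dx (strain-displacement "matrix")
        for a in range(2):
            for b in range(2):
                Me[a][b] += rho * area * N[a] * N[b] * jac * w
                Ke[a][b] += young * area * B[a] * B[b] * jac * w
    return Me, Ke


def assemble(coords, rho, area, young):
    """Assemble global M and K by adding each element matrix to its node pair."""
    n = len(coords)
    M = [[0.0] * n for _ in range(n)]
    K = [[0.0] * n for _ in range(n)]
    for e in range(n - 1):
        Me, Ke = bar_element_matrices(coords[e], coords[e + 1], rho, area, young)
        dofs = (e, e + 1)
        for a in range(2):
            for b in range(2):
                M[dofs[a]][dofs[b]] += Me[a][b]
                K[dofs[a]][dofs[b]] += Ke[a][b]
    return M, K


M, K = assemble([0.0, 0.5, 1.0], rho=2.0, area=1.0, young=10.0)
total_mass = sum(sum(row) for row in M)  # equals rho * area * length = 2.0
```

Two quick consistency checks follow from the construction. The entries of the consistent mass matrix sum to the total mass, and each row of $\mathbf{K}$ sums to zero because a rigid-body translation produces no internal force.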

2.3.2.2 Time discretization

The time discretization is performed with the second-order Newmark-Bossak scheme [14], which is briefly introduced here. We concentrate on the Updated-Lagrangian formulation, in which the reference configuration is updated at each time step. Hence, the current configuration at time step $t_{n+1}$ is computed based on the reference configuration at time step $t_n$.

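In the Newmark scheme [15], the set of unknown variables in equation 2.71 is reduced to the displacements. This implies that the velocity and the acceleration have to be expressed as functions of the displacements $\mathbf{d}_{n+1}$ at time step $t_{n+1}$:

$$
\dot{\mathbf{d}}_{n+1} = \frac{\gamma}{\beta\,\Delta t}\left(\mathbf{d}_{n+1} - \mathbf{d}_n\right) - \left(\frac{\gamma}{\beta} - 1\right)\dot{\mathbf{d}}_n - \Delta t\left(\frac{\gamma}{2\beta} - 1\right)\ddot{\mathbf{d}}_n \tag{2.73}
$$

$$
\ddot{\mathbf{d}}_{n+1} = \frac{1}{\beta\,\Delta t^{2}}\left(\mathbf{d}_{n+1} - \mathbf{d}_n\right) - \frac{1}{\beta\,\Delta t}\dot{\mathbf{d}}_n - \left(\frac{1}{2\beta} - 1\right)\ddot{\mathbf{d}}_n \tag{2.74}
$$

Here, $\Delta t = t_{n+1} - t_n$ is the time step size, and $\beta$ and $\gamma$ are constants which control the order of accuracy and the numerical stability. They can be chosen e.g. according to [15]. In the Bossak variant [14], the inertia term is additionally evaluated as a weighted combination of the accelerations at $t_n$ and $t_{n+1}$ with the Bossak parameter $\alpha_B$. A common choice is then $\beta = \frac{1}{4}(1-\alpha_B)^2$ and $\gamma = \frac{1}{2} - \alpha_B$. Inserting equation (2.74) into the semi-discrete differential equation (2.71) yields

$$
\mathbf{M}\left[(1 - \alpha_B)\,\ddot{\mathbf{d}}_{n+1} + \alpha_B\,\ddot{\mathbf{d}}_n\right] + \mathbf{f}_{\mathrm{int}}(\mathbf{d}_{n+1}) = \mathbf{f}_{\mathrm{ext},n+1} \tag{2.75}
$$

in which $\ddot{\mathbf{d}}_{n+1}$ is given by 2.74 as a function of $\mathbf{d}_{n+1}$. The internal force vector is typically given in the form

$$
\mathbf{f}_{\mathrm{int}}(\mathbf{d}_{n+1}) = \mathbf{K}(\mathbf{d}_{n+1})\,\mathbf{d}_{n+1}
$$

where $\mathbf{K}$ is the global stiffness matrix. Since $\mathbf{K}$ depends on the displacements, equation (2.75) is a non-linear equation system for the unknown displacements $\mathbf{d}_{n+1}$, which can be solved iteratively, e.g. with the Newton-Raphson method.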
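The time-stepping procedure can be sketched for a single degree of freedom with an assumed, possibly non-linear internal force $f_{\mathrm{int}}(d)$. Each step expresses the new acceleration through the new displacement (as in 2.74), forms the residual of the Bossak-modified equation of motion (as in 2.75) and drives it to zero with Newton-Raphson. The sketch uses $\beta = \frac{1}{4}(1-\alpha_B)^2$ and $\gamma = \frac{1}{2} - \alpha_B$. Function names, the test oscillators and all parameter values are illustrative assumptions. For $\alpha_B = 0$ the scheme reduces to the classical average-acceleration Newmark method.

```python
import math


def newmark_bossak_sdof(m, f_int, k_tan, f_ext, d0, v0, dt, n_steps,
                        alpha_b=-0.1, tol=1e-10, max_iter=25):
    """Integrate m*a + f_int(d) = f_ext(t) with the Newmark-Bossak scheme.

    f_int(d): internal force, k_tan(d): its derivative (tangent stiffness),
    f_ext(t): external force. Returns the displacements d_0 ... d_N.
    """
    beta = 0.25 * (1.0 - alpha_b) ** 2
    gamma = 0.5 - alpha_b
    d, v = d0, v0
    a = (f_ext(0.0) - f_int(d0)) / m  # initial acceleration from equilibrium
    history = [d]

    def accel(d_new):
        # Newmark acceleration as a function of the new displacement
        return ((d_new - d) / (beta * dt ** 2) - v / (beta * dt)
                - (0.5 / beta - 1.0) * a)

    for n in range(n_steps):
        t_new = (n + 1) * dt
        d_new = d  # predictor: displacement of the previous step
        for _ in range(max_iter):
            # residual of the Bossak-modified equation of motion
            r = (f_ext(t_new) - m * ((1.0 - alpha_b) * accel(d_new) + alpha_b * a)
                 - f_int(d_new))
            if abs(r) < tol:
                break
            # Newton-Raphson update with the effective tangent stiffness
            d_new += r / (m * (1.0 - alpha_b) / (beta * dt ** 2) + k_tan(d_new))
        a_new = accel(d_new)
        v = v + dt * ((1.0 - gamma) * a + gamma * a_new)
        d, a = d_new, a_new
        history.append(d)
    return history


omega = 2.0 * math.pi  # assumed linear oscillator with a period of 1 s
linear = newmark_bossak_sdof(
    m=1.0, f_int=lambda d: omega**2 * d, k_tan=lambda d: omega**2,
    f_ext=lambda t: 0.0, d0=1.0, v0=0.0, dt=0.01, n_steps=100)
print(abs(linear[-1] - math.cos(omega * 1.0)) < 1e-2)  # True: close to the exact solution

# assumed hardening (Duffing-type) spring: here Newton needs several iterations per step
duffing = newmark_bossak_sdof(
    m=1.0, f_int=lambda d: omega**2 * d + 50.0 * d**3,
    k_tan=lambda d: omega**2 + 150.0 * d**2,
    f_ext=lambda t: 0.0, d0=1.0, v0=0.0, dt=0.01, n_steps=100)
```

For the linear spring, Newton-Raphson converges in a single iteration, because the residual is linear in $d_{n+1}$.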

3 Fluid-structure interaction

Up to now, we have considered structure and fluid to be independent and restricted ourselves to their single-field solutions. In engineering practice, however, both mechanical systems are often tightly coupled and hence need to be combined in a global model whose interaction is simulated by dedicated solution procedures. As a result of intensive research and development during the last decades, powerful and efficient technologies are now available. This makes it increasingly attractive to incorporate interaction phenomena into the classical single-field analysis. The expectation is to gain a more profound understanding of complex fluid-structure systems in which the coupling plays an important role, such as lightweight structures in a CFD context. This chapter provides the relevant theoretical background for such a coupled fluid-structure analysis.

The chapter is organized in three parts. In the first part, the coupling conditions are introduced, i.e. it is briefly discussed what it formally means to couple a fluid-mechanical with a structure-mechanical problem. In the second part, different possibilities for the mechanical formulation of the global FSI problem are presented. A brief overview of possible approaches is given, but the focus is on the embedded and the Arbitrary Lagrangian-Eulerian approach. Finally, the relevant solution procedures are discussed in more detail. To this end, both the monolithic and the partitioned approach are discussed, together with the question of how to get from the system of the former to that of the latter and which possibilities and drawbacks each of them has. The focus here is on the partitioned approach. In this context, the numerical problems related to the artificial added-mass effect are introduced, and a stabilization method for them is presented at the end of the chapter.

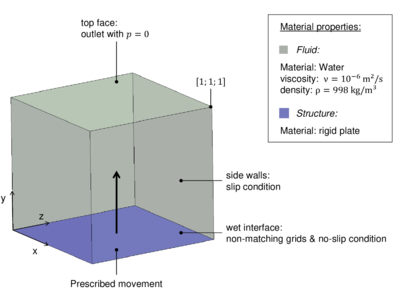

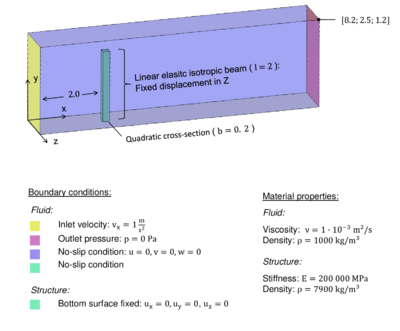

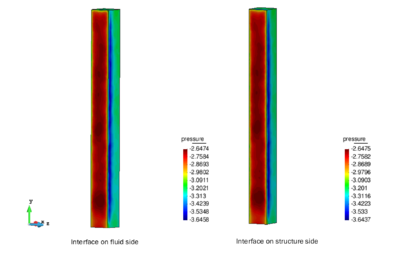

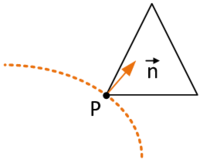

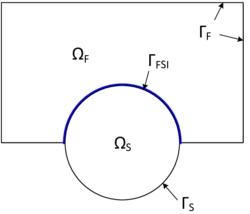

3.1 Coupling conditions of the FSI problem

In the previous chapter, the fluid and the structure have been considered as separate fields which do not interact with each other. To account for a strong coupling between the fluid domain $\Omega_F$ and the structure domain $\Omega_S$, the coupling conditions have to be fulfilled at the coupling interface, which is defined as the shared boundary $\Gamma_I = \Gamma_F \cap \Gamma_S$. The notation is illustrated in figure 18.

|

| Figure 18: FSI coupling interface - The fluid domain $\Omega_F$ with the boundary $\Gamma_F$ and the structure domain $\Omega_S$ with the boundary $\Gamma_S$ share the FSI interface $\Gamma_I$. |

On the one hand, fluid particles are not allowed to cross the shared interface, which enforces a kinematic condition depending on the applied fluid model [5]. If the viscosity of the fluid cannot be neglected (viscous fluid), a "no-slip" condition can be defined at the interface as follows

$$
\mathbf{u}_F = \mathbf{u}_S \qquad \text{on } \Gamma_I \tag{3.1}
$$

$$
\mathbf{v}_F = \mathbf{v}_S \qquad \text{on } \Gamma_I \tag{3.2}
$$

These equations express the continuity of displacements and velocities across the interface. Physically, this means that the fluid particles adjacent to the interface perform the same movement as the particles of the structure. Depending on which formulation, and therefore which coupling variable (displacement or velocity), is chosen, only one of the two equations is applied, since they are equivalent in their physical meaning. If viscous effects of the fluid can be neglected, a "slip" condition needs to be defined instead. This results in the following relations

$$
\mathbf{u}_F \cdot \mathbf{n} = \mathbf{u}_S \cdot \mathbf{n} \qquad \text{on } \Gamma_I \tag{3.3}
$$

$$
\mathbf{v}_F \cdot \mathbf{n} = \mathbf{v}_S \cdot \mathbf{n} \qquad \text{on } \Gamma_I \tag{3.4}
$$

which describe the continuity of displacements and velocities perpendicular to the interface, with $\mathbf{n}$ denoting the interface normal.

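On the other hand, there are dynamic conditions which the fluid and the structure have to comply with at the interface:

$$
\boldsymbol{\sigma}_S\,\mathbf{n}_S = -\boldsymbol{\sigma}_F\,\mathbf{n}_F \qquad \text{on } \Gamma_I \tag{3.5}
$$

Here, $\boldsymbol{\sigma}_F$ and $\boldsymbol{\sigma}_S$ are the fluid and structure stress tensors, and $\mathbf{n}_F = -\mathbf{n}_S$ are the outward normals of the respective domains. These conditions guarantee that the surface traction vectors along the interface are in equilibrium.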
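In a coupled solver, the coupling conditions can be monitored as interface residuals. The sketch below evaluates them at a single interface point for given fluid and structure quantities. The function names and the 2D test values are assumptions for illustration. The example shows that a purely tangential velocity mismatch violates the no-slip condition but satisfies the slip condition, which only constrains the normal component.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def mat_vec(A, x):
    return [dot(row, x) for row in A]


def no_slip_residual(v_fluid, v_struct):
    """No-slip condition: the full velocity vectors must coincide."""
    return [vf - vs for vf, vs in zip(v_fluid, v_struct)]


def slip_residual(v_fluid, v_struct, normal):
    """Slip condition: only the normal velocity components must coincide."""
    return dot(v_fluid, normal) - dot(v_struct, normal)


def traction_residual(sigma_f, n_f, sigma_s, n_s):
    """Dynamic condition (3.5): sigma_s n_s + sigma_f n_f = 0 on the interface."""
    return [ts + tf for ts, tf in zip(mat_vec(sigma_s, n_s), mat_vec(sigma_f, n_f))]


n_s = [0.0, 1.0]                   # outward normal of the structure
n_f = [-c for c in n_s]            # outward normal of the fluid, n_F = -n_S
v_f, v_s = [0.3, 0.0], [0.0, 0.0]  # purely tangential velocity mismatch
print(no_slip_residual(v_f, v_s))    # [0.3, 0.0] -> no-slip violated
print(slip_residual(v_f, v_s, n_s))  # 0.0 -> slip satisfied

p = 2.0                           # assumed fluid pressure
sigma_f = [[-p, 0.0], [0.0, -p]]  # inviscid fluid stress -p I
sigma_s = [[-p, 0.0], [0.0, -p]]  # assumed structure stress in equilibrium with it
print(traction_residual(sigma_f, n_f, sigma_s, n_s))  # [0.0, 0.0]
```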

3.2 Formulation of the FSI problem

A key question in approaching FSI problems is how to formulate the material motion in the fluid and the structure field. In the last decades, many different formulation methods have been proposed, each of which has advantages and drawbacks when applied to certain physical problems. In this monograph we focus on two fundamentally different approaches, the ALE method and the embedded method. In the first part of this chapter, however, we relate these methods to the general context of FSI formulation methods. Afterwards, the ALE approach is discussed in detail, whereas the embedded method was already treated extensively in chapter 2.2.4.

3.2.1 Two principal formulation methods

A very detailed and comprehensive overview of formulation methods in general can be found e.g. in [16], [17] and [18] and in the references therein.

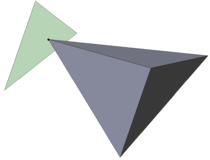

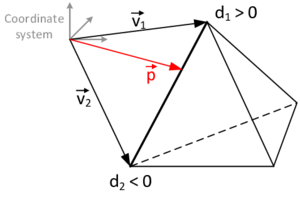

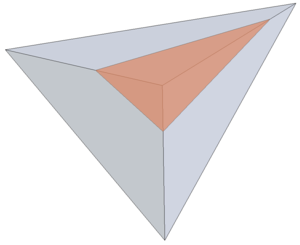

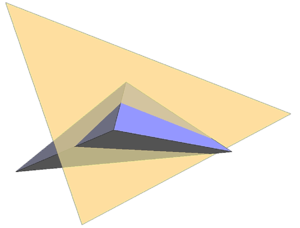

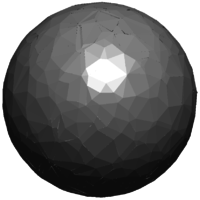

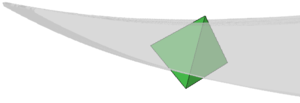

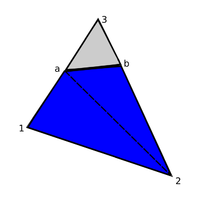

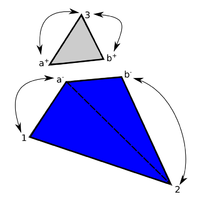

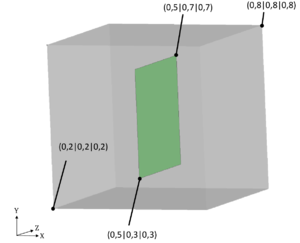

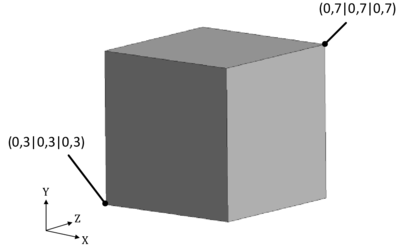

To explain the two principal formulation methods, let us first assume a rigid body motion of a structure within a discretized fluid domain (see figure 19).

|

| Figure 19: Rigid body motion of a structure (grey) in a fluid domain |

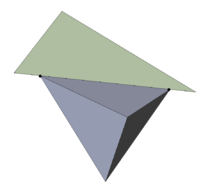

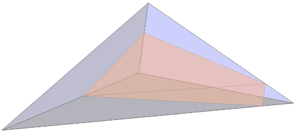

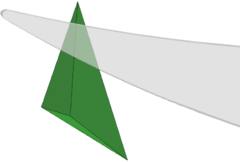

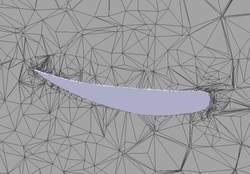

A classical way to handle the coupled motion is a body-fitted solution approach, in which the fluid nodes at the FSI interface are forced to follow the movement of the structure at the same interface (see figure 20).

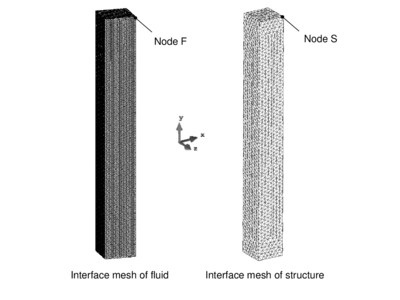

This is done in the so-called Arbitrary Lagrangian-Eulerian (ALE) method [5,19]. The ALE method has many advantages that make it the method of choice for many applications. For example, it allows an easy tracking of the FSI interface and therefore provides a high accuracy of the flow along the interface, which may result in a high overall accuracy of the solution. Even cases in which the grids of the fluid and the structure do not exactly match along the interface can be handled. To this end, mapping techniques are used, which in general allow quantities to be mapped between arbitrarily different grids. Throughout the present work, we use the Mortar Element Method described in [20] for this purpose.
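The Mortar Element Method enforces the transfer in an integral (weak) sense and is beyond the scope of a short example. To illustrate the basic task of mapping between non-matching interface grids, the sketch below instead uses a much simpler technique: point-wise (consistent) linear interpolation along a 1D interface parameterized by an arc-length coordinate. This technique is deliberately swapped in and is not the mortar mapping of [20]. The function name and the grids are hypothetical.

```python
from bisect import bisect_right


def map_linear(src_coords, src_values, tgt_coords):
    """Point-wise linear interpolation of nodal values from a source interface grid
    onto the nodes of a non-matching target grid (1D, sorted source coordinates).
    Target points outside the source range take the nearest end value."""
    mapped = []
    for s in tgt_coords:
        if s <= src_coords[0]:
            mapped.append(src_values[0])
        elif s >= src_coords[-1]:
            mapped.append(src_values[-1])
        else:
            k = bisect_right(src_coords, s) - 1  # source element containing s
            s0, s1 = src_coords[k], src_coords[k + 1]
            w = (s - s0) / (s1 - s0)
            mapped.append((1.0 - w) * src_values[k] + w * src_values[k + 1])
    return mapped


# hypothetical interface grids: coarser fluid side, finer structure side
fluid_s = [0.0, 0.3, 0.7, 1.0]
fluid_pressure = [1.0 + 2.0 * s for s in fluid_s]  # linear test field
structure_s = [0.0, 0.25, 0.5, 0.9, 1.0]
structure_pressure = map_linear(fluid_s, fluid_pressure, structure_s)
```

Such a consistent interpolation reproduces linear fields exactly. However, it does not in general preserve integral quantities such as the total interface force, which is one motivation for integral approaches like the mortar method.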

|

| Figure 20: Body-fitted solution approach |

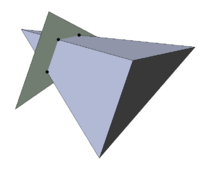

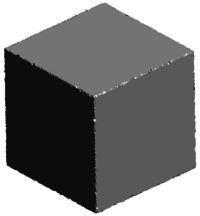

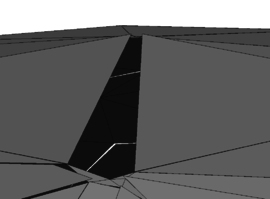

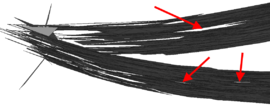

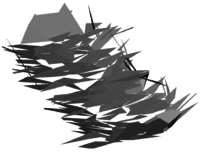

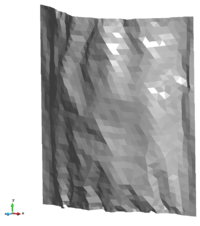

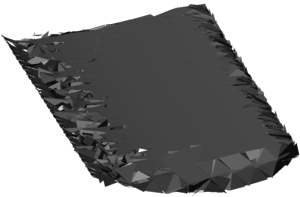

On the other hand, in an ALE approach large deformations and rotations may distort the mesh to such an extent that a costly remeshing becomes necessary. This typically happens when simulating the previously introduced inflatable hangar. There the problem becomes even more critical, since the structure starts to wrinkle or fold, as indicated in figure 21.

|

| Figure 21: Folded tube of an inflatable hangar structure - If the hangar is subjected to severe wind loads, the tubes may be massively deformed resulting in such a wrinkling and folding. |

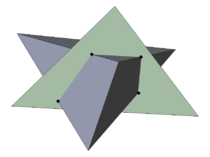

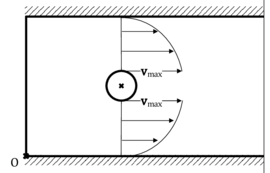

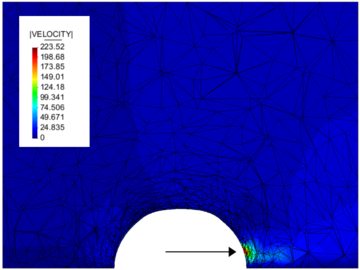

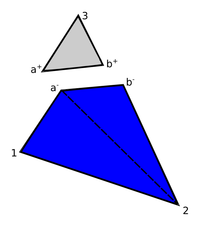

In order to treat large deformations or such complex movements, one can apply non-body-fitted or fixed-grid methods, in which the fluid mesh remains unchanged. The fluid domain is then described by an Eulerian formulation, and the structure moves independently of the locations of the fluid nodes. The concept is visually explained in figure 22.
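The key property of the fixed-grid approach is that the fluid mesh stays untouched while the structure moves across it. This can be illustrated with a signed distance function evaluated at the fluid nodes. The sketch below assumes a circular structure and a uniform square grid, both hypothetical choices. Nodes with a negative distance lie inside the structure, and cells whose nodal distances change sign are cut by the interface. The sketch only illustrates the idea and is not the level set technique developed in this work.

```python
import math


def signed_distance_circle(x, y, center, radius):
    """Negative inside the (assumed circular) structure, positive in the fluid."""
    return math.hypot(x - center[0], y - center[1]) - radius


def nodal_distances(n, size, center, radius):
    """Distance values phi[j][i] at the nodes of a fixed n x n grid on [0, size]^2."""
    h = size / (n - 1)
    return [[signed_distance_circle(i * h, j * h, center, radius) for i in range(n)]
            for j in range(n)]


def cut_cells(phi):
    """Indices (i, j) of grid cells intersected by the zero level set (the interface)."""
    n = len(phi)
    cells = []
    for j in range(n - 1):
        for i in range(n - 1):
            corners = (phi[j][i], phi[j][i + 1], phi[j + 1][i], phi[j + 1][i + 1])
            if min(corners) < 0.0 < max(corners):
                cells.append((i, j))
    return cells


# The grid stays fixed; only the structure position changes between two time steps.
phi_t0 = nodal_distances(21, 1.0, center=(0.5, 0.5), radius=0.2)
phi_t1 = nodal_distances(21, 1.0, center=(0.6, 0.5), radius=0.2)
cut_t0, cut_t1 = cut_cells(phi_t0), cut_cells(phi_t1)
print(len(cut_t0) > 0, set(cut_t0) != set(cut_t1))  # True True
```

Only the nodal distance values change when the structure moves, so no mesh update is required. The price is that the interface generally cuts through elements, which the embedded solver has to treat specially.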

|

| Figure 22: Embedded approach - The fluid and the structure mesh are completely decoupled from each other. |

A widely used method based on the fixed-grid approach is the embedded or immersed boundary method, which was first proposed by Peskin [21,22] to simulate the blood flow through a beating heart. Initially used with Finite Differences, it was later extended to Finite Elements as the Immersed Finite Element Method, e.g. by using discrete Dirac delta functions [23]. Another derived method is the Fictitious Domain Method, discussed e.g. by Glowinski et al. [24], which describes the interface between fluid and structure by means of a distributed Lagrange multiplier. An extension of the fixed-grid approach to compressible solids and fluids is provided by the Immersed Continuum Method (see also [25]). A general overview of methods derived from the Immersed Boundary Method is given e.g. in [26]. It is important to realize that with all immersed methods, the solution accuracy and stability depend on the given background mesh, which is typically seen as a major disadvantage.

Finally, the advantages of the ALE and the embedded method can be combined in the Chimera method, which divides the fluid into a moving domain around the FSI interface and a non-moving domain further away from the interface. An example in which the Chimera method was successfully applied to flexible structures is given in [27].

In the following the ALE method is discussed on a more theoretical basis. The theoretical background of the embedded method is given in chapter 2.2.4.

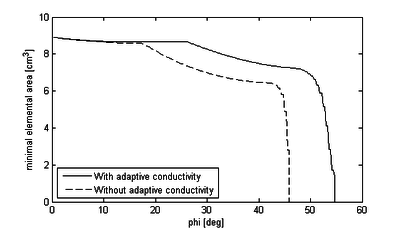

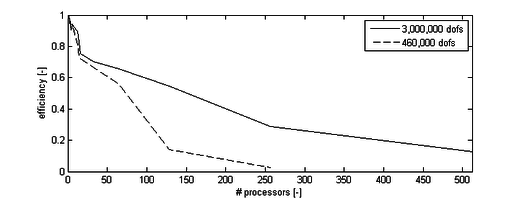

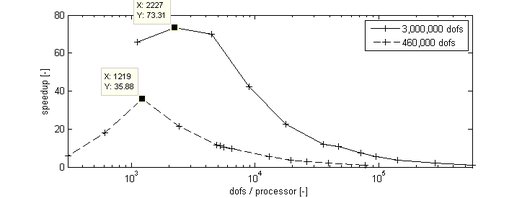

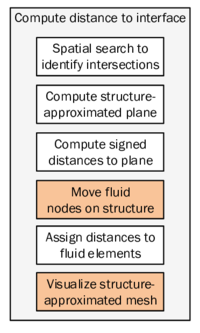

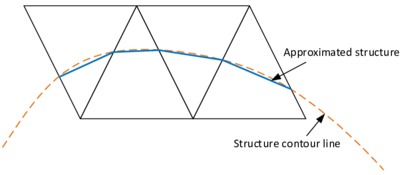

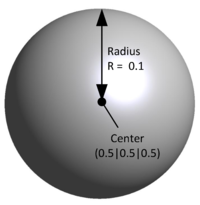

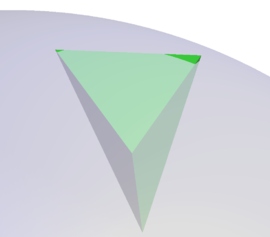

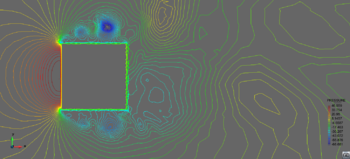

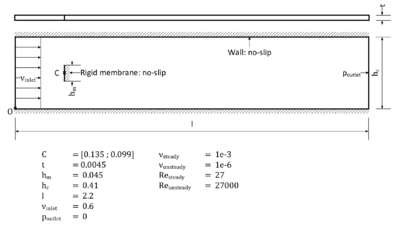

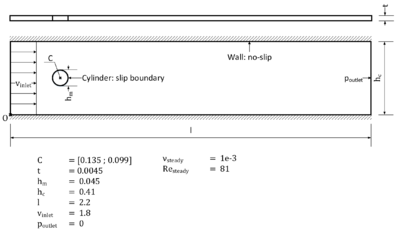

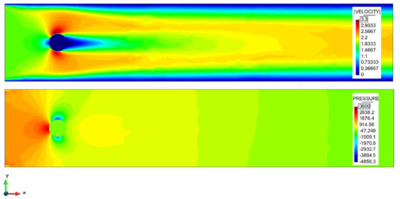

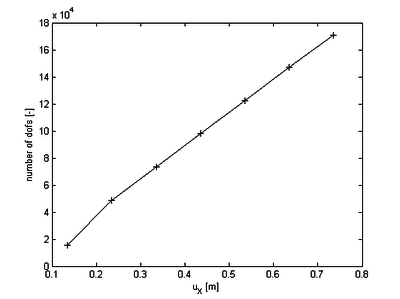

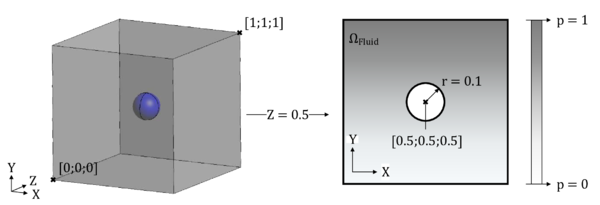

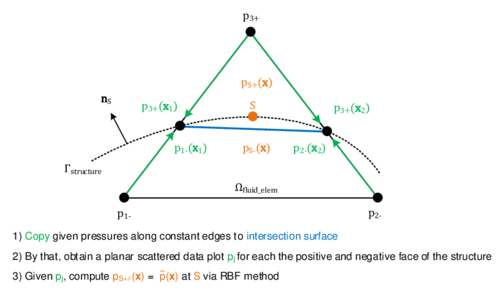

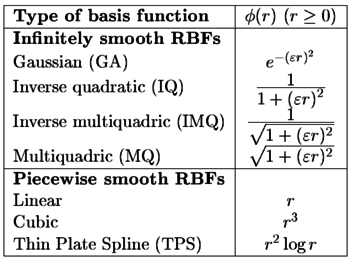

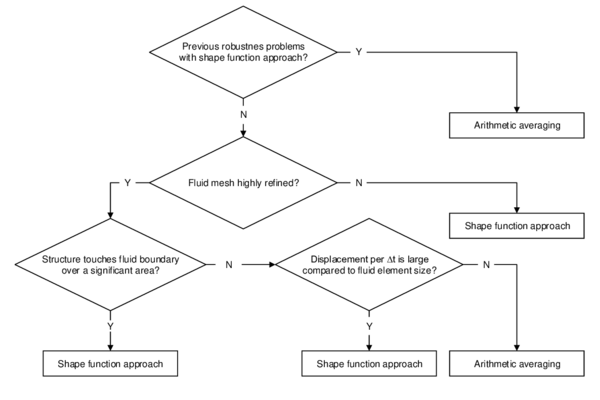

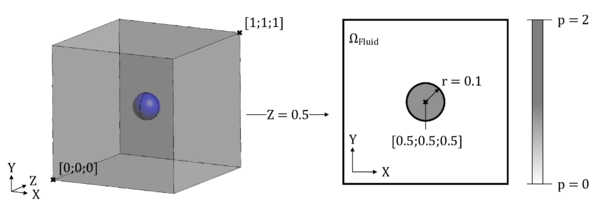

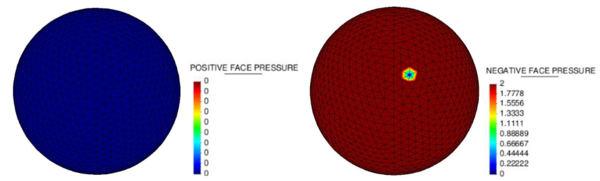

3.2.2 Arbitrary Lagrangian-Eulerian Method