En memoria del meu amic Pablo

Acknowledgements

In the very last lesson of Estructuras III of my undergraduate degree in Civil Engineering, Professor Oñate presented several animations of simulations carried out in CIMNE. As a student, to see that all those bunch of equations of finite elements could be applied in real engineering problems greatly amazed me. Surprisingly, when I expressed to Oñate my interest in the field, he offered me a position to start implementing the Discrete Element Method in the new plataform Kratos. I combined it with the development of my final degree monograph and I moved on undertaking the Master in Numerical Methods in Engineering. Afterwards, I got a scholarship from the programme Doctorats Industrials de la Generalitat de Catalunya which allowed me to develop my Ph.D. thesis in a partnership of the research centre CIMNE and the company CITECHSA.

This work encompasses the result of the 4 years in CIMNE working in the field of Discrete Element Methods under the guidance of Prof. Eugenio Oñate.

First of all, I must thank my advisor Prof. Oñate for his support all over these years. His advice has been not only on the topic of research but also on the development of my career. He gave me great freedom in the decision of the research line and helped me having a rich international experience through my research stay abroad as well as the participation in several conferences. I have learned a lot from Prof. Oñate in many aspects and I consider myself fortunate to have had the opportunity to work with him.

I would like to mention Miguel Ángel Celigueta who has helped me a lot, specially during my first steps in CIMNE by allowing me take part in several ongoing project meetings. From him I learned most of what I know about coding in an efficient and organized manner. Later, J. Maria Carbonell became my second supervisor of the monograph helping me in the developments regarding the coupling with the solid mechanics code as well as in the elaboration of this document. For that and for his support along my monograph I would like to express my gratitude to him.

I would also like to thank Prof. Wriggers for finding me a seat in the Institute of Continuum Mechanics in Hanover where I learnt about contact mechanics and developed part of the monograph under his advisory. My stay in Germany has been very fruitful for my monograph but also for learning German. That is specially Tobias Steiner to thank, my Bürokollege and now my good friend. Danke!

From the CITECHSA side I want to acknowledge Natalia Alonso and María Angeles Viciana and thank the rest of the team as well.

Thanks to the DEM Team members: Salva, Ferran and Guillermo for the work done together and the intense discussions on the DEM. Thanks to Joaquín Irázabal who has become my closer collaborator; with him I share an article, a lot of developments and of course good moments in congresses during these years. I don't want to miss mentioning Jordi and Pablo who are exemplar engineers to me and Charlie who solved memory errors in my code uncountable times. Also Ignasi, Roberto, Kike, Pooyan, Riccardo, Antonia, Miguel, Abel, Anna, Adria, Javier Mora, Sonia S., Feng Chun, María Jesús and the rest of the CIMNE family. A little part of them is somehow in this monograph. Thank you!

Last but not least to my friends from Navas and specially to my family. Gracies Montse, Marina, Mery, Josep and Celia. 谢谢 Xiaojing for your necessary support and company during the last steps. This work was carried out with financial support from the programme Doctorats Industrials de la Generalitat de Catalunya, Weatherford Ltd. and the BALAMED project (BIA2012-39172) of MINECO, Spain.

Abstract

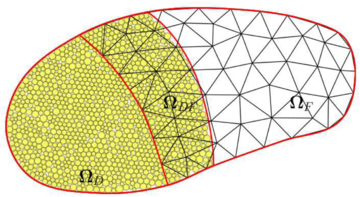

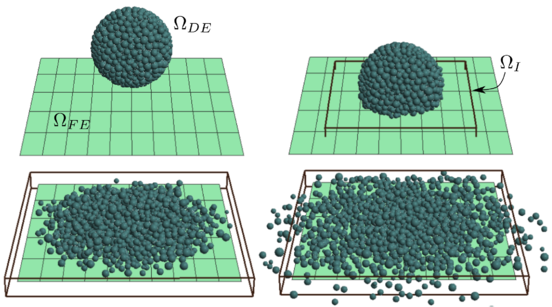

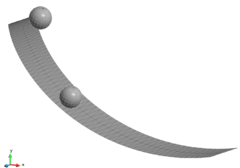

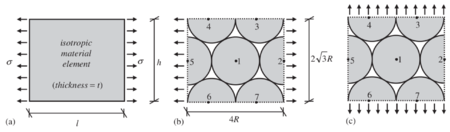

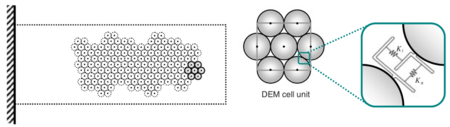

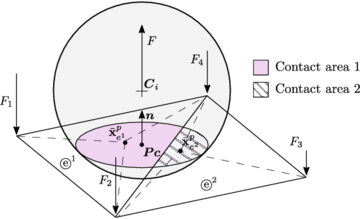

This works encompasses a broad review of the basic aspects of the Discrete Element Method for its application to general granular material handling problems with special emphasis on the topics of particle-structure interaction and the modelling of cohesive materials. On the one hand, a special contact detection algorithm has been developed for the case of spherical particles representing the granular media in contact with the finite elements that discretize the surface of rigid structures. The method, named Double Hierarchy Method, improves the existing state of the art in the field by solving the problems that non-smooth contact regions and multi contact situations present. This topic is later extended to the contact with deformable structures by means of a coupled DE-FE method. To do so, a special procedure is described aiming to consistently transfer the contact forces, which are first calculated on the particles, to the nodes of the FE representing the solids or structures. On the other hand, a model developed by Oñate et al. for the modelling of cohesive materials with the DEM is numerically analysed to draw some conclusions about its capabilities and limitations.

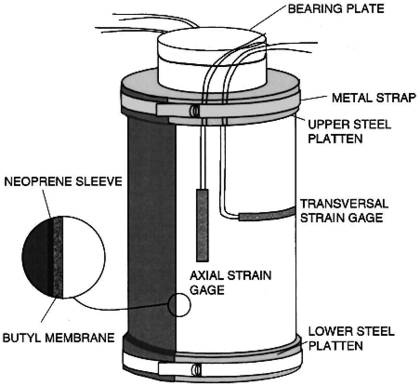

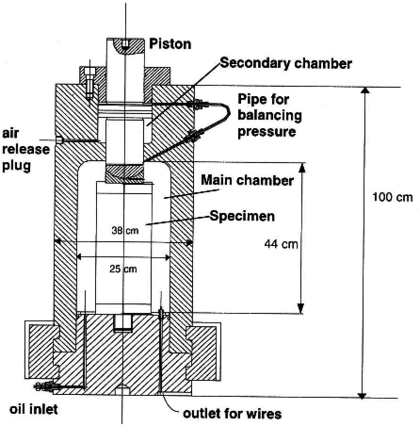

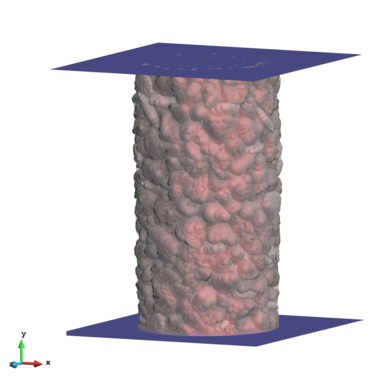

In parallel to the theoretical developments, one of the objectives of the monograph is to provide the industrial partner of the doctoral programme, CITECHSA, a computer software called DEMPack (www.cimne.com/dem/) that can apply the coupled DE-FE procedure to real engineering projects. One of the remarkable applications of the developments in the framework of the monograph has been a project with the company Weatherford Ltd. involving the simulation of concrete-like material testing.

The monograph is framed within the first graduation (2012-13) of the Industrial Doctorate programme of the Generalitat de Catalunya. The monograph proposal comes out from the agreement between the company CITECHSA and the research centre CIMNE from the Polytechnical University of Catalonia (UPC).

Resum

Aquest treball compen una amplia revisió dels aspectes basics del Metode dels Elements Discrets (DEM) per a la seva aplicació generica en problemes que involucren la manipulació i transport de material granular posant emfasi en els temes de la interacció partícula-estructura i la simulació de materials cohesius. Per una banda, s'ha desenvolupat un algoritme especialitzat en la detecció de contactes entre partícules esferiques que representen el medi granular i els elements finits que conformen una malla de superfície en el modelatge d'estructures rígides. El metode, anomenat Double Hierarchy Method, suposa una millora en l'estat de l'art existent en solucionar els problemes que deriven del contacte en regions de transició no suau i en casos amb múltiples contactes. Aquest tema és posteriorment estes al contacte amb estructures deformables per mitja de l'acoblament entre el DEM i el Metode dels Elements Finits (FEM) el qual governa la solució de mecanica de solids en l'estructura. Per a fer-ho, es descriu un procediment pel qual les forces de contacte, que es calculen en les partícules, es transfereixen de forma consistent als nodes que formen part de l'estructura o solid en qüestió. Per altra banda, un model desenvolupat per Oñate et al. per a modelar materials cohesius mitjancant el DEM és analitzat numericament per tal d'extreure conclusions sobre les seves capacitats i limitacions.

En parallel als desenvolupaments teorics, un dels objectius de la monografia és proveir al partner industrial del programa doctoral, CITECHSA, d'un software anomenat DEMpack (http://www.cimne.com/dem/) que permeti aplicar l'acoblament DEM-FEM en projectes d'enginyeria reals. Una de les aplicacions remarcables dels desenvolupaments en el marc de la monografia ha estat un projecte per l'empresa Weatherford Ltd. que involucra la simulació de tests en provetes de materials cimentosos tipus formigó.

Aquesta monografia doctoral s'emmarca en la primera promoció (2012-13) del programa de Doctorats Industrials de la Generalitat de Catalunya. La proposta de la monografia prové de l'acord entre l'empresa CITECHSA i el centre de recerca CIMNE de la Universitat Politecnica de Catalunya (UPC).

1. Introduction

Truesdell and Noll in the introduction of The Non-Linear Field Theories of Mechanics [1] state:

Whether the continuum approach is justified, in any particular case, is a matter, not for the philosophy or methodology of science, but for the experimental test…

The ones that agree on that statement may also agree that the same applies for the discontinuum approach in which the Discrete Element Method is framed on.

Before the introduction of the Discrete Element Method in the 70's, lot of effort has been placed in developing constitutive models for the macroscopic description of particle flows. However, the continuum based methods fail to predict the special rheology of granular materials which can rapidly change from a solid-like behaviour in zones where the deformation is small and rather homogeneous to a fluid-like behaviour with huge variation and distortion that can be concentrated in narrow areas such as shear bands. Within the DEM this behaviour, which is driven by the collisional and frictional mechanisms of the material, can be simulated at the grain level where each discrete element corresponds to a physical particle. The quality of the results depends then on the accuracy in the representation of the shape of the particles and their interaction.

The DEM is nothing else than Molecular Dynamics with rotational degrees of freedom and contact mechanisms. In its first conception, the method was designed for simulations of dynamic systems of particles where each element is considered to be an independent and non deformable entity which interacts with other particles by the laws of the contact mechanics and moves following the Newton-Euler equations.

The simplicity of the method is in contrast however, with the high computation cost which, in general, has associated to it due to the large number of particles needed in a real simulation and the time scales that have to be resolved. Imagine a hooper discharge problem which may require the computation of millions of particles simulated during tens of minutes when, at the same time, the phenomena that rules the problem lies in reproducing the behaviour of individual particles the interaction of which happens in distances several orders of magnitude smaller than their particle diameter. This implies that the necessary time steps to be used in the simulation have to be smaller than the characteristic contact duration.

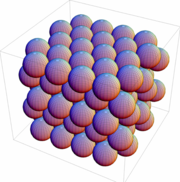

In this sense, the implementation of the method using massive parallelization is something of crucial importance. Also the use of simple geometries such as spheres presents a great difference to other more complex geometries such as polyhedra, NURBS, etc. in the detection and characterization of the contacts. That is why still today the use of basic spheres is intensively used.

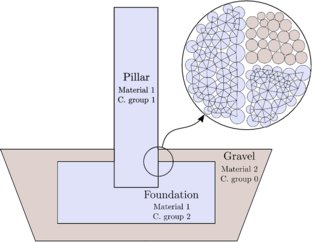

In many real applications involving granular materials, the interaction with structures and fluids are present. The employment of the FEM to simulate the structures involved in those industrial applications can provide better understanding of the problem and, therefore, could play an important role in the process of design optimization. To that end an efficient coupling of the method with a FEM-based solver for solids is of special interest.

Another field of interest of the application of the DEM is the simulation of material fracturing. The DEM as a discontinuum-based method has attractive features in contrast to continuum-based methods in problems where large deformations and fracture are involved. Many attemps have been done aiming to unify both the modelling of the mechanical behaviour of solid and particulate materials, including the transition from solid phase to particulate phase. Nowadays however, the DEM still presents many drawbacks and lack of reliability in the modelling of solids. Differently from other particle-based methods such as MPM, PFEM or SPH, the DEM shall not be regarded as a discretization method for the solution of PDE.

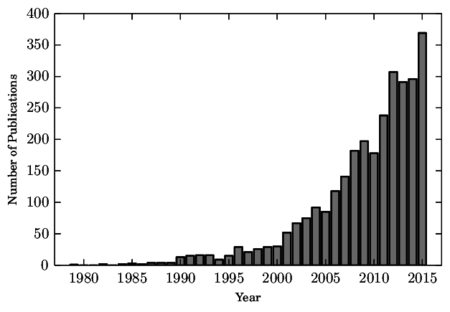

The interest in the Discrete Element Method has exponentially increased since the publication in 1979 of the first article by Cundall and Strack [2] and is still a hot topic nowadays. This can be seen in fig. 1 where the number of publications related to discrete element procedures from 1979 to 2016 are displayed. They were obtained from Google Scholar with the following keywords in the title of the article: 'Discrete Element Method/Model', or 'Distinct Element Method/Model', or 'Using a DEM' or 'A DEM' or 'With the DEM' or 'DEM Simulation'. This does not include all the publications related to DEM and may introduce other non related articles, however it gives a good image of the tendency of research in the field.

There is a great interest in the application of this method to a wide range of industrial problems.

1.1 DE-FE couplings

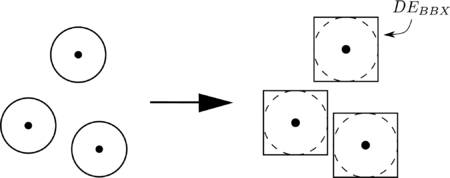

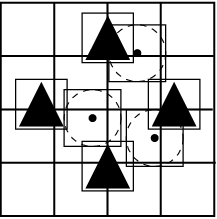

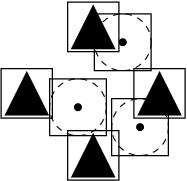

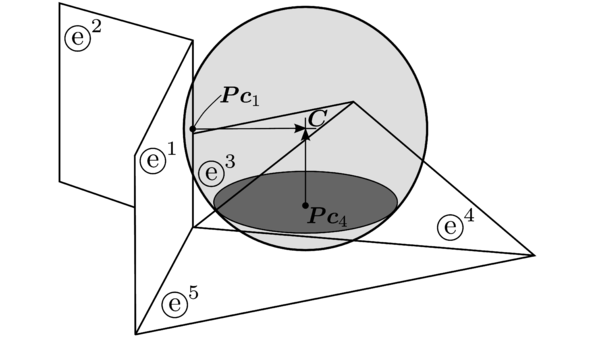

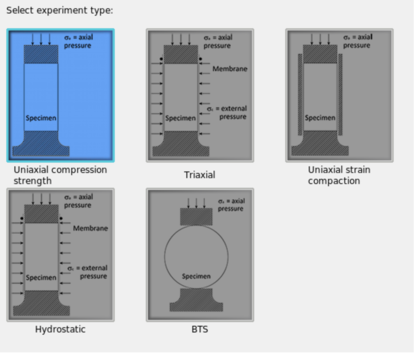

The term coupled DE-FE or combined DE-FE Method for soil and solid mechanics applications appears in the literature with different meanings and can be quite confusing. The most common ones are grouped here in 5 categories along with an example figure (Fig. 2). Other categories for DE-FE couplings are for instance coupling with fluids, thermal problems, etc.

- Particle-structure interaction: The two domains are calculated separately and their communication is through contact models. This is the category in which the monograph is mainly focused on. It is developed in Section 4.

- Two-scale models: These methods solve the problem at two different scales. The micro-macro transition is accomplished employing an overlapping zone to provide a smooth transition between a DE model (micro) and a FE material description (macro). The coupling is achieved by the imposition of kinematic constrains between the two domains. The original idea was presented by Xiao and Belytschko [3] for Molecular Dynamics, Wellmann [4] applied it to granular material while Rojek and Oñate [5] developed it for cohesive materials.

- Projection techniques: Coarse-graining, averaging and other projection techniques are used to derive continuum fields out of discrete quantities. To do so, often a reference mesh is required either for the calculation or simply for the representation of the continuum results [6].

- Embedded DE on FE: This technique consists on embedding (typically spherical) particles in the boundaries of FE models of solids and structures in order to detect and enforce the contact [7]. Recently, this technique has been applied to multi-fracturing in cohesive materials [8]. A FE-based method with failure or crack propagation models is combined with embedded particles that assist the detection and characterization of the contact forces.

- FE discretization of discrete entities: This category involves methods that use a FE discretization to calculate the deformation of the particles and solve their interaction using a DEM-like technique [9]. A particular case is the so called DEM-Block method [10] which consists on a FE-based Method which elements are connected through breakable spring-like bounds imitating the cohesive DE models.

|

|

| (a) Particle-Structure | (b) Two-scale. Taken from: Labra 101 |

|

|

| (c) Projection onto a FE mesh | (d) Embedded particles. Taken from: Zárate and Oñate 8 |

| |

| (e) Discretized DE. Taken from: Gethin et al. 9 | |

| Figure 2: Examples of different techniques that combine FE and DE methods | |

1.2 Objectives

This monograph has been developed in the framework of the first graduation of Doctorats Industrials de la Generalitat de Catalunya (Industrial Doctorates of Catalonia). The objectives defined for this work comprise an agreement between the research line determined by the research centre CIMNE in the Polytechnical University of Catalonia (UPC) and the business objectives of the society CITECHSA which is interested in the exploitation of a DEM-based software in its application to industrial engineering problems. In this regard, the objectives involve research, development of a code and educational and dissemination actions.

On the one hand, the research has to be focused in a deep revision of the state of the art of the Discrete Element Method in order to analyse and select the existing techniques that have to be adapted and implemented for the solution of the problems of interest which are basically three:

- General application of DEM to granular material handling problems

- Particle-structure interaction

- DE models for the simulation of cohesive materials

The research has to be conducted from a general point of view determining the advantages and drawbacks of the existing methods and proposing new developments that can improve the state of the art. The theoretical contributions will be communicated by dissemination actions.

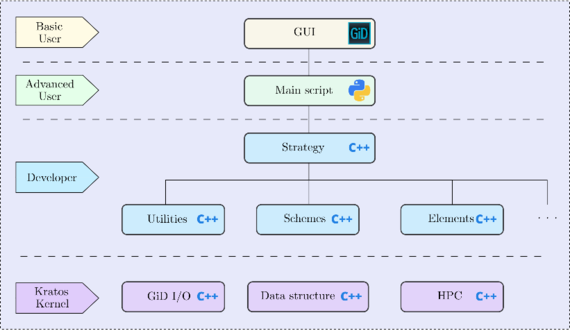

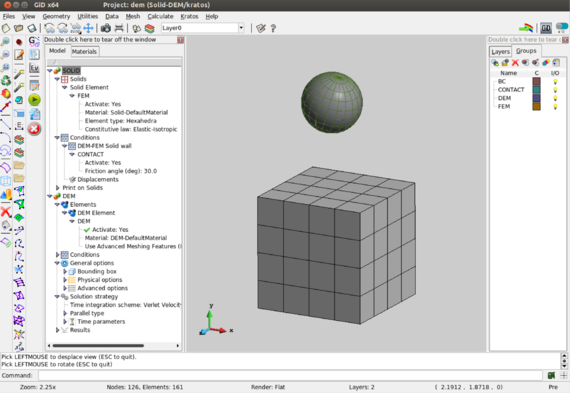

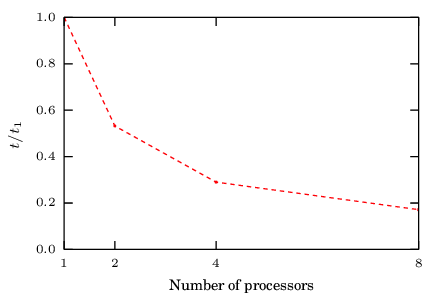

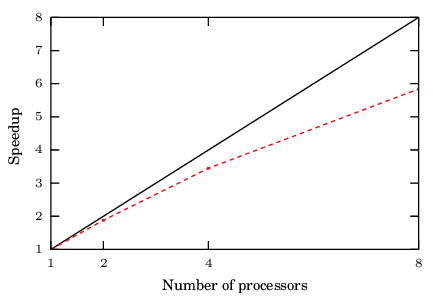

The theoretical research in the above-mentioned topics have to accompanied by its implementation into the open-source code DEMpack (www.cimne.com/dem). The code will be developed with concerns on efficiency and parallelism as it is devised to be employed in real application projects. To that end, several GUIs for specific applications will be developed. This will be done forming part of a larger group of researchers that contribute to the development of the code.

Finally, the developments will be applied in ongoing projects of the research centre.

1.3 Organization of this work

The document is structured as follows:

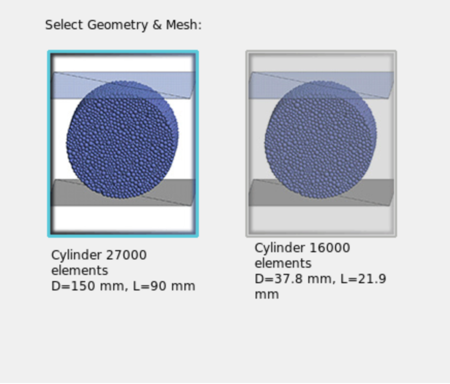

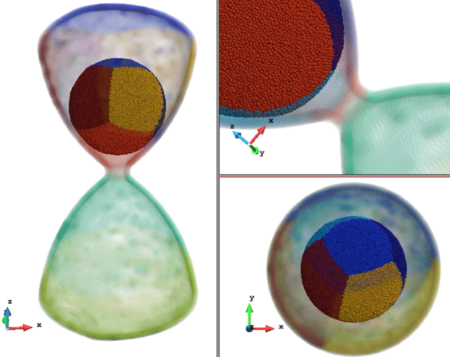

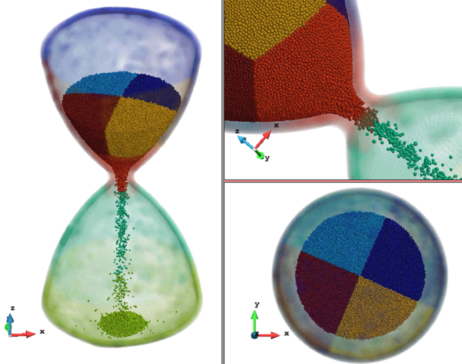

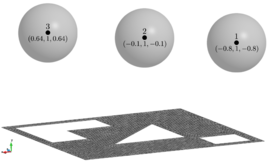

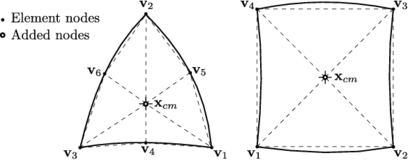

After the introduction and the objectives, chapter two reviews the basic aspects of the Discrete Element Method that will set the basis upon which the developments in the monograph are established. It includes a revision of the most common contact models and integration methods. An assessment on performance, accuracy and stability is given to help choosing the most appropriate integration scheme. The treatment of clusters of spheres for the representation of non-spherical particles and the contact detection are also discussed in detail.

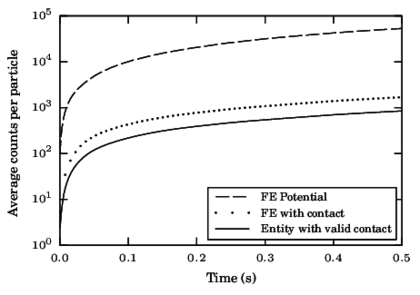

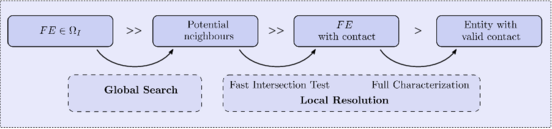

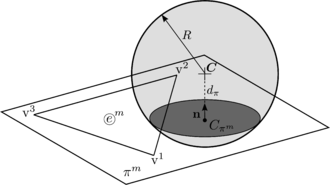

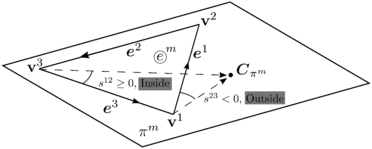

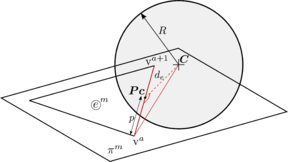

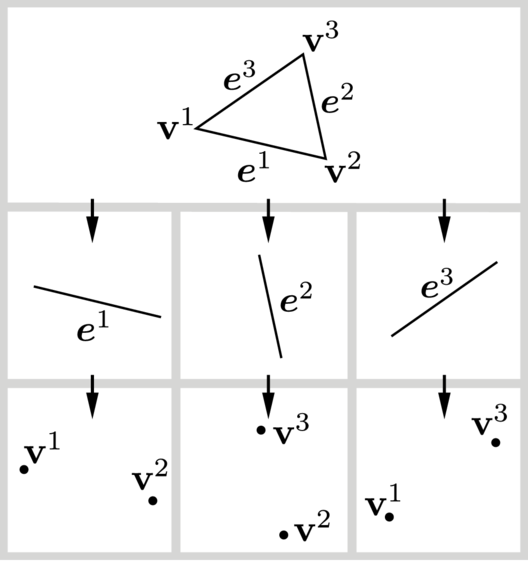

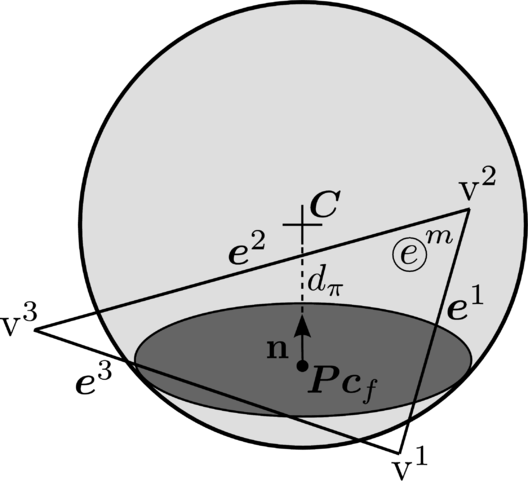

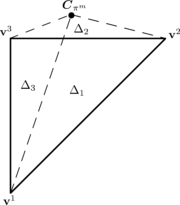

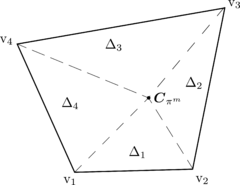

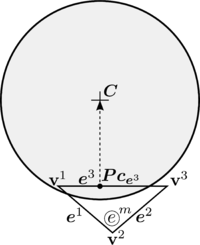

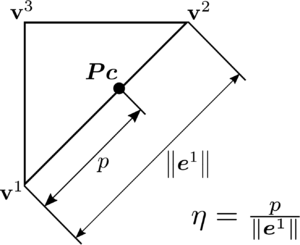

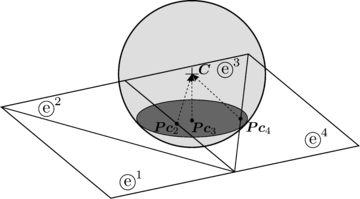

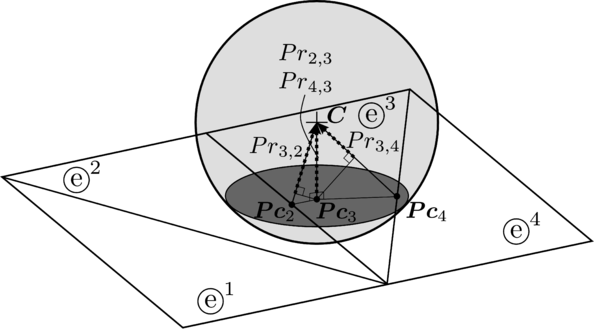

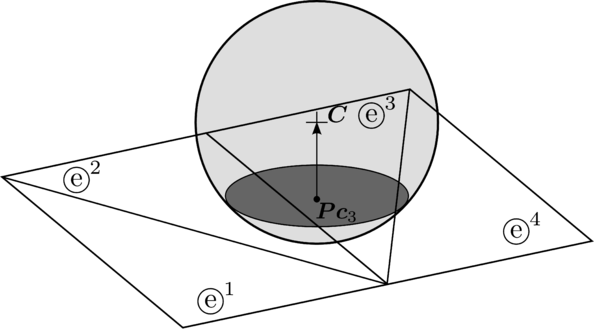

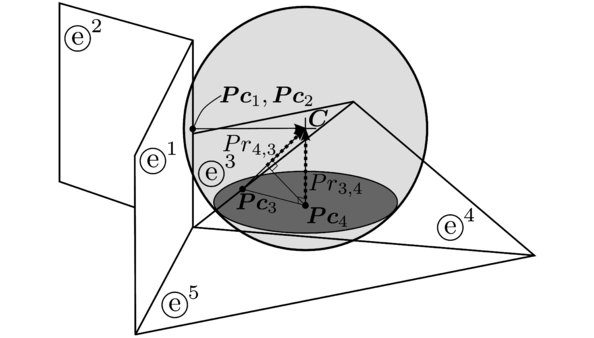

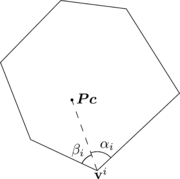

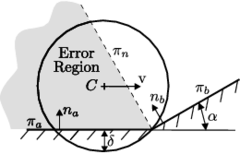

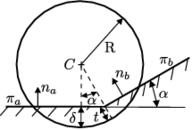

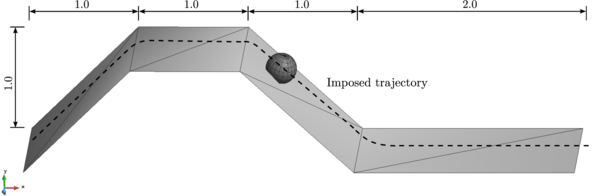

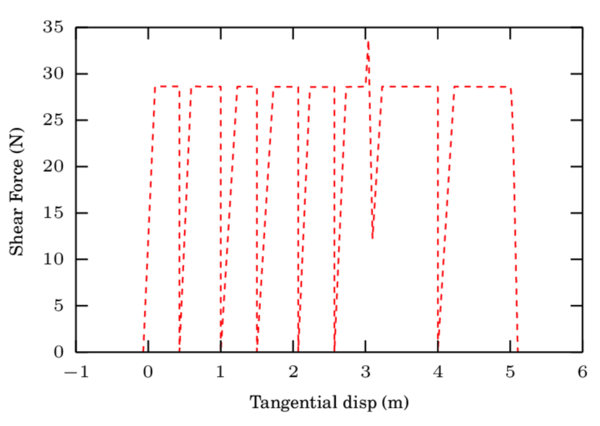

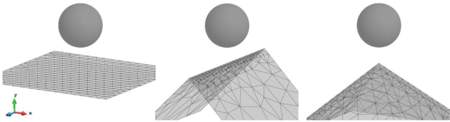

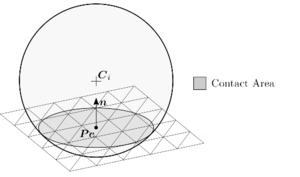

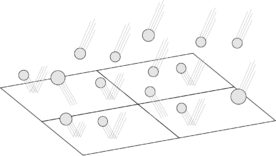

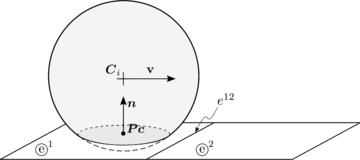

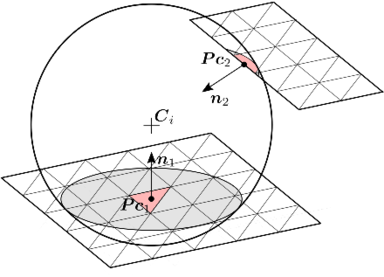

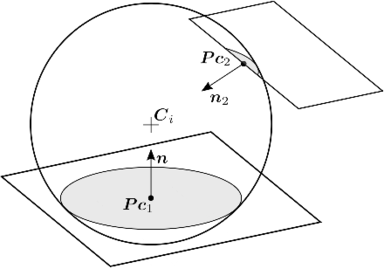

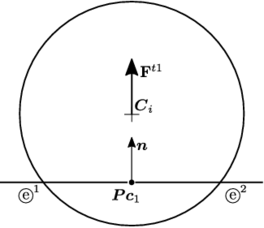

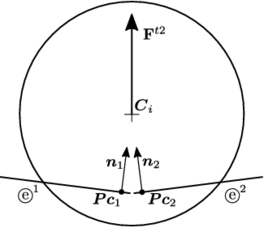

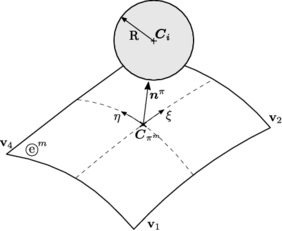

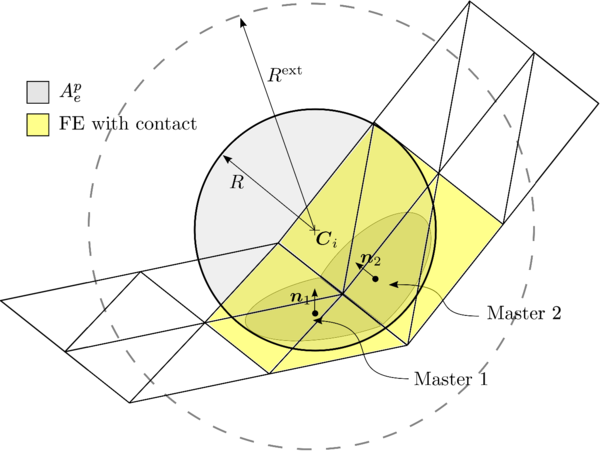

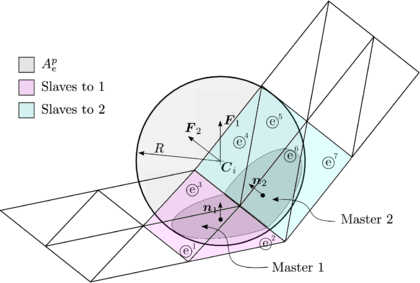

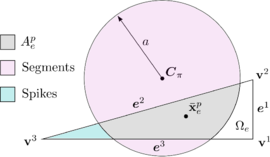

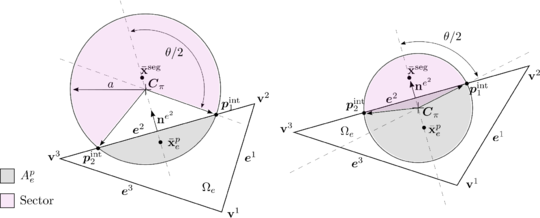

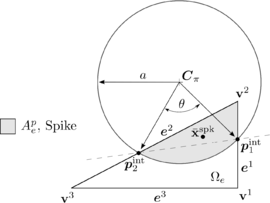

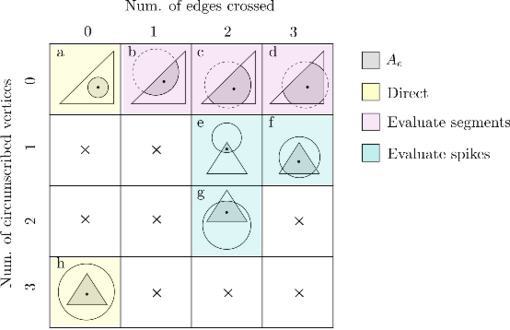

Chapter three is dedicated to the contact detection between spherical Discrete Elements and triangular or planar quadrilateral Finite Elements. The chapter starts with a complete review of the state of the art and follows with a thorough description of the strategy adopted for the global and local detection of contacts. The idea of using an intermediate fast intersection test is introduced and later proved to be efficient within an application example. Regarding the local resolution, the novel Double Hierarchy Method for contact with rigid boundaries is presented. The description of the methods is equipped with algorithm details, validation examples and limitations analysis.

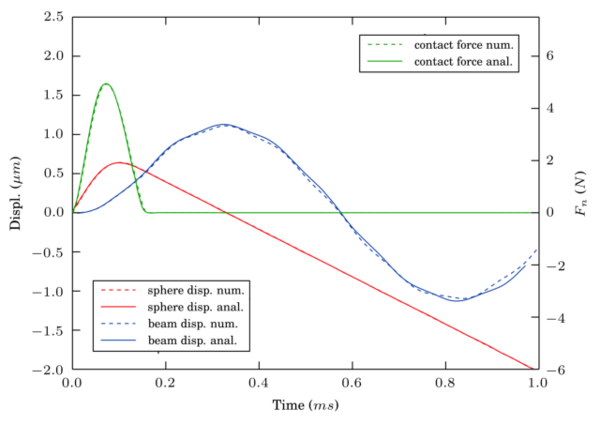

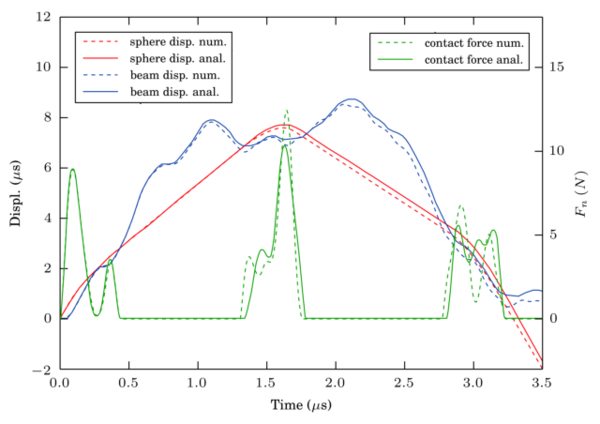

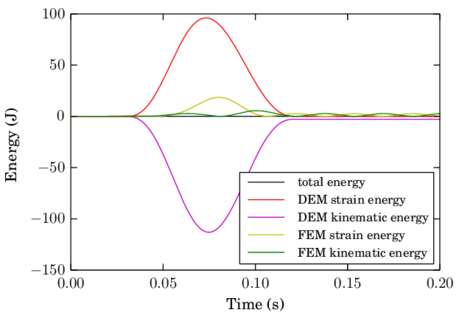

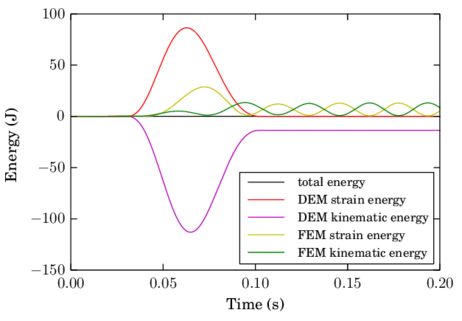

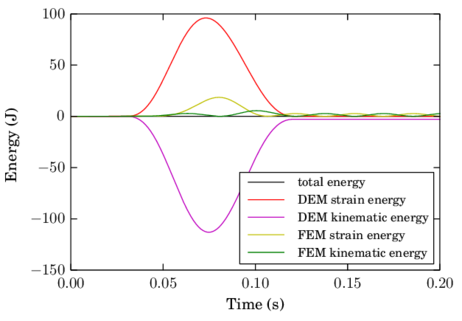

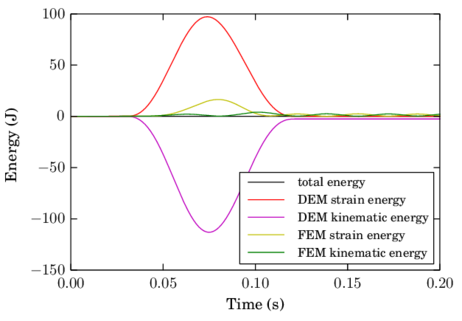

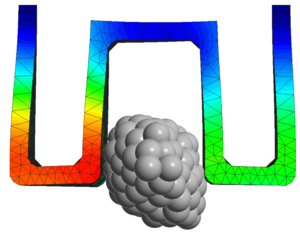

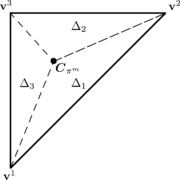

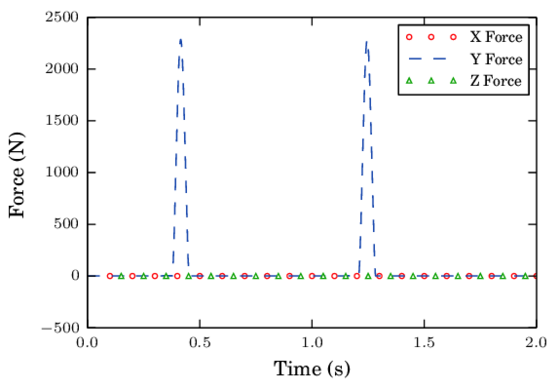

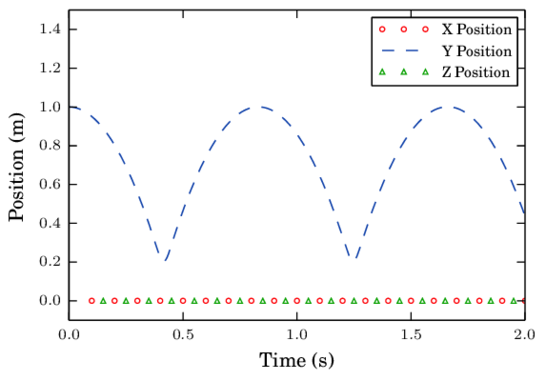

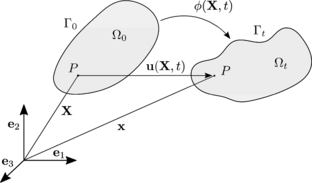

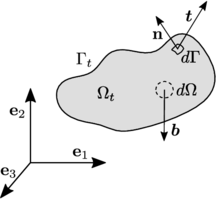

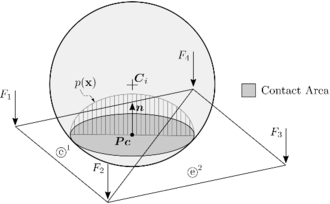

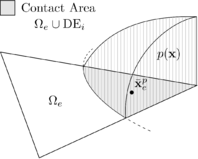

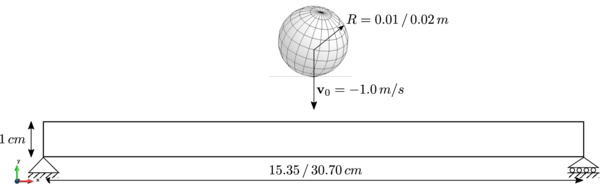

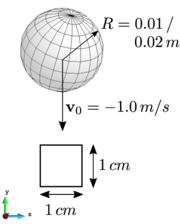

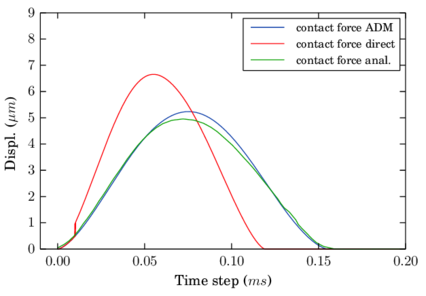

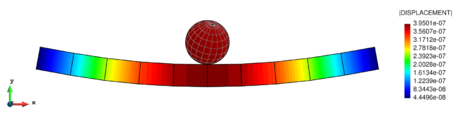

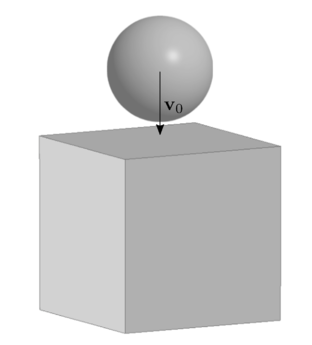

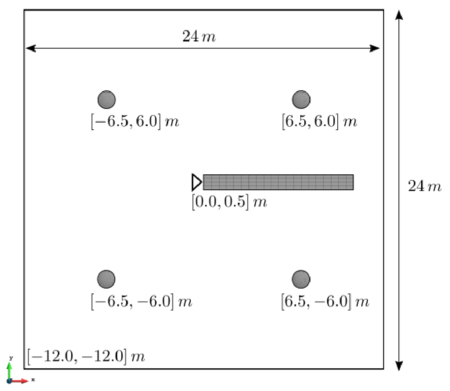

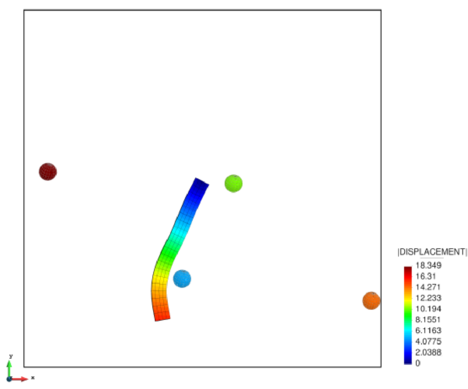

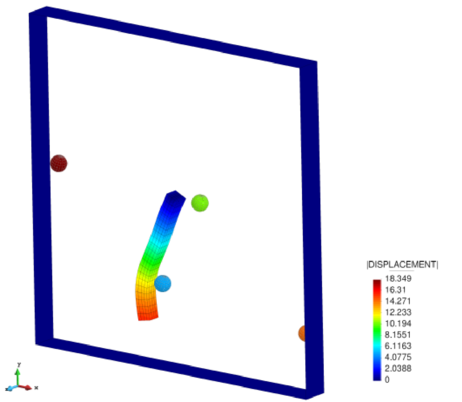

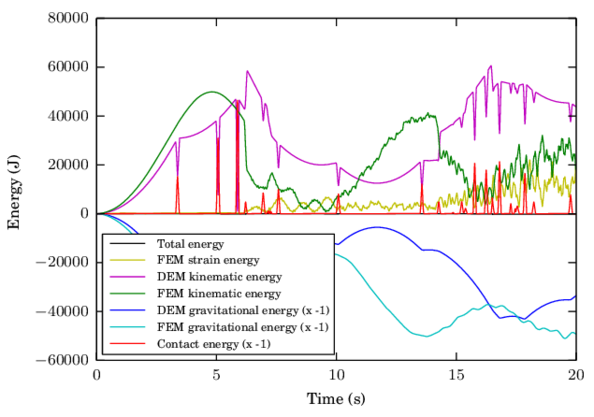

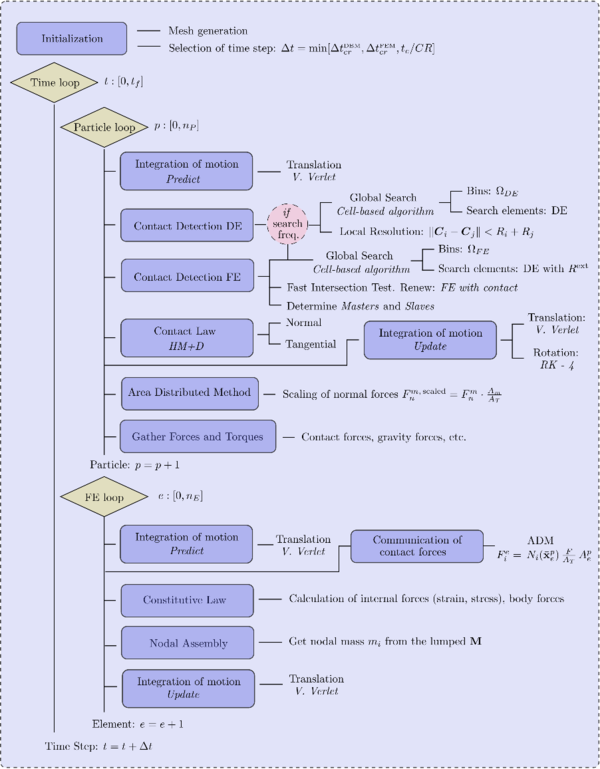

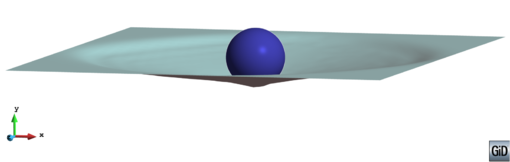

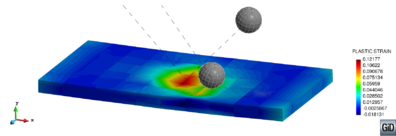

The fourth chapter introduces the DE-FE coupling for the particle-structure interaction problem. After an introduction to the solid mechanics formulation employed, the coupled scheme is presented. The key point lies in the communication of the contact forces, which are calculated by the DE particles, to the nodes of the FEs. The described procedure proposes the distribution of the forces to all the FEs involved based on their area of intersection with the particles. Several examples show that this strategy improves the commonly used direct interpolation approach for the case of contacts with deformable solids or structures. The good functioning of the coupling is assessed by some tests with special attention placed on energy conservation.

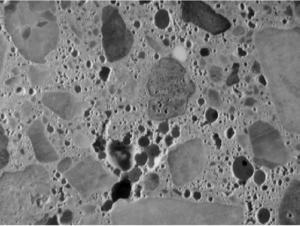

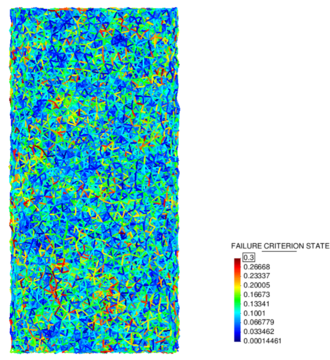

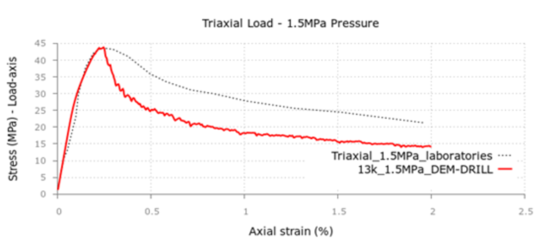

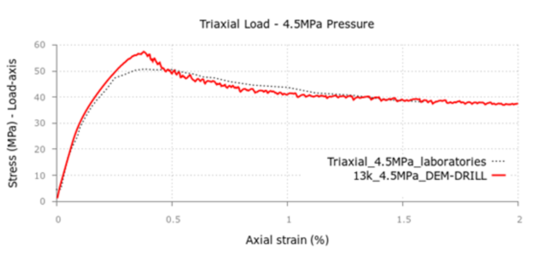

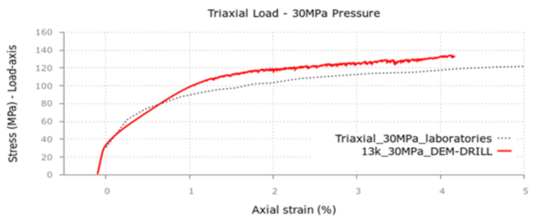

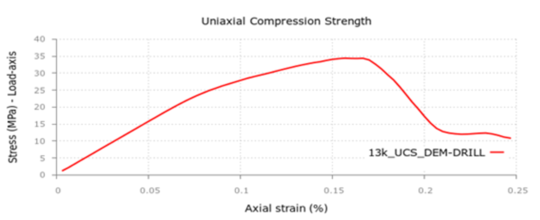

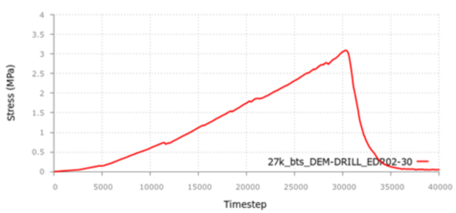

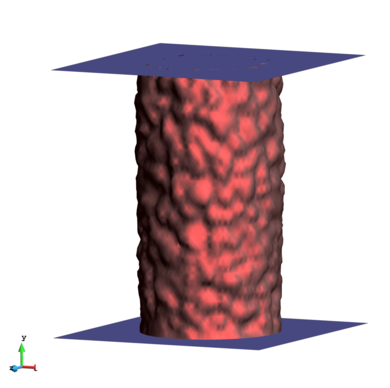

The topic of DE modelling of cohesive materials such as concrete or rock is presented in chapter five. It begins with an overview of the state of the art of the methods available for this purpose together with a study of their limitations and capabilities. After, the model developed by Oñate, Santasusana et al. is described along with application examples where the numerical simulations and the laboratory tests are compared.

Chapter six is dedicated to the implementation of the code in the platform Kratos constituting the DEMpack software together with remarks on the efficiency and parallelitzation of the code.

Finally, the last chapter comprises the conclusions and the outlook of the work.

1.4 Related publications and dissemination

1.4.1 Papers in scientific journals

- E. Oñate, F. Zárate, J. Miquel, M. Santasusana et al. Computational Particle Mechanics - Springer: Local constitutive model for the Discrete Element Method. Application to geomaterials and concrete.

- M. Santasusana, J. Irazábal, E. Oñate, J.M. Carbonell. Computational Particle Mechanics - Springer: The Double Hierarchy Method. A parallel 3D contact method for the interaction of spherical particles with rigid FE boundaries using the DEM.

1.4.2 Communications in congresses

- M. Santasusana, E. Oñate, M.A. Celigueta, F. Arrufat, K. Valiullin, R. Gandikota. 11th. World Congress on Computational Mechanics (WCCM XI): A parallelized discrete element method for analysis of drill-bit mechanics problems in hard and soft soils.

- C.A. Roig, P. Dadvand, M. Santasusana, E. Oñate. 11th. World Congress on Computational Mechanics (WCCM XI): Minimal surface partitioning for particle-based models.

- M. Santasusana, E. Oñate, J.M. Carbonell, J. Irazábal, P. Wriggers. 4th. International Conference on Computational Contact Mechanics (ICCCM 2015): Combined DE/FE method for the simulation of particle-solid contact using a Cluster-DEM approach.

- E. Oñate, F. Arrufat, M. Santasusana, J. Miquel, M.A. Celigueta. 4th. International Conference on Particle-Based Methods (Particles 2015): A local constitutive model for multifracture analysis of concrete and geomaterials with DEM.

- M. Santasusana, E. Oñate, J.M. Carbonell, J. Irazábal, P. Wriggers. 4th. International Conference on Particle-Based Methods (Particles 2015): A Coupled FEM-DEM procedure for nonlinear analysis of structural interaction with particles.

- M. Santasusana, J. Irazábal, E. Oñate, J.M. Carbonell. 7th. European Congress on Computational Methods in Applied Sciences and Engineering (ECCOMAS Congress 2016): Contact Methods for the Interaction of Particles with Rigid and Deformable Structures using a coupled DEM-FEM procedure.

Participation in research projects

- Implementation of the DEM Technology, DEM material models and validation of the DEM Technology. Weatherford International LTD. July 2013 to March 2014.

- Nuevo proceso de voladura mediante técnicas predictives y adaptatives, eficaz y eficiente en la utilización de los recursos y materias primes, minimizando las emisiones. MINECO. January 2014 to June 2016.

2. The Discrete Element Method

The Discrete Element Method (DEM) was firstly introduced by Cundall in 1971 [11] for the analysis of the fracture mechanics problems. Afterwards, in 1979, Cundall and Strack [2] applied it to granular dynamics. The DEM in its original conception simulates the mechanical behaviour of a system formed by a set of particles arbitrarily disposed. The method considers the particles to be discrete elements forming part of a higher more complex system. Each discrete element has an independent movement; the overall behaviour of the system is determined by the application of contact laws in the interaction between the particles.

There exist two main approaches, namely the soft and the hard particle approach. The soft particle approach is a time-driven method where particles are allowed to inter-penetrate simulating small deformations due to contact. The elastic, plastic and frictional forces are calculated out of these deformations. The method allows accounting for multiple simultaneous particle contacts. Once the forces are calculated, the motion of the particles is earned from the application of the classical Newton's law of motion which is usually integrated by means of an explicit scheme. The hard-particle approach, on the other hand, is an event-driven method which treats the contacts as instantaneous and binary (no-multi contact). It uses momentum conservation laws and restitution coefficients (inelastic or frictional contacts) to determine the states of particles after a collision. These assumptions are only valid when the interaction time between particles is small compared to the time of free motion. A good review and comparison of the methods can be found in [12]. This thesis is developed using the soft-particle approach.

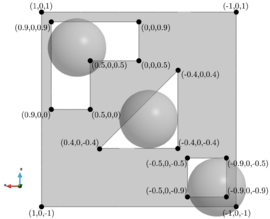

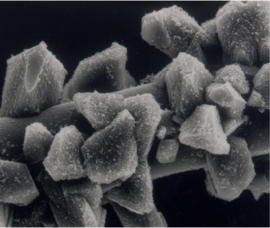

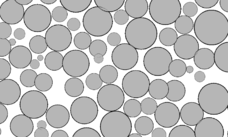

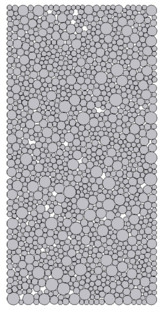

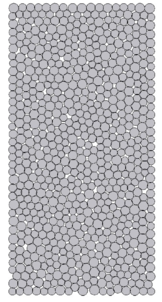

The DEM, as a particle method, has been used in a very wide range of applications. An important decision to take is to select which is the relation between a discrete element in the simulation and the physical particles or media in the reality. On the one hand, the one-to-one approach tries to assign a discrete element to every particle in the domain. The method describes all the contact and other interaction forces between particles with a model that only depends on the local relations and does not require fitting. On the other hand, a very common approach is to simulate granular matter or other media using discrete elements that represent a higher amount of material than just one particle. This technique, known as coarse-graining or up-scaling [13], represents a completely phenomenological approach which does require the fitting of parameters out of bulk experiments. Both techniques are used to simulate particulate matter that ranges from powder particles () to the simulation of rock blocks ().

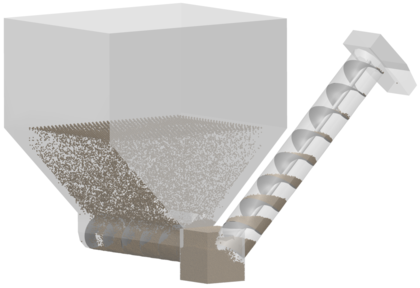

Common applications of the Discrete Element Method are the simulation of granular mater in soil mechanics. A soil can deform as a solid or flow as a fluid depending on its properties and the situation. The use of DEM comes naturally as it can handle both behaviours of the soil and also account for discontinuous and very large deformations [14,15,4]. The DEM adapts also perfectly to the simulation of granular material handling in industrial processes. Some examples of applications are silo flows [16,12], screw-conveyors [17,18], vibrated beds [19,20], ball mill processes [21,22], etc.

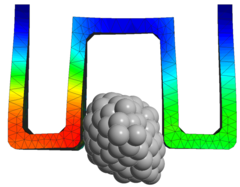

Another application which is of special attention in this thesis is the particle-structure interaction problem. This category encompasses, among others, particle-tyre simulations [14,23], shot peening processes [24,25], impacts with flexible barriers [26], soil-structure interaction [4,27], etc. Some examples of applications are presented in section 6.3.

In particle-fluid flow modelling, the difficulty relies on the particulate phase rather than fluid phase. Therefore, a coupled CFD-DEM approach [28] is attractive because of its capability to capture the particle physics. This comprehends a large family of applications which includes gas fluidization, pneumatic conveying flows, particle coating, blast furnace, etc. [16]. Applications in civil and marine engineering are rock avalanches into water reservoirs [29], sediment and bed-load transportation in rivers and sea [30,31,32], etc. A comprehensive literature review on the applications of DEM to the simulation of particulate systems processes can be found in the work published by Zhu et al. [16].

In recent years the DEM has also been object of intense research to the study of multi-fracture and failure of solids involving geomaterials (soils, rocks, concrete), masonry and ceramic materials, among others. Some key developments can be found in [33,34,35,36]. In the cohesive models the contact law can be seen as the formulation of the material model on the microscopic level. Cohesive bonds can be broken, which allows to simulate the fractures in the material and its propagation. The analysis of solid materials within the DEM poses however, a number of difficulties for adequately reproducing the correct constitutive behaviour of the material under linear (elastic) and non-linear conditions (section 5).

2.1 Basic steps for DEM

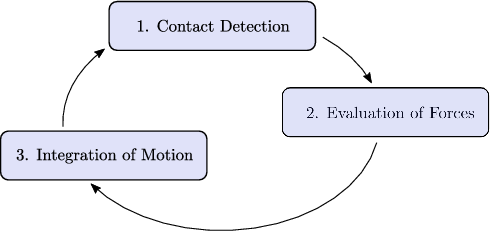

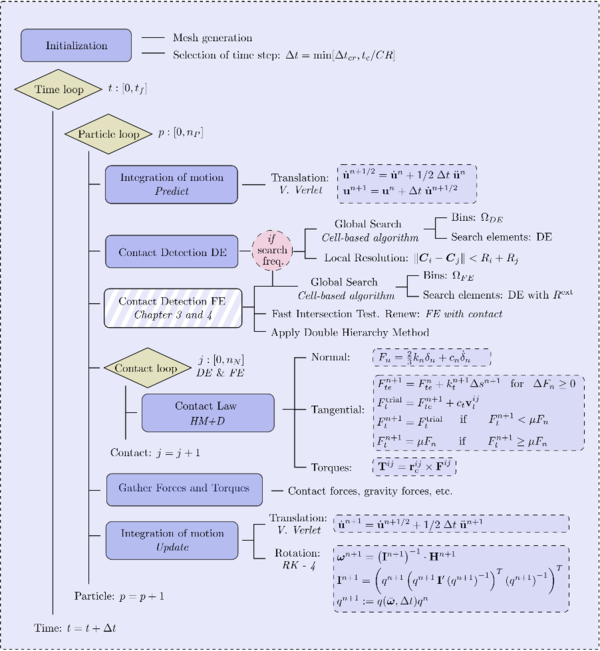

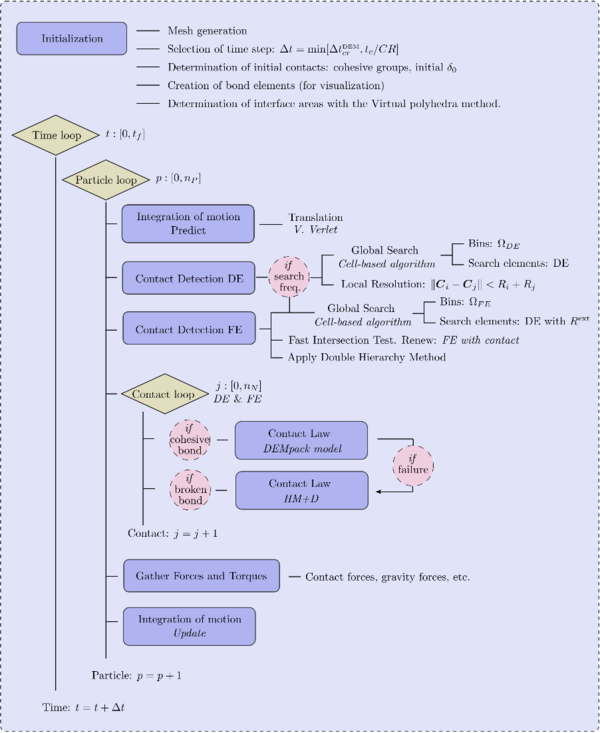

From a computational point of view a basic DEM algorithm consists of three basic steps:

|

| Figure 3: Basic computational scheme for the DEM |

After an initialization step, the time loop starts. First, the neighbouring particles for every discrete element needs to be detected (section 2.2) as well as the contact with rigid boundaries included in the simulation domain (chapter 3). Afterwards, for every contacting pair a the contact model is applied (section 2.5) to determine the forces and torques that have to be added to the rest of actions to be considered on a particle. Finally, given all the forces and the torques, the equations of motion are integrated and the particle's new position is usually calculated by means of an explicit time marching scheme (section 2.6). At this point, new contacts have to be detected and thus, the loop starts again. This sequence repeats over time until the simulation comes to an end.

2.2 Contact detection

Due to the method formulation, the definition of appropriate contact laws is fundamental and a fast contact detection is something of significant importance in DEM. Contact status between individual objects, which can be two DE particles or a DE particle and a boundary element (chapter 3), can be calculated from their relative position at the previous time step and it is used for updating the contact forces at the current step. The relative cost of the contact detection over the total computational cost is generally high in DEM simulations. Therefore, the problem of how to recognize all contacts precisely and efficiently has received considerable attention in the literature [37,38].

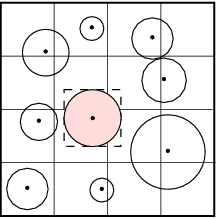

Traditionally, the contact detection is split into two stages: Global Neighbour Search and Local Contact Resolution. By the application of this split the computational cost can be reduced from , in an all-to-all check, to .

Global Contact Search

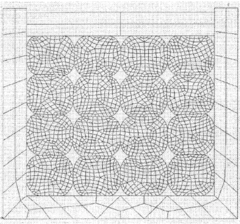

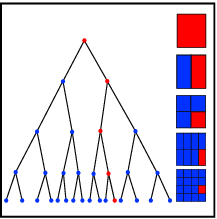

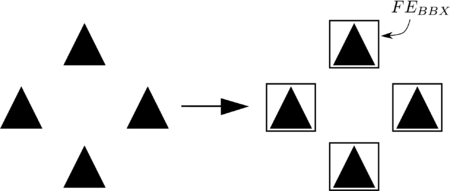

It consists on locating the list of potential contact objects for each given target body. There are two main basic schemes: the Grid/Cell based algorithms and the Tree based ones, each of them with numerous available versions in the literature.

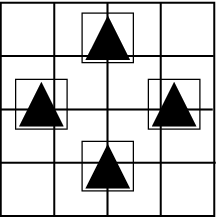

|

|

| (a) Grid/Cell-based structure | (b) Tree-based structure |

In the Grid based algorithms [39,40,41] a general rectangular grid is defined dividing in cells the entire domain (figure cell). A simple bounding box (rectangular or spheric) is adopted to circumscribe the discrete elements (of any shape) and is used to check in a approximate way which are the cells that have intersection with it. Those intersecting cells, store in their local lists the particles contained in the bounding boxes. The potential neighbours for every target particle are determined by selecting all the elements stored in the different cells where the bounding box of that target particle has been assigned to.

In the Tree based algorithms [42,43,44,45] each element is represented by a point at coordinates . Starting from a centred one, it splits the domain into two sub-domains. Points that have larger coordinate () are placed in one sub-domain while points with smaller coordinates () in the other sub-domain. The method proceeds for next points alternating every time the splitting dimension and obtaining a tree structure like the one shown in figure tree. Once the tree is constructed, for every particle the nearest neighbours is determined following the tree in upwind direction.

Han et al [46] compared the most common Global Neighbour Search algorithms (cell-based and tree-based) in simulations with spherical particles. Numerical tests showed better performance for the cell based algorithms (D-Cell [40] and NBS [39]) over the tree-based ones (ADT [42] and SDT [44]), specially for large-scale problems. It should be noted also that the efficiency depends on the cell dimension and, in general, the size distribution can affect the performance. Han et al [46] suggest a cell size of three times the average discrete object size for 2D and five times for 3D problems. It is worth noting that, using these or other efficient algorithms, the cost of the Global Neighbour Search represents typically less than 5 percent of the total computation while the total cost of the search can reach values over 75 percent [14], specially when the search involves non-spherical geometries since it requires, in general, the resolution of a non-linear system of equations (see the case of superquadrics [4,47] or polyhedra [48,49,50]). In this sense, the focus should be placed on the Local Contact Resolution check rather than on optimizing the Global Neighbour Search algorithms.

Local Resolution Check

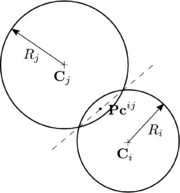

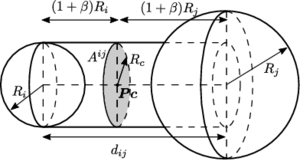

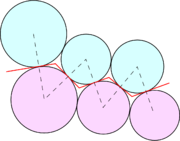

The local contact detection basically consists in determining which of the potential neighbours found during the global search algorithm constitute an actual contact with the target particle and to determine their contact characteristics (point of contact, normal direction, etc.). The case of spheres is trivial (fig. 4), contact exists if the following condition is met:

|

|

(1) |

and the normal and point of contact can be easily determined as it will be detailed in section 2.4.

|

| Figure 4: Spherical particles in contact |

The problem of contact determination becomes complex and time consuming when other geometries such as superquadrics, polyhedra or NURBS are used to represent the particles or boundaries. A way to improve the efficiency is to take advantage of the temporal coherence. Normally the duration of a contact is encompassed by several calculation time steps and therefore the particle positions will only change a little bit. In this regard, it seems wise to perform the contact detection after several time steps instead of at every time step aiming to reduce the computational cost that it involves. However, if the contacts are not determined when the particles start to collide, the indentations will achieve high values which will lead to inaccurate results and numerical instabilities (section 2.6.4).

A possible solution for this issue is the use of a technique known as Verlet neighbouring lists [51,4]. It consists on using enlarged bounding boxes in the global search so that more remote particles are stored as well. This local Verlet list need no update during several time steps since the particles move only small distances every step. This way it can be assured that no contacts are missed along the simulation and the frequency of the search is reduced. This method is efficient for cases with high dispersion of particles.

In the framework of this dissertation, a basic cell-based algorithm [40] is chosen which has been parallelized using OMP. The geometries used for the particles are only spheres or clusters of spheres and thus the local detection is efficient. The treatment of the contact with FE representing rigid or deformable boundaries is extensively discussed in chapter 3.

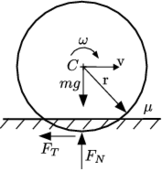

2.3 Equations of motion

In the basic soft particle DEM approach the translational and rotational motion of particles are defined by the standard equations for the dynamics of rigid bodies. For the special case of spherical particles, these equations can be written as:

|

|

(2) |

|

|

(3) |

where , , are respectively the particle centroid displacement, its first and second derivative in a fixed coordinate system , m is the particle mass, the inertia tensor, is the angular velocity and the angular acceleration.

The forces {F} and the torques {T} to be considered at the equations of motion (eq.2 and eq. 3) are computed as the sum of:

- all forces {F}^{ext} and torques {T}^{ext} applied to the particle due to external loads.

- all the contact interactions with neighbouring spheres and boundary finite elements {F}^{ij}, j=1,\cdots , n^c, where i is the index of the element in consideration and j the neighbour index of the entities (particles or finite elements) being in contact with it.

- all forces {F}^{damp} and torques {T}^{damp} resulting from external damping.

This can be expressed for every particle \end{array}</math>i as:

|

|

(5) |

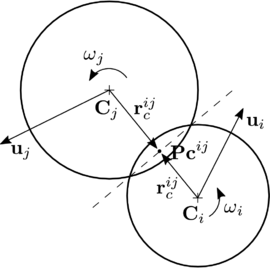

where is the vector connecting the centre of mass of the -th particle with the contact point with the -th particle (eq. 8). and satisfy . Fig. 5 shows contact forces between two spherical particles.

The rotational movement equation (3) is a simplified version of the Euler equations coming from the fact that a sphere has constant coefficients for its three principal inertia axes which are independent of the frame. The complete equations can be found in section 2.7 where the case of generic particle shapes is discussed.

2.4 Contact kinematics

The forces and torques that develop from a contact event are derived from the contact kinematics at the point of contact . The local reference frame in the contact point is defined by a normal and a tangential unit vectors as shown in figure 5.

|

|

| (a) Contact between two particles | (b) Contact force decomposition |

| Figure 5: Kinematics of the contact between two particles | |

The normal is defined along the line connecting the centres of the two particles and directed outwards from particle .

|

|

(6) |

The indentation or inter-penetration is calculated as:

|

|

(7) |

where , are the centre of the particles and , their respective radius.

The vectors from the centre of particles to the contact point and are in general dependent on the contact model. they should take into account the contribution of each particle to the equivalent stiffness of the system. Eq. 8 describes the simple case of two linear springs with different Young's modulus set in serial:

|

|

(8) |

The position of the contact point can then be determined from any of the particles:

|

|

(9) |

The velocity at the contact point is determined by eq. 10 taking into account the angular and translational velocities of the contacting particles, as shown in fig. 5.

|

|

(10) |

In case of contact with a boundary , the velocity of the rigid (or deformable) structure at the contact point has to be determined. If finite elements are used to discretize the boundaries, typically the velocities can be interpolated from the nodal velocities by means of the shape functions (see chapter 4). Equation 10 is then modified to:

|

|

(11) |

The velocity at the contact point can be decomposed in the local reference frame defined at the contact point as:

|

And thus, the definition of the tangential unit vector becomes:

|

|

(13) |

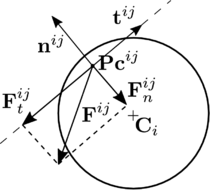

Now the contact force }{F}^{ij}\mbox{ between the two interacting spheres and can be decomposed into its normal and tangential components (Fig. 5):

|

|

(14) |

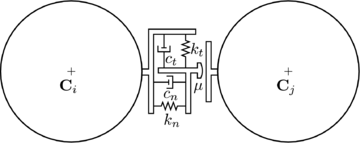

The forces , are obtained using a contact constitutive model. Standard models in the DEM are characterized by the normal and tangential stiffness, normal and tangential local damping coefficients at the contact interface and Coulomb friction coefficient represented schematically in Fig. 6 for the case of two discrete spherical particles.

|

| Figure 6: DEM standard contact rheology |

Some of the most common models are detailed in the next section 2.5. The models used in a combined DE-FE strategy are described in Chapter 4.

2.5 Contact models

The contact between two particles poses in general a complex problem which is highly non-linear and dependent on the shape, material properties, relative movement of the particles, etc. Theoretically, it is possible to calculate these forces directly from the deformation that the particles experience during the contact [52]. In the framework of the DEM however, simplified models are used which depend on a few contact parameters such as the particles relative velocity, indentation, radius and material properties such as the Young's modulus and Poisson's ratio toghether with some parameters that summarize the local loss of energy during the contacts.

The most common model is the so-called linear spring-dashpot model (LS+D) proposed by Cundall and Strack [2] which has an elastic stiffness device and a dashpot which introduces viscous (velocity-dependent) dissipation. This model, while being the simplest one, happens to yield nice results as described in the work from Di Renzo and Di Maio [53] for the case of elastic collisions and in the work of Thornton [54] for the case of inelastic collisions. This model is described in section 2.5.1.

In a second level of complexity, we find models that derive from the theory of Hertz-Mindlin and Deresiewicz. Hertz [55] proposes that the relationship between the normal force and normal displacement is non-linear. Mindlin and Deresiewicz [56] proposed a general tangential force model where the force-displacement relationship depends on the whole loading history as well as on the instantaneous rate of change of the normal and tangential force or displacement. This model was adapted to the DEM by Vu-Quoc and Zhang [57] and later by Di Renzo and Di Maio [53]. This model is quite complicated and requires high computational effort. Other simplified models exist [58,53,54] which consider only the non-slip regime of the Mindlin theory [59]. The model presented in section 2.5.2 is the simplified model by Thornton et al. [54], labeled HM+D.

Other models exist in literature which introduce plastic energy dissipation in a non-viscous manner. This includes the semi-latched spring force-displacement models of Walton and Braun [60] which uses, for the normal direction, different spring stiffnesses for loading and unloading. Similarly, Thornton [61] introduced a model in which the evolution of the contact pressure can be approximated by an elastic stage up to some limit followed by a plastic stage.

Unless the contrary is specified, the HM+D contact law will be used in examples of the thesis. In general, the criterion suggested here is to employ this model with the real material parameters whenever the physics of the contact have influence in the simulation results. In other cases, where the details of the contacts are not relevant, both linear and Hertzian contact laws can be used as a mere penalty technique being the stiffness value a trade-off between simulation time and admissible interpenetration. The model presented for the cohesive materials in chapter 5 is an extension of the linear law (LS+D).

2.5.1 Linear contact law (LS+D)

The model presented here corresponds to a modification of the original model from Cundall and Strack [2] in which the damping force is included in the way the contact rheology has been presented (figure 6).

Normal force

In the basic linear contact law the normal contact force is decomposed into the elastic part and the damping contact force :

|

|

(15) |

The damping part is a viscous force which models the loss of energy during a contact. It also serves as a numerical artifact that helps to decrease oscillations of the contact forces which is useful when using an explicit time scheme.

Normal elastic force

The elastic part of the normal compressive contact force is, in the basic model, proportional to the normal stiffness and to the indentation (or interpenetration) (eq. 7) of the two particle surfaces, i.e.:

|

|

(16) |

Since no cohesive forces are accounted in the basic model. eq. 16 holds only if , otherwise . The cohesive contact will be considered in Chapter 5.

Normal contact damping

The contact damping force is assumed to be of viscous type and given by:

|

|

(17) |

where is the normal relative velocity of the centres of the two particles in contact, defined by:

|

|

(18) |

The damping coefficient is taken as a fraction of the critical damping for the system of two rigid bodies with masses and connected by a spring of stiffness with:

|

|

(19) |

with and is the equivalent mass of the contact,

|

|

(20) |

The fraction is related with the coefficient of restitution , which is a fractional value representing the ratio of speeds after and before an impact, through the following expression (see [62]):

|

|

(21) |

Contact duration

The equation of motion describing the collision of particles with the LS+D model in the normal direction is achieved solving the differential equation resultant from the application of equation 2 in a frame centred at the point of contact:

|

|

(22) |

Eq. 22 can be rewritten as [62]:

|

|

(23) |

Where is the frequency of the undamped harmonic oscillator and is the part accounting for the energy dissipation.

The solution of the eq. 22 for the initial conditions and and for the sub-critical damped case1 ( or ) reads:

(1) The cases of critical and super-critical damping yield to other solutions which can be found in [63]

|

|

(24) |

And the relative normal velocity of the spheres:

|

|

(25) |

Now the contact duration can be determined from the condition , which combined with eq. 24 gives:

|

|

(26) |

Note that the contact duration does not depend on the initial approaching velocity which is obviously wrong as the formulation is not derived from the theory of elasticity [52] (see section 4.4 for more details).

The coefficient of restitution can be rewritten as:

|

|

(27) |

The inverse relationship allows the determination of the parameter of the model from the restitution coefficient , with the intermediate calculation of :

|

|

(28) |

Finally, the maximum indentation can be obtained from the condition :

|

|

(29) |

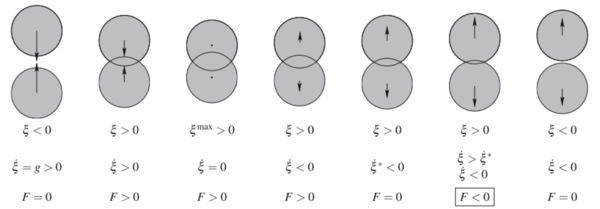

Note on tensional forces

It has been appointed by different authors [62,64,54] that this simple model presents unrealistic tension force when the particles are separating if the damping force is large enough (Fig. 7). Normally in the implementation of the codes the normal force is constrained to be exclusively positive, i.e always, as no tractions occur in frictional cohesion-less contacts. In this situation the definition of the contact duration should be modified as it has been derived by Schwager and Pöschel [64]. ] ]

|

| Figure 7: The different stages of a normal collision of spheres with a viscous damped model. Taken from: Fig. 1 in Schwager and Pöschel [64] |

The determination of the damping coefficients and the maximum indentation vary accordingly. It is not possible to derive an explicit expression for the damping coefficient in function of the restitution coefficient . Fitting curves are proposed in [54].

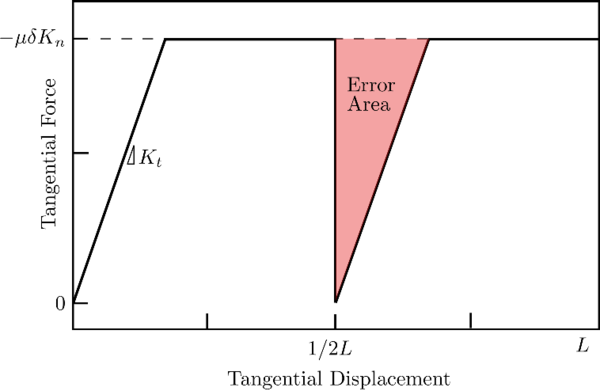

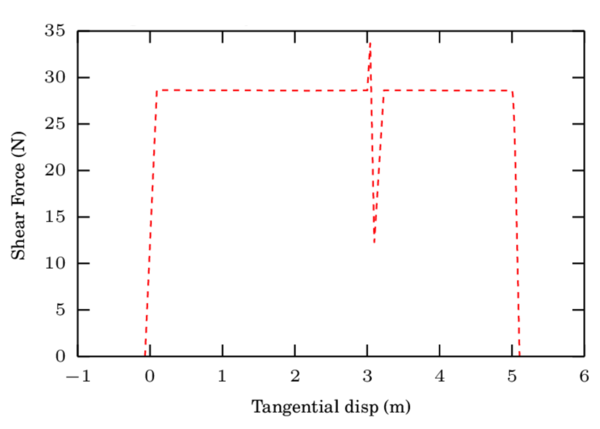

Tangential frictional contact

In the original model from Cundall and Strack [2] the relationship between the elastic shear force and the relative tangential displacement is defined through a regularized Coulomb model. The update of the tangential force at time step reads:

|

|

(30) |

Several authors (including the original paper) calculate the increment of tangential displacement at a given time step , , as . In our in-house code implementation it is calculated from the integration of the relative displacement and rotation in the local frame:

|

In the original paper [2] the damping is included only during the non-sliding phase () and it is applied afterwards as an extra force which opposes the relative velocity. The magnitude of the damping force is evaluated as where the tangential damping coefficient. In other authors' works and also in our code implementation it is chosen to include the dissipation in the check for sliding. In case of sliding (), extra decision on how to distribute the resultant tangential force in elastic and dissipative part have to be taken. This will not be discussed here. Eq. 30 modifies as:

|

The previous time step forces are transferred from its previous local coordinate frame to the new local contact frame with a rotation of the force vector (section 2.7.1).

Selection of the stiffness and damping parameters

The selection of the normal stiffness is, in the LS+D model, a design parameter. The general rule of thumb is that the value of should be large enough to avoid excessive particle inter-penetration but at the same time should be small enough to permit reasonable simulation time steps (section 2.6.4) [65].

Cundall and Strack [2] investigated several values for the relation in the range , obtained from the following expression:

|

|

(33) |

The values for the damping in the original paper [2] are selected as a proportion of the respective stiffnesses:

|

Normally, the selection of will be based on the desired restitution coefficient through eq. 19 and 21. Alternatively, Schäfer [66] suggests a value of equal to two-sevenths of the normal stiffness coefficient and a damping as half of the normal damping coefficient. Thornton [67], in his turn, suggests a value of that yields the same contact duration as the one predicted by the Hertzian theory (section 2.5.2).

(1) The cases of critical and super-critical damping yield to other solutions which can be found in [63]

2.5.2 Hertzian contact law (HM+D)

As introduced in section 2.5, there exist in literature several contact laws under the framework the Hertzian contact theory [55]. The model chosen for this dissertation is an adaptation of the one referred as HM+D model in the work by Thornton [54] due to its balance between simplicity and accuracy in both elastic [53,67] and inelastic collisions [54]. This is a model based on the original one by Tsuji [58] in which the tangential spring is provided by the no slip theory of Mindlin [59].

The magnitude of the normal force can be calculated as:

|

|

(35) |

The tangential update has two branches whether the normal force is increasing (loading phase) or decreasing (unloading case). For the loading phase the tangential force is increased as usual due to the tangential displacement (Eq. 36.a). In the unloading phase, however (Eq. 36.b), the tangential force must be reduced (even with no tangential displacement) due to the reduction in the contact area. The interpretation of this is that the previous tangential force can not longer be supported [54].

|

Finally, the check for sliding is performed restricting the maximum tangential force to the Coulomb's friction limit:

|

The stiffness parameters were described by Tsuji [58] following from the Hertz theory [55] and the works of Mindlin and Deresiewicz [56]:

|

The same for the damping parameters:

|

The expressions presented here (eq. 9.2 and 9.2) are a generalization to the case of two spheres and colliding with different values of , , and . This generalization includes the case of a sphere colliding with a fixed wall which will be discussed in section 2.5.3.

|

Although the selection of the stiffness has here a physical meaning, it is common practice however, to diminish its value to increase the calculation speed in simulations where the correct contact duration and rebound angles are not of capital importance. The derivation of the force-displacement relationship and the collision time by the Hertzian theory are described in the Appendix A.

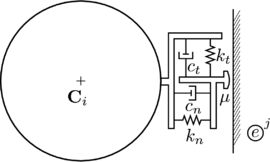

2.5.3 Contact with rigid boundaries

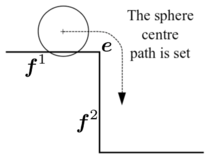

Rigid boundaries are commonly introduced in a DE simulation to model the interaction of particles with mechanical components that can be either fixed or have an imposed rigid body motion. Although they are normally discretized with a FE mesh for contact detection purposes (section 3.1), they are not calculated by means of a FE procedure. The rheology of a particle contacting a FE is presented in figure 8.

|

| Figure 8: DE-FE standard contact rheology |

Same as for DE/DE contact, Hertzian contact law is preferred to model the contacts or impacts in a physical basis. Alternatively the linear contact law can still be used as basic penalty method. The adaptation of the presented Hertzian contact law to the case of rigid boundaries is straightforward, it simply requires the particularization of the equivalent contact parameters summarized in 9.2 setting: and . The normal stiffness of the wall is left as an input parameter so that a certain elasticity of the wall can be modelled. Since the tangential displacement of the wall will be in most cases much smaller than the particle's one, it is recommended to be set [58]. The equivalent values become:

|

The stiffness and damping parameters are modified accordingly inserting these equivalent values in eq. 9.2 and eq. 9.2. The friction value to be employed in this case is a new parameter to be introduced, which is characteristic of the contact between the two materials involved and might be different from the particle-particle friction.

Additionally, special contact laws can be applied which model other effects such as a specific dynamic response, wear, plasticity, thermal coupling, etc. [68,69,70].

2.5.4 Rolling friction

It should be noted that the use of spherical particles to represent real materials may lead to excessive rotation. To avoid this effect the rolling resistance approach has been used. This approach consists in imposing a virtual resistive torque which is proportional to the normal contact force and opposites the rolling direction. The rolling resistance torque is defined as;

|

|

(42) |

where is the rolling resistance coefficient that depends on the material, is the smallest radius of the DEs in contact and the relative angular velocity of both DEs. Note that for the case where particle is in contact with a wall ().

An improvement to the classical Rolling Resistance Model A presented by Wensrich and Katterfeld [71] has been developed by Irazábal [72] in order to avoid the instabilities that appear when is close to .

2.6 Time integration

The equations of motion introduced in section 2.3 can be numerically integrated to obtain a solution of the problem. Traditionally there are two strategies to achieve this: a) An explicit scheme where the information at the current (or previous steps) suffices to predict the solution at the next step. b) An implicit scheme, which requires the solution of a non-linear system of equations to compute the state at the new time step. The disadvantage of the explicit schemes is that they require the time step to be below a certain limit in order to be stable. Implicit schemes instead, are unconditionally stable and thus, allow for larger time steps.

Some analysis on both implicit and explicit methods for discrete element simulations showed that the second one is generally preferable [73,74]. Implicit algorithms turn to be not efficient for DEM simulations because of the nature of the dynamics of particles where relatively large motions are simulated combined with very small characteristic relative displacements between particles during contact events. In order to correctly capture the dynamics of the contact, the time resolution should be several times smaller than the duration of these contacts [74]. Under this condition, the explicit integration yields sufficient accuracy and the time step is generally below its stability limits (see section 2.6.4). Following the same reasoning, low order explicit schemes are usually preferred rather than higher order ones. Another important outcome of the use of an explicit integration is the easier parallelization of the code and the avoidance of linearization and employment of system solvers.

In other situations where the same contacts are kept for large simulation times, such as cohesive models for DE (chapter 5), the use implicit schemes can be advantageous. Otherwise, the stiffness matrices have to be rebuild, in general, at each iteration and time step due to the formation and destruction of contacts. Amongst the most popular implicit approaches in DEM is the Discontinuous Deformation Analysis [75].

2.6.1 Explicit integration schemes

In the present dissertation an explicit integration is used. Next, four different one-step integration algorithms with similar computational cost are described and compared in this section. The derivation of these methods comes from the application of the Taylor series approximation to the second order differential equations of motion (2) that describes the problem.

|

|

(43) |

Forward Euler

The forward difference approximation of the first derivative of a function reads as:

|

|

(44) |

The terms can be rearranged to obtain an integration formula:

|

|

(45) |

which is applied to the integrate the acceleration and the velocity respectively:

|

The truncation error of the Taylor expansion approximations are of . Hence, the method is referred to as a first order approximation of the displacement and velocities.

Symplectic Euler

The Symplectic Euler is a modification of the previous method which uses a backward difference approximation for the derivative of the position:

|

|

(48) |

The algorithm is as follows:

|

This way a higher accuracy and order of convergence can be achieved as it is shown in the numerical convergence analysis performed in the following section 2.6.3.

Taylor Scheme

The Taylor schemes are a family of integration methods which make use of the Taylor expansion (43) to approximate the next values of the variable of interest. If the series are truncated at the first derivative for the velocity and at the second derivative for the position, the following integration rule is obtained:

|

Which is a first order integrator for the velocity and a second order integrator for the position.

Velocity Verlet

This algorithm is sometimes simply called Central Differences [76,38] and some other times it is interpreted as the velocity form of the Verlet algorithm [74,77]. It also coincides with the special case of the Newmark-beta method [78] with and . The derivation presented here is the same as it is described by Belytschko in [76]. The central difference formula is written as:

|

|

(53) |

Applying it to the velocity at an intermediate position :

|

|

(54) |

and to the acceleration at the time step :

|

|

(55) |

Inserting equation 54 and its counterpart for the previous time step () into equation 55, the central difference formula for the second derivative of the displacement is obtained:

|

|

(56) |

The algorithm follows from the rearrangement of equations 54 and 55

|

Since it may be necessary to have both velocity and position evaluated at every time step of the discretization, a split in the calculation of can be performed.

|

The implementation of the method is summarized in the following table:

| Initialization of the scheme. | , |

| while | |

| Update step: | , |

| First velocity update: | |

| Position update: | |

| Calculate forces | |

| Calculate acceleration: | |

| Second velocity update: |

This is the selected scheme for the examples in this dissertation.

2.6.2 Integration of the rotation

The particular case of spherical particles simplifies the equations for the rotation of rigid bodies yielding to equation 3. Some authors [79,80,60] adapt a simple central difference scheme to integrate the equations:

|

The vector of incremental rotation is then calculated as:

|

|

(63) |

Knowledge of the incremental rotation suffices to update the tangential contact forces. If necessary, it is also possible to track the rotational position of particles, as detailed in section 2.7.1.

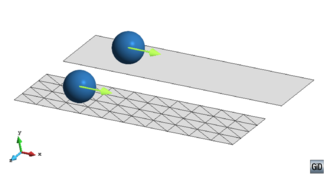

2.6.3 Accuracy analysis

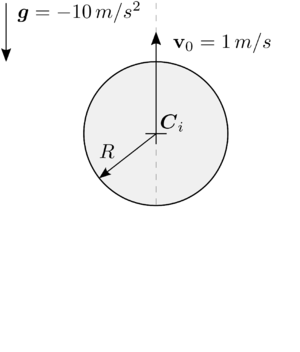

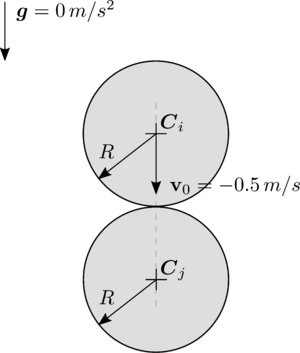

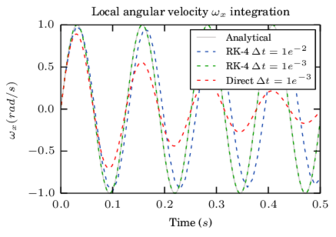

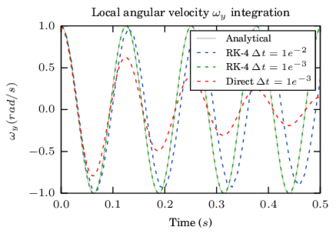

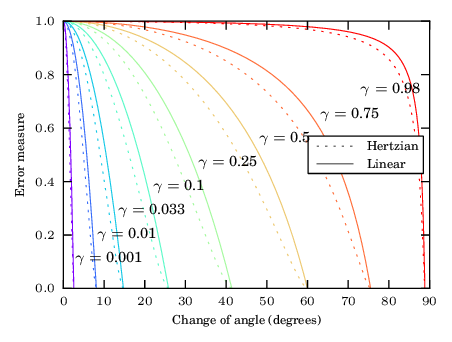

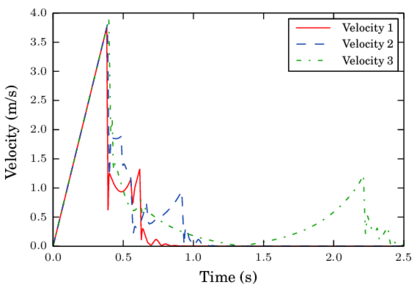

In this section the error of the different integration methods previously introduced is addressed by means of accuracy and convergence analysis. Three cases representative of translational motion occurring in a DEM simulation are analysed here: free parabolic motion, normal contact between two spheres using a linear contact law and normal contact between two spheres using a Hertzian contact law. The description of the test examples is in figure 9. A similar analysis has been performed by Samiei [74] for the comparison of some explicit schemes against implicit integration.

The case of rotational motion is analysed in section 2.7.3 where a higher order scheme is implemented for the case of a generic rigid body which can be also applied to the spheres. It is shown that the integration of the rotation equation requires higher order schemes for similar levels of accuracy as the one-step methods.

|

|

| (a) Set-up parabolic motion | (b) Set-up normal contact |

| Figure 9: Examples for the accuracy and convergence analysis on time integration schemes | |

Parabolic motion analysis

An initial upwards velocity of is set to a particle situated at the origin of coordinates which moves freely only under the effect of gravity which is set to during seconds.

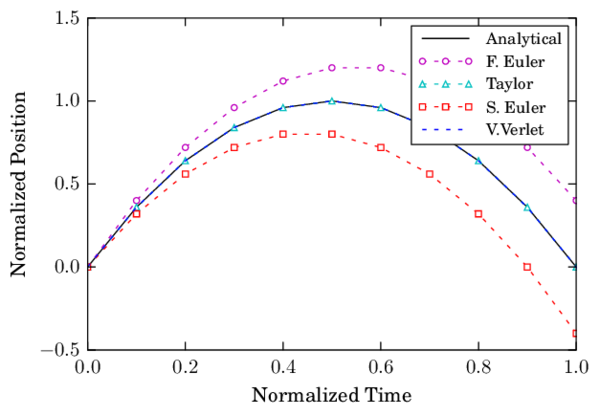

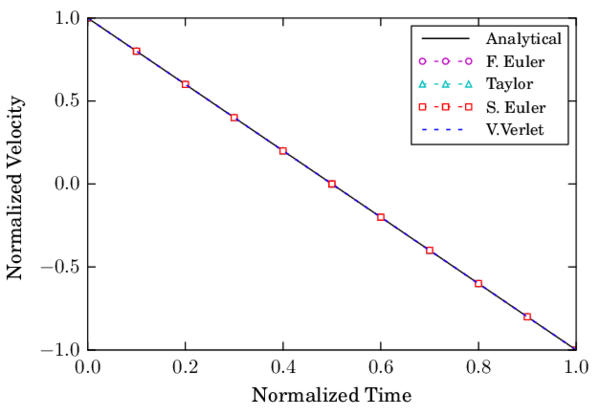

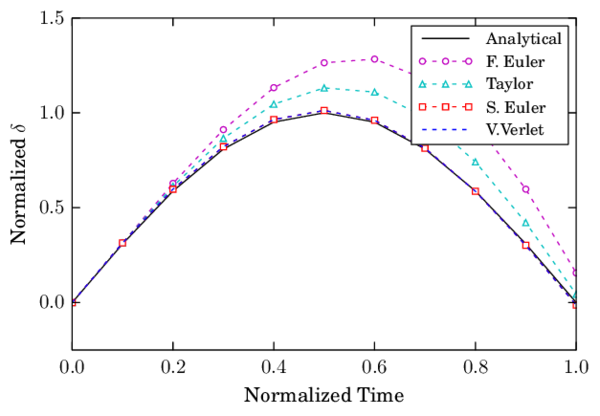

A numerical integration of the problem is performed with the presented methods and compared against the analytical solution. The time step is chosen to be a tenth of the total time so that the error of the methods can be easily observed.

|

| Figure 10: Vertical displacement of a sphere under gravity using time steps |

|

| Figure 11: Velocity of a sphere under gravity using time steps |

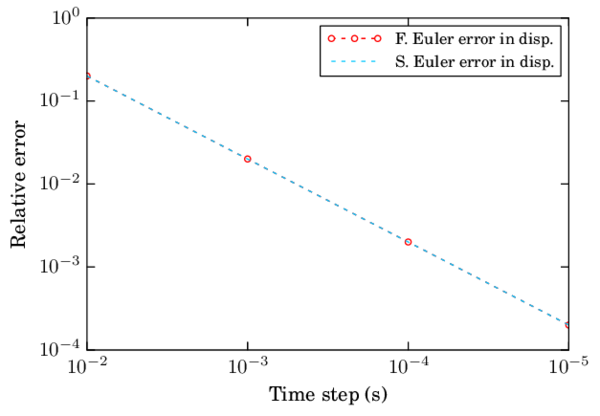

|

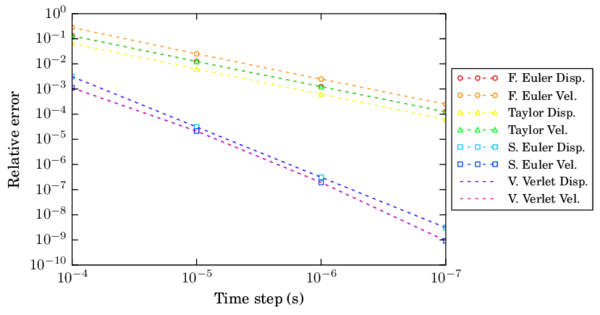

| Figure 12: Convergence in velocity and displacement for different integration schemes |

Figure 12 shows that the Forward Euler and Symplectic Euler schemes have a linear convergence when integrating the position. The convergence is omitted for other schemes and for the velocity since the algorithmic error is null.

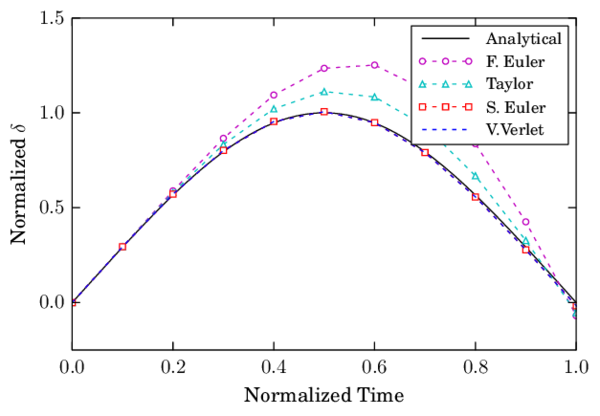

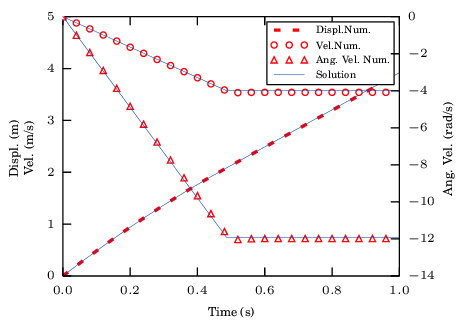

Normal contact analysis with the LS+D model

Two spheres are set in space with tangential contact (no indentation) and without the effect of the gravity. One of the spheres approaches the other one with an initial velocity in the direction of the vector joining the spheres' centres as depicted in figure 9c. The linear contact law introduced in section 2.5.1 is applied.

The expression for the maximum indentation (eq. 29) for the non-damped case turns into:

|

|

(64) |

And the contact duration (eq. 26):

|

|

(65) |

The simulation is carried out for the different schemes with a time step corresponding to a contact resolution1 () of 10, i.e. the time step corresponds to a tenth of the contact duration. The parameters of the simulation are summarized in the following Table 2:

| Contact law | Linear Contact Law (section 2.5.1) |

| Radius | |

| Density | |

| Restitution coeff. | |

| Contact time | |

|

| Figure 13: Indentation during the collision of two spheres using LS+D with |

|

| Figure 14: Velocity during the collision of two spheres using LS+D with |

|

| Figure 15: Convergence in velocity and displacement for the FE and SE schemes |

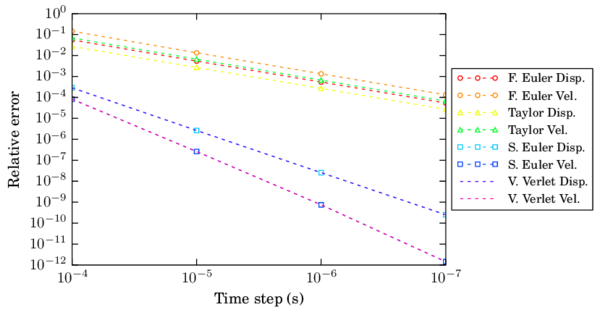

The numerical results for the maximum indentation as well as the exit velocity of the contact have been taken as the measures to evaluate the error for different time steps. Both F.E. and Taylor schemes showed linear convergence in displacement and velocity (Fig. 15). On the other hand, S.E. and V.V. showed quadratic convergence for the displacement and velocities.

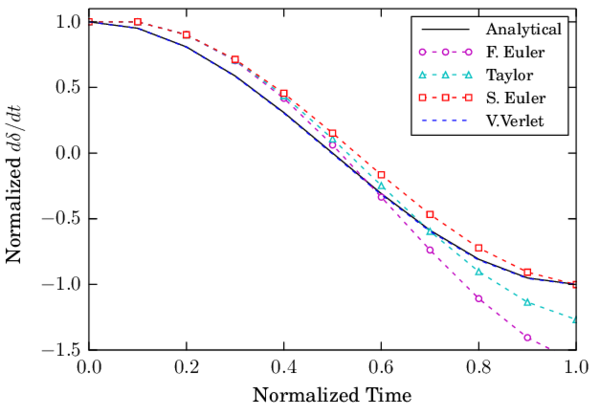

Normal contact analysis with the HM+D model

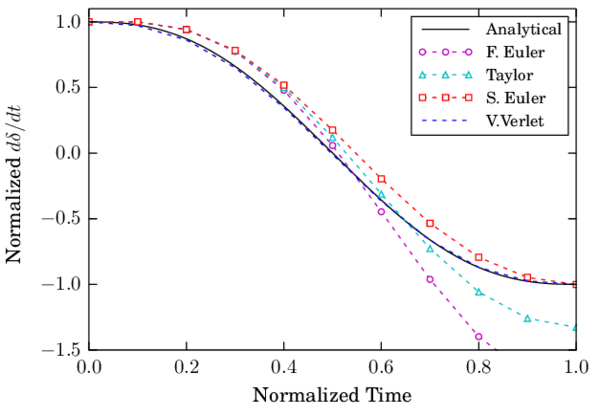

Finally, the same test is carried out using a Hertzian contact law (section 2.5.2). The derivation of the contact time duration and other properties of the Hertzian contact are detailed in Appendix A. The simulation parameters are summarized in Table 3. The different schemes are tested with a and the results for the indentation evolution and its time derivative are plotted in Fig. 16 and Fig. 17 respectively.

| Contact law | Hertzian Contact Law (section 2.5.2) |

| Radius | |

| Density | |

| Young's modulus | |

| Poisson's ratio | |

| Restitution coeff. | |

| Contact time | |

|

| Figure 16: Indentation during the collision of two spheres using HM+D with |

|

| Figure 17: Velocity during the collision of two spheres using LS+D with |

In terms of convergence, the velocity presented even a higher order than quadratic for the Verlet scheme. It shall be noticed however, that the error of this variable for the selected time steps is too small to draw conclusions on the scheme convergence.

|

| Figure 18: Convergence in velocity and displacement for different integration schemes |

(1) The concept of contact resolution defined as is discussed in section 2.6.4.

2.6.4 Stability analysis

There are many factors that can cause instabilities in a Discrete Element simulation. The first basic requisite for the time step, in a DEM simulation, is to be stable in terms of the integration scheme. Another significant source of instabilities is the lack of accuracy in the determination of the formation of contacts. In this sense, quantities such as the velocity of the particles and the search frequency play a great role in the overall stability and are not sufficiently studied in the literature. While most of the authors merely perform a scheme stability analysis [81] for the determination of the time step, a large safety factor is applied which reduces the estimated value. This reinforces the idea of using a time step based on the concept of contact resolution [12,82] defined as the number of steps used to resolve a contact event, .

Stability of the integration scheme

Explicit integration schemes present a limitation in the time step in order to be numerically stable . Belytschko [76] shows that the critical time step for a central difference method is determined by the highest natural frequency of the system }\omega _{max}\mbox{ as:

|

|

(66) |

Exact calculation of the highest frequency }\omega _{max}\mbox{ requires the solution of the eigenvalue problem defined for the whole system of connected rigid particles. In an approximate solution procedure, an eigenvalue problem can be defined separately for every rigid particle using the linearized equations of motion. The maximum frequency is estimated as the largest of the natural frequencies of the mass-spring systems defined for all the particles with one translational and one rotational degree of freedom:

|

|

(67) |

And the natural frequency for each mass-spring system (contact) is defined as:

|

|

(68) |

being the spring stiffness and the mass of particle . Now, for the case with no damping, it is possible to rewrite the critical time step as:

|

|

(69) |

The effective time step is considered as a fraction of the critical time step:

|

|

(70) |

The fraction has been studied by different authors. O'Sullivan and Bray in [81] recommend values close to for 3D simulation, and for the 2D case.

If damping exists, the critical time increment is modified with the fraction of the critical damping corresponding to the highest frequency }\omega _{max}\mbox{ in the following way [76]:

|

|

(71) |

Further details are given in section 4.4.1 where the critical time step for a explicit finite element procedure is discussed.

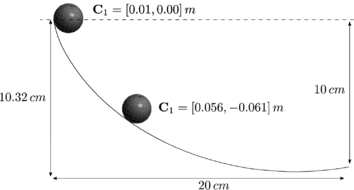

Example of the scheme stability

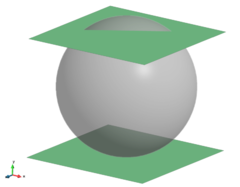

An example is presented here to show the performance of the different schemes for time steps near the critical one and smaller. A sphere of radius and density oscillates between two parallel plates which are separated using a linear contact law with stiffness . The sphere presents an initial indentation with the top plate of (fig. 19a). The example tries to simulate the instability effects that can occur locally in a system with dense particle packings.

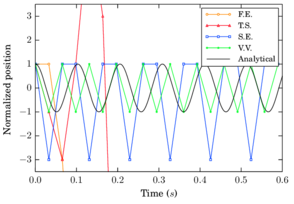

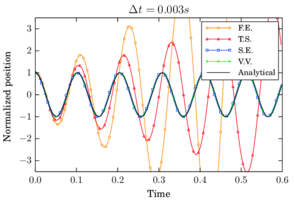

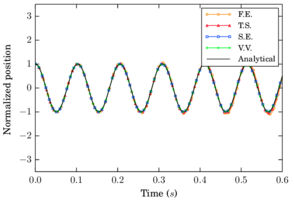

The linear mass-spring system has a theoretical frequency1 of which yields to a critical time step . The results for the four schemes are presented (fig. 19) using time steps: , and .

The results show how the Velocity Verlet is the only scheme which has an acceptable performance in the limit of the critical time step (fig. 19b) as it is a second order scheme. It was found that for a slightly larger time step the Velocity Verlet scheme becomes also unstable as predicted by the criterion in eq. 69. Symplectic Euler, which showed properties similar to a second order scheme in terms of accuracy, does not unstabilize but presents a wrong prediction of the amplitude. As it can be seen in figure 19c the first order schemes are still unstable even for a time step which is ten times smaller than the critical one, being Forward Euler the most unstable one. Finally, in figure 19d it is shown that all methods converge to the analytical solution as the time step diminishes.

|

|

| (a) Setup of the example | (b) Position evolution for |

|

|

| (c) Position evolution for | (d) Position evolution for |

| Figure 19: Setup and results for the position of the sphere between the plates | |

Stability due to lack of accuracy

The lack of accuracy can produce instabilities in a DEM simulation. The easiest way to explain it is to imagine a particle travelling with a very large velocity towards another particle or a wall; while the critical time step was shown to be independent of the velocity (eq. 71), a large velocity will imply inaccuracy in the detection of the contact and this translates into an indentation that can be unboundedly large and thus yielding to an unrealistic increase in the energy. This can also be interpreted as an insufficient resolution of the contact.

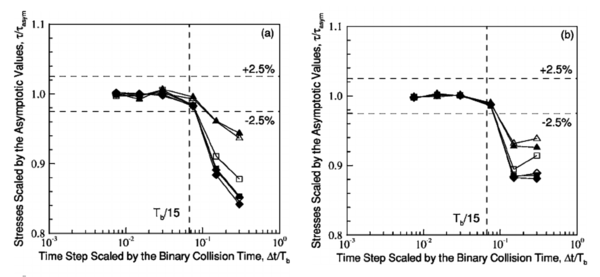

An example of this effect is found in the work by Ketterhagen et al. [12] where an analysis of how the time step affects the mean stress tensor measurements in two-dimensional granular shear flow simulations is performed. For a time step small enough the simulation results for the stress tensor (or any other variable) should be independent of the time step size. The studies performed using a linear contact model and several stiffness values showed that for a the error in the stress measurement was below while higher time steps yielded a sudden increase in the error up to values above . These inaccuracies may introduce instabilities as it was shown by their results which are referenced here in Fig. 20.

] ]

|

| Figure 20: Stress measurement error in shear flow simulations. Taken from: Fig. 4 in Ketterhagen et al. [12] |

A commonly accepted approach as an alternative to the critical time step criterion (section 2.6.4) is to select the time step of the simulation in function of the characteristic duration of the contacts, i.e, by means of the contact resolution. No agreement is found when addressing a correct value for the , several authors recommend values around which is quite conservative (See [83,84,60,65]), Keterhagen et al. recommends a contact resolution of while others like Dury [85] use larger time steps: . O'Sullivan [81] determines values of in the range using a central differences scheme with regular mono-disperse (same radius) meshes.

Summarizing, there is not a unique solution for the problem of selecting a suitable time step. It depends on many factors such as the mesh, the integration scheme, the type of simulation, the material parameters, the contact law, etc. Our suggestion is to estimate a characteristic contact time of the problem and then select a time step based on the criterion in the range depending on the conditions of the problem and the accuracy desired. This will be in general much lower than the critical time step.

(1) The multiplying the stiffness comes from the fact that this is not a single mass-spring system, instead two plates are contributing to the stiffness of the system.

2.6.5 Computational cost

From the accuracy and stability analysis it is clear that Velocity Verlet and Symplectic Euler are much superior than the Forward Euler and Taylor Scheme, being Velocity Verlet the best one among these four one-step schemes. The final aspect to take into consideration is the computational cost of the method. Simulations in real applications involve millions of particles and can also comprehend millions of time evaluations. The example described in section 6.2.2 is used here to calculate time steps. It includes approximately 30.000 spheres contacting among them and also with around rigid finite elements. The test has been run in a personal computer with an Intel Core i7 processor of 4 Gb RAM and 2.93 GHz.

| Scheme | F. Euler | Taylor | S. Euler | V. Verlet |

| Time (s) | 169.61 | 170.04 | 169.64 | 174.28 |

The results showed similar computational times for the four schemes. Velocity Verlet performed slowlier which is insignificant considering the advantages found in terms of accuracy and stability in the integration of velocities. Obviously, it will vary in every computer but in general lines we determine that it is worth to employ a Velocity Verlet scheme.

2.7 Particle shape

In granular matter, the effects of the particle geometry are crucial in the behaviour of the particles as a bulk or as individuals [86]. Often, the phenomenological approach is considered and the granular media are modelled with spheres as it is the cheapest and most efficient option for simulating a large amount of particles [79]. Alternatively, if we want a method which is purely based on contact and other interaction forces, the real geometry of the particles have to be well represented.

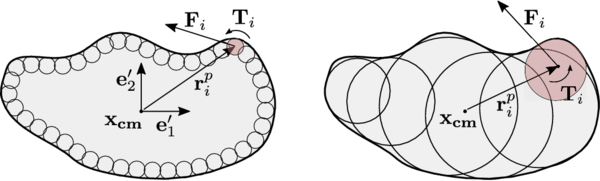

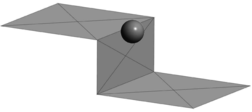

Among the most common methods there is the use superquadrics, which permits a wide range of symmetric convex shapes [4], the Granular Element Method [87], which uses NURBS to represent the particles and, finally, the use of clusters or agglomeration of spheres [88]. The last one is chosen in this work since it provides great balance between shape representation accuracy1 and efficiency in terms of computational cost. Furthermore, it is the most versatile method in terms of particle shape and can naturally include angularities. The contact forces and torques are evaluated as usual on every sphere through eq. 4 and eq. 5. The contribution from every sphere is then gathered and translated to the centre of gravity of the rigid body altogether with the additional torque yielding from the application of the this force from the centre of every particle to the centre of the cluster through the distance vector .

|

|

| Figure 21: Discretization of a rigid body using a cluster approach with spheres on the surface or overlapping in the interior |

Once the total force and torque of the rigid body is obtained, the classical Newton's second law for the translation and the Euler rotation equations have to be solved in order to obtain the full motion of the rigid body (section 2.7.2). These equations can be integrated in an explicit way, preferably with a second or higher order scheme (section 2.7.3).

(1) The use of sphere cluster can introduce artificial friction due to the irregularities in the surface meshed by spheres. This problem is discussed in [89].

2.7.1 Representation of the rotation

There are three ways which are very popular to represent rotations in the DEM: the use of Euler Angles, the use of rotation matrices and the use of quaternions. A review of the advantages and drawbacks of the methods can be found in [90].

The use of quaternions represents a clear advantage. It avoids the singularity problems that Euler angles present, it is more compact and it has less memory requirements than storing rotation matrices. Furthermore, the rotation operations are done in a more efficient way than using rotation matrices.

A rotation matrix is a orthogonal matrix which transforms a vector or a tensor from one coordinate system to another one as follows:

|

Given a rotation of degrees over a unitary vector , the rotation matrix is constructed as follows:

|

|

(74) |

A quaternion can summarize the same information just using 4 scalars. It is defined in the complex number system as:

|

|

(75) |

or in a compact form:

|

|

(76) |

Defining its conjugate as , the norm of a quaternion can be expressed:

|

|

(77) |

and its inverse:

|

|

(78) |

Now, given a rotation of degrees over a unitary vector , the resulting unit quaternion reads:

|

|

(79) |

And the conversion from quaternions to a rotation matrix is the following:

|

|

(80) |

By using unit quaternions the intermediate transformation to a rotation matrix can be skipped and the rotation can be directly applied to vectors and tensors. The specification of unit quaternions is important in order to preserve lengths during rotational transformations. The rotations are applied in the following way:

|

To do so, the multiplication operation needs to be employed. Given two quaternions and the multiplication yields a new quaternion :

|

|

(82) |

The vector involved in a quaternion multiplication (eq. 81.a) is treated as a quaternion with a null scalar part. The tensor multiplication (eq. 81.b) can be simply done treating the tensor as an assembly of vectors that are being multiplied subsequently. Note that the multiplication of quaternions is not commutative since it involves a cross product. A extended review on quaternion algebra can be found in [91].

2.7.2 Rigid body dynamics

In a rigid body the distance between two material points is constant over time. Any spatial movement undergone by a rigid body can be described with the displacement of the centre of mass plus a rotation over some axis passing through the centre of gravity.

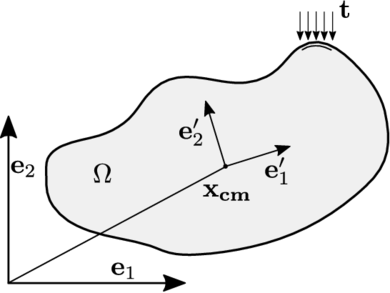

|

| Figure 22: A generic rigid body |

For sake of convenience the spatial description of the body will be used identifying the position of every material point in time with its spatial position referred to global inertial reference system . In its turn, the superscript as in denotes a quantity expressed with respect to the body fixed frame . The temporal dependence will be dropped in the following developments for clarity.

The definition of the centre of mass of a body enclosed by the domain supposing constant density is:

|

|

(83) |

Defining . The velocity and acceleration can be obtained:

|

The linear and angular momentum are defined as:

|

and the balance expressions for linear and angular momentum read:

|

Now, the expression for the translational motion is obtained combining equation 85.a and 84.b onto the equation of balance of linear momentum 86.a yielding the classical Newton's second law of motion:

|

|

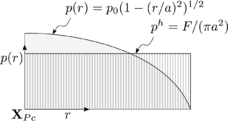

(87) |

Likewise, the expression for the rotational motion is achieved plugging equation 85.a into equation 86.a and evaluating the temporal derivative. The expression of the Euler equations is found with the use of eq. 84.b and eq. 84.a onto the balance of linear momentum (eq. 86.a).

|

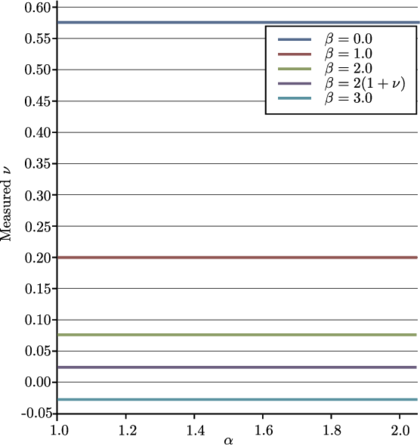

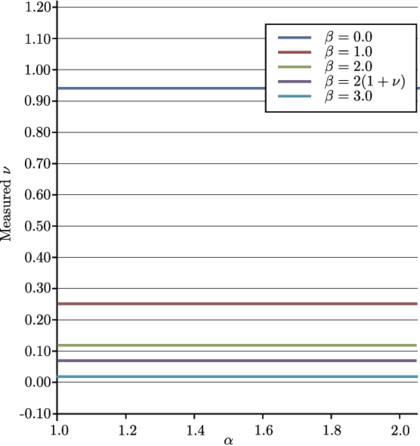

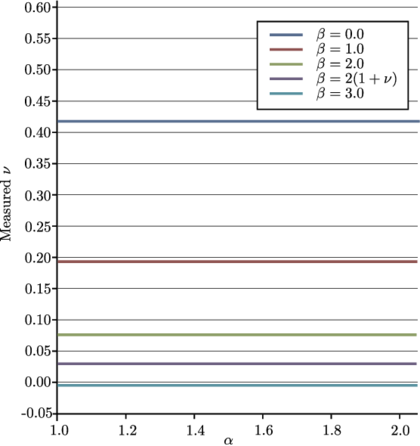

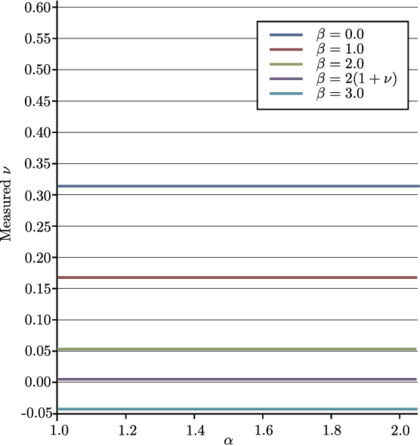

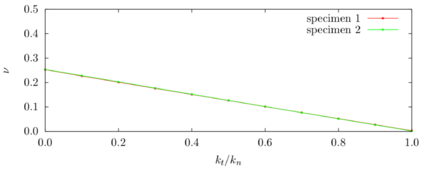

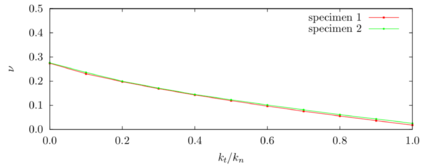

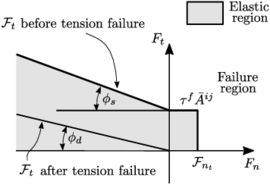

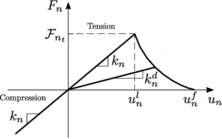

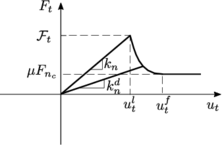

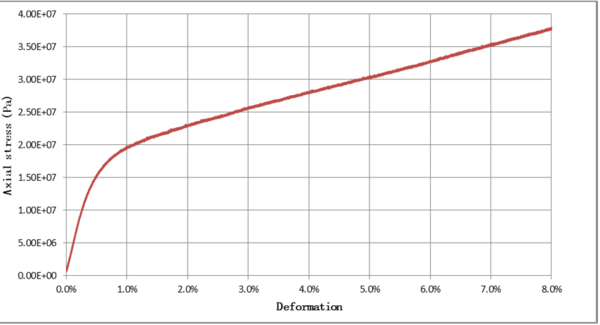

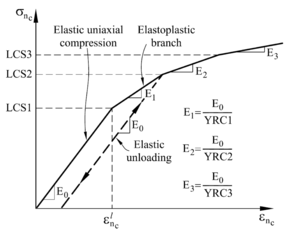

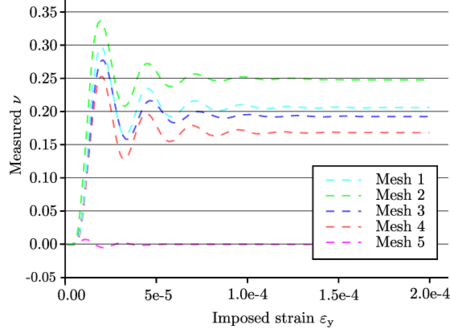

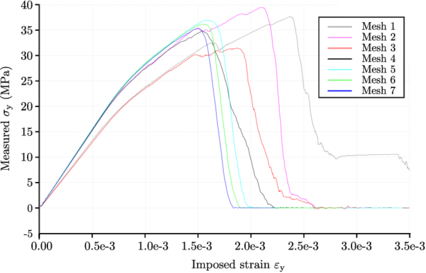

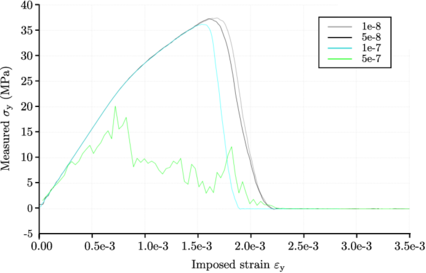

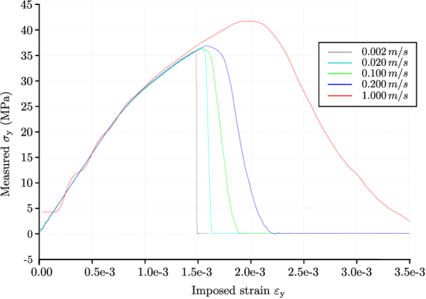

|