Abstract

Online code sharing and documentation platforms have become an essential part of the daily work of analysts and data scientists. Google Colab and Jupyter Notebook are two of the best-known platforms and are used extensively to work on datasets. These platforms allow the user to display and manipulate data, and to add visualizations and explanatory narrative text. They are highly customizable and offer collaboration and sharing capabilities. These notebooks also support a variety of languages, such as R, Python, and PySpark, provided the necessary packages are installed. Other platforms available for working on big data include R Markdown, Kaggle, and Spark Notebook, among many other popular tools. Recent developments in data science and machine learning have increased the adoption of these platforms, which in turn has led to the addition of new features to these tools. In this context, this paper presents a case study (an optimization techniques course) that illustrates the usefulness of Python notebooks.

Keywords: Game, simulator, education

1. Introduction

JupyterLab is a web-based interactive development environment for Jupyter notebooks, code, and data. It is designed to be flexible enough to accommodate a wide range of workflows in data science, scientific computing, machine learning, and artificial intelligence. It also allows developers to write their own plugins and packages, which can then be integrated seamlessly into those workflows. Jupyter Notebook, a component of JupyterLab, is an open-source web application that allows users to create and share documents containing live code, equations, visualizations, and narrative text. Use cases include data cleaning and transformation, numerical simulation, statistical modeling, and data visualization.

Google Colab, short for Colaboratory, is a product developed by Google Research. It is a free-to-use platform that executes arbitrary Python code through the browser. The tool is especially well suited to machine learning, data analysis, and education. Colab is a hosted Jupyter Notebook service that requires no setup and provides free access to cloud computing resources, including GPUs. These resources are extended if the user pays for the Pro version, Colab Pro.

1.1 Why Jupyter Notebook?

Some of the features provided by Jupyter are as follows [1-2]:

- Code and the steps performed during data manipulation and data cleaning are recorded, which makes it possible to retrace them at a later stage

- Visualizations such as graphs and figures are rendered directly in the notebook, which can easily be exported to HTML or PDF files for sharing

- Animations and dynamic or interactive visualizations are available in Jupyter Notebook, which helps users interact with the visuals and understand patterns in depth

1.2 Why Google Colab?

Having discussed some primary features that Jupyter provides, here are some features that Google Colab provides to its users [3-4]:

- It runs on Google Cloud Platform, which provides a robust and flexible service in terms of storage and management

- It is free of charge, despite the high-end GPUs and other resources that it provides for computation. Tensor Processing Units (TPUs) have also been added alongside the existing GPU and CPU runtimes

- It integrates easily with Google Drive, which makes it easy to work with large datasets stored there

2. Google Colab vs Jupyter Notebook: The verdict

Google Colab is an evolved version of the Jupyter Notebook, with enhancements and additional features. There are multiple reasons why Google Colab is often preferred in colleges and companies, some of them being [5-7]:

- Many libraries are pre-installed, which eliminates the need to install software and packages before use. Some of the most commonly used packages, such as pandas, numpy, and matplotlib, are already available in the environment

- The work is saved in the cloud, so there is no need to save it on the local system. Notebooks are stored in the user's Google Drive account and can easily be shared with others over the internet

- Notebooks can be shared with teammates, with comments and notes. Users can co-code with fellow analysts and data scientists for real-time work updates and reviews

- GPU and TPU use is free for everyone, which eliminates the need for the user to own expensive hardware. Google provides the processing power required by demanding ML and AI packages, so the local system is not burdened

- Google Colab also allows easy integration with numerous platforms, such as Google Forms, Stack Overflow, and Gists on GitHub

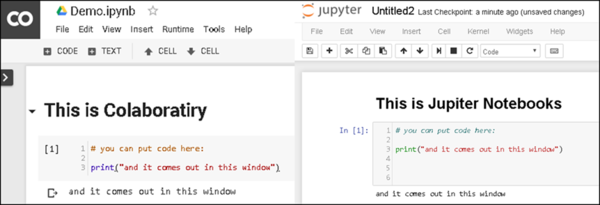

The image above (Figure 1) shows both IDEs and how a basic print statement looks on each platform. Communication, that is, sharing information with one another while developing a model or analyzing data, is an essential aspect of any project or data science activity. Consider a situation in which a developer has written thousands of lines of code that serve the purpose of the project. Sharing that code with the entire team without proper annotations or comments makes it hard for the team to interpret what each section does. In another scenario, the earlier practice was to copy snippets of code and paste them into an MS Word document or a Confluence page, after which the developer would explain what each segment of the code does. This process of ‘documenting as you code’ has become far simpler with the introduction of IDEs such as Google Colab and Jupyter Notebook.

| Figure 1. Google Colab vs Jupyter Notebook |

3. Guide to using Google Colab

A recurring problem when working with large datasets on a local system is that a machine learning model stops running with a memory error. Running the notebook on cloud resources can help with this. The following are some of the steps for setting up a project on Google Colab [7-8]:

- Setting Up a Drive

One can start by clicking on this link and then go on to create a new notebook or even upload a notebook from the local system to the environment. One can also seamlessly import a notebook directly from Google Drive if they wish to do so

- Choosing a GPU or TPU

Users are free to change the runtime on their notebook as they please. They can choose either a GPU or a TPU to work on their notebook

- Using Terminal Code

Google Colab also allows users to run terminal commands, and the popular libraries are already available in the notebook. In addition, pip install can be used to install other packages in the working environment

- Saving a File

While there are multiple ways to save a Colab file, users can either use terminal commands to save their work or go to File and choose Save a Copy in Drive
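The package-related part of these steps can be sketched in code. The following minimal sketch uses only the Python standard library: it checks whether the code appears to be running inside Colab, lists which required packages are missing, and builds the pip command that would install them. The helper names (`in_colab`, `missing_packages`, `pip_install_command`) are hypothetical, the package list is illustrative, and the Colab check is a common heuristic rather than an official API.

```python
import importlib.util
import sys


def in_colab() -> bool:
    """Heuristic check for a Colab runtime.

    Assumption: the google.colab package is already imported by the runtime,
    so it shows up in sys.modules. This is a convention, not an official check.
    """
    return "google.colab" in sys.modules


def missing_packages(names):
    """Return the top-level packages in `names` that cannot be imported."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def pip_install_command(names):
    """Build the pip command for the current interpreter (it is not executed here)."""
    return [sys.executable, "-m", "pip", "install", *names]


# Illustrative list; a course notebook might list numpy, scipy, or matplotlib instead.
required = ["json", "math"]
todo = missing_packages(required)
if todo:
    print("Would run:", " ".join(pip_install_command(todo)))
else:
    print("All required packages are available.")
print("Running in Colab:", in_colab())
```

In a notebook cell the same installation is usually written as `!pip install <package>`; the command list built above could also be passed to `subprocess.run(..., check=True)`.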

The simple steps discussed above allow a user to navigate the platform easily and make most tasks straightforward. This makes Google Colab an attractive choice of IDE for data science and machine learning. That said, there are also some downsides to using Google Colab, including the following:

- Closed Environment

Google Colab can be used by anyone to run arbitrary Python code in the browser. However, it is a relatively closed environment when machine learning engineers or data scientists want to add their own packages. Hence, the platform is great for using common tools but offers limited scope for specialization.

- Repetitive Task

Repetitive tasks are a persistent problem in Google Colab: the user needs to install additional packages and libraries again every time a new session starts. Once an instance or session is shut down, everything must be re-installed at the beginning of the next one to return to the original working state

- No Live-Editing

Unlike Google Docs or Google Sheets, Google Colab does not let multiple users work on the same file at the same time, which limits the scope for real-time collaboration. Because only one person can edit at a time, a team working on a single file needs to pass it back and forth

- Saving & Storage Problem

Uploaded files are removed when the session is restarted because Google Colab does not provide persistent storage. So, if the device is turned off, the data can be lost. Moreover, a file downloaded during the current session that will be needed later must be saved before the session expires. In addition, the user must always be logged in to a Google account, since all Colaboratory notebooks are stored in Google Drive

- Limited Time and Space

The Google Colab platform stores files in Google Drive, which offers 15 GB of free space; working on bigger datasets requires more space, which makes them difficult to handle. This, in turn, can prevent the most complex functions from executing. Google Colab allows users to run their notebooks for at most 12 hours at a time; to work for longer, users need the paid version, Colab Pro, which allows programmers to stay connected for up to 24 hours
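One common way to work around the non-persistent session storage described above is to copy results into a mounted Google Drive folder before the session ends. The sketch below shows the idea with a hypothetical helper, `persist`. In Colab, Drive is typically mounted with `drive.mount('/content/drive')` from the `google.colab` package; the destination path mentioned in the comment is an assumed layout. So that the sketch runs anywhere, the demonstration uses temporary directories instead of Drive.

```python
import shutil
import tempfile
from pathlib import Path


def persist(src: Path, dest_dir: Path) -> Path:
    """Copy `src` into `dest_dir` (created if needed) and return the new file path."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy2(src, dest_dir))


# In Colab, after `from google.colab import drive; drive.mount('/content/drive')`,
# dest_dir could be something like Path('/content/drive/MyDrive/results')
# (assumed layout, not verified here).
with tempfile.TemporaryDirectory() as session_dir, tempfile.TemporaryDirectory() as drive_dir:
    result = Path(session_dir) / "model_output.txt"
    result.write_text("objective = 0.0\n")
    saved = persist(result, Path(drive_dir) / "results")
    print(saved.name, "->", saved.read_text().strip())
```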

The points discussed above are a set of trade-offs for the platform when compared with other IDEs for data science and machine learning. If a user is comfortable with these points, Google Colab is a strong option among the wide range of coding platforms.

To conclude, even though Jupyter Notebook is a robust IDE for data scientists and analysts, Google Colab has become an evolved form of it that can make the work far easier. With the increasing amount of data and the demand for more accurate predictions, the need for powerful ML and AI techniques has grown. These techniques require high computational power, which Google Colab provides to its users. Compared with Jupyter Notebook, which uses local computational power, this is far more convenient for users who cannot afford high-end processors and GPUs.

Over and above that, mathematical problems such as convex and non-convex optimization and mixed-integer programming can be solved with the computational capabilities of Google Colab. For the purpose of demonstration, a convex optimization has been used to solve a control problem in this notebook. As in the example notebook, many other mathematical models can be solved using Google Colab and its computing capabilities.

Notebooks have evolved over the years, from a rudimentary form (IPython) to Google Colab. While the IPython notebook provided only a read-eval-print loop, Jupyter was developed to support running code and taking notes along with other interactive features. Finally, Google Colab was developed, which added real-time code collaboration as well as hardware and resource allocation for users.

Hence, for extensive machine learning and artificial intelligence work, complex mathematical model optimization, and other resource-intensive tasks, Google Colab can be preferred over Jupyter Notebook, which relies on local computational power. For universities and highly collaborative workspaces, Google Colab surpasses the traditional JupyterLab features for code development and model testing. Google Research is likely to keep improving Google Colab over time. For now, Google Colab is one of the best options on the market and covers the needs of its users well compared with the other IDEs available.

4. Case study: Optimization techniques course

We reviewed and evaluated four textbooks on mathematical optimization, engineering optimization, and engineering optimization applications [9-12], as well as three blogs with material published on the internet [13]-[15]. We chose two main sources and three secondary sources [9-11,14-15]. The first reference was chosen because its contents are up to date and coincide with the course syllabus [16], while the second reference was chosen because of its clear explanations and examples. According to the author of reference [9], “This book introduces all the major metaheuristic algorithms and their applications in optimization. This textbook consists of three parts: Part I: Introduction and fundamentals of optimization and algorithms; Part II: Metaheuristic algorithms; and Part III: applications of metaheuristics in engineering optimization.” The author of reference [10] states: “The reader is motivated to be engaged with the content via numerous application examples of optimization in the area of electrical engineering throughout the book. This approach not only provides relevant readers with a better understanding of mathematical basics but also reveals a systematic approach of algorithm design in the real world of electrical engineering.” Once these two references had been chosen, the thematic content to be developed in the notebooks was structured. Since the objective was to address all the topics of the syllabus in [16], our proposal was as follows:

- Mathematics for optimization 1
  - Upper and lower bounds
  - Basic calculus
  - Optimality
  - Norms of vectors and matrices

- Mathematics for optimization 2
  - Eigenvalues and definiteness
  - Linear and affine functions
  - Gradient vector, Jacobian matrix, and Hessian matrix
  - Convexity (convex sets, convex functions)

- Convex optimization 1
  - Unconstrained optimization
  - Gradient-based methods
  - Constrained optimization

- Convex optimization 2
  - Linear programming
  - Simplex method (basic procedure, augmented form)
  - Non-linear optimization

- Convex optimization 3
  - Penalty method
  - Lagrange multipliers
  - Karush-Kuhn-Tucker conditions

- Convex optimization 4
  - BFGS method
  - Nelder-Mead method

- Convex optimization 5
  - Trust-region method
  - Sequential quadratic programming

- Non-convex optimization 1
  - Non-convex stochastic gradient descent

- Non-convex optimization 2
  - Gradient descent for principal components analysis

- Non-convex optimization 3
  - Alternating minimization methods
  - Branch-and-bound methods

- Metaheuristic optimization 1
  - Simulated annealing

- Metaheuristic optimization 2
  - Genetic algorithms

- Metaheuristic optimization 3
  - Tabu search

- Metaheuristic optimization 4
  - Evolutionary strategies

The contents of the selected references were read beforehand to structure the proposal. Subsequently, the topics were balanced so that each could be developed in a one-and-a-half-hour masterclass.

Our approach draws on mathematics, computer science, and engineering. The purpose is to capture the rigor of mathematics along with the efficiency of algorithms and their applications in engineering. To this end, we proposed that the first two notebooks have a mathematical focus, to lay the foundations needed for the rest of the course. We also ensured that all notebooks included efficient implementations of the optimization algorithms.
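As an illustration of the kind of implementation such notebooks can contain, the following is a minimal sketch of fixed-step gradient descent on a small convex quadratic. The objective, the step size, the tolerance, and the function names are assumptions chosen for this example; they are not taken from the course notebooks.

```python
import math


def f(x, y):
    """Convex quadratic with its unique minimum at (1, -2)."""
    return (x - 1.0) ** 2 + 2.0 * (y + 2.0) ** 2


def grad_f(x, y):
    """Analytic gradient of f."""
    return 2.0 * (x - 1.0), 4.0 * (y + 2.0)


def gradient_descent(grad, start, step=0.1, tol=1e-8, max_iter=10_000):
    """Fixed-step gradient descent; stops when the gradient norm drops below tol."""
    x, y = start
    iterations = 0
    while iterations < max_iter:
        gx, gy = grad(x, y)
        if math.hypot(gx, gy) < tol:
            break
        # Move against the gradient by a constant step size.
        x, y = x - step * gx, y - step * gy
        iterations += 1
    return (x, y), iterations


minimizer, iterations = gradient_descent(grad_f, start=(5.0, 5.0))
print("minimizer:", minimizer, "f:", f(*minimizer), "iterations:", iterations)
```

A fixed step is used here only for simplicity; a line search or an adaptive step would be a natural extension.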

The importance of giving students clear concepts was always emphasized. For this reason, we decided to use as many graphic resources as possible, whether they were images loaded into the notebooks by code or images obtained from different websites related to the contents.

The preparation of the Google Colab notebooks was based on the pedagogical approach known as problem-based learning (PBL); this methodology is widely used in the formulation of educational texts and curricula [17]. It has produced good results at all educational levels, including the university level [18].

At the beginning of each notebook there is a brief description of the topics to be covered, so that students know the contents of the class beforehand. This is followed by a brief introduction, which usually contains short historical notes related to the main topic of the notebook and general ideas that help students understand the phenomena related to the topic. For example, the introduction of notebook 4 (Classic Methods II) states: “This notebook is focused on studying the Simplex method, developed in 1947 by the American mathematician George Dantzig (known as the father of linear optimization). The Simplex method is one of the canonical methods of the optimization theory; it is of great importance to efficiently solve instances of optimization problems with both the objective function and the constraints being linear functions.”

Sometimes, this introduction refers to topics studied in previous notebooks and contrasts methods and results, promoting students’ meaningful learning [19].
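To make the linear setting mentioned in the notebook 4 introduction concrete, the sketch below solves a small two-variable linear program. It does not implement the Simplex method: it enumerates the vertices of the feasible polygon by brute force, relying on the same fact that the Simplex method exploits, namely that a feasible, bounded linear program attains its optimum at a vertex. The problem data and function names are made up for illustration.

```python
from itertools import combinations

# Maximize 3x + 2y subject to constraints written as a*x + b*y <= r.
objective = (3.0, 2.0)
constraints = [
    (1.0, 1.0, 4.0),    # x + y  <= 4
    (1.0, 3.0, 6.0),    # x + 3y <= 6
    (-1.0, 0.0, 0.0),   # x >= 0
    (0.0, -1.0, 0.0),   # y >= 0
]


def intersection(c1, c2):
    """Intersect the boundary lines of two constraints (Cramer's rule)."""
    a1, b1, r1 = c1
    a2, b2, r2 = c2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        return None  # parallel boundaries, no unique intersection
    return ((r1 * b2 - r2 * b1) / det, (a1 * r2 - a2 * r1) / det)


def is_feasible(point, tol=1e-9):
    """Check that the point satisfies every constraint, up to a tolerance."""
    x, y = point
    return all(a * x + b * y <= r + tol for a, b, r in constraints)


def value(point):
    """Objective value at the given point."""
    return objective[0] * point[0] + objective[1] * point[1]


def solve_by_vertex_enumeration():
    """Return the feasible vertex with the largest objective value."""
    candidates = (intersection(c1, c2) for c1, c2 in combinations(constraints, 2))
    vertices = [p for p in candidates if p is not None and is_feasible(p)]
    return max(vertices, key=value)


best = solve_by_vertex_enumeration()
print("best vertex:", best, "objective:", value(best))
```

Enumeration grows combinatorially with the number of constraints, which is one reason the Simplex method moves from vertex to neighboring vertex instead of checking them all.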

After the introduction, students will find a section of dependencies, which are basically libraries necessary for the notebook to run correctly.

Although this section was among the first to be drafted, the necessary libraries and functionalities were collected throughout the development of the notebook, and the list was finalized only once the notebook was complete and ran successfully. Once these libraries were gathered into a single cell, the cell was moved to the beginning of the notebook. This was done primarily for two reasons. On the one hand, because Google Colab notebooks execute cells sequentially, an error occurs when a function is called before the library that contains it has been imported; it is therefore advisable to import these functionalities beforehand. On the other hand, since all the libraries are grouped in the same cell, students are always aware of the libraries they will use or learn to use during the class.
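The ordering issue can be reproduced outside a notebook. In the following minimal sketch, each string stands for one notebook cell and a shared dictionary plays the role of the notebook's namespace; the cell contents and variable names are hypothetical. Running the body cell before the dependencies cell raises a `NameError`, while running the dependencies cell first makes the same body cell succeed.

```python
# Each string stands for one notebook cell; all cells share one namespace,
# as the cells of a real notebook do.
dependencies_cell = "import math"
body_cell = "root = math.sqrt(16.0)"

namespace = {}

# Running the body first fails because `math` has not been imported yet.
try:
    exec(body_cell, namespace)
    outcome = "ran without error"
except NameError as error:
    outcome = f"NameError: {error}"
print(outcome)

# Running the dependencies cell first makes the body cell work.
exec(dependencies_cell, namespace)
exec(body_cell, namespace)
print(namespace["root"])
```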

Students then encounter the most important part of the notebook, namely its body. This contains the title of the topic to be addressed, its theoretical development, formulas, derivations, and pseudocode, all of which are necessary for a deep understanding of the topic. Mathpix Snip was used to prepare this section; this is an OCR tool that returns the LaTeX code for an image of text. Thanks to this, writing a formula inside the notebook becomes a simple task: if the formula is available digitally as an image, a single click is enough to obtain the LaTeX code that generates it [22]. After the theoretical development of the topic, students find a simple example, which is clearly delimited by the graphic separator associated with it (Figure 2).

| Figure 2. Graphic separator |

Sometimes, the examples include a practical section, so students are expected to complete the structuring and to solve the problem presented; that is the function of the graphic separator (Figure 3).

| Figure 3. Function of the graphic separator |

Students are told that it is time to interact with the exercise; this is based on problem-based learning, as explained above.

The proposed exercises include comments, hints, and aids that allow students to satisfactorily fulfil the objectives. For this reason, the code contains comments and interactive hints. Each interactive hint is an object that, when clicked, unfolds completely, as shown below (Figure 4).

| Figure 4. Interactive hints |

Interactive hints are created with the HTML element <details>, which acts as a disclosure widget: its content is hidden initially and is revealed only when users choose to open it. The aim is to encourage students to try to solve the exercise on their own, using the hints provided only when necessary.
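A hint of this kind can be sketched as follows. The helper `make_hint` is hypothetical and only builds the HTML string; the summary and hint texts are placeholders, not taken from the course notebooks.

```python
import html


def make_hint(summary: str, body: str) -> str:
    """Return a <details> block whose body stays hidden until the summary is clicked."""
    return (
        "<details>\n"
        f"<summary>{html.escape(summary)}</summary>\n"
        f"{html.escape(body)}\n"
        "</details>"
    )


hint = make_hint("Hint 1", "Compute the gradient and set it to zero.")
print(hint)
```

The resulting markup can be pasted into a text (Markdown) cell, or rendered from a code cell with `IPython.display.HTML(hint)` in Jupyter or Colab.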

There are also questions in which it is necessary to confirm that the result is correct for the future development of the notebook. To this end, there is a section that shows the answer that students should obtain when solving the proposed exercise; the graphic separator is used to do it.

Then the answer to the exercise is shown.

The answers to the exercises were tested beforehand so that students can verify whether they have assimilated the new knowledge correctly [20].

Inside the body of the notebook, students will also find historical notes or curious data that complement the information presented in the notebook; the graphic separator is used for this.

For example, the Did You Know? section of notebook 1 (Mathematics for Optimization) states: “The property that allows every non-empty set of real numbers bounded above to have a supremum is known as the ‘supremum axiom’ or ‘axiom of completeness’; it is logically equivalent to the ‘intermediate value theorem,’ a theorem that we will use later in the course.” This information is not included in the main references [9] and [10], but complements the topics addressed.

The header and footer banners, as well as the graphic separators labeled “Example,” “Your turn,” “Answers,” and “Did you know?”, were designed with the web application Canva, a graphic design tool with drag-and-drop features that provides access to more than 60 million photographs and 5 million vectors, graphs, and free and licensed fonts [21] (Figure 5).

| Figure 5. Example of banner taken from notebook 1 |

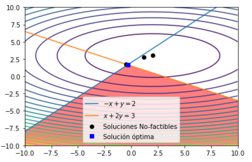

Finally, to take full advantage of the benefits of Google Colab notebooks, Python libraries such as Matplotlib, Seaborn, and plotly were used in the explanation and example sections; the main function of these libraries is to provide tools for data visualization [23]-[25]. The functions in the examples developed in the notebooks were plotted as both 2D and 3D graphs with these libraries; functions, contour lines, and even feasible sets can be plotted (Figure 6).

| Figure 6. Example taken from notebook 5 (Classic methods III) |

Most graphs are static: the user sees the graph as an image that does not change over time and cannot interact with it. However, dynamic and interactive graphs were also added, as in notebook 3 (Optimization methods).

These graphs were made with the plotly library [25]; students can move the graph with their cursor, check the results, engage more closely with the objects of the exercise, and understand its objectives.
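A static contour plot of the kind described above can be sketched as follows. The objective function, the grid ranges, and the helper names are assumptions made for this example. The grid is computed with plain Python so the sketch runs without extra packages, and Matplotlib is imported only inside `plot_contours`, which is left commented out so that the rest runs where Matplotlib is not installed.

```python
def objective(x, y):
    """Illustrative convex function with its minimum at (1, -2)."""
    return (x - 1.0) ** 2 + 2.0 * (y + 2.0) ** 2


def linspace(start, stop, num):
    """Evenly spaced values from start to stop, both included."""
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def grid_values(func, xs, ys):
    """Evaluate func on a grid; rows follow ys and columns follow xs."""
    return [[func(x, y) for x in xs] for y in ys]


def plot_contours(xs, ys, zs):
    """Draw labeled contour lines with Matplotlib (imported lazily)."""
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    contours = ax.contour(xs, ys, zs, levels=15)
    ax.clabel(contours, inline=True, fontsize=8)
    ax.set_xlabel("x")
    ax.set_ylabel("y")
    ax.set_title("Contour lines of the objective")
    plt.show()


xs = linspace(-3.0, 5.0, 81)
ys = linspace(-6.0, 2.0, 81)
zs = grid_values(objective, xs, ys)
print("grid shape:", len(zs), "x", len(zs[0]))
# plot_contours(xs, ys, zs)  # uncomment in a notebook where Matplotlib is available
```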

Finally, we would like to mention some tools that we believe are worth exploring in future developments:

- TensorFlow [26]: it contains a set of functionalities related to machine learning. Functionalities such as the optimizers inherited from Keras [27] are relevant to topics already studied in the notebooks, such as SGD or Adagrad [28].

- R [29]: it is possible to invoke functionalities and run programs written with R syntax in Google Colab by means of the rpy2 library [30]. In doing so, optimization techniques and the native optimization tools provided by R could be explored [31]. Including exercises that can take advantage of probability and statistics should also be considered.

- CVXPY [32]: it is an optimization library for Python; one of the authors of reference [12] participated in its development. Some exercises can be proposed to compare the efficiency of the algorithms presented with those of the SciPy library and then contrast them with the corresponding algorithms from the CVXPY library.

- plotly [25]: We did not explore the inclusion of interactive graphs deeply. The use of libraries such as plotly or the classic matplotlib can provide interesting pedagogical approaches as they allow users to interact with the mathematical objects they are faced with.

5. Conclusion

The development of Google Colaboratory notebooks to complement classroom learning falls within the category of educational and didactic resource development, particularly for university education. Their preparation is not very different from the preparation of the textbooks, books, or lecture notes that are generally used; it should go through rigorous phases of planning, development, and evaluation. During the development of this novel resource, the bibliography that guides the contents of the Google Colab notebooks was analyzed first. The next step was to find analogous material related to optimization techniques, either in Python or in other languages.

References

[2] https://dimensionless.in/using-jupyter-notebooks-google-colab/#:~:text=Colaboratory%20is%20a%20free%20Jupyter,for%20free%20from%20your%20browser.

[3] https://research.google.com/colaboratory/faq.html

[5] https://medium.com/@alexstrebeck/jupiter-notebooks-vs-colaboratory-67bd51803d8

[7] https://analyticsindiamag.com/a-beginners-guide-to-using-google-colab/

[8] https://analyticsindiamag.com/explained-5-drawback-of-google-colab/

[9] Abdelsalam H.M.E., Bao H.P. A simulation-based optimization framework for product development cycle time reduction. In IEEE Transactions on Engineering Management, 53(1):69-85, 2006.

[10] Thumann A., Mehta D.P. 4 electrical system optimization. In Handbook of Energy Engineering, River Publishers, pp. 61-116, 2013.

[11] Morais H., Vale Z.A., Soares J., Sousa T., Nara K., Theologi A.-M., Rueda J., Ndreko M., Erlich I., Mishra S., Pullaguram D., Faria P., Mori H., Takahashi M., Qamar Raza M., Nadarajah M., Shi R., Lee K.Y., Rivera S.R., Romero A. Integration of renewable energy in smart grid. In Applications of Modern Heuristic Optimization Methods in Power and Energy Systems, IEEE, pp. 613-773, 2020.

[12] Koutsoukis N.C., Georgilakis P.S., Hatziargyriou N.D., Mohammed O., Elsayed A., An K., Hur K., Luong N.H., Bosman P.A.N., Grond M.O.W., La Poutré H., Chiang H.-D., Cui J., Xu T., Asada E.N., London J.B.A., Saraiva F.O., Sun W., Venayagamoorthy K., Zhou Q., Wang S., Zhang Y.-F., Gusain D., Rueda J., Boemer J.C., Palensky P., Alvarez D.L., Rivera S.R. Distribution system. In Applications of Modern Heuristic Optimization Methods in Power and Energy Systems, IEEE, pp. 381-611, 2020.

[13] Boyd S., Parrilo P. 6.079 Introduction to convex optimization. Fall 2009, Massachusetts Institute of Technology: MIT OpenCourseWare, https://ocw.mit.edu. License: Creative Commons BY-NC-SA, 2009.

[14] Caro-Ruiz C., Al-Sumaiti A.S., Rivera S.R., Mojica-Nava E. A MDP-based vulnerability analysis of power networks considering network topology and transmission capacity. In IEEE Access, 8:2032-2041, 2020.

[15] Luo F.-L. Machine learning for digital front-end. In Machine Learning for Future Wireless Communications, IEEE, pp. 327-381, 2020.

[16] Pani S.K., Tripathy S., Jandieri G., Kundu S., Butt T.A. Applications of machine learning in big-data analytics and cloud computing. In Applications of Machine Learning in Big-Data Analytics and Cloud Computing, River Publishers, pp. i-xxxii, 2021.

[17] De Graaf E., Kolmos A. Characteristics of problem-based learning. International Journal of Engineering Education, 19(5):657-662, 2003.

[18] Gonzalez L. The problem-based learning model. 2019 Eighth International Conference on Educational Innovation through Technology (EITT), pp. 180-183, 2019.

[19] Alvarez D.L., Rivera S.R., Mombello E.E. Transformer thermal capacity estimation and prediction using dynamic rating monitoring. In IEEE Transactions on Power Delivery, 34(4):1695-1705, 2019.

[20] Alvarez D.L., Al-Sumaiti A.S., Rivera S.R. Estimation of an optimal PV panel cleaning strategy based on both annual radiation profile and module degradation. In IEEE Access, 8:63832-63839, 2020.

[21] https://www.canva.com/es_es/about/

[22] https://mathpix.com/about

[23] https://matplotlib.org/stable/contents.html

[24] https://seaborn.pydata.org

[25] https://plotly.com/

[26] https://www.tensorflow.org/

[27] https://keras.io/

[28] https://www.tensorflow.org/api_docs/python/tf/keras/optimizers

[29] https://www.r-project.org/

Document information

Published on 19/10/21

Accepted on 04/10/21

Submitted on 24/07/21

Volume 37, Issue 4, 2021

DOI: 10.23967/j.rimni.2021.10.003

Licence: CC BY-NC-SA license
