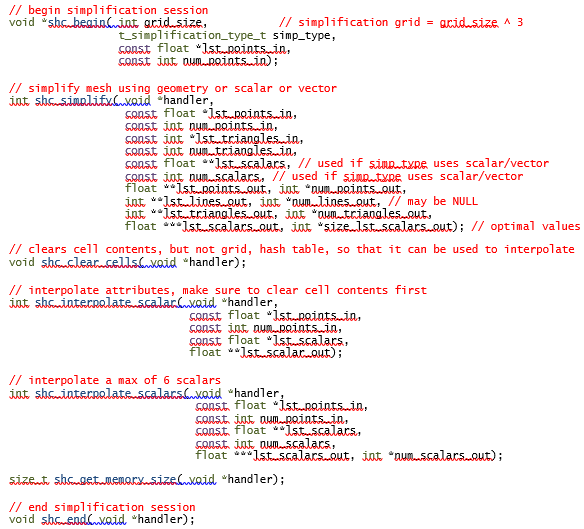

Summary

Mesh simplification is an important problem in computer graphics. Given a polygonal mesh, the goal is to generate another mesh which approximates the underlying shape but includes fewer polygons, edges and vertices. Early methods focused only on preserving the overall shape of the geometric model, whereas current methods also handle meshes with attributes (normal vectors, colors, texture coordinates) so that both the mesh shape and the mesh appearance are preserved.

The goal of this work is to develop, implement and test a mesh simplification algorithm able to simplify large models in-core using a vertex clustering algorithm. Several detail-preserving techniques are examined and implemented, and a new filter is proposed which takes into account geometry features and attributes defined at the nodes. We also review recent advances in spatial hash tables to achieve a more compact storage, and we analyze and evaluate their impact on the simplification process.

Acknowledgements

I would like to thank Professor Carlos Andújar for his guidance in this project, his suggestions and his efforts. I also want to thank Riccardo Rossi and Pooyan Dadvand for their support in providing some of the examples, for their suggestions and help in some of the experiments, and for providing access to some of the testing platforms. Thanks also to Antonio Chica and the people participating in the 'moving-seminars' for providing the inspiration.

Thanks also to Stanford University for making their models available to the public in The Stanford 3D Scanning Repository [Standford11]; these models were used to validate the algorithms. Thanks as well to the patient users of GiD, who acted as beta-testers for this new feature, and to the GiD team for the useful discussions and the patience they showed.

I would also like to thank CIMNE for providing support and the resources to develop this work, and also the Instituto Universitario de Investigación Biocomputación y Física de Sistemas Complejos of the Universidad de Zaragoza for letting me run the scalability tests in their Cluster.

Special thanks also to my family and friends for their understanding and support.

1. Introduction

1.1. Motivation

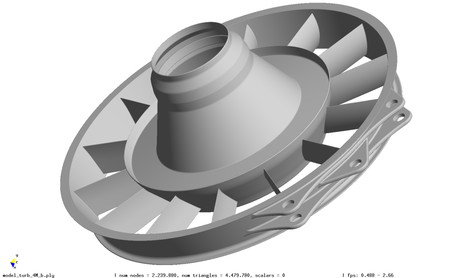

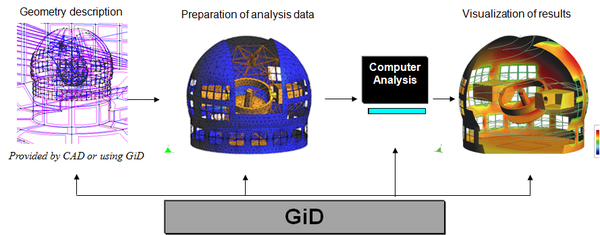

In computational mechanics and computational fluid dynamics, physical or artificial processes are simulated on HPC clusters by discretizing the domain with a finite element mesh and solving partial differential equations using different mathematical models and numerical solvers, both at node and at element level. The elements used in these meshes can be planar elements, such as triangles, or volumetric elements, such as tetrahedra.

Scientists use several visualization tools to analyze and inspect the results of these programs by extracting information from the meshes using cuts and iso-surfaces, performing particle tracing or visualizing vector fields. With the ever-increasing computational capabilities of current HPC clusters, the detail of the simulated 3D models increases accordingly, nowadays reaching billions of elements [Pasenau11, Yilmaz11]. The resolution of 3D scanners is also increasing, and meshes with several million triangles are being generated, not to mention the information extracted from medical scanners, such as computed tomography, which is used in biomedical simulations too [Soudah11].

On the other hand, commodity hardware can only render at best a few million triangles per second. Several simplification techniques have been developed over the years to reduce the original mesh complexity so that the final user can interact with these models [Boubekeur2008]. As the amount of data obtained from the simulations keeps growing, data reduction techniques are required to interact easily with these simulation results.

One of the most easily parallelizable simplification methods is the vertex clustering algorithm. This algorithm uses a uniform spatial grid to group vertices of the input mesh; vertices inside each cluster are replaced by a single (representative) vertex.

Some recent developments in the mesh simplification field are GPU implementations of the vertex clustering algorithm [DeCoro07,Willmot11], which achieve very high simplification rates and attempt to preserve attributes defined in the original mesh.

Several recent spatial hash schemes also take advantage of the GPU architecture to achieve both high insertion and query rates, while providing a compact storage [Alcantara11,Garcia11]. The scheme used by DeCoro [DeCoro07] to store the cells of the decimation grid is called 'probabilistic octree' and it resembles a multi-level hash table but without handling collisions.

In a previous work [Pasenau11-2] we already addressed the use of a hash table [Alcantara07] to store the simplification grid efficiently, so that large meshes can be simplified in-core using a vertex clustering algorithm. The resulting algorithm still had some limitations though. On the one hand, classic vertex clustering algorithms might remove some details of the original mesh which may be very important (e.g. the two sides of a very thin plate), and might create holes in a blade or collapse stretched structures into lines.

On the other hand, the simplification algorithm implemented in [Pasenau11-2] does not handle attributes, like scalar values defined at nodes or colors or texture coordinates defined at vertices. Simply averaging them on a per-cell basis is not appropriate as some discontinuities may turn into smooth changes and turbulences or vortices in a vector field may turn into a low-speed stream.

This work will extend the previous one by incorporating detail-preserving techniques applied not only to the geometry but also to the nodal attributes of the original model. It will also analyze the impact of new hash schemes in the simplification process to support fast simplification of huge 3D models.

1.2. Objectives

The final objective is to develop a tool for simulation engineers allowing them to visualize and interact with large models, either remotely or on a platform with modest graphics. The acceleration of this visualization process will be done by simplifying large triangle meshes coming from the simulation area, and preserving not only geometrical features but also features present in the scalar or vector field defined at nodes. Features in a scalar or vector field can be discontinuities or vortices which may be lost in the simplification process if they are not taken into account.

The resulting algorithm should be fast, robust and use the memory efficiently.

After reviewing the state of the art related to mesh simplification and spatial hash schemes, this work will analyze some of the simplification techniques aimed at preserving details of the original geometry and nodal attributes. It will also examine and compare three major spatial hash schemes presented in recent years: Alcantara's two-level and single-level cuckoo hashes and the coherent parallel hash from Garcia et al. The goal is to develop a fast simplification algorithm for triangle meshes with a compact storage which also preserves the detail and attributes of the original model. This tool may be used not only to accelerate rendering rates but also, as a daemon running on HPC nodes, to reduce the bandwidth required to interact with huge remote models in a distributed scenario.

To validate the algorithm several models will be used, some of which can be found in the Annex.

1.3. Contributions

Vertex clustering algorithms use a uniform grid to group vertices into clusters. All vertices which fall into the same cell will be replaced by a single vertex (called representative) that provides the best approximation of the geometry. The computation of the best representative vertex for each cell requires some information to be stored in the cell, which will be generated from the original mesh.

Since accurate simplification of large models requires an arbitrarily large number of cells, we will use spatial hash schemes to store per-cell information. The key to access the hash table will be generated from the integer coordinates (indices) into the simplification grid. The hash schemes will be extended to support 64-bit keys and the information needed in the cell. The access to this decimation grid and the information stored at each cell will depend on the detail-preserving technique and will make use of normals and scalars at the nodes of the original mesh, with a new smoothing normal filter.

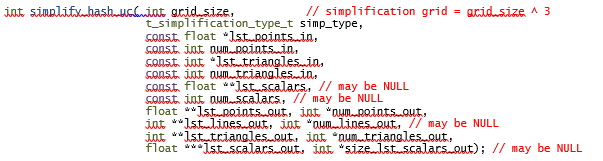

An application will be developed, which will run on MS Windows and on Linux, to test the different hash schemes and detail preserving techniques. The algorithms will also form a library which will be used in GiD, a pre- and postprocessor developed at CIMNE [GiD11].

Several benchmarks will be done comparing the speed, scalability and load factor of the different hash schemes.

1.4. Organization

The rest of this document is organized as follows. In the next chapter several articles will be discussed focusing on our particular needs: speed, shape preservation and compact representation. Also the setup of the experiments is described, as well as the metrics used in the benchmarks.

Chapter 3 extends the simplification algorithm of the previous work by building an octree, using the information of normals in the simplification process and incorporating the attributes in the error metrics.

Chapter 4 deals with storage issues and tests several methods to achieve a smaller memory footprint of the simplification algorithm while maintaining speed.

Several benchmarks related to scalability and load factors will be explained in the fifth chapter and some examples will also be shown.

Finally some conclusions will be presented in Chapter 6 and future work will be outlined.

1.5. Concepts

When engineers perform computer simulations they discretize the domain of the simulation, for instance a building structure or the fluid around an airplane, into a mesh of elements. These elements are basic geometrical entities such as lines, triangles, quadrilaterals, tetrahedra, hexahedra and prisms. Usually the Finite Element Method is used to solve the partial differential equations which model the problem to simulate. This method relies on a set of basic geometrical elements connected at the vertices where these equations are solved. This set of elements is called a finite element mesh, or simply a mesh. The vertices of the mesh are called nodes. Element connectivity is the set of nodes which defines the element. The orientation of an element is the order of the vertices which defines the element; the right-hand rule is used to define this orientation. Boundary edges on a surface mesh are those edges which are incident to only one face.

The partial differential equations are solved at nodes or at the elements, and these solvers write results at nodes or at elements. These results may be just scalar values, such as pressures, densities, damage factors or von Mises stress; vectors, such as velocity, displacements or rotational; or matrices, such as tensors. This work will only deal with attributes representing scalars or vectors defined at the vertices of the mesh.

2. State of the Art

The first part of this chapter describes the simplification algorithm and the hash scheme used by Pasenau in his Final Project [Pasenau11-2], which is the starting point of the current work. Then some techniques proposed by several authors will be discussed in relation to shape preservation and attribute handling during the simplification process. Two recent hashing schemes will also be explained and their advantages outlined. Finally, in the last section the experimental setup and the benchmark procedure are described.

2.1. Efficient vertex clustering

The starting point of this work is a mesh simplification algorithm [Pasenau11-2] that combines vertex clustering simplification [DeCoro07] with hash tables [Alcantara09]. We now review these two works.

Simplification process

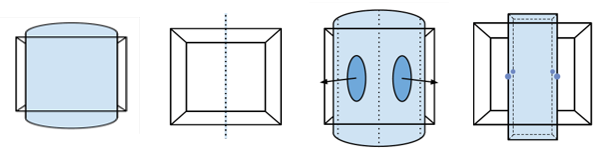

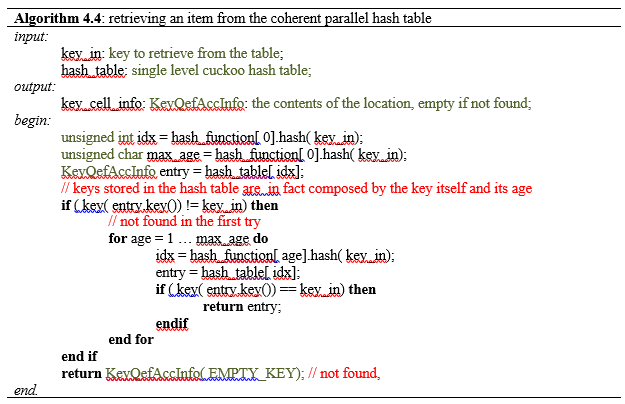

DeCoro's algorithm performs the simplification process in three steps:

1. Creation of the quadric map: for each vertex of each triangle of the original mesh the quadric error function matrix (qef, see below) is calculated, then using the vertex coordinates, the id of the corresponding cell of the decimation grid is calculated, and the qef matrices are accumulated.

2. The optimal representative vertex for each cell of the decimation grid is calculated.

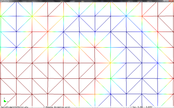

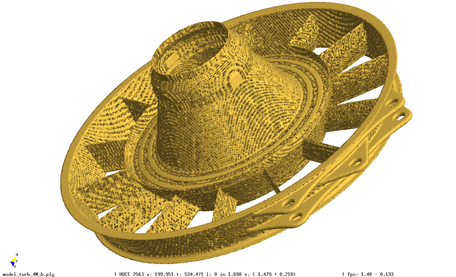

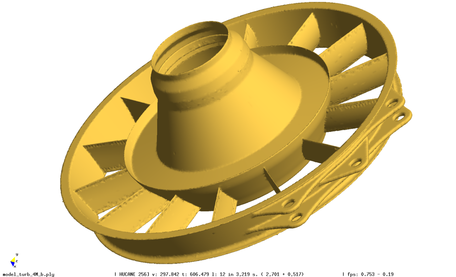

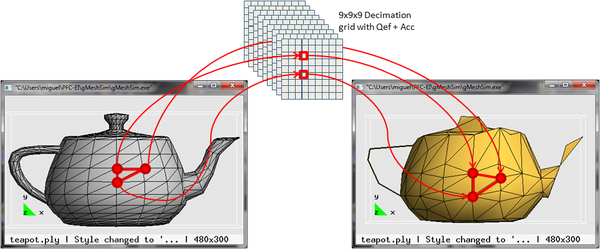

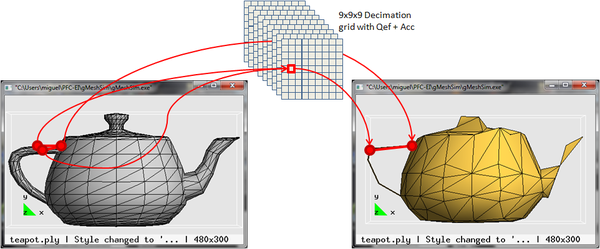

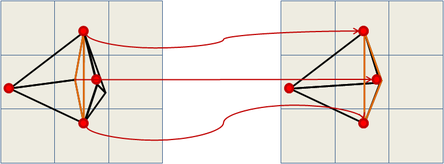

3. Finally, for each vertex of each triangle, the id of the corresponding cell of the decimation grid is calculated. If all three vertices have the same id, the triangle collapses and is discarded; if only two of them have the same id, the triangle collapses into a line; otherwise the vertices of the triangle are replaced by the optimal representative vertices of their respective cells. Figure 2.1 shows an example of this simplification step:

Figure 2.1: Simplification example using a 9x9x9 decimation grid where one triangle of the original mesh may turn into a triangle in the simplified mesh or into a line.
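The three steps above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the function names (`cell_id`, `simplify`) are hypothetical, a plain dictionary stands in for the decimation grid, each vertex is accumulated once instead of once per incident triangle, and the cell average is used as a stand-in for the qef-optimal representative.

```python
from collections import defaultdict

def cell_id(p, origin, cell_size):
    """Integer (i, j, k) indices of the uniform grid cell containing point p."""
    return tuple(int((p[a] - origin[a]) // cell_size) for a in range(3))

def simplify(vertices, triangles, origin, cell_size):
    # Step 1: accumulate per-cell information (here only coordinate sums and a count).
    ids = [cell_id(v, origin, cell_size) for v in vertices]
    acc = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for v, cid in zip(vertices, ids):
        a = acc[cid]
        a[0] += v[0]
        a[1] += v[1]
        a[2] += v[2]
        a[3] += 1
    # Step 2: one representative per non-empty cell (cell average, an assumption
    # of this sketch; the text uses the minimizer of the quadric error function).
    rep_index, new_vertices = {}, []
    for cid, (sx, sy, sz, n) in acc.items():
        rep_index[cid] = len(new_vertices)
        new_vertices.append((sx / n, sy / n, sz / n))
    # Step 3: re-index the triangles and classify them.
    new_triangles, lines = [], []
    for tri in triangles:
        cells = [ids[i] for i in tri]
        distinct = set(cells)
        if len(distinct) == 3:        # survives as a triangle
            new_triangles.append(tuple(rep_index[c] for c in cells))
        elif len(distinct) == 2:      # collapses into a line
            lines.append(tuple(sorted(rep_index[c] for c in distinct)))
        # len(distinct) == 1: the triangle collapses into a point and is discarded
    return new_vertices, new_triangles, lines
```

With a cell size of 1, two vertices that fall into the same cell are merged, so a triangle using both of them degenerates into a line while a triangle spanning three cells is kept.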

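The quadric error function used is the one described in [Garland97]. Given a vertex v, the quadric error function is defined as the sum of the squared distances from this vertex to the set of planes associated with it:

$$\Delta(v) = \sum_{p \in \mathrm{planes}(v)} (p^{T} v)^{2} = v^{T} \Big( \sum_{p \in \mathrm{planes}(v)} p\,p^{T} \Big) v = v^{T} Q\, v$$

where $v = (x, y, z, 1)^{T}$ and each plane $p = (a, b, c, d)^{T}$ satisfies $ax + by + cz + d = 0$ with $a^{2} + b^{2} + c^{2} = 1$.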

Initially, planes(v) is built from the triangles incident to the vertex v. When two vertices are collapsed, (a, b) → c, then planes(c) = planes(a) ∪ planes(b). Garland explains in his article [Garland97] that only a 4x4 matrix has to be stored instead of the set of incident planes. This way, the union of the sets of planes turns into a sum of quadrics. Therefore, in a multi-level octree-like approach, the quadric of a node of the tree is the accumulated sum of the quadrics of its sub-tree.
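The following sketch illustrates how the plane of a triangle becomes a quadric and how quadrics are accumulated by simple addition. The helper names and the storage order of the 10 unique coefficients (row-major upper triangle) are assumptions made for this example, and the triangle is assumed to be non-degenerate.

```python
import math

def plane_of(p0, p1, p2):
    """Plane (a, b, c, d) with unit normal and a*x + b*y + c*z + d = 0 through a triangle.
    Assumes the triangle is not degenerate (non-zero area)."""
    u = [p1[i] - p0[i] for i in range(3)]
    w = [p2[i] - p0[i] for i in range(3)]
    n = [u[1] * w[2] - u[2] * w[1],
         u[2] * w[0] - u[0] * w[2],
         u[0] * w[1] - u[1] * w[0]]
    length = math.sqrt(sum(c * c for c in n))
    a, b, c = (x / length for x in n)
    d = -(a * p0[0] + b * p0[1] + c * p0[2])
    return (a, b, c, d)

def quadric(plane):
    """The 10 unique coefficients of the symmetric 4x4 matrix p * p^T."""
    return [plane[i] * plane[j] for i in range(4) for j in range(i, 4)]

def add(q1, q2):
    """The union of two plane sets becomes the sum of their quadrics."""
    return [x + y for x, y in zip(q1, q2)]

def error(q, v):
    """Evaluates v^T Q v for the homogeneous point (x, y, z, 1)."""
    h = (v[0], v[1], v[2], 1.0)
    total, k = 0.0, 0
    for i in range(4):
        for j in range(i, 4):
            # Off-diagonal coefficients appear twice in the symmetric matrix.
            total += (1.0 if i == j else 2.0) * q[k] * h[i] * h[j]
            k += 1
    return total
```

For example, accumulating the quadrics of the planes z = 0 and x = 0 and evaluating the result at (3, 9, 2) gives 3² + 2² = 13, the sum of the squared distances to both planes.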

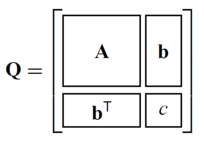

To find the point that best approximates a group of vertices whose accumulated quadric error function is given by the symmetric 4x4 matrix $Q = (q_{ij})$, the point minimizing this error has to be found. This can be done, for example, by inverting the QEF matrix:

$$\bar{v} = \begin{pmatrix} q_{11} & q_{12} & q_{13} & q_{14} \\ q_{12} & q_{22} & q_{23} & q_{24} \\ q_{13} & q_{23} & q_{33} & q_{34} \\ 0 & 0 & 0 & 1 \end{pmatrix}^{-1} \begin{pmatrix} 0 \\ 0 \\ 0 \\ 1 \end{pmatrix}$$

As explained in the final project [Pasenau11-2], the matrix can be singular and therefore not invertible, or the resulting point may lie outside the decimation cell. To avoid this situation the algorithm checks for both possibilities and, if one of the problems is detected, the average of the accumulated vertex coordinates is used instead.
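A possible way to code this fallback is sketched below. The coefficient order (row-major upper triangle of the 4x4 matrix), the function names and the absolute determinant threshold `eps` are assumptions of this example; solving the 3x3 system A·x = −b with Cramer's rule is equivalent to inverting the 4x4 matrix whose last row is (0, 0, 0, 1).

```python
def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def representative(q, acc, cell_min, cell_max, eps=1e-9):
    """q: 10 qef coefficients (q00 q01 q02 q03 q11 q12 q13 q22 q23 q33).
    acc: (sum_x, sum_y, sum_z, count) accumulated for the cell."""
    average = tuple(acc[i] / acc[3] for i in range(3))
    A = [[q[0], q[1], q[2]],
         [q[1], q[4], q[5]],
         [q[2], q[5], q[7]]]
    rhs = [-q[3], -q[6], -q[8]]
    d = det3(A)
    if abs(d) < eps:                  # singular matrix: fall back to the average
        return average
    point = []
    for col in range(3):              # Cramer's rule, one coordinate per column
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = rhs[r]
        point.append(det3(m) / d)
    inside = all(cell_min[i] <= point[i] <= cell_max[i] for i in range(3))
    return tuple(point) if inside else average   # outside the cell: use the average
```

A single plane yields a singular matrix, and three mutually orthogonal planes yield their intersection point, which is only accepted when it lies inside the cell bounds.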

Repeated triangles are also eliminated from the final simplified mesh. As the algorithm sometimes changes the orientation of some triangles, like the one shown in Figure 2.2, it also checks for this and corrects their orientation when needed.

Figure 2.2: The orange triangle flips its orientation in the simplification process.

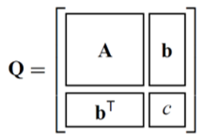

Data structure

Since space efficiency is critical in vertex clustering simplification, the non-empty cells of the decimation grid (along with the qef matrices and the accumulated coordinates) were stored in a hash table, using Alcantara's two-level cuckoo hash approach.

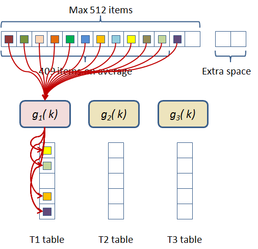

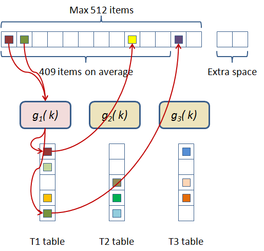

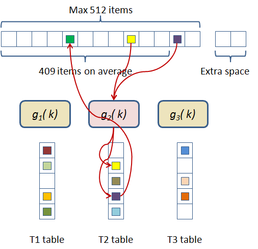

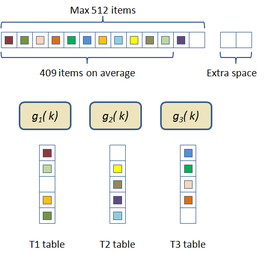

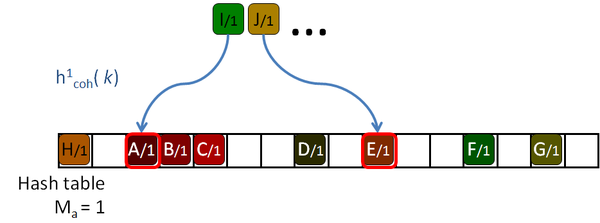

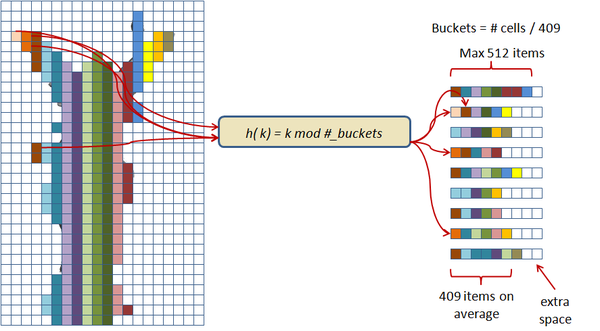

In this scheme the hash table construction is done in two steps: first, entries are grouped in buckets of 409 elements on average and 512 elements at most, as shown in Figure 2.3; then, for each bucket, a cuckoo hash table is built by subdividing the bucket into three sub-tables accessed by three different hash functions and storing a single value per entry. The number of buckets is just the number of items divided by 409. The hash function used for this process is just the modulus function (the key modulo the number of buckets). If the building process of the whole table fails, then an alternative hash function is used to distribute the items among the buckets. This alternative is of the form:

being and prime numbers.

Figure 2.3: First step of the two level cuckoo hash: distributing the items into buckets.
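The first level can be illustrated with the short sketch below. The function name is hypothetical, rounding the number of buckets up is an assumption, and returning `None` merely signals the situation in which the alternative hash function would be tried.

```python
import math

def distribute(keys, average=409, capacity=512):
    """First level: assigns keys to buckets with the modulus function.
    Returns the list of buckets, or None if some bucket exceeds its capacity."""
    n_buckets = max(1, math.ceil(len(keys) / average))   # rounding up is an assumption
    buckets = [[] for _ in range(n_buckets)]
    for key in keys:
        bucket = buckets[key % n_buckets]
        bucket.append(key)
        if len(bucket) > capacity:
            return None     # the caller would retry with the alternative hash function
    return buckets
```

Keys that are well spread modulo the number of buckets fill every bucket evenly, whereas keys that are all multiples of the number of buckets end up in a single bucket and overflow it.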

For each bucket the cuckoo building process is performed in parallel and independently of the other buckets. Six coefficients are generated by XORing a random seed value with six constants to obtain the three cuckoo hash functions of the form:

This seed is stored in the bucket, and the hash functions are generated each time an item is placed or retrieved.

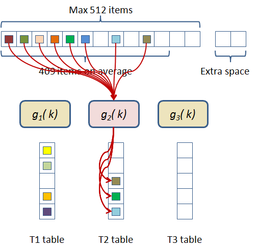

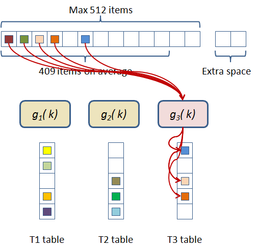

During the building process, an incoming item is inserted in the first sub-table using the first cuckoo hash function if the destination is free. If not, it looks in the second sub-table using the second hash function. If its position in the second sub-table is not free, it goes to the third sub-table using the third hash function. If the corresponding entry in this last sub-table is not free either, it goes back to the first sub-table, "kicks out" the already stored element and occupies its place. The element which has been "evicted from its nest" tries to place itself in the second sub-table. If the place is occupied, it evicts the stored element and occupies the place, and so on until a maximum number of iterations has been reached. Figure 2.4 displays this construction process graphically. If the construction process fails, a new seed is generated and the process is restarted.

To have enough space for the hash building process to succeed, the buckets are divided in three sub-tables of 191 entries each, adding a total of 573 positions for each bucket.
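The construction of one bucket could look like the sketch below. It is only an illustration: the hash function form `((a + b * key) % PRIME) % SUB`, the value of `PRIME`, the six XOR constants and the iteration limits are assumptions, since the text only states that three functions are derived from one seed.

```python
import random

SUB = 191            # entries per sub-table (3 * 191 = 573 slots per bucket)
PRIME = 1900813      # prime modulus, assumed for this illustration
CONSTANTS = (0x9E3779B1, 0x85EBCA6B, 0xC2B2AE35,
             0x27D4EB2F, 0x165667B1, 0xD3A2646C)   # arbitrary constants (assumption)

def hash_functions(seed):
    """Derives the three sub-table hash functions from a single stored seed."""
    c = [seed ^ k for k in CONSTANTS]
    def make(a, b):
        return lambda key: ((a + b * key) % PRIME) % SUB
    return [make(c[2 * i], c[2 * i + 1]) for i in range(3)]

def place(key, h, tables, max_iter):
    # Try a free slot in sub-table 0, then 1, then 2.
    for t in range(3):
        slot = h[t](key)
        if tables[t][slot] is None:
            tables[t][slot] = key
            return True
    # All three are occupied: evict from sub-table 0 and move evicted items along.
    t = 0
    for _ in range(max_iter):
        slot = h[t](key)
        key, tables[t][slot] = tables[t][slot], key
        if key is None:
            return True
        t = (t + 1) % 3
    return False

def build_bucket(keys, rng, max_iter=100, max_restarts=100):
    """Returns (seed, tables); a new seed is drawn whenever the construction fails."""
    for _ in range(max_restarts):
        seed = rng.getrandbits(32)
        h = hash_functions(seed)
        tables = [[None] * SUB for _ in range(3)]
        if all(place(k, h, tables, max_iter) for k in keys):
            return seed, tables
    raise RuntimeError("bucket could not be built")

def lookup(key, seed, tables):
    """At most three probes: one per sub-table, using functions rebuilt from the seed."""
    h = hash_functions(seed)
    return any(tables[t][h[t](key)] == key for t in range(3))
```

Only the seed has to be stored with the bucket, because the three functions are regenerated from it on every insertion and query.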

To retrieve an item placed in the table, the first hash function is used to know which bucket needs to be accessed. Then, using the stored seed the three cuckoo hash functions are created and used to access the three sub-tables. If the item is not in the first sub-table, then the second sub-table is accessed and eventually the third.

This hash scheme uses 1.4·N space for N items, and using the four hash functions guarantees that an item of the table can be retrieved in constant time.
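The space factor can be checked from the bucket parameters given in this section, namely three sub-tables of 191 entries for an average of 409 items per bucket. The estimate ignores the seed stored in each bucket, which is an assumption of this short calculation:

```latex
\frac{3 \times 191}{409} = \frac{573}{409} \approx 1.40
```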

Given a point, the key used in the hash table is a 64-bit unsigned integer generated from the (i, j, k) indices of the corresponding spatial cell in the simplification grid. The data stored together with the key consist of 14 float numbers: the 10 floats of the symmetrical quadric error function matrix and the 4 floats used to accumulate the point coordinates, x, y, z and count (count = number of points accumulated).
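One possible layout for the key and the per-cell record is sketched below. The use of 21 bits per index is an assumption of this example (the text only states that the key is a 64-bit unsigned integer), and the names `make_key`, `split_key` and `RECORD` are hypothetical.

```python
import struct

BITS = 21                      # assumed bits per grid index: 3 * 21 = 63 <= 64
MASK = (1 << BITS) - 1

def make_key(i, j, k):
    """Packs the (i, j, k) cell indices into one unsigned 64-bit key."""
    if not all(0 <= c <= MASK for c in (i, j, k)):
        raise ValueError("cell index out of range for the assumed bit layout")
    return (i << (2 * BITS)) | (j << BITS) | k

def split_key(key):
    """Recovers the (i, j, k) cell indices from a key."""
    return (key >> (2 * BITS)) & MASK, (key >> BITS) & MASK, key & MASK

# One table entry: 64-bit key, 10 qef floats, then x, y, z and count (14 floats).
RECORD = struct.Struct("<Q14f")
```

With this layout each entry takes 8 + 14·4 = 64 bytes, and grids of up to 2²¹ cells per axis can be addressed.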

Simplification process reviewed

Using the two-level cuckoo hash as data structure adds a fourth step in the simplification process:

1. Creation of the hash table: the unique ids used in the simplification grid are collected and the hash functions are created using empty values;

2. Creation of the quadric map (calculate the QEF information from the original triangles and accumulate it in the cells corresponding to the vertices of the triangles);

3. Find the optimal representative for each cell of the simplification grid;

4. Generate the simplified mesh by parsing each triangle of the original mesh, locating the cells of its vertices and generating the simplified triangle if it is not collapsed or repeated.

2.2. Detail preserving simplification

Scott Schaefer and Joe Warren, in their article "Adaptive Vertex Clustering Using Octrees" [Schaefer03], propose an adaptive vertex clustering algorithm using a dynamic octree with a quadric error function (qef) on each node to control the simplification error and, when needed, to collapse certain branches. The quadric error function at the leaves is computed as explained in the previous section. The quadric error function of an internal node is calculated by accumulating the qefs of its sub-tree. Their algorithm is able to simplify meshes without storing them in memory (an out-of-core algorithm). Given a memory budget, the octree is built while reading the original mesh and, when the limit is hit, the sub-trees with the smallest error are collapsed to free memory for new nodes.

The advantage of this algorithm is that it simplifies the original mesh but concentrates the vertices in areas with more detail, generating meshes with better quality than uniform vertex clustering. The main drawback is that the vertices of the mesh should be spatially ordered to guarantee that collapsed sub-trees are not visited again while parsing the original mesh. This sort has a relatively high time cost, O(n·log(n)), which represents almost three times the cost of building the octree in some examples of the article.

Christopher DeCoro and Natalya Tatarchuk propose a 'probabilistic octree' in their article "Real-time Mesh Simplification Using the GPU" [DeCoro07]. This octree is in fact a multi-grid data structure in which each level doubles the number of cells with respect to the level above, i.e. it follows an octree-like subdivision. Each cell stores the quadric error function for its level and all levels below, by adding the qefs of the cells of its 'sub-tree' as in Schaefer and Warren's approach. For a certain level, instead of storing all cells, there is a memory limit and a hash function is used to store them in this memory pool. Collisions are not handled and so, as not all cells are stored, a cell has some 'probability' of being stored.

In the building process, the qef calculated for an incoming point is not stored in all levels of the multi-grid, but is randomly distributed among the levels. This random distribution is weighted according to the number of cells on each level. In the simplification step, to obtain the optimal representative for an incoming vertex, the octree level is selected according to the user's error tolerance. If the cell is not present, since not all cells of a given level are stored, the cell of the level above is selected, as it 'surely' exists.
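The idea of a multi-grid stored in fixed-size pools without collision handling, with a fall-back to the level above, can be illustrated as follows. This sketch deliberately simplifies the scheme: every point is inserted in every level (instead of the weighted random distribution described above), a counter stands in for the qef, points are assumed to lie in [0, 1)³, and the class name and the hash function are hypothetical choices.

```python
class ProbabilisticOctree:
    def __init__(self, levels, pool_size):
        self.levels = levels
        self.pool_size = pool_size
        # One fixed-size pool per level; each slot holds [cell, count] or None.
        self.pools = [[None] * pool_size for _ in range(levels)]

    def _slot(self, cell):
        i, j, k = cell
        # Arbitrary spatial hash chosen for this sketch (assumption).
        return (i * 73856093 ^ j * 19349663 ^ k * 83492791) % self.pool_size

    @staticmethod
    def cell_of(point, level):
        n = 1 << level          # cells per axis double at every level
        return tuple(min(n - 1, int(c * n)) for c in point)

    def insert(self, point):
        for level in range(self.levels):
            cell = self.cell_of(point, level)
            slot = self._slot(cell)
            stored = self.pools[level][slot]
            if stored is None:
                self.pools[level][slot] = [cell, 1]
            elif stored[0] == cell:
                stored[1] += 1
            # Otherwise the slot belongs to another cell: the data is simply dropped.

    def find(self, point, level):
        """Returns (level, cell, count) of the finest stored cell at or above `level`."""
        while level >= 0:
            cell = self.cell_of(point, level)
            stored = self.pools[level][self._slot(cell)]
            if stored is not None and stored[0] == cell:
                return level, cell, stored[1]
            level -= 1          # cell not stored: fall back to the level above
        raise LookupError("no point has been inserted yet")
```

Level 0 has a single cell, so it is always found once any point has been inserted; this plays the role of the cell that 'surely' exists.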

In "Rapid Simplification of Multi-Attribute Meshes" Andrew Willmot [Willmot11] proposes a different method to improve the quality of the simplified mesh: shape preservation. Some models have very fine parts such as twigs or antennas, which will be collapsed. In fact, any detail smaller than the cell size in at least one dimension will be removed.

In the vertex clustering algorithm, the label id assigned to each original vertex is extended to contain information about its normal direction, classified according to the sign for each major axis, so 8 tags are possible.

With this information, surface vertices with opposite normals will not be collapsed together, as shown in Figure 2.5. This preserves curved surfaces within a cell. Besides reading the vertex position, its normal must be read too. More faces are generated for curved surfaces, but ‘flat’ surfaces are almost unaffected.

2.3. Multi-attributes
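A compact way to express this tagging is shown below. The function names are hypothetical, and treating a zero component as positive is an assumption of this sketch.

```python
def normal_tag(normal):
    """3-bit tag built from the sign of each normal component (8 possible tags).
    A zero component is treated as positive (assumption)."""
    return int(normal[0] < 0) | (int(normal[1] < 0) << 1) | (int(normal[2] < 0) << 2)

def cluster_label(cell, normal):
    """Extends the (i, j, k) cell id with the normal tag, so that vertices in the
    same cell but with opposite normals get different labels."""
    return tuple(cell) + (normal_tag(normal),)
```

The two sides of a thin plate lying inside one cell have normals such as (0, 0, 1) and (0, 0, -1), so they receive different labels and are not collapsed together.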

In order to handle properties such as colours, textures and surface normals, Michael Garland and Paul Heckbert, in their article "Simplifying Surfaces with Color and Texture using Quadric Error Metrics" [Garland98], extend the quadric error metric to include these and other vertex attributes.

This is accomplished by considering that a point (x, y, z) and its attributes (a1, a2, …, an-3) define a point in $\mathbb{R}^n$. Assuming also that all properties are linearly interpolated over triangles, a quadric can be constructed that measures the squared distance of any point in $\mathbb{R}^n$ to the plane of a triangle.

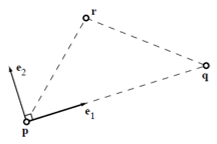

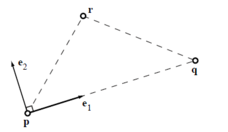

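Using the three n-dimensional points of the triangle, p, q and r, two orthonormal vectors e1 and e2 can be calculated, which lie in the plane of the triangle and define a local frame with origin at p:

$$e_1 = \frac{q - p}{\lVert q - p \rVert}, \qquad e_2 = \frac{r - p - (e_1 \cdot (r - p))\, e_1}{\lVert r - p - (e_1 \cdot (r - p))\, e_1 \rVert}$$

Using these orthonormal vectors, the different parts of the quadric $Q = (A, b, c)$, whose error is $Q(v) = v^{T} A v + 2 b^{T} v + c$, can be calculated:

$$A = I - e_1 e_1^{T} - e_2 e_2^{T}, \qquad b = (p \cdot e_1)\, e_1 + (p \cdot e_2)\, e_2 - p, \qquad c = p \cdot p - (p \cdot e_1)^{2} - (p \cdot e_2)^{2}$$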
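The construction of this generalized quadric, following the formulation of [Garland98], can be sketched in plain Python for points of any dimension. The helper names are hypothetical, p, q and r denote the three points of the triangle with p taken as origin of the local frame, and the triangle is assumed to be non-degenerate.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def scale(u, s):
    return [a * s for a in u]

def unit(u):
    return scale(u, 1.0 / math.sqrt(dot(u, u)))

def generalized_quadric(p, q, r):
    """Quadric (A, b, c) measuring the squared distance to the plane through the
    n-dimensional points p, q, r (position plus attributes)."""
    e1 = unit(sub(q, p))
    rp = sub(r, p)
    e2 = unit(sub(rp, scale(e1, dot(e1, rp))))       # Gram-Schmidt step
    n = len(p)
    A = [[(1.0 if i == j else 0.0) - e1[i] * e1[j] - e2[i] * e2[j]
          for j in range(n)] for i in range(n)]      # A = I - e1 e1^T - e2 e2^T
    pe1, pe2 = dot(p, e1), dot(p, e2)
    b = [pe1 * e1[i] + pe2 * e2[i] - p[i] for i in range(n)]
    c = dot(p, p) - pe1 ** 2 - pe2 ** 2
    return A, b, c

def evaluate(A, b, c, v):
    """Error v^T A v + 2 b^T v + c of the point v."""
    Av = [dot(row, v) for row in A]
    return dot(v, Av) + 2.0 * dot(b, v) + c
```

The error is zero at the three points of the triangle, and a point displaced by 2 units along a direction orthogonal to the plane gets an error of 4.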

To simplify a mesh using only its geometry, a 4x4 matrix is used to represent the quadric error function, which is then inverted to calculate the optimal representative of a cell from its group of original vertices. Considering the different possible attributes results in the following matrix dimensions and numbers of unique coefficients:

| Simplification criteria | vertex | Q | # unique coefficients | accumulation vector size |
|---|---|---|---|---|
| geometry only | (x, y, z) | 4 x 4 | 10 | 4 |
| geometry + scalar | (x, y, z, s) | 5 x 5 | 15 | 5 |
| geometry + colour | (x, y, z, r, g, b) | 7 x 7 | 28 | 8 |
| geometry + vector | (x, y, z, vx, vy, vz) | 7 x 7 | 28 | 8 |
| geometry + normals | (x, y, z, nx, ny, nz) | 7 x 7 | 28 | 8 |

Table 2.1: Each cell of the simplification grid stores the QEF information and an accumulation vector (the sum of the n coordinates of the original points grouped into the cell, together with their number); all of this is used to calculate the optimal representative.
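The numbers of unique coefficients in Table 2.1 follow from the symmetry of Q: a symmetric m × m matrix is fully described by its diagonal and upper triangle. As a quick check for the three matrix sizes listed:

```latex
\#\text{coefficients}(m) = \frac{m(m+1)}{2}:
\qquad \frac{4 \cdot 5}{2} = 10,
\qquad \frac{5 \cdot 6}{2} = 15,
\qquad \frac{7 \cdot 8}{2} = 28
```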

In "Rapid Simplification of Multi-Attribute Meshes" Andrew Willmot [Willmot11] proposes a different technique to preserve discontinuities in the attributes, in his case texture coordinates, where the same vertex may have different sets of texture coordinates depending on the incident triangle. Instead of repeating the simplified vertex, and its texture coordinates, for each incident simplified triangle, Willmot describes an algorithm which finds the boundary edges of the different discontinuities among the original triangles incident to the cell, classifies the triangle vertices and determines which ones share the same attribute.

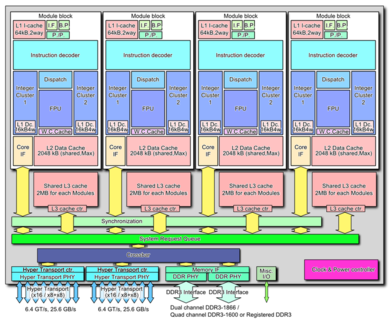

2.4. Parallel spatial hash tables

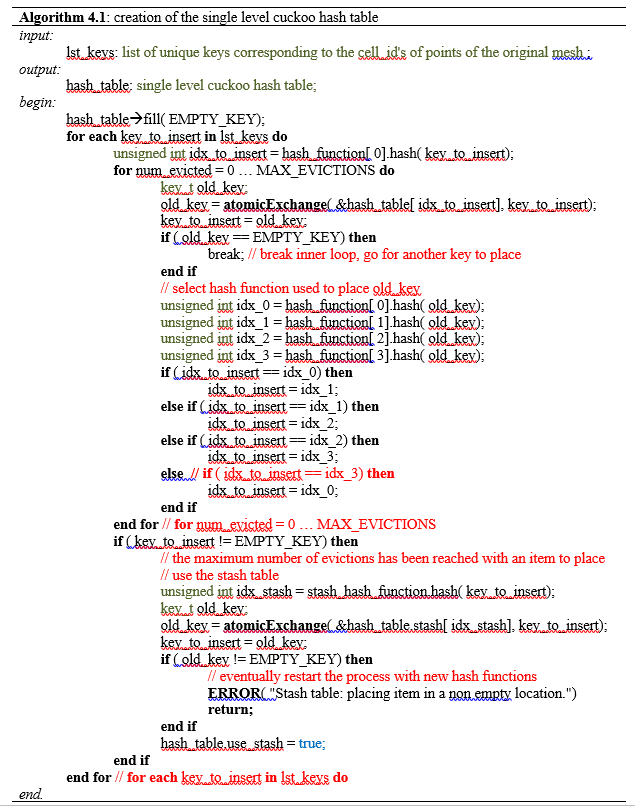

In his dissertation "Efficient hash tables on the GPU" [Alcantara11], Alcantara experimented with several hash schemes on two different GPUs, the nVidia GTX 280 and the nVidia GTX 470, including the two-level cuckoo hash which was later used in Pasenau's final project [Pasenau11-2]. Among other new features, the newer graphics card introduces a cache hierarchy in the GPU architecture and several new atomic memory functions, and the comparison shows the effect of the cache on the different hashing algorithms. While the two-level cuckoo hash outperforms other hashing methods on the older architecture, the introduction of the cache hierarchy in the newer architecture turns the performance chart upside down, making previously efficient hash schemes less efficient. The two-level cuckoo hash requires some expensive restarts, its memory overhead is difficult to reduce without reducing retrieval speed and, at sub-table level, several tries need to be made to place and retrieve an item.

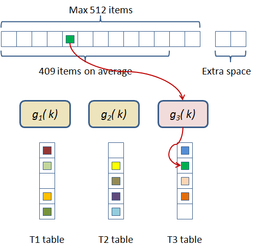

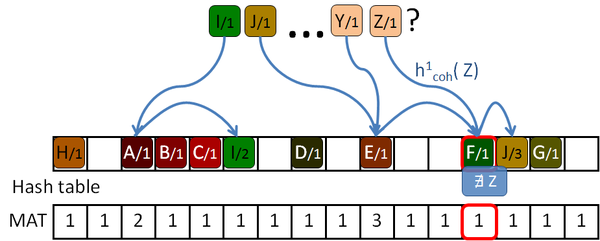

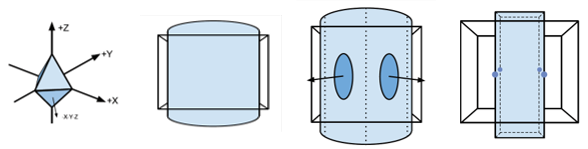

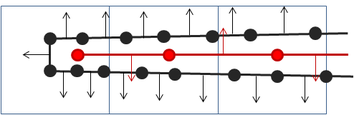

To improve performance, according to Alcantara, the algorithm should focus on minimizing memory accesses rather than on improving spatial locality. His last proposal consists of a global, single-level cuckoo hashing in which the cuckoo hash functions work over the entire hash table instead of using several separate sub-tables. Each thread, instead of trying repeatedly to insert the same element, atomically swaps the current to-be-placed item with the item in the destination slot. The thread succeeds if the destination slot is empty. An example of this construction process is shown in Figure 2.6.

1. Given an item, the cuckoo hash functions may place it in any position of the whole hash table.
2. Using the first hash function, the location where the element should be placed is not empty.
3. The existing element is evicted and the hash function used to place this element is identified.
4. Then the next hash function, in round-robin order, is used to calculate the new position for the previously evicted item.
5. As the new position is again already occupied, the element in the table is evicted and the hash function used for this evicted element is identified.
6. Using the next hash function for the previously evicted element, a free slot is finally found and the element is stored.

Figure 2.6: Steps followed to place an incoming item using Alcantara's single level cuckoo hash scheme.

The cuckoo hash functions are of the form:

where ai, bi are randomly generated constants and p is a prime number. These hash functions are used as in the other cuckoo scheme, i.e. in round-robin order. When an item is evicted, the hash function which was used to place it is found by recomputing all functions and comparing the results with the index of the slot it was evicted from. Then the next hash function is used to place it elsewhere. If the maximum number of iterations is reached, then a restart is required with new cuckoo hash functions.
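The insertion loop described above can be sketched sequentially as follows. This is an illustration only: the hash functions are assumed to have the common form ((a_i·k + b_i) mod p) mod table size, which is consistent with the symbols named in the text, the value of `P` and the class name are assumptions, duplicate keys are not handled, and the Python tuple swap stands in for the atomic exchange a GPU thread would perform.

```python
import random

class CuckooTable:
    P = 4294967291            # prime modulus assumed for this sketch (2**32 - 5)

    def __init__(self, size, n_funcs=4, max_iter=200, seed=0):
        self.size = size
        self.n_funcs = n_funcs
        self.max_iter = max_iter
        self.slots = [None] * size
        rng = random.Random(seed)
        # One (a_i, b_i) pair of random constants per hash function.
        self.coef = [(rng.randrange(1, self.P), rng.randrange(self.P))
                     for _ in range(n_funcs)]

    def _h(self, i, key):
        a, b = self.coef[i]
        return ((a * key + b) % self.P) % self.size

    def insert(self, key):
        """Returns True if the key was placed, False if a restart would be required
        (in that case the item held at that moment is not stored)."""
        i = 0
        for _ in range(self.max_iter):
            slot = self._h(i, key)
            key, self.slots[slot] = self.slots[slot], key    # swap with the occupant
            if key is None:
                return True
            # Recompute all functions to find which one placed the evicted key here,
            # then continue with the next one in round-robin order.
            used = next(j for j in range(self.n_funcs) if self._h(j, key) == slot)
            i = (used + 1) % self.n_funcs
        return False

    def contains(self, key):
        """At most n_funcs probes, one per hash function."""
        return any(self.slots[self._h(i, key)] == key for i in range(self.n_funcs))
```

A query never needs more probes than the number of hash functions, which is four in this sketch.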

In the experiments conducted, the number of hard-to-place items was very small, 3 out of 10000 trials for a table of 10M items. To avoid the restart problem, Alcantara proposed the use of a small stash table. If this stash table is big enough, the rebuild probability is reduced to almost nil. In his dissertation Alcantara cites Fredman et al. [Fredman84], who showed that a hash table of size k² has a high probability of storing k items in a collision-free manner using a random hash function of the form

where p is a prime number bigger than the biggest value of k, and x is a random number. Because the number of items failing to insert in the main table was almost always less than 5 in Alcantara's experiments, he uses a very small stash table with 101 entries, enough to place 10 items without collisions. When a key is queried, both the main single-level cuckoo hash table and the stash table have to be checked.

Alcantara also states that using more hash functions helps in packing more data together, thus raising the load factor of the hash table up to 98 % with five hash functions [Dietzfelbinger10]. As it is increasingly difficult to build tables with these higher load factors, as the experiments showed, Alcantara suggests a load factor of 80 % to balance construction and retrieval rates for four hash functions.
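The sizing of the stash follows from the k² rule: 10 items need 10² = 100 slots, and 101 entries satisfy this. The sketch below builds such a stash under the assumption that the hash function has the form ((x·key) mod p) mod size, a common choice consistent with the symbols named above; the function name, the value of `p` and the retry loop are hypothetical.

```python
import random

def build_stash(keys, size=101, p=4294967291, seed=0, max_tries=1000):
    """Draws random multipliers x until all keys land in distinct slots.
    Assumes every key is smaller than the prime p. Returns (x, table)."""
    rng = random.Random(seed)
    for _ in range(max_tries):
        x = rng.randrange(1, p)
        table = [None] * size
        for key in keys:
            slot = ((x * key) % p) % size
            if table[slot] is not None:
                break                  # collision: try another x
            table[slot] = key
        else:
            return x, table            # every key placed without collisions
    raise RuntimeError("no collision-free function found")
```

A query then costs a single extra probe: the slot given by the stored x is compared against the key.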

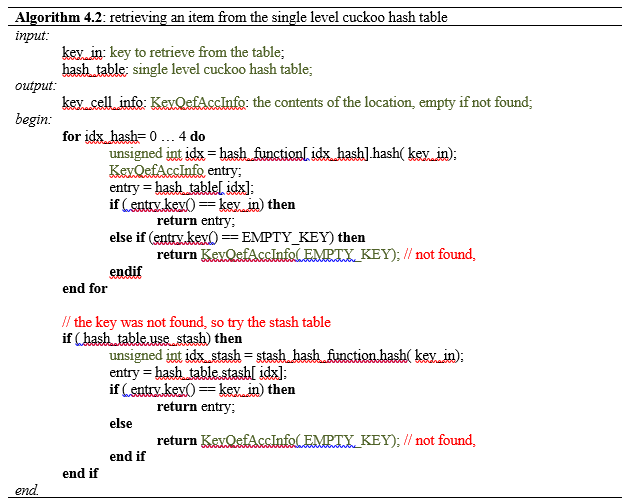

The single level cuckoo hash outperforms the previous two-level cuckoo hash in both architectures, the older GTX 280 and the newer GTX 470, although its memory access pattern is highly uncoalesced. It is more flexible, allowing different load factors and retrieval rates, and it also reduces the number of probes required to find an item, four in the worst case when four hash functions are used.

Alcantara also showed that other hashing methods, like quadratic probing, perform better than the single-level cuckoo hashing on lower load factors because they take advantage of the cache.

This is one of the conclusions of his dissertation: although single-level cuckoo hashing takes advantage of the faster atomic operations of modern GPUs, it has poor caching behaviour because the hash functions distribute the elements across the whole table. He proposes several ways to overcome this and also points to the work of Dietzfelbinger et al. [Dietzfelbinger11].

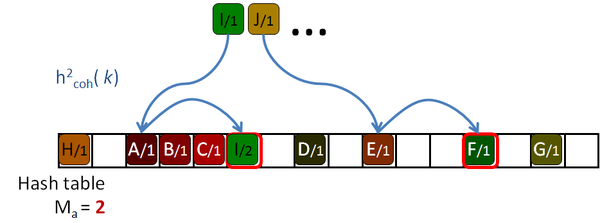

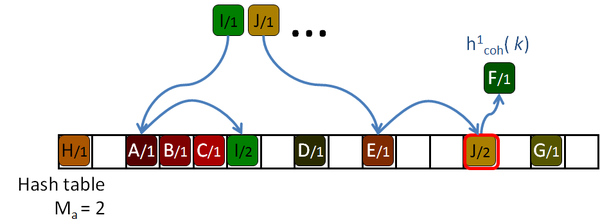

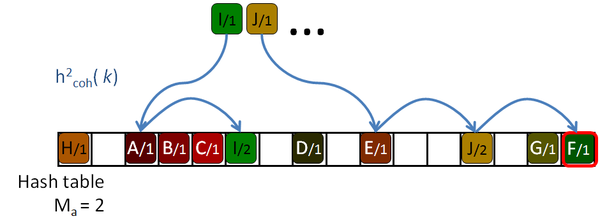

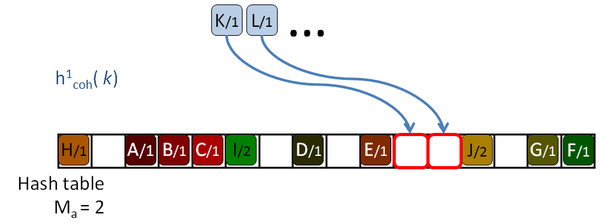

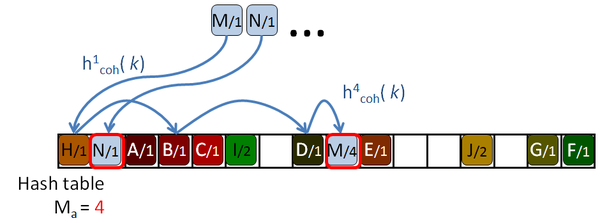

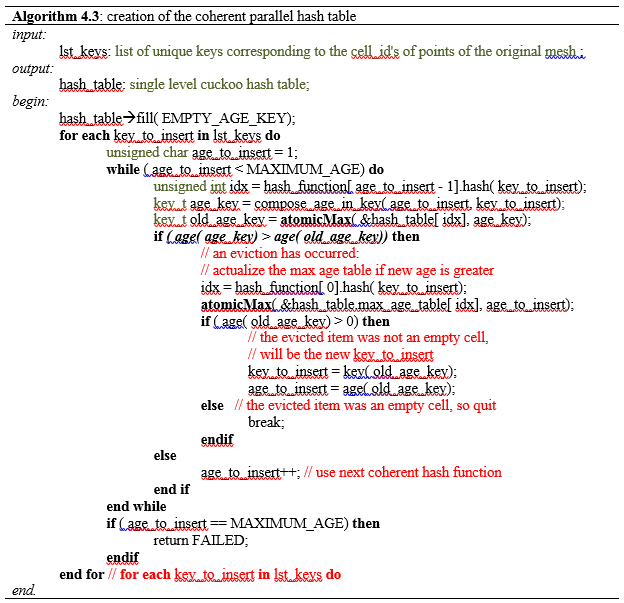

This lack of coherence, which lends to poor caching, is addressed by Garcia in his article "Coherent Parallel Hashing" [Garcia11]. GPU architectures are tailored to exploit spatial coherence, they are optimized to access neighbour memory locations and groups of threads access memory simultaneously. Following this approach, Garcia proposed a Coherent parallel hashing with an early key rejection.

His algorithm is based on a Robin Hood hashing [Celis86], which is an open addressing hashing [Peterson57] with an age reduction technique. On the open addressing hashing, given a table of size |H|, each input key is associated with several hash functions, h1( k) … h|H|( k) which can map the key to every location of the hash table. To insert a key in the hash table, first the first function is used. If the destination slot is occupied then the second hash function is tested, then the third and so on until a free location is found. Peterson defined the age of a key as the number of tries performed until the key is successfully placed in the table. The maximum age of the hash table is the maximum of the ages of all the keys inserted in the table.

So, to insert or query a key in the table, the algorithm needs as many probes as the age of that key. If an undefined key is queried, as many locations as the maximum age have to be checked before the key can be rejected. If the number of undefined queries is larger than the number of defined keys, as is to be expected for sparse data, this hurts the performance of the algorithm. The problem is even worse for tables with high load factors, in which the keys are harder to insert and therefore get older and older.

The Robin Hood algorithm from Celis solves this problem by letting older keys that are being inserted evict younger keys that are already placed. The age of each key is saved together with the key, and when a key is being inserted, its age is checked against the age of the key already stored in the destination location. If the incoming key is older than the stored one, that is, if the incoming key has visited more occupied locations than the stored key, then they are swapped. The algorithm continues with the evicted key, trying to place it elsewhere. This drastically reduces the age of the keys, and thus the maximum age of the table. The following pictures show this process with an example:

Garcia et al. state that, according to [Celis86], the expected maximum age for a full table is $O(\log n)$ and that, according to Devroye et al. [Devroye04], for non-full tables the expected maximum age is reduced to $O(\log_2 \log n)$.
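The eviction rule described above can be illustrated with a small sequential sketch. It is not the parallel GPU implementation discussed in the text: the probe sequence is assumed to be linear probing from a hypothetical first hash function `home`, keys are assumed to be distinct, and the names `robin_hood_insert` and `lookup` are introduced here only for illustration.

```python
import random

def home(key, size):
    # Hypothetical first hash function h1(k): a simple multiplicative hash.
    return (key * 2654435761) % size

def robin_hood_insert(table, key, value):
    """Insert a distinct key; slots hold None or (key, value, age)."""
    size = len(table)
    if all(slot is not None for slot in table):
        raise RuntimeError("table is full")
    age = 1  # number of tries performed so far for the carried key
    while True:
        pos = (home(key, size) + age - 1) % size  # assumed linear probing
        slot = table[pos]
        if slot is None:
            table[pos] = (key, value, age)
            return
        if slot[2] < age:
            # The carried key is older: it takes the slot, and the
            # younger resident is evicted and carried onwards.
            table[pos] = (key, value, age)
            key, value, age = slot
        age += 1

def lookup(table, key, max_age):
    """Probe at most max_age locations, as an undefined key would require."""
    size = len(table)
    for age in range(1, max_age + 1):
        slot = table[(home(key, size) + age - 1) % size]
        if slot is not None and slot[0] == key:
            return slot[1]
    return None

table = [None] * 256
keys = random.Random(1).sample(range(1_000_000), 200)
for k in keys:
    robin_hood_insert(table, k, k * 10)
max_age = max(slot[2] for slot in table if slot is not None)
```

Every stored entry keeps its age next to the key, so the maximum age of the table can be obtained by scanning the slots, as done in the last line.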

Although Robin Hood hashing reduces the maximum age of the table, the average number of accesses for a given key is still very high compared to cuckoo hashing, especially for undefined keys.

Garcia proposes an addition to the algorithm which helps to reduce the average number of accesses, although some of the undefined keys still need as many accesses as the maximum age before they can be discarded. The early key rejection mechanism stores, for each location $p$ of the hash table $H$, the maximum of the ages of all the keys whose initial probe location $h_1(k)$ is $p$. These ages are stored in a maximum age table, MAT. So when a key $k$ is queried, only $\mathrm{MAT}[h_1(k)]$ tries are needed to discard the key, or fewer if it is defined, as shown in Figure 2.8.

In all the experiments performed by Garcia, the maximum age was below 16, and so only 4 bits are used to store the age, together with the key and the data in the hash table.
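As a rough illustration of the early key rejection mechanism, the following sequential sketch keeps a maximum age table next to a Robin Hood table. The class name `RobinHoodWithMAT`, the multiplicative `h1` and the linear probe sequence are assumptions made for this example only, not details taken from Garcia's implementation, and keys are assumed to be distinct.

```python
class RobinHoodWithMAT:
    """Sequential sketch: Robin Hood table plus a maximum age table (MAT)."""

    def __init__(self, size):
        self.size = size
        self.slots = [None] * size  # None or (key, value, age)
        self.mat = [0] * size       # mat[p]: max age of keys with h1(k) == p
        self.count = 0

    def h1(self, key):
        # Hypothetical first hash function.
        return (key * 2654435761) % self.size

    def insert(self, key, value):
        if self.count >= self.size:
            raise RuntimeError("table is full")
        self.count += 1
        age = 1
        while True:
            start = self.h1(key)
            pos = (start + age - 1) % self.size  # assumed linear probing
            slot = self.slots[pos]
            if slot is None or slot[2] < age:
                # Place the carried key and record its age in the MAT.
                self.slots[pos] = (key, value, age)
                self.mat[start] = max(self.mat[start], age)
                if slot is None:
                    return
                key, value, age = slot  # continue with the evicted key
            age += 1

    def query(self, key):
        """Return (value or None, number of probes performed)."""
        start = self.h1(key)
        probes = 0
        for age in range(1, self.mat[start] + 1):
            probes += 1
            slot = self.slots[(start + age - 1) % self.size]
            if slot is not None and slot[0] == key:
                return slot[1], probes
        return None, probes  # rejected after mat[h1(key)] probes
```

An undefined key is rejected after `mat[h1(key)]` probes, which is never more than the maximum age of the whole table and is zero when no key shares its initial location.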

In order to exploit coherence, Garcia uses simple translation functions as hash functions:

$$h_i(k) = (k + o_i) \bmod |H|$$

where $o_i$ is a sequence of offsets, large random numbers that are independent of $k$ and of each other. This way neighbouring keys remain neighbours at each step $i$, and threads access neighbouring memory locations. This does not mean that the keys will be stored as neighbours, because one key can be placed at step $i$ and its neighbour at step $j$ in another random location, as in the example of Figure 2.9.
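A short sketch can make the coherence property concrete. It assumes translation functions of the form h_i(k) = (k + o_i) mod |H| with random offsets o_i; the helper names `make_offsets` and `h` and the table size are hypothetical choices for this example.

```python
import random

def make_offsets(count, table_size, seed=42):
    """Random offsets o_i, independent of the keys and of each other."""
    rng = random.Random(seed)
    return [rng.randrange(table_size) for _ in range(count)]

def h(i, key, offsets, table_size):
    """Translation hash function for probe step i (0-based here)."""
    return (key + offsets[i]) % table_size

TABLE_SIZE = 1021
OFFSETS = make_offsets(4, TABLE_SIZE)

# Locations visited by three neighbouring keys at each probe step.
locations = [[h(i, k, OFFSETS, TABLE_SIZE) for k in (100, 101, 102)]
             for i in range(len(OFFSETS))]
```

At every step the three keys map to three consecutive locations (modulo the table size), which is what allows neighbouring threads to access neighbouring memory. Keys placed at different steps still end up far apart.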

In his experiments, Garcia shows that when a hash table is built with random sparse data and randomly distributed keys, the single-level cuckoo hashing performs better than the coherent hash algorithm. A Robin Hood algorithm with a random hash function instead of the translation function performs slightly better than the coherent hash. Likewise, when retrieving randomly distributed defined keys, the single-level cuckoo and the random hash perform better than the coherent hash. The author attributes this to the lack of coherence, the overhead of updating the maximum age table (MAT), and the emulation of the CUDA atomicMax operator over 64-bit operands.

When the input keys of the same random data are sorted before insertion, the coherent hash builds and queries the table at about twice the rate of the single-level cuckoo and the random hashing. If a coherent data set is used instead of the random data, and the keys are also inserted in order, then the two-level cuckoo and the coherent hashing perform almost equally, and the single-level cuckoo performs slightly worse. The main difference is that with the coherent Robin Hood hashing the hash table can reach higher load factors, up to 99%, compared to the two-level cuckoo and the single-level cuckoo, which reach only 85% and 95% respectively. Garcia explains that they never failed to build tables with a 99% load factor, but that higher factors generate maximum ages above 15, which cannot be stored using only 4 bits.

Results for full key tests were also presented. In these tests all keys of the domain are scanned, whether they are defined, i.e. pointing to useful data, or undefined, i.e. pointing to empty space. The tests were done over a random data set and a coherent data set, an image. In both cases the coherent parallel hashing outperforms the other algorithms by a factor of 4 or more. This can be explained by the early key rejection mechanism and the coherence of the accesses.

The preserved coherence is also reflected in the higher usage of the GPU's cache, which reaches 40% of cache hits, compared to the meagre 1% or less of the random hashing.

Besides the linear incremental order, Garcia also experimented with other access patterns: the Morton order and the bit-reversal permutation. The conclusion is that the more ordered and predictable the accesses are, the better the coherent parallel algorithm performs.

2.5. Conclusions

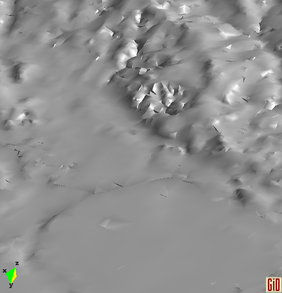

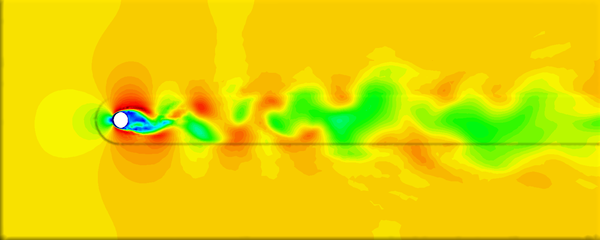

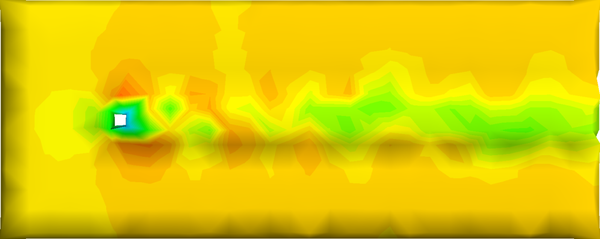

The previous simplification algorithm [Pasenau11-2] was able to simplify big meshes very fast, but it was based only on geometrical information and lost some important details, like those shown in Figure 2.10, where holes appear on very thin surfaces.

The octree approach proposed by DeCoro may be a step forward in preserving some of these details, although it requires more effort in the construction and simplification process.

Willmott's shape preservation feature, which can be seen as subdividing each cell of the simplification grid according to the orientation of the normals on the vertices of the original mesh, may avoid this overhead, as the normal orientation is encoded into the label_id calculated for the original vertices.

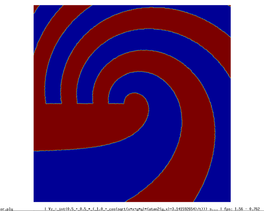

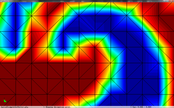

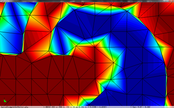

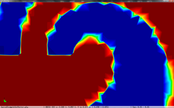

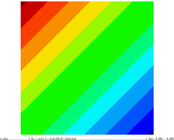

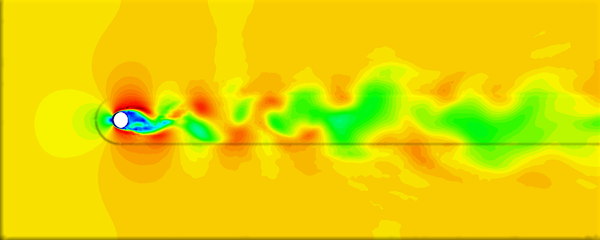

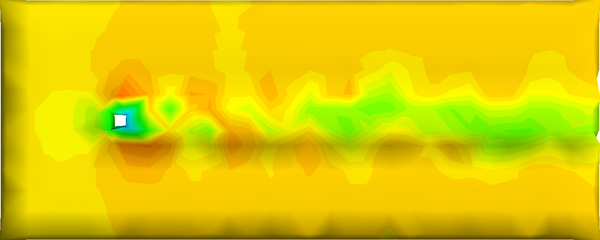

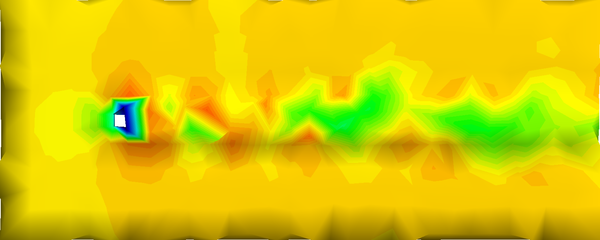

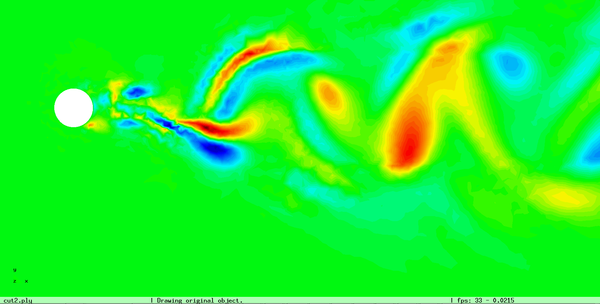

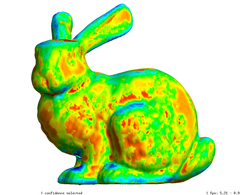

The cell boundary edges mechanism, also presented by Willmott, preserves discontinuities in the cell by grouping triangle vertices with continuous attributes and repeating the simplified vertices as many times as there are discontinuities. This can easily be applied to discrete attributes, i.e. vertex attributes whose values are finite or can be clearly grouped into a set of discrete values. As the proposed work should handle scalar and vector fields coming from simulation codes, the nature of these attributes cannot be known beforehand, and even if it were, the discretization may hide some features present in the results of the simulation. Figure 2.11 shows this problem with the velocity modulus field of a fluid around a cylinder:

Figure 2.11: The minimum and maximum values, in dark blue and red respectively, of the velocity field are smoothed in the simplified mesh of the bottom image.

The two-level cuckoo hash scheme proposed by Alcantara uses 1.4 N space to store N items. The (key, value) pair used in the simplification algorithm represents a cell of the decimation grid used to simplify the model, and is composed of a 64-bit key and the information needed to find the optimal cell representative. This information, as shown in Table 2.1, can be sizeable. The second cuckoo scheme, also presented by Alcantara in his dissertation, and the coherent parallel hash explained by Garcia in his article may reduce this overhead quite significantly, almost eliminating it in the case of the latter scheme.

Both of these hash schemes rely on a very specific feature of CUDA: atomicExch in the case of the single-level cuckoo hash and atomicMax in the case of the coherent parallel hash. Both functions guarantee exclusive access: in the first case, when a thread reads a memory position and stores a new value; in the second case, when a thread reads a memory position, compares its value to the new one and, if the new one is larger, stores it.

Such atomic memory operations are not available in OpenMP, and the implementation target of this work is the CPU and not the GPU; therefore a locking mechanism must be used, with its overhead in memory and time.
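The lock-based replacement of these two operations can be sketched as follows. This is only a structural illustration: Python threads and `threading.Lock` stand in for OpenMP threads and locks, and the class `LockedCells`, its method names and the lock striping (one lock shared by several positions to limit memory overhead) are assumptions of this sketch, not the scheme used in this work.

```python
import threading

class LockedCells:
    """Array whose exchange and max updates are protected by striped locks."""

    def __init__(self, size, stripes=16):
        self.data = [0] * size
        self.locks = [threading.Lock() for _ in range(stripes)]

    def _lock_for(self, index):
        # Several positions share one lock to limit the memory overhead.
        return self.locks[index % len(self.locks)]

    def atomic_exchange(self, index, new_value):
        """Store new_value and return the value that was there before."""
        with self._lock_for(index):
            old = self.data[index]
            self.data[index] = new_value
            return old

    def atomic_max(self, index, new_value):
        """Store new_value only if it is larger; return the old value."""
        with self._lock_for(index):
            old = self.data[index]
            if new_value > old:
                self.data[index] = new_value
            return old

cells = LockedCells(8)

def worker(start):
    for value in range(start, 1000, 4):
        cells.atomic_max(3, value)

threads = [threading.Thread(target=worker, args=(s,)) for s in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
previous = cells.atomic_exchange(3, -1)
```

The read, the comparison and the store happen inside the same critical section, which is the guarantee that the hardware atomics provide on the GPU.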

The two-level cuckoo hash scheme proposed by Alcantara, and used in the previous project [Pasenau11-2], already achieves very good simplification rates, although the cuckoo hash functions introduce a random distribution of neighbouring cells. The hash function used to distribute the items into buckets was just a modulus function, and the randomness was limited to the bucket area. The single-level cuckoo hash scheme extends the randomness of the accesses to the whole table, which may offset the advantage of a more compact representation. On the other hand, the coherent parallel hash scheme tries to maintain the spatial coherence of the input mesh, if the same hash function is used. This feature promises good results, but it has to be weighed against the higher memory cost of the maximum age table and the OpenMP locks.

Memory usage is very important as the intention is to extend this simplification algorithm to volume meshes and memory will become a limiting factor in how much can be simplified or how many details can be preserved.

2.6. Experimental setup

Given the three different hash schemes to evaluate and the detail-preservation techniques, we first focus on enhancing the existing simplification schemes by incorporating the following detail preserving techniques and multi-attribute handling:

- Octree: a multi-grid like the one of DeCoro, but each upper level will hold the accumulated information of its corresponding sub-tree;

- Shape preservation using the normals orientation to classify the points of each cell in the simplification grid;

- Incorporation of a scalar in the quadric error function of the cell and of a vector attribute afterwards.

After the evaluation of these techniques, some experiments will be performed with the hashing schemes and will be compared in terms of simplification time (construction & retrieval) and memory usage.

The timings are averages of several runs to avoid noise, and do not include the time used to read the models, allocate memory for the objects and calculate the normals of the input mesh.

Several models will be used to verify and validate the algorithms implemented so that a certain degree of robustness can be guaranteed.

3. Detail preservation and multi-attributes

One of the goals of this work is to develop a simplification algorithm able to preserve not only geometrical features but also the attributes defined at the vertices of the original mesh. In scientific visualization, these attributes represent simulation results on the nodes of the original mesh which may define features that traditional simplification processes may lose. Attributes also include colour components, texture coordinates or even the normals of the original mesh.

This chapter focuses on techniques aiming to enhance the quality of the simplified mesh and the use of attributes to control the simplification process. The hash schemes used to handle and store this information are discussed in the next chapter.

3.1. Octrees

Concept

The simplification process described in the previous chapter proceeds through the following steps:

1. create the hash data structure

2. create the quadric map

3. find the optimal representative for each cell

4. generate the simplified mesh

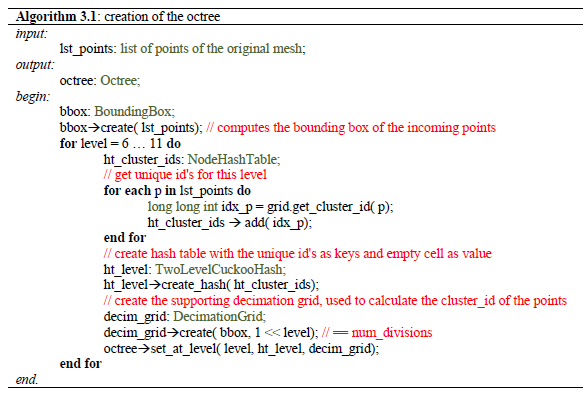

Following DeCoro's approach [Decoro07], a multi-grid version has been implemented, but with some key differences. First, each level stores all occupied cells of the simplification grid using a two-level cuckoo hashing scheme. Another difference is that the qef information generated from the original mesh is not randomly distributed across all levels of the octree, but stored at the deepest level of the tree. Then, since Schaefer demonstrated that the qef information of an internal node is just the sum of the qefs of its sub-tree, the qef information of the deepest level is accumulated in the levels above until the first level is reached.
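The bottom-up accumulation can be sketched in a few lines. The sketch assumes that the cells of each level are kept in a plain dictionary keyed by integer indices (i, j, k), that the parent of a cell is obtained by halving its indices, and that the qef information is a flat list of numbers; the actual implementation stores the cells in cuckoo hash tables, and the function name `accumulate_levels` is hypothetical.

```python
def accumulate_levels(leaves, deepest, coarsest):
    """Build every level above the deepest one by summing child qefs.

    leaves maps (i, j, k) at the deepest level to a list of qef values.
    Returns a dict: level -> {(i, j, k): accumulated qef values}.
    """
    levels = {deepest: dict(leaves)}
    for level in range(deepest - 1, coarsest - 1, -1):
        parents = {}
        for (i, j, k), qef in levels[level + 1].items():
            # Assumed parent relation: indices halve from one level to the next.
            key = (i >> 1, j >> 1, k >> 1)
            acc = parents.setdefault(key, [0.0] * len(qef))
            for n, value in enumerate(qef):
                acc[n] += value
        levels[level] = parents
    return levels

leaves = {
    (0, 0, 0): [1.0, 2.0],
    (1, 1, 1): [3.0, 4.0],
    (7, 7, 7): [5.0, 6.0],
}
levels = accumulate_levels(leaves, deepest=3, coarsest=1)
```

Because only sums are involved, each level above the deepest one is obtained from the level directly below it, without revisiting the original mesh.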

Like in DeCoro's octree, given a user's error tolerance, the tree is traversed bottom-up to generate the simplified mesh.

As the original model should still be recognizable in the simplified mesh, the coarser levels are discarded and the implemented octree starts at level 6, corresponding to a decimation grid of 64³. The depth of the tree is limited to 11, corresponding to a decimation grid of 2048³, but deeper levels have also been tested. In all levels the two-level cuckoo hash scheme has been used to store per-cell information.

Creation

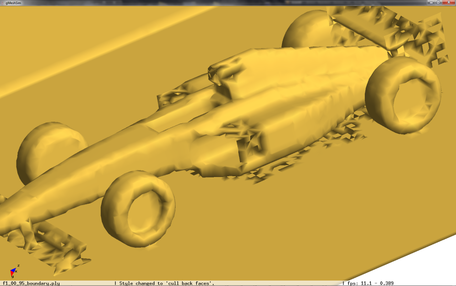

Algorithm 3.1 shows the steps required to create the octree.

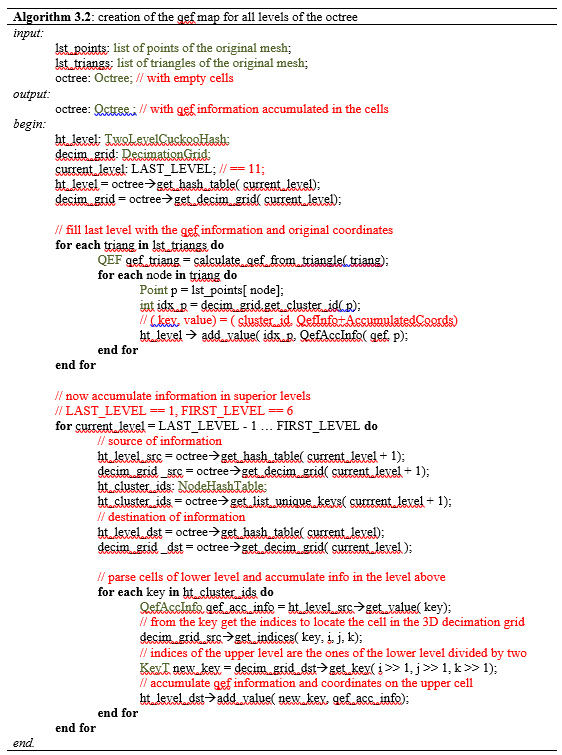

Algorithm 3.2 shows how the octree is filled with the qef information calculated from the original mesh:


Finding the optimal representatives

To calculate the optimal representatives in the octree, the algorithm visits all the cells of all levels of the octree to calculate the optimal representative in the same way as the previous work, but now the error of the cell's representative is also stored in the cell:

if the qef matrix is not singular then

invert matrix to find the optimal position;

if the optimal position lies inside the cell of the decimation grid then

use it as cell representative

if the qef matrix cannot be inverted or the calculated optimal position lies outside the cell then

use as cell representative the average of the accumulated coordinates

calculate the error of the cell representative

This error is used in the simplification process to compare against the user selected error tolerance.
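The steps listed above can be turned into a small runnable sketch. It assumes that each cell accumulates a quadric in the form error(x) = xᵀA x + 2 b·x + c, built from the supporting planes of the triangles, together with the sum of the point coordinates; the class `Cell` and the helper names are hypothetical, and the 3x3 system is solved with Cramer's rule to keep the example free of dependencies.

```python
class Cell:
    """Accumulated data of one decimation cell (hypothetical layout)."""

    def __init__(self, lo, hi):
        self.lo, self.hi = lo, hi  # opposite corners of the cell
        self.A = [[0.0] * 3 for _ in range(3)]
        self.b = [0.0, 0.0, 0.0]
        self.c = 0.0
        self.coord_sum = [0.0, 0.0, 0.0]
        self.count = 0

    def add_plane(self, n, d):
        """Accumulate the squared distance to the plane n.x + d = 0."""
        for r in range(3):
            for s in range(3):
                self.A[r][s] += n[r] * n[s]
            self.b[r] += d * n[r]
        self.c += d * d

    def add_point(self, p):
        for r in range(3):
            self.coord_sum[r] += p[r]
        self.count += 1

    def error(self, x):
        Ax = [sum(self.A[r][s] * x[s] for s in range(3)) for r in range(3)]
        return (sum(x[r] * Ax[r] for r in range(3))
                + 2.0 * sum(self.b[r] * x[r] for r in range(3)) + self.c)

def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(A, rhs, eps=1e-12):
    """Solve A x = rhs by Cramer's rule; None if A is (nearly) singular."""
    d = det3(A)
    if abs(d) < eps:
        return None
    solution = []
    for col in range(3):
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = rhs[r]
        solution.append(det3(m) / d)
    return solution

def representative(cell):
    """Return (position, error) following the steps listed above."""
    x = solve3(cell.A, [-v for v in cell.b])  # minimum of the quadric
    inside = x is not None and all(
        cell.lo[r] <= x[r] <= cell.hi[r] for r in range(3))
    if not inside:
        # Singular matrix or optimum outside the cell: use the average.
        x = [s / cell.count for s in cell.coord_sum]
    return x, cell.error(x)
```

For instance, a cell that accumulates the three planes x = 1, y = 2 and z = 3 and that contains their intersection gets the point (1, 2, 3) as representative, with zero error.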

Simplification using an error tolerance

The simplification process changes somewhat with respect to the previous one. Now, for each point of the original mesh and starting from the deepest level, the algorithm selects the cell representative whose error best approximates the error tolerance chosen by the user.

As before if the three representatives of the original triangle are the same, then the triangle is discarded. If two of them are different, then a line is generated. And if all three of them are different then a triangle is generated for the simplified mesh. The resulting triangles are still checked for the correct orientation, i.e. the same orientation as the original triangles, and for duplicates.
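The classification of the original triangles can be sketched as follows. The sketch assumes that every original vertex has already been mapped to the index of its cell representative (`rep_of_vertex`, a hypothetical name); the geometric orientation check mentioned above is left out, and duplicates are detected by comparing triangles up to a rotation of their indices, which keeps the winding order.

```python
def rotate_min_first(tri):
    """Rotate a triangle so its smallest index comes first (keeps winding)."""
    m = tri.index(min(tri))
    return tri[m:] + tri[:m]

def simplify(triangles, rep_of_vertex):
    """Return (triangles, lines) of the simplified mesh."""
    out_triangles, out_lines, seen = [], [], set()
    for tri in triangles:
        a, b, c = (rep_of_vertex[v] for v in tri)
        distinct = len({a, b, c})
        if distinct == 1:
            continue  # the whole triangle collapsed into one point
        if distinct == 2:
            # Two vertices collapsed: the triangle degenerates into a line.
            out_lines.append((a, b) if a != b else (a, c))
            continue
        key = rotate_min_first((a, b, c))
        if key not in seen:  # skip duplicated triangles
            seen.add(key)
            out_triangles.append((a, b, c))
    return out_triangles, out_lines

rep_of_vertex = [0, 0, 0, 1, 2]
triangles = [(0, 1, 2), (0, 1, 3), (0, 3, 4), (1, 3, 4), (3, 4, 0)]
new_triangles, new_lines = simplify(triangles, rep_of_vertex)
```

In this small example the first triangle is discarded, the second becomes a line, and the last three all map to the same simplified triangle, of which only the first copy is kept.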

One of the advantages of this approach is that once the octree is created, several simplified meshes can be generated using different error tolerances. This cannot be done with the uniform grid approach: if a finer mesh is desired, a new grid has to be built (including the hash table). Coarser meshes can be obtained by sending the simplified mesh to a coarser grid, but there is still the cost of creating the new hash table.

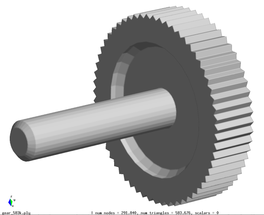

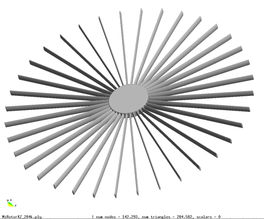

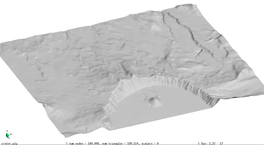

Results

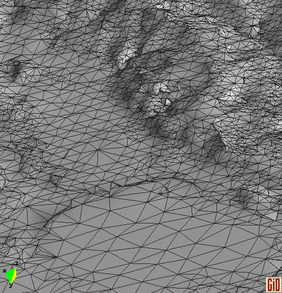

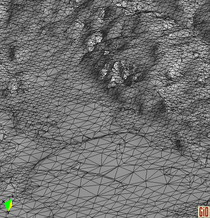

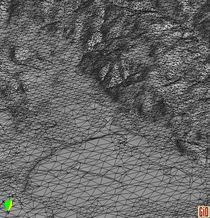

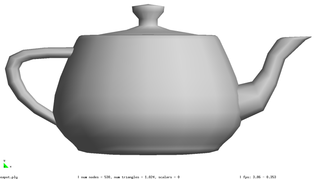

Two models are used to compare the meshes obtained from the uniform grid simplification and the implemented multi-grid octree-like algorithm.

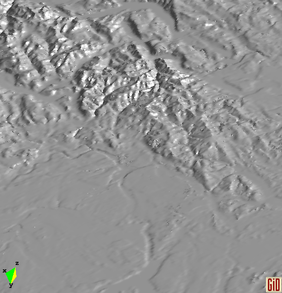

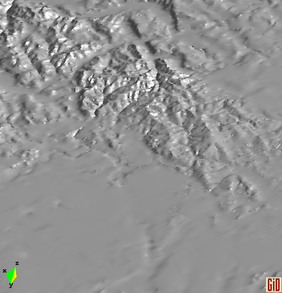

The first one is the "Lucy" model with 28 million triangles. The second one is the Puget Sound map with 16 million quadrilaterals, which were split into 32 million triangles.

With the "Lucy" model, a side-by-side comparison is performed with different simplification levels, showing differences in the distribution of elements and error.

Refining a little bit more, i.e. using a smaller error, it can be seen that the octree simplifies aggressively in those areas where not much definition is needed, and concentrates elements in areas with more details.

This advantage in the quality of the simplification can also be observed with the Puget Sound map, with 16 million quadrilaterals, where the same detail level can be achieved with half the triangles.

This saving in the number of triangles can also be used to obtain a higher resolution in the simplified mesh.

The next sequence of pictures shows different output meshes with different error tolerances, demonstrating how the detail level can be controlled with the multi-grid-octree structure.

Results

Table 3.1 shows the time, memory requirements and mesh sizes for both algorithms: the one using uniform clustering (UC) and the octree. Several grid sizes have been used with the first algorithm and several depth levels have been used with the octree, including level 13, which uses a grid of 4096³ for the leaves.

| Type - level | Creation | Simplif. | Total | Mem (MB) | Points | Triangles |
|---|---|---|---|---|---|---|
| UC 256³ | 3.37 s. | 0.70 s. | 4.07 s. | 13.66 | 90,780 | 182,913 |
| UC 2048³ | 7.49 s. | 12.80 s. | 19.74 s. | 478.25 | 4,555,618 | 9,128,472 |
| UC 64..2048³ | 22.92 s. | 18.25 s. | 41.20 s. | | | |
| Octree (12) <1e-5 | 14.43 s. | 6.49 s. | 20.93 s. | 666.42 | 51,228 | 103,619 |
| Octree (12) <1e-6 | | 10.27 s. | | | 305,725 | 614,325 |
| UC 4096³ | 14.16 s. | 31.74 s. | 46.05 s. | 1,063.35 | 10,287,708 | 20,568,519 |
| Octree (13) <1e-5 | 35.09 s. | 6.75 s. | 41.84 s. | 1,546.47 | 51,235 | 103,630 |
| Octree (13) <1e-6 | | 10.32 s. | | | 307,082 | 617,092 |

Table 3.1: Comparison of the time cost and the memory cost of the different simplification approaches.

The benchmark shown in Table 3.1 was performed on an Intel Core2 Quad Q9550 computer running MS Windows 7 64 bits and using the Lucy model with 14,027,872 points and 28,055,742 triangles.

Notice that the memory footprint and the running time of the octree are almost the sum of those of creating each level separately. In fact the creation is a bit faster because some of the work across all levels is done in parallel, such as getting the list of unique keys for each level. The advantage, as mentioned before, is that once the octree is created several meshes can be generated with different levels of detail.

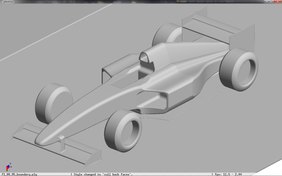

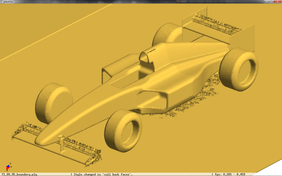

The problem shown in Figure 2.10 of Chapter 2 still remains, although some parts benefit from the octree, as can be seen in the next figure:

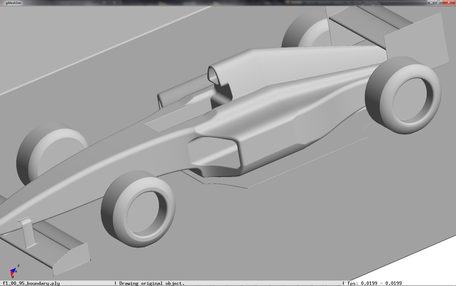

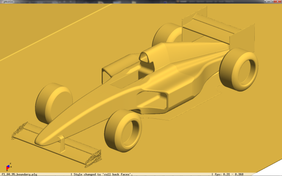

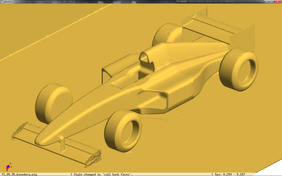

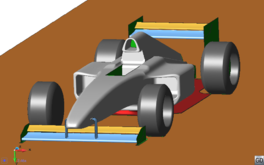

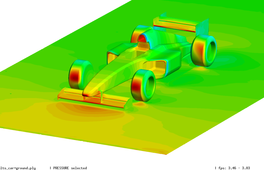

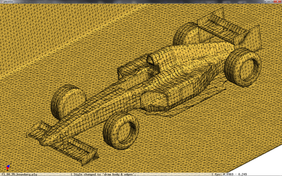

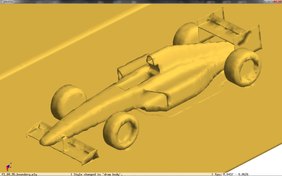

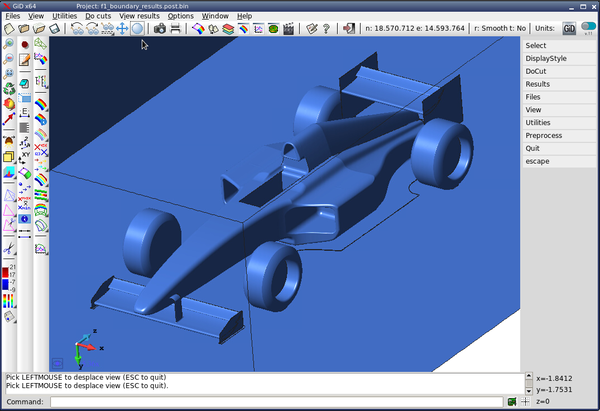

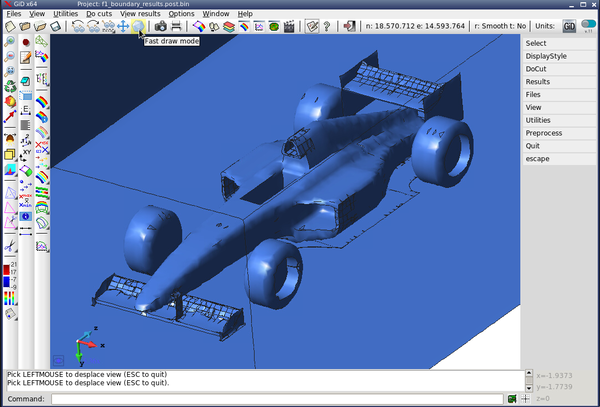

Table 3.2 shows the details of the different simplification algorithms and the settings used to generate the images in Figure 3.6. Although a very fine decimation grid was used (centre-left picture), the front wing of the car still has some holes. The same applies to the octree output with a very small error tolerance in the bottom-left picture.

The benchmark was performed on an Intel Core2 Quad Q9550 computer with MS Windows 7 64 bits and using the f1 racing car model with 3,005,848 points and 6,011,696 triangles.

| Image | Type - detail | Triangles | Create + simplify | Memory (KB) |
|---|---|---|---|---|
| Top-left | Original model | 6,011,696 | | |
| Top-right | Unif. Clust. 1024³ | 1,387,314 | 2.97 s. | 65,750 |
| Centre-left | Unif. Clust. 4096³ | 3,463,332 | 6.44 s. | 164,710 |
| Centre-right | Octree (13), 1e-6 | 195,860 | 9.15 s. (6.16 + 2.98) | 335,242 |
| Bottom-left | Octree (13), 1e-7 | 1,328,293 | 0 + 6.39 s. | 335,242 |

Table 3.2: Comparison of the two simplification approaches used and their cost in time and memory.

The problem is that the plate of the original mesh just falls inside one single decimation cell, and the algorithm substitutes all points in the cell by a single representative:

Figure 3.7: In blue the cells of the simplification grid, in black the original mesh with its normals, and in red the resulting simplified mesh; the red normals show the orientation problem.

Depending on which triangle of the original mesh was the first one to generate the simplified version, the normal will be oriented to one side or the other, but it cannot be guaranteed that the resulting normals will be oriented towards the same side, causing the artifacts shown in the above pictures. Furthermore, in simulation processes it is very likely that the attributes on one side differ greatly from the ones on the other side, like the velocity field of two fluids flowing in opposite directions on either side of the plate. Rather than generating one single vertex per cell, a better idea is to generate several vertices depending on the orientation of the normals at the vertices of the original mesh, like the shape preservation mechanism proposed by Willmott in his article "Rapid Simplification of Multi-Attribute Meshes" [Willmott11].

3.2. Normal based simplification and smoothing

Willmott's shape preservation: using normal direction

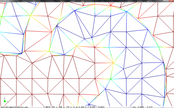

The shape preservation technique explained in the article "Rapid Simplification of Multi-Attribute Meshes" by Andrew Willmott [Willmott11], has been implemented. This technique uses the vertex normal information to classify the vertices inside a spatial grid-cell. The classification is done by encoding the normal direction into 3 bits of the cell label id, using only the sign of the components of the normal.

This can be seen as a subdivision of the original spatial cell into 8 sub-cells according to the sign of the normal components as shown in the next figure:

Figure 3.8: Using the sign (left) of the normals of the cylinder, the corresponding points are distributed into four sub-cells inside the single spatial cell; then each sub-cell calculates its own optimal representative, generating four differentiated points for the final generated square prism (right).

This technique, also called normal subdivision criterion in this work, has been implemented in the original uniform simplification algorithm, which used the two-level cuckoo hash algorithm, and not in the octree version.

Up to now, given a point and a decimation grid, the indices of the cell containing the point were calculated and encoded in a 64-bit cell_id, which was then used as key in the hash table. Now the last three bits are used to encode the sign of the normal components, leaving 61 bits to encode the i, j and k indices of the cell. This barely limits the possibilities of our simplification algorithm, as a decimation grid of 1M³ cells (20 bits per index) can still be addressed.

Two changes were needed in the algorithm to implement this technique:

1. extend the DecimationGrid data structure so that given a point and a normal an extended cell_id could be generated (61 bits to encode the cell indices and 3 bits for the sign of the normal components);

2. as some input models do not include normal information, this has to be calculated by the algorithm.
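A possible layout of the extended cell_id is sketched below. The text only fixes that the last three bits hold the signs of the normal components and that 61 bits remain for the indices; the split into 20 bits per index, the order of the indices, the bit assigned to each component and the convention "bit set means negative component" are assumptions of this sketch, as are the function names.

```python
INDEX_BITS = 20                      # assumed: 20 bits for each of i, j, k
INDEX_MASK = (1 << INDEX_BITS) - 1

def normal_sign_bits(normal):
    """3-bit code: bit 0 for x, bit 1 for y, bit 2 for z; 1 means negative."""
    return (int(normal[0] < 0)
            | (int(normal[1] < 0) << 1)
            | (int(normal[2] < 0) << 2))

def extended_cell_id(i, j, k, normal):
    """Pack the cell indices and the normal signs into one integer key."""
    if not all(0 <= v <= INDEX_MASK for v in (i, j, k)):
        raise ValueError("cell index out of range")
    spatial = (i << (2 * INDEX_BITS)) | (j << INDEX_BITS) | k
    return (spatial << 3) | normal_sign_bits(normal)

def decode(cell_id):
    """Return (i, j, k, sign_bits) from an extended cell_id."""
    sign_bits = cell_id & 0b111
    spatial = cell_id >> 3
    return ((spatial >> (2 * INDEX_BITS)) & INDEX_MASK,
            (spatial >> INDEX_BITS) & INDEX_MASK,
            spatial & INDEX_MASK,
            sign_bits)

front = extended_cell_id(5, 6, 7, (0.0, 0.0, 1.0))
back = extended_cell_id(5, 6, 7, (0.0, 0.0, -1.0))
```

Two points in the same spatial cell but on opposite sides of a thin plate, like `front` and `back`, get different keys and therefore different representatives, while sharing the upper bits that identify the spatial cell.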

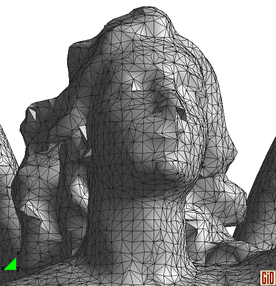

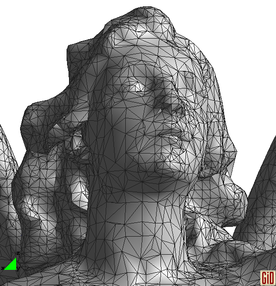

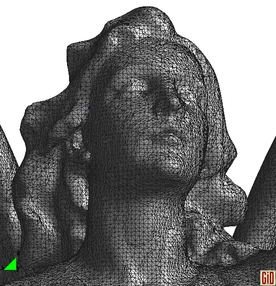

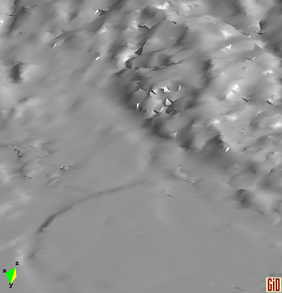

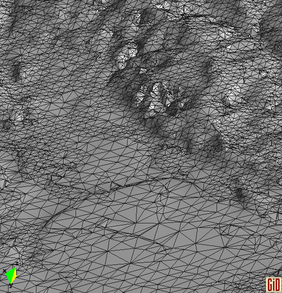

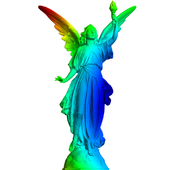

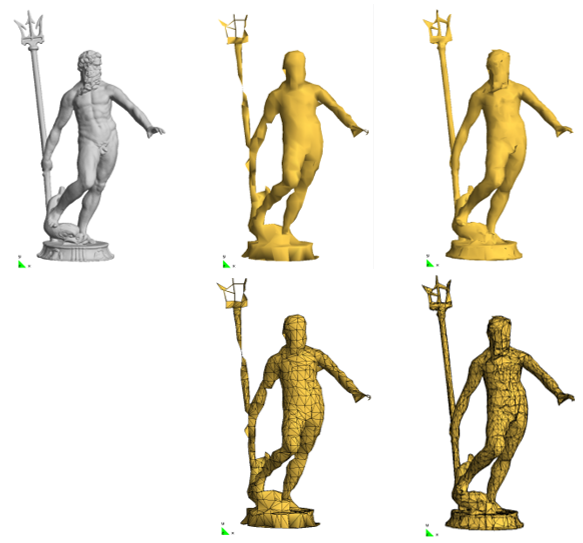

This type of refinement preserves important details, as Figure 3.9 shows using the Neptune model:

- top-left image: the original model with 4,007,872 triangles;

- top-centre image: a uniform 32³ grid created 2,078 triangles and 14 lines in 1.47 s., using 295 KB of memory;

- top-right image: a uniform 32³ grid with normal classification created 9,342 triangles and 44 lines in 1.96 s., using 349 KB of memory.

Tests done on Intel Core2 Quad Q9550 computer with MS Windows 7 64 bits.

Figure 3.9: On the left, the original "neptune" model, in the middle simplified using the standard uniform clustering, and on the right, using normals.

Unfortunately, the new version tends to generate many triangles which could be collapsed without introducing important artifacts. Also, as a spatial cell is subdivided into eight sub-cells, the points are distributed into these sub-cells, leaving the possibility that some sub-cells generate lines instead of triangles.

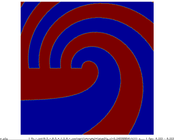

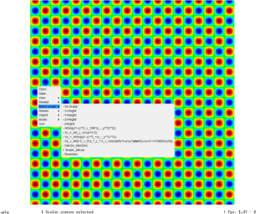

The nature of the discretization using the sign of normals also creates some artifacts, as shown in Figure 3.10:

Figure 3.10: A sphere is simplified to a "cube" of 12 triangles using a 2³ grid; using the normals discretization, it becomes a strange object with 31 triangles.

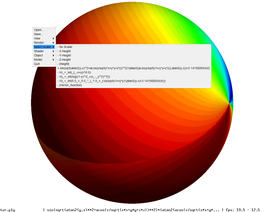

Normal planar filter

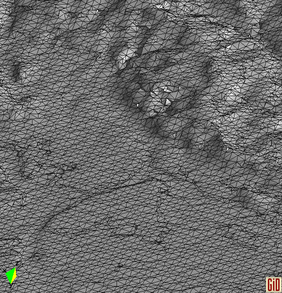

Depending on the orientation, the above technique might generate too many triangles on quasi-planar surfaces, where the normal orientations differ by only a few degrees. If this fluctuation of the normals happens near one of the boundaries of the discretization, for instance on slightly corrugated ground with normals of the form (± 0.1, 0.995, 0.0), the algorithm will generate many triangles which could have been simplified away.

Figure 3.11: Example of a quasi planar terrain, in the right two thirds of the profile. Shown is a profile in the y plane of the "gcanyon" model.

To overcome this problem, a new filter has been developed, which is applied when the optimal representatives of the cells are calculated, just after creating the quadric map.

With the above shape preservation technique each spatial cell is subdivided into eight sub-cells, each one grouping the points whose normals point to the same octant. When all points in the cell have normals pointing in the same direction, only one of the sub-cells is used, i.e. filled with information. On the other hand, if, for instance, all points of a sphere fall into the same spatial cell, then all eight sub-cells are filled with information, as the normals of the points are oriented in all directions. Points belonging to an axis-oriented cylinder section, however, will be classified into four sub-cells.

Points from a perfectly planar surface will also fill only one sub-cell, but if the surface is not perfect then the points may fill two, three or four sub-cells. In this case the new filter joins these two, three or four sub-cells into the same spatial cell.

To decide if, for instance, four sub-cells should be joined because they belong to a quasi-planar surface, or should be left separated, because they belong to a cylinder section, our new filter checks the normals stored in the sub-cells.

After creating the quadric map, the information stored in the cell of the decimation grid is the qef matrix Q and the accumulated coordinates of the points grouped in this cell (x, y, z, w), where w is the number of points in the sub-cell. For this filter to work, each sub-cell also stores the accumulated normal components. The alternative would be to store in the cell the normals of all vertices grouped in that cell and then check the angle of the cone that contains all these normals. The shape preservation technique already classifies the normals into octants, and this ensures that accumulating the normals in each sub-cell will not cancel them out. Accumulating the normals is also a way of calculating the predominant orientation of all normals in the octant.

Two, three or four sub-cells will be joined if the normals lie within a cone of 60 degrees, i.e. the angle between every two normals is less than 60 degrees. The value for this angle has been found good enough in several tests in this and other fields of usage, like calculating sharp edges of a mesh for display purposes.
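The cone criterion can be written as a pairwise test on the accumulated normals of the sibling sub-cells. The function name `within_cone` and the handling of zero-length normals are choices made for this sketch.

```python
import math

def within_cone(normals, max_angle_deg=60.0):
    """True if the angle between every pair of normals is below the limit."""
    cos_limit = math.cos(math.radians(max_angle_deg))
    units = []
    for n in normals:
        length = math.sqrt(sum(v * v for v in n))
        if length == 0.0:
            return False  # a null normal gives no orientation to compare
        units.append([v / length for v in n])
    for a in range(len(units)):
        for b in range(a + 1, len(units)):
            cos_angle = sum(p * q for p, q in zip(units[a], units[b]))
            if cos_angle <= cos_limit:  # angle of 60 degrees or more
                return False
    return True

# Slightly corrugated ground: two sub-cells with nearly parallel normals.
ground = [(0.1, 0.995, 0.0), (-0.1, 0.995, 0.0)]
# Axis-oriented cylinder section: four sub-cells, 90 degrees apart.
cylinder = [(1.0, 1.0, 0.0), (-1.0, 1.0, 0.0), (-1.0, -1.0, 0.0), (1.0, -1.0, 0.0)]
```

With these inputs the ground sub-cells pass the test and would be joined, whereas the cylinder sub-cells fail it and stay separated. The accumulated normals do not need to be normalized beforehand, since only their directions are compared.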

The algorithm does not distinguish between spatial cells (classified by point coordinates) and sub-cells (classified by point coordinates and normal sign components). The hash table stores all used sub-cells.

The filter goes through all sub-cells and for each one of them locates all its siblings. If there are two, three or four of them, the information is joined and the optimal representative is calculated and used in all two, three or four sub-cells. Otherwise, the optimal representative is calculated as always, i.e. by inverting the qef matrix or, if it is singular or the calculated point lies outside the cell, by using the average of the accumulated points.

As already demonstrated by Garland [Garland97] and Schaefer [Schaefer03], two QEF matrices can be added to obtain the accumulated quadric error function matrix.
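Locating the siblings and joining their information can be sketched as below. The sketch assumes that the last three bits of the cell_id hold the normal signs, so the siblings of a sub-cell are the stored keys that differ only in those bits; a plain dictionary stands in for the hash table, the accumulated data is a flat list of numbers, and the cone test on the normals is left out for brevity. The function names are hypothetical.

```python
SIGN_MASK = 0b111  # assumed: the last three bits encode the normal signs

def siblings(cell_id, table):
    """All stored sub-cells of the same spatial cell, including cell_id."""
    base = cell_id & ~SIGN_MASK
    return [base | s for s in range(8) if (base | s) in table]

def joined_accumulation(cell_id, table):
    """Sum the accumulated data of the siblings when there are 2 to 4."""
    group = siblings(cell_id, table)
    if not 2 <= len(group) <= 4:
        return list(table[cell_id])  # processed on its own, as always
    total = [0.0] * len(table[cell_id])
    for sibling_id in group:
        # Gather: the siblings are only read, never modified.
        for n, value in enumerate(table[sibling_id]):
            total[n] += value
    return total

base = 5 << 3
table = {
    base | 0: [1.0, 2.0],
    base | 2: [3.0, 4.0],
    (6 << 3) | 1: [9.0, 9.0],
}
joined = joined_accumulation(base | 2, table)
```

Each sub-cell computes the joined result by reading its siblings and writes only to itself, so every sub-cell of the group ends up with the same accumulated information.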

A gather mechanism is used in the algorithm to avoid potential collisions, and only the current cell is modified to store the optimal representative. To avoid repeated calculations or possible conflicts, the cell also uses a status flag to store the state of the cell: UNPROCESSED, PROCESSED_BUT_NOT_JOINED and PROCESSED_AND_JOINED.

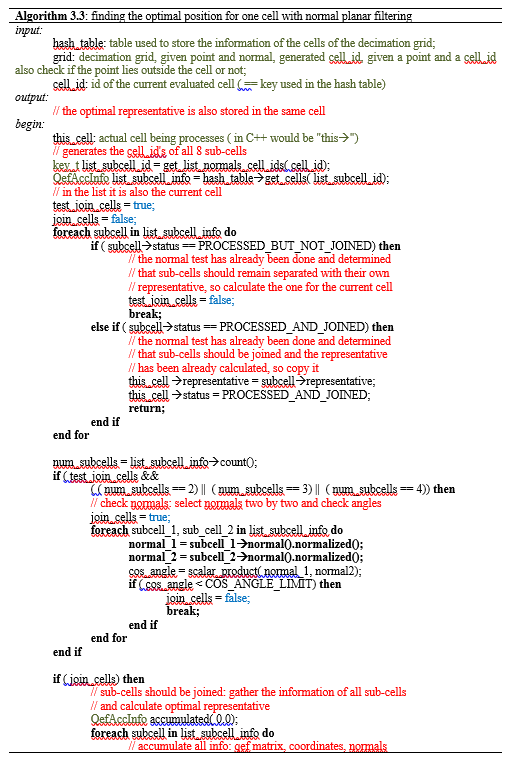

The final algorithm to find the optimal representative for a certain cell is shown below.

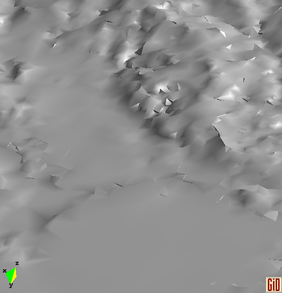

The results obtained by applying this filter show a significant improvement in the simplified mesh (Figure 3.12). After applying the filter to the Neptune model, the previous simplified mesh with 9,342 triangles and 44 lines was reduced to 5,898 triangles and 25 lines. For comparison:

- uniform 32³ grid: 2,078 triangles and 14 lines in 1.47 s., using 295 KB of memory;

- uniform 32³ grid with normal subdivision: 9,342 triangles and 44 lines in 1.96 s., using 349 KB of memory;

- uniform 32³ grid with normal planar filter: 5,898 triangles and 25 lines in 2.10 s., using 429 KB of memory.

Tests done on Intel Core2 Quad Q9550 computer with MS Windows 7 64 bits.

Results

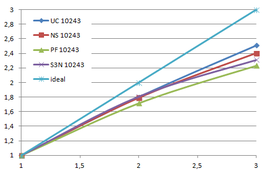

Using the "Lucy" model (14,027,872 points and 28,055,742 triangles) in the same development platform (Intel Core2 Quad Q9550 computer with MS Windows 7 64 bits) the obtained times and memory cost for different degrees of simplification using the three algorithms (UC: uniform clustering, NS: normals subdivision criterion, PF: normal planar filter) are shown in the next table:

| Type - level | T simplif. | T interp. | T total | Mem (MB) | Points | Triangles |
|---|---|---|---|---|---|---|
| UC 256³ | 3.66 s. | 0.47 s. | 4.14 s. | 8.46 | 90,780 | 182,902 |
| NS 256³ | 4.28 s. | 0.51 s. | 4.79 s. | 13.15 | 141,082 | 312,696 |
| PF 256³ | 4.79 s. | 0.63 s. | 5.43 s. | 16.16 | 93,503 | 188,756 |
| UC 1024³ | 7.24 s. | 0.95 s. | 8.19 s. | 122.78 | 1,317,615 | 2,642,388 |
| NS 1024³ | 8.58 s. | 1.03 s. | 9.61 s. | 142.25 | 1,526,656 | 3,103,481 |
| PF 1024³ | 9.47 s. | 1.76 s. | 11.23 s. | 174.89 | 1,317,901 | 2,643,980 |

Table 3.3: Comparison of the time cost and the memory cost of the different simplification approaches (UC: uniform clustering, NS: normal subdivision, PF: planar filter).

T simplif. refers to the time needed to create the hash table, find the optimal representatives and simplify the input mesh. T interp. refers to the time needed to interpolate the attributes, i.e. clearing the cells, sending the attributes of the original mesh to the same cells where their respective points would be grouped, and calculating the average value for the simplified mesh. This averaging is the first approach to handling attributes on the vertices of the original mesh and will be explained in the next section. T total refers to the sum of both times.
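The averaging step behind the interpolation time can be sketched in a few lines. The sketch assumes one scalar attribute per original vertex and a precomputed list with the cell key of each vertex; a plain dictionary stands in for the hash table, and the function name `average_attributes` is hypothetical.

```python
def average_attributes(cell_of_vertex, values):
    """Average a per-vertex scalar attribute over the vertices of each cell."""
    sums, counts = {}, {}
    for cell, value in zip(cell_of_vertex, values):
        sums[cell] = sums.get(cell, 0.0) + value
        counts[cell] = counts.get(cell, 0) + 1
    return {cell: sums[cell] / counts[cell] for cell in sums}

# Five original vertices grouped into two cells, with a scalar result each.
cell_of_vertex = [10, 10, 10, 42, 42]
values = [1.0, 2.0, 3.0, 10.0, 20.0]
averaged = average_attributes(cell_of_vertex, values)
```

Each simplified vertex receives the mean of the attribute values of the original vertices grouped in its cell, which also explains why extreme values tend to be smoothed out in the simplified mesh.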

For the normal subdivision and the normal planar filter algorithms, the cost of calculating the normals of the original mesh should be added, which increases their times by 2.2 seconds.

The increase in memory requirements of the normal subdivision hash table is due to the fact that more cells are needed, as the cells of the traditional uniform clustering are subdivided into several sub-cells according to the orientation of the normals.

The planar filter approach uses more memory even though it generates fewer vertices, because it needs to store not only all the sub-cells of the normal subdivision algorithm but also, in each sub-cell, the accumulated normals of the grouped vertices. So the cell size is increased from 14 floats to 18 floats.

The processing of the sub-cells in the planar filter is also more complex than in the normal subdivision approach, which processes them in the same way as the normal uniform clustering. In the new scheme, the status of each sub-cell needs to be checked; if it is a joined sub-cell, the information of the siblings is gathered and, if one of them has already generated the result, this result is reused; if not, it has to be calculated.

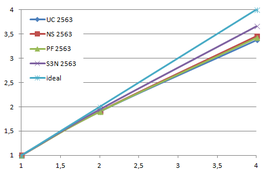

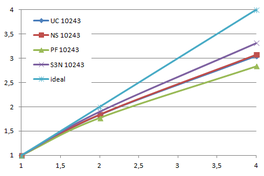

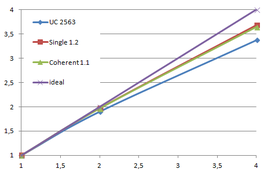

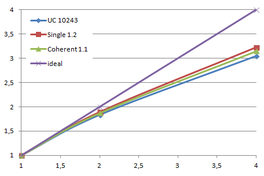

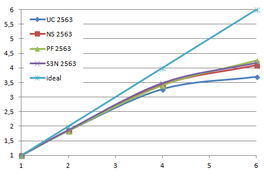

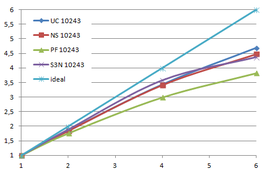

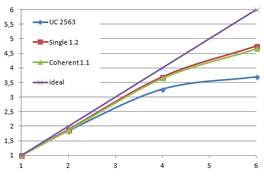

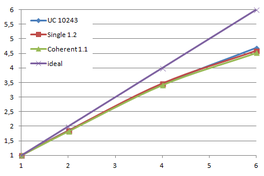

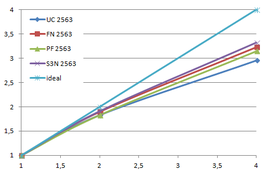

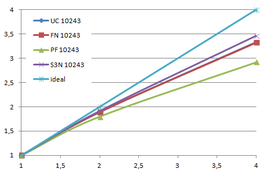

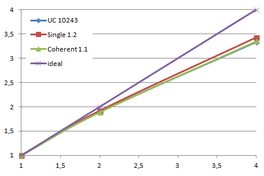

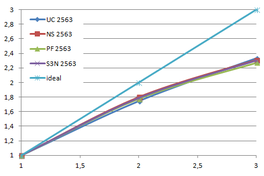

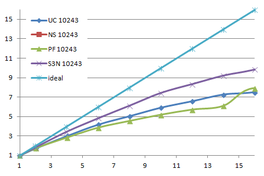

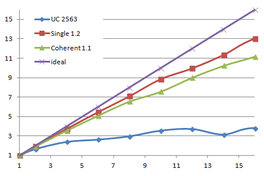

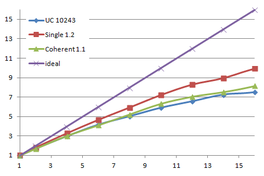

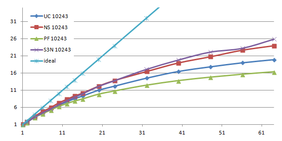

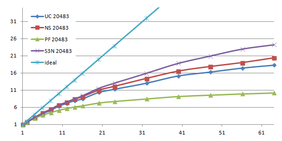

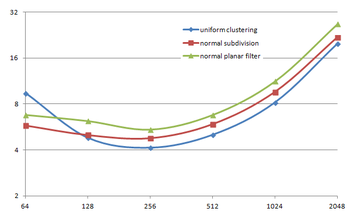

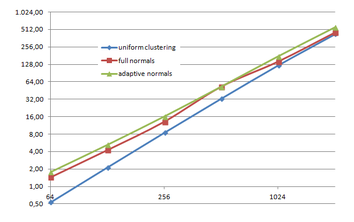

Several degrees of simplification have been performed with the three algorithms on the same model and the same platform; the results are summarized in the following figures:

Figure 3.13: The graphs show the time (in seconds) and memory (in KB) cost of several simplification degrees, plotted against the grid size; the scale is logarithmic.

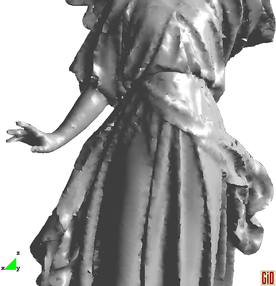

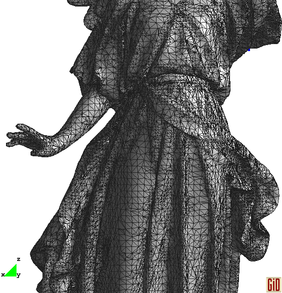

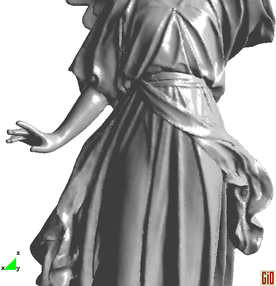

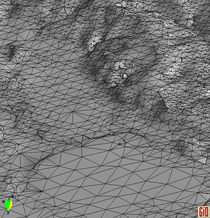

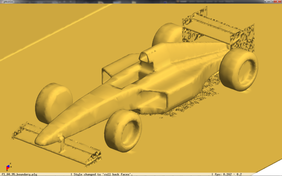

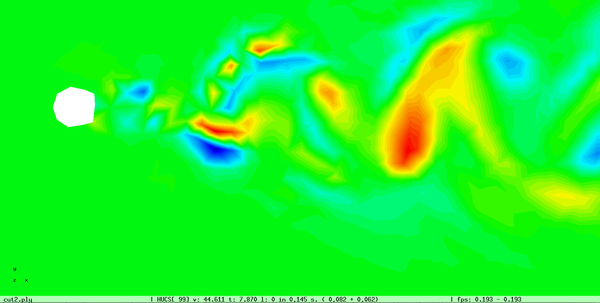

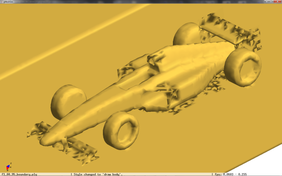

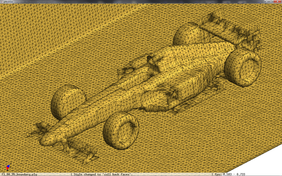

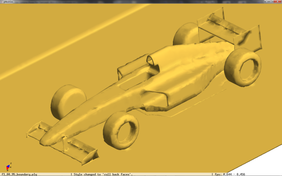

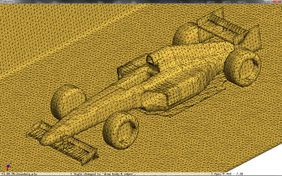

When this new scheme is applied to the F1 racing car model, an improvement in the quality of the simplified mesh can be noticed. Even in very coarse meshes, the front and rear wings and the thin plate below the pilot's cabin do not present the artifacts shown in Figure 3.6.

Figure 3.14: Comparison of the subdivision of cells using the sign of the normals (centre) and the normal planar filter (bottom) against the original uniform decimation grid (top).

Table 3.4 lists the mesh information and the cost of the algorithms on the same platform (Intel Core2 Quad Q9550 computer with MS Windows 7 64 bits).

| Type - detail | Points | Triangles | Time | Memory |
| --- | --- | --- | --- | --- |
| Original model | 3,005,848 | 6,011,696 | | |
| Uniform clustering 256³ | 83,739 | 168,452 | 1.11 s. | 7,999 KB |
| Normal subdivision | 92,742 | 202,714 | 2.09 s. | 8,857 KB |
| Normal planar filter | 87,483 | 180,664 | 2.19 s. | 10,889 KB |

Table 3.4: Comparison of the resources of three different algorithms used to generate the images in Figure 3.14.

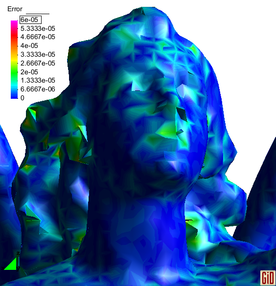

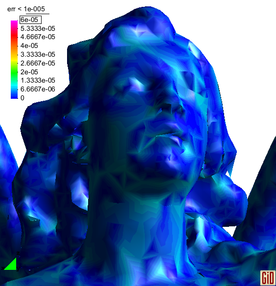

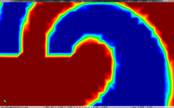

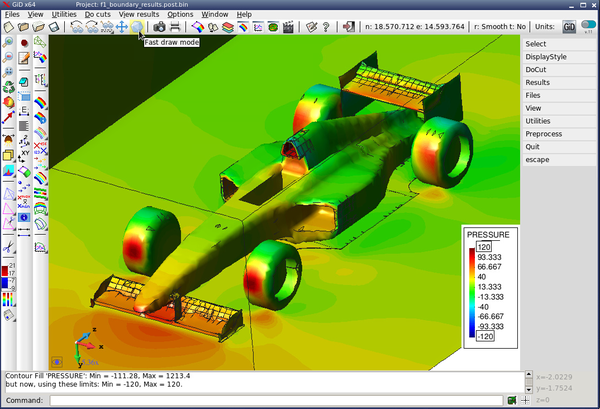

3.3. Incorporating attributes in Quadric Error Metrics

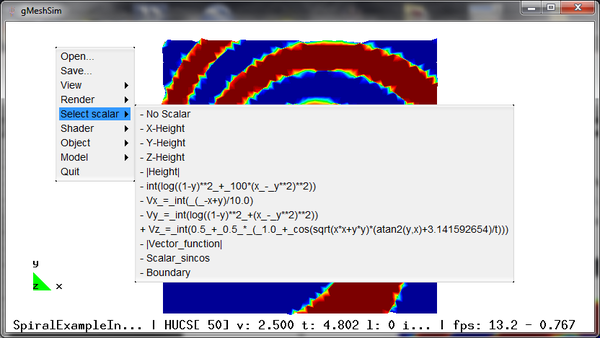

When the above implementation was incorporated in GiD [GiD11], the scalars defined on the vertices of the original mesh were averaged on each cell, as shown in Figure 2.11. The drawback is that features such as discontinuities present in the values inside the cell may disappear. The same applies to minimum and maximum values, which are smoothed out.

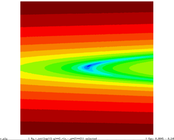

Another problem is that the nature of the uniform simplification grid is also reflected in the scalar field, creating a "staircase" effect in smooth curvatures and smoothing discontinuities such as the ones shown in Figure 3.15.

As explained in section 2.2, a way to include the attributes in the simplification process is to extend the QEF matrix by considering that the vertices of the input mesh are defined in ℝⁿ, including their coordinates (x, y, z) and their attributes (a₁, a₂, …, aₙ₋₃).

Using the three n-dimensional points of the triangle two orthonormal vectors can be calculated, e1 and e2, which lie in the plane and define a local frame with origin in one of them:

|

|

Using these orthonormal vectors we can calculate the different parts of our quadric matrix:

|

|
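As an illustration, the construction can be sketched as follows, assuming the generalized quadric formulation in which the squared distance from a point x to the plane of the triangle is xᵀAx + 2bᵀx + c, with A = I - e1·e1ᵀ - e2·e2ᵀ, b = (p·e1)e1 + (p·e2)e2 - p and c = p·p - (p·e1)² - (p·e2)². The vertex names p, q, r and the functions `triangle_quadric` and `quadric_error` are hypothetical and are not taken from the implementation described here.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def normalized(u):
    length = math.sqrt(dot(u, u))  # zero for degenerate triangles (not handled)
    return [a / length for a in u]


def triangle_quadric(p, q, r):
    """Return (A, b, c) for the triangle with n-dimensional vertices p, q, r."""
    n = len(p)
    # Local orthonormal frame in the plane of the triangle, with origin at p.
    e1 = normalized(sub(q, p))
    rp = sub(r, p)
    proj = dot(e1, rp)
    e2 = normalized([rp[i] - proj * e1[i] for i in range(n)])
    # A = I - e1 e1^T - e2 e2^T
    A = [[(1.0 if i == j else 0.0) - e1[i] * e1[j] - e2[i] * e2[j]
          for j in range(n)] for i in range(n)]
    pe1, pe2 = dot(p, e1), dot(p, e2)
    # b = (p.e1) e1 + (p.e2) e2 - p
    b = [pe1 * e1[i] + pe2 * e2[i] - p[i] for i in range(n)]
    # c = p.p - (p.e1)^2 - (p.e2)^2
    c = dot(p, p) - pe1 ** 2 - pe2 ** 2
    return A, b, c


def quadric_error(A, b, c, x):
    """Evaluate x^T A x + 2 b^T x + c."""
    Ax = [dot(row, x) for row in A]
    return dot(x, Ax) + 2.0 * dot(b, x) + c
```

For a triangle lying in the plane z = 1, a point at z = 3 is at distance 2 from that plane, so the function evaluates to 4. The same code works unchanged when the vertices carry extra attribute components.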

To simplify a mesh using only the geometrical information, a 4x4 matrix was used to represent the quadric error function, which was then inverted to calculate the optimal representative of a cell from its group of original vertices.

In this work, the attributes used to calculate the optimal representatives in the simplification process, together with the geometrical information, will be limited to one scalar and the three components of a vector, as these are the types of attributes most used in the simulation area. Afterwards, given a time-step of the simulation analysis, the resulting implementation can easily be extended to handle all attributes present on the vertices in this single time-step, so that the simplified mesh maintains the features of the geometry and of all attributes. Usually the simulation engineer visualizes the results of a single time-step or compares them with the ones from another time-step, so the cost of creating another simplified mesh with the new attributes when the time-step changes is acceptable.

Considering the proposed attributes, the following table shows the dimension of the QEF matrix and the information that should be stored in each cell:

| Simplification criteria | Vertex | Q | # unique coefficients | Accumulation vector size |
| --- | --- | --- | --- | --- |
| geometry only | | 4 x 4 | 10 | 4 |
| geometry + scalar | | 5 x 5 | 15 | 5 |
| geometry + vector | | 7 x 7 | 28 | 8 |
| geometry + normals | | 7 x 7 | 28 | 8 |

Table 3.5: Each cell of the simplification grid stores the QEF information and an accumulation vector, which accumulates the n coordinates of the original points grouped into the cell together with their number; all this is used to calculate the optimal representative.
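The number of unique coefficients follows from the symmetry of the QEF matrix: an m x m symmetric matrix has m(m + 1)/2 independent entries. A short sketch reproducing that column (the function name is hypothetical):

```python
def unique_coefficients(m):
    """Independent entries of a symmetric m x m matrix (upper triangle and diagonal)."""
    return m * (m + 1) // 2


# Matrix dimensions taken from Table 3.5.
for label, m in [("geometry only", 4), ("geometry + scalar", 5), ("geometry + vector", 7)]:
    print(label, unique_coefficients(m))
```

For the geometry-only case, the 10 coefficients plus the accumulation vector of size 4 appear to account for the cell size of 14 floats mentioned in the discussion of Table 3.3.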

Normal information of the original mesh can also be considered a vector field, and can also be incorporated in the QEF matrix. However, this work will not handle geometry, normals and attributes in the same QEF matrix. Two separate options have been implemented in the application: an extended QEF with geometry and attributes that define a vector, and an extended QEF with geometry and the normal information.

Implementation details

The simplification process consists of four steps:

1. create the hash data structure;

2. create the quadric map: each triangle creates the QEF matrix and each vertex accumulates this information and its coordinates in the appropriate cell of the decimation grid;

3. find the optimal representative for each cell: inverting the qef matrix or using the average of the accumulated coordinates;

4. generate the simplified mesh: using the optimal representatives, generating new simplified triangles or discarding others.
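The four steps can be sketched in a few lines, under strong simplifying assumptions: a Python dictionary stands in for the hash data structure, the QEF accumulation is omitted, and the representative of each cell is simply the average of the accumulated coordinates (the fallback mentioned in step 3). The function name and the `origin` and `cell_size` parameters are hypothetical.

```python
def simplify_by_clustering(points, triangles, origin, cell_size):
    """Uniform vertex clustering with averaged cell representatives."""
    # Step 1: create the (here: dictionary based) structure holding the cells.
    cells = {}

    # Step 2: accumulate the coordinates and the vertex count in each cell.
    vertex_cell = []
    for p in points:
        key = tuple(int((c - o) // cell_size) for c, o in zip(p, origin))
        acc = cells.setdefault(key, [0.0, 0.0, 0.0, 0])
        for axis in range(3):
            acc[axis] += p[axis]
        acc[3] += 1
        vertex_cell.append(key)

    # Step 3: find one representative per cell (average of the accumulation).
    new_index = {}
    new_points = []
    for key, (sx, sy, sz, count) in cells.items():
        new_index[key] = len(new_points)
        new_points.append((sx / count, sy / count, sz / count))

    # Step 4: re-index the triangles and discard the collapsed ones,
    # i.e. those with two or more vertices in the same cell.
    new_triangles = []
    for a, b, c in triangles:
        ia, ib, ic = (new_index[vertex_cell[v]] for v in (a, b, c))
        if ia != ib and ib != ic and ia != ic:
            new_triangles.append((ia, ib, ic))
    return new_points, new_triangles
```

Replacing the average in step 3 by the minimizer of the accumulated quadric gives the QEF-based variant; the overall structure of the loop stays the same.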

Incorporating the scalar information into the qef matrix in the developed algorithm meant:

- to modify the quadric map creation algorithm (step 2) and extend it to handle the attributes;

- to expand the cell information to store the enlarged qef matrix and to accumulate the attributes of the vertices grouped in the cell, in the same way as is done with the coordinates;

- to calculate the optimal representative: to determine if the qef matrix is singular or not, and if not singular, calculating the inverse; or, eventually, averaging the accumulated values.

This implies that all operations which were done using 3- and 4-dimensional vectors and matrices have been extended to support up to 7-dimensional vectors and matrices.

Neither the hash data structure nor the simplification scheme needed to be drastically changed.

To calculate the inverse of the 5x5, 6x6 and 7x7 matrices, a direct approach was used first. The code to invert these matrices was obtained using the computer algebra system Maple 14 [Maple12]. After some tests, the direct inversion of the 7x7 matrices turned out to be very slow, so an alternative method was implemented for comparison: the Gauss-Jordan elimination algorithm from the Numerical Recipes book [Press07].
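A minimal sketch of Gauss-Jordan inversion with singularity detection is shown below. It is not the Numerical Recipes routine: for brevity it uses partial (row) pivoting, and the function name and the tolerance `eps` are assumptions. Returning `None` corresponds to the case where the cell would fall back to the average of the accumulated values.

```python
def invert_gauss_jordan(matrix, eps=1e-12):
    """Invert a square matrix; return None if it is (numerically) singular."""
    n = len(matrix)
    # Augmented matrix [M | I]; the right half becomes the inverse.
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Partial pivoting: pick the row with the largest entry in this column.
        pivot_row = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot_row][col]) < eps:
            return None
        aug[col], aug[pivot_row] = aug[pivot_row], aug[col]
        # Scale the pivot row so that the pivot becomes 1.
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                if factor != 0.0:
                    aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]
```

The cost grows with the cube of the matrix dimension, which stays moderate for the 5x5 to 7x7 matrices used here.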

The next table shows the time differences measured using the "Lucy" model (14,027,872 points and 28,055,742 triangles) on the same development platform (Intel Core2 Quad Q9550 computer with MS Windows 7 64 bits). A single simplification grid of 1024³ is shown, which reduces the original model to 1,317,615 points and 2,642,388 triangles. To test the inversion of the 5x5 matrices an artificial scalar, the modulus of the point coordinates, was used, and to test the inversion of the 7x7 matrices the nodal normals were used. The table shows the cost of computing the optimal representative with the extended QEF matrix using the direct inversion approach and the Gauss-Jordan elimination method.

| Simplification | Q | Inversion | Time | Memory |
| --- | --- | --- | --- | --- |
| UC 1024³ | geometry | | 8.29 s. | 125,726 KB |
| UC 1024³ | geometry + scalar | Direct | 9.68 s. | 168,997 KB |
| UC 1024³ | geometry + scalar | Gauss-Jordan | 9.52 s. | same |
| UC 1024³ | geometry + vector | Direct | 47.14 s. | 284,384 KB |
| UC 1024³ | geometry + vector | Gauss-Jordan | 15.39 s. | same |

Table 3.6: Cost of calculating the optimal representative with attributes, using two different inversion methods, against the previous simplification which uses only the geometrical information.

For the 7x7 matrices, the direct inversion is around 3 times slower than the Gauss-Jordan elimination. The direct approach is meant for general matrices; perhaps it can be specialized for the type of matrices the algorithm uses. This specialization, and the testing of other methods such as the Cholesky decomposition, is left as future work.

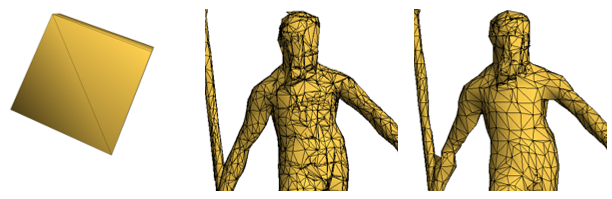

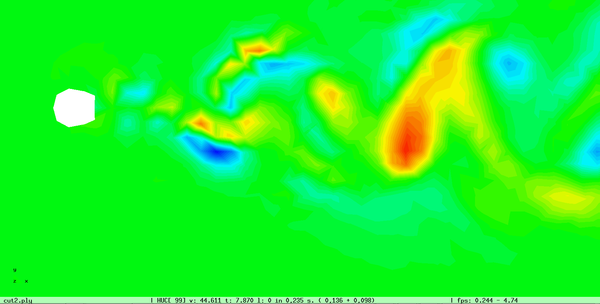

From the following figures it can be observed that the incorporation of the attributes into the simplification process helps to preserve the features present in these attributes, like discontinuities, maximum and minimum values, and to reduce the artifacts introduced by the uniform clustering.

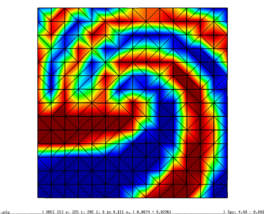

Figure 3.16 shows how the extended QEF preserves the discontinuities in the scalar field. The top row shows a close-up of the discontinuity in the spiral example. The left image shows the original model and the centre one the model simplified with only geometrical information. Notice that the discontinuity is moved slightly upwards and the reticular pattern of the simplification grid can be perceived. In the right image, on the other hand, the scalar information has been "transferred" to the simplified mesh, and the calculated cell representatives are placed on the discontinuity, depicting this feature faithfully.

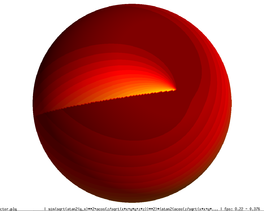

The bottom row illustrates that the discontinuity in the original sphere (left), which was smoothed away (centre) with the original simplification algorithm, shows again (right) with the incorporation of the scalar field information into the quadric error functions.

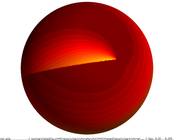

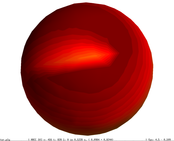

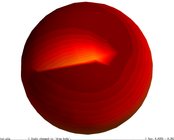

Incorporating the vector field into the qef also projects the information of these attributes into the simplified mesh, as the next figure shows:

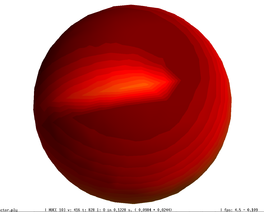

If this improvement is applied to the model shown in Figure 2.11, the minimum and maximum values of the velocity modulus are better represented.

The next figure shows a cut plane of this model, and how the qef matrix with coordinates and the z-velocity component improves the quality of the visualization:

3.4. Conclusions

Although the octree-multi-grid scheme does a good job of concentrating triangles in the areas of the original model with more detail and of saving triangles in areas that are planar or have smooth curvature, these benefits do not offset the memory and time cost it comes with. In comparison, the shape preservation mechanism in conjunction with the normal smoothing filter does a better job of preserving interesting details.

Instead of using separate hash tables for the levels of the octree, a single hash table may improve the performance of the construction process. The memory requirement is also an important factor: other hash schemes should be investigated, with a smaller space factor than the 1.4·N of the two-level cuckoo hash. Another point to try is to avoid storing the QEF information of the internal nodes of the octree and to store it only at the leaves. When a simplified mesh has to be created for a given error tolerance, the QEF information of the leaves can be accumulated on the fly and, if needed, stored in the final mesh, as it is only needed once per simplified mesh retrieval.

Another possibility is to combine the octree approach with the shape preservation mechanism, in conjunction with the normal planar filter, so that the savings in triangles of the former compensate for the "explosion" of triangles of the latter. This is left for future work.

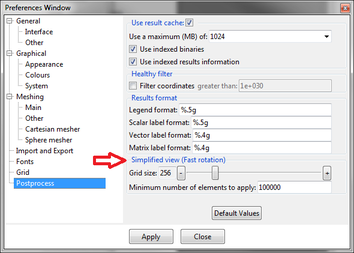

The discretization of the cells into sub-cells according to the orientation of the normals significantly improves the quality of the final simplified mesh by preserving important details which are useful for the simulation engineer, such as the two sides of a wall, which usually have different scalar attributes that should be kept separate. This improvement has also been incorporated in the simplification algorithm used in GiD [GiD11].

The incorporation of the attributes in the resolution of the optimal cell representative is also very important for the simulation engineer, as the examples showed that the features and important characteristics of the attributes are preserved and reflected in the geometry of the simplified model.

To preserve extreme values inside a cell, such as the ones in Figure 3.16, a modified version of the shape preservation mechanism can be applied. This mechanism uses the normal orientation to subdivide the spatial cell into eight sub-cells according to the sign of the normal's components. In the same way, the range of scalar values can be subdivided into two or more parts, and the values of a cell can then be grouped accordingly into two or more sub-cells. This subdivision can be done in two ways:

- performing the subdivision separately from the one done using the normal criterion: normal(x, y, z) + scalar splits into 8 + 2 sub-cells and normal(x, y, z) + vector(vx, vy, vz) into 8 + 8 sub-cells, or

- extending the geometrical normal subdivision criterion to a normal in ℝⁿ that incorporates the attributes: normal(x, y, z) splits the cell into 8 sub-cells, normal(x, y, z, Scalar) splits it into 16 sub-cells, and normal(x, y, z, vx, vy, vz) into 64 sub-cells.

This is left as future work.
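The sub-cell counts of the second option can be illustrated with a small sketch that packs one sign bit per component of the (generalized) normal into an index. The bit convention and the function names are assumptions made for illustration, and how a sign would be derived for the attribute components is not specified in this work.

```python
def subcell_index(components):
    """Pack the signs of the components into an integer (bit set if negative)."""
    index = 0
    for bit, value in enumerate(components):
        if value < 0.0:
            index |= 1 << bit
    return index


def subcell_count(dimension):
    """Number of sign combinations, i.e. sub-cells, for a normal in R^dimension."""
    return 1 << dimension


print(subcell_count(3), subcell_count(4), subcell_count(6))
```

With three components this yields the eight sub-cells of the current shape preservation mechanism; four and six components give 16 and 64 sub-cells respectively.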

4. Increasing occupancy

In the previous chapter we have seen that detail preservation techniques improve the quality of the simplified mesh at the expense of increasing per-cell data, making space efficiency even more critical.

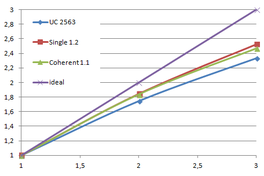

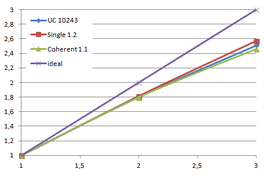

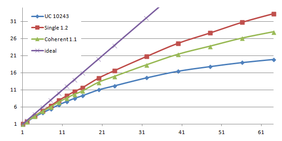

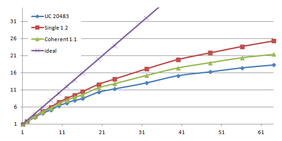

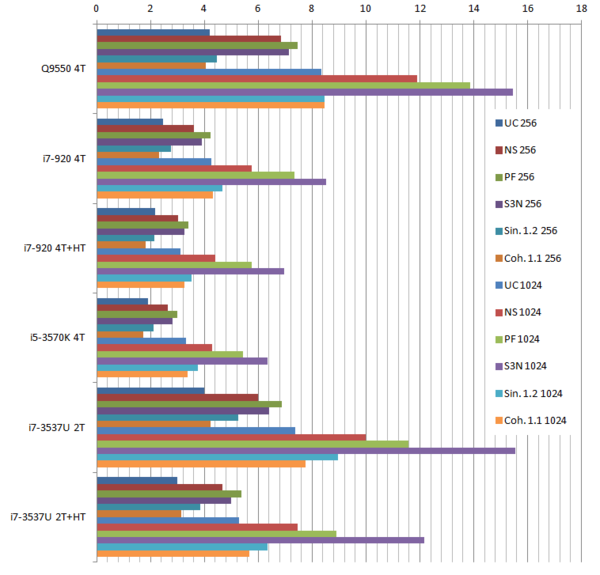

We already discussed the integration of a two-level cuckoo hash approach with vertex clustering simplification. We now deal with two new hash schemes and explore their suitability in our simplification algorithm: the single-level cuckoo hash, presented in Alcantara's dissertation "Efficient hash tables on the GPU" [Alcantara11], and the coherent parallel hash, presented by Garcia, Lefebvre, Hornus and Lasram in the article "Coherent parallel hashing" [Garcia11].

The dissertation of Alcantara also included more experiments with the two-level cuckoo hash regarding occupancy levels, which were not included in his article [Alcantara09].

The first section of this chapter experiments with the bucket occupancy of the two-level cuckoo hash applied to the mesh simplification process of this work, trying to lower the space factor from the original 1.4·N.

Afterwards, the parallel implementations of the single-level cuckoo hash and the coherent parallel hash are explained. Finally, some results are presented and conclusions are drawn.

4.1. Two-level cuckoo hash

The implementation of the two-level cuckoo hash uses buckets with 573 entries for a maximum of 512 elements and an average occupancy of 409 elements. This represents a space factor of 1.4·N. The previous work [Pasenau11-2] already experimented with increasing this average occupancy, but raising it beyond 419 elements caused too many restarts, increasing the time needed to build the cuckoo hash table.

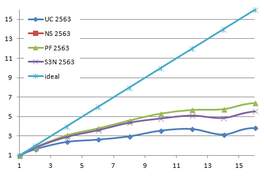

As proposed by Alcantara in his dissertation [Alcantara11], the bucket capacity has been increased to a maximum of 1024 and 2048 elements while also increasing the average occupancy, thereby reducing the space factor, as can be seen in the following table:

| Average occupancy | Maximum occupancy | Size of sub-tables | Bucket size | Space factor | Load factor |
| --- | --- | --- | --- | --- | --- |
| 409 | 512 | 191 | 573 | 1.401·N | 71.4 % |
| 870 | 1,024 | 383 | 1,149 | 1.321·N | 75.7 % |
| 1,843 | 2,048 | 761 | 2,283 | 1.239·N | 80.7 % |

Table 4.1: As the bucket size is increased, the average occupancy has also been increased.
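The derived columns of the table can be reproduced with a short sketch. It assumes that each bucket consists of three sub-tables (which matches 3 x 191 = 573 in the first row), that the space factor is the bucket size divided by the average occupancy, and that the load factor is its inverse; the function name is hypothetical.

```python
def bucket_stats(average_occupancy, sub_table_size, sub_tables=3):
    """Return (bucket size, space factor, load factor) for one configuration."""
    bucket_size = sub_tables * sub_table_size
    space_factor = bucket_size / average_occupancy   # entries per stored element
    load_factor = average_occupancy / bucket_size    # fraction of entries in use
    return bucket_size, space_factor, load_factor


# Average occupancy and sub-table size taken from Table 4.1.
for occupancy, sub_table in [(409, 191), (870, 383), (1843, 761)]:
    size, space, load = bucket_stats(occupancy, sub_table)
    print(size, round(space, 3), round(100.0 * load, 1))
```

Larger buckets allow a higher average occupancy relative to their size, which is what lowers the space factor from about 1.40·N to about 1.24·N.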

The Lucy model has been tested with these bucket sizes to store the cells of the different simplification grids of the uniform clustering algorithm. Table 4.2 shows the times obtained on the development platform (Intel Core2 Quad Q9550 computer with MS Windows 7 64 bits):

| Grid size | T 512 | T 1024 | % 1024/512 | T 2048 | % 2048/512 |

| 128³ | 4.32 s. | 5.51 s. | 127.6 % | 7.86 s. | 182.0 % |

| 256³ | 3.80 s. | 4.20 s. | 110.6 % | 4.68 s. | 123.2 % |

| 512³ | 4.62 s. | 4.80 s. | 104.1 % | 4.77 s. | 103.4 % |