Abstract

Understanding the cognitive processes of the human mind is necessary to further learn about design thinking processes. Cognitive studies are also significant in the research about design studio. The aim of this study is to examine the effect of designers’ intelligence quotient (IQ) on their designs.

The statistical population in this study consisted of all Deylaman Institute of Higher Education architecture graduate students enrolled in 2011. Sixty-nine of these students were selected via simple random sampling based on the finite population sample size calculation formula. The students’ IQ was measured using Raven’s Progressive Matrices. The students’ scores in Architecture Design Studio (ADS) courses from first grade (ADS-1) to fifth grade (ADS-5) and the mean scores of the design courses were used in determining the students’ design ability. Inferential statistics, specifically correlation analysis and a mean comparison test for independent samples, were applied in SPSS to analyze the research data.

Results indicated that the students’ IQ was not significantly correlated with their ADS-1 to ADS-4 scores or with the mean scores of their design courses. By contrast, the students’ IQ and ADS-5 scores were significantly correlated. As the complexity of the design problem and designers’ experience increased, the effect of IQ on design seemingly intensified.

Keywords

Architectural design studio; Intelligence quotient (IQ); Design education; Human factors; Design thinking

1. Introduction

Design studio is considered the core of the design curriculum (Demirbaş and Demirkan, 2003). Researchers have described design studio as the center of architecture education (Schön, 1985; Ochsner, 2000; Vyas et al., 2013). Starting from an ill-defined problem (Schön, 1983), the development of ideas and solutions is evaluated through different types of critique (Oh et al., 2013). These procedures are common in all design studios. Social and interpersonal interactions among design studio participants, including student–student and student–tutor interactions, are significant. The importance of collaboration (Vyas et al., 2013), teamwork, and decision making in design studio has been studied as well (Yang, 2010). Architecture students should also develop a set of design thinking (Dorst, 2011) and creative skills (Demirkan and Afacan, 2012), which are increasingly prioritized in workplaces and in society as a whole. Problem-solving skills are among the abilities that design studio students require to manage the growing range of new, complex problems and situations that societal changes will bring to their future careers. Learning theories in design studio have also been discussed (Demirbaş and Demirkan, 2007).

Considering the importance of design thinking in the design process (Dorst, 2011 ) and in design studio (Oxman, 2004 ), researchers have emphasized the necessity to understand the cognitive processes of the human mind to enhance the understanding of design thinking (Oxman, 1996 ; Nguyen and Zeng, 2012 ) and to view design as a high-level cognitive ability. Design cognition studies are conducted via experimental and empirical methods (Alexiou et al., 2009 ).

According to Gregory and Zangwill (1987) , “Design generally implies the action of intentional intelligence”. Meanwhile, Cross (1999) introduced the natural intelligence concept in design with the assumption that design itself is a special type of intelligence. Papamichael and Protzen (1993) discussed the limits of intelligence in design. Other studies emphasized the significance of spatial ability as a type of intelligence in graphic-based courses (Potter and van der Merwe, 2001 ; Sorby, 2005 ; Sutton and Williams, 2010a ). Furthermore, Allison (2008) concluded that spatial ability is crucial in learning and problem solving.

However, effective and measurable predictive mental factors, and tools that can influence the design process in design studio are insufficiently studied.

Raven’s Matrices tests were originally developed to measure the “eduction” (from the Latin word educere, which means “to draw out”) of relations (Mackintosh and Bennett, 2005); moreover, these tests are some of the best indicators of the g factor (Snow et al., 1984; Kunda et al., 2013). The g factor summarizes the positive correlations among various cognitive abilities and implies that individual performances on a certain type of cognitive task could be compared with those on other types of cognitive tasks (Kamphaus et al., 1997).

Raven tests directly measure two major elements of the general cognitive ability (g), namely, (1) “eductive ability”, which is the capacity to “make meaning out of confusion”, easing the manner of dealing with complexity; and (2) “reproductive ability”, which is the capacity to process, remember, and recreate explicit information, including information communicated from one person to another (Raven, 2000).

Raven tests have been extensively applied in research and in practice, and a vast “pool of data” has been accumulated so far (Raven, 2000). Given that Raven tests are independent of language skills, the three versions of these tests (Advanced, Colored, and Standard Progressive Matrices) have been among the most widely applied intelligence tests (Brouwers et al., 2009).

The current study tests the hypothesis of a correlation between students’ intelligence quotient (IQ) and design abilities in architectural design studio. The IQ indicator is based on Raven’s Progressive Matrices applied to the sample of Deylaman Institute of Higher Education architecture students enrolled in 2011. The architecture design skill indicator is obtained from the scores in the Architecture Design Studio courses, from the first (ADS-1) to the final (ADS-5). This study initially considers a theoretical framework that includes six components, namely, (1) a design studio in architecture education; (2) design thinking in design studio; (3) a cognitive approach in design; (4) spatial ability and design studio; (5) design, problem solving and IQ; and (6) creativity, design, and IQ. Subsequently, hypotheses are formed. Descriptive and inferential statistics are employed to test the hypotheses using the SPSS software.

2. Theoretical framework

2.1. Design studio in architecture education

According to the “learning by doing” philosophy (Schön, 1983), design studio is widely recognized as an indispensable component of the design curriculum (Shih et al., 2006) and as the heart of architectural education (Oh et al., 2013).

Demirbaş and Demirkan (2007) regarded design studio as the core of the design curriculum, and noted that all other courses in the curriculum should be related to design studio. Demirbaş and Demirkan (2003) contended that design studio is related to design problems sociologically and to design education relations with other disciplines epistemologically.

By bridging mental and social abilities, Rüedi (1996) viewed design as a “mediator” between invention (mental activity) and realization (social activity). Design is an open-ended problem-solving process, and the functions of design theories support designers’ cognitive abilities (Verma, 1997). Hence, design studio helps in the free exchange of ideas (Tate, 1987) through an information process that may be assumed as a social and organizational method for both tutors and students (Iivari and Hirschheim, 1996).

Regarding the significance of designers’ experiences compared with regulations and facts (Demirkan, 1998 ), a design studio in architectural education is the first environment where the initial experiences for future professions can be obtained (Demirbaş and Demirkan, 2003 ).

Schön (1985) concluded that the design studio learning process starts with ill-defined problems and is developed through the “reflection-in-action” approach. In design studio, the knowledge learnt in different courses should be applied in the design process to determine an optimal solution for the design of an ill-defined problem. In design education, teaching and learning methods are intended to balance critical awareness and the creative process (Demirbaş and Demirkan, 2007 ). Schön (1983) also emphasized that the studio-based learning and teaching method can be extended to other professional educations in other disciplines. In design studio, students communicate with one another, and receive comments from other students and a tutor (Kvan and Jia, 2005 ), which is a process called critique. Oh et al. (2013) reviewed different types of critiques in design studio.

Aside from the importance of the cognitive ability of design studio students, the other influential factors in design studio are social and interpersonal communication (Cross and Cross, 1995 ), encounter with open-ended problems (Schön, 1985 ), and collaborative design (Vyas et al., 2013 ). Oxman (2004) introduced the concept of think maps to teach design thinking in design education. Additionally, the significance of emotion and motivation was considered by Benavides et al. (2010) . Demirkan and Afacan (2012) analyzed creativity factors in design studio. The major factors that influenced design studio performance were reviewed in our previous work (Nazidizaji et al., 2014 ), including social interaction and collaboration. The importance of emotional intelligence for design studio students was also investigated.

2.2. Design thinking in design studio

After Rowe (1987) used the term “design thinking” in his book, the term has been widely used and has become a part of the “collective consciousness of design researchers” (Dorst, 2011). Design thinking has received increasing attention and popularity in the research about the cognitive aspects of design as a base for design education (Oxman, 2004), and has been considered a new paradigm for addressing design problems in different disciplines (Dorst, 2011).

Oxman (1995) classified different types of design thinking studies into seven categories, namely, (1) design methodology; (2) design cognition; (3) design for problem solving; (4) psychological aspects of mental activities in design; (5) collaboration, which is the social and educational aspect of design; (6) artificial intelligence in design; and (7) computational methods, models, systems, and technology.

Oxman (2001) suggested that the cognitive aspect of design thinking should be regarded as a key educational objective in design education. The two major broad directions of this study are experimental and empirical approaches. Empirical approaches that include protocol analysis in certain special design processes are repeatedly applied. These studies are normally related to the clarification of thinking processes in specific activities that formulate problems and generate solutions (Cross, 2001 ).

Schön (1985) highlighted the importance of design thinking. He also emphasized the significance of empirical research and cognitive studies in improving design pedagogy. In investigations on design teaching, cognitive studies are significant because these studies encourage a clear approach in the design pedagogy development (Schön, 1985; Eastman et al., 2001).

2.3. Cognitive approach in design

Design is typically regarded as a high-level cognitive ability, and numerous computational and empirical studies have focused on design cognition (Alexiou et al., 2009 ). Oxman (1996) concluded that the potential importance of the relationship between cognition and design has received increasing attention among design investigators. Design has been viewed as probably one of the most intelligent human behaviors. Design has a solid connection to cognition. Cognition is the study about all forms of human intelligence, including vision, perception, memory, action, language, and reasoning. Expanding the knowledge about human cognitive processes is necessary for understanding the nature of the mind, and consequently the nature of design thinking. In other studies, Nguyen and Zeng (2012) emphasized the importance of understanding design cognitive activities to develop design technologies and provide an effective design. Additionally, studies about creativity and design cognition have concluded that different types of design cognition in the design process affect the outcomes of both low and high creativity (Lu, 2015 ).

Kim and Maher (2008) considered the following two major approaches for the cognitive approach of design:

- The symbolic information-processing approach (SIP), which was introduced by Simon, emphasizes the rational problem-solving process of designers, with more attention provided to both design problems and designers (Eastman, 1969 ; Akin, 1990 ; Goel, 1994 ).

- The situativity approach (SIT), which was introduced by Schön, focuses on the situational context of designers and on the environment of designers (Schön, 1983; Bucciarelli, 1984).

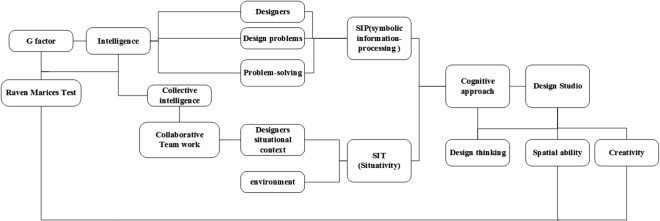

Figure 1 shows the connections between the ideas of cognitive approaches of design with intelligence approaches.

Figure 1. Connections between cognitive approaches of design and intelligence approaches.

Certain cognitive tests are available, such as the spatial ability test, which measure the spatial cognition of designers.

2.4. Spatial ability and design studio

As a cognitive ability, spatial ability is one of the most significant components related to designers. The idea of spatial ability denotes a sophisticated process that designers extensively employ in the design activity (Sutton and Williams, 2010b). Spatial ability in the design field is vital for both problem solving and learning, even if a problem is not particularly spatial (Allison, 2008). This feature shows that spatial ability is significant in design education. Spatial ability may be defined as “the ability to generate, retain, retrieve, and transform well-structured visual images” (Lohman, 1996). Additionally, spatial ability has been defined as “the ability to understand the relationships among different positions in space or imagined movements of two- and three-dimensional objects” (Clements, 1998).

The literature (Potter and van der Merwe, 2001 ; Sorby, 2005 ; Sutton and Williams, 2010a ) shows the significance of spatial ability in graphics-based courses, and the implications of poor skills on career choices and success rates. Spatial cognition for designers transpires by constructing internal or external representations, in which the representations can serve as cognitive support to information processing and memory (Tversky, 2005 ).

According to Schweizer et al. (2007), some evidence suggests that the performance required to complete Raven’s Matrices test also depends on spatial ability to some extent. Sutton and Williams (2007) defined spatial ability as the performance on tasks that require three aspects, namely, (1) the mental rotation of objects; (2) ability to understand how objects appear at different angles; and (3) understanding of how objects relate to one another in space. Sutton and Williams (2007) introduced a set of tests for measuring spatial cognition; one of these tests is Raven’s Matrices test. The authors contended that the Raven test does not strictly measure spatial ability but can be considered a nonverbal ability test that recognizes the forms of spatial concepts.

Moreover, Guttman (1974) pointed out the concerns related to the validity of Raven’s Matrices test with the genetic analysis of spatial ability, whereas Schweizer et al. (2007) investigated the discriminant and convergent validity of Raven’s Matrices while considering spatial abilities and reasoning. Schweizer et al. (2007) reinvestigated the relationship between spatial ability measured and Raven’s Advanced Progressive Matrices; four scales that represent visualization, reasoning, closure, and mental rotation were also applied to a sample of N =280 university students. The results indicated the existence of convergent validity. Meanwhile, Lohman (1996) concluded that the hierarchical models of human abilities provide g statistical and logical priority over spatial ability measures and that Raven’s Matrices tests are some of the best measures of g .

In relation to gender differences in spatial ability and spatial activities, Newcombe et al. (1983) found that ratings of the spatial nature of tasks were positively correlated with ratings of their masculinity and with greater participation by males than by females.

The meta-analysis results (Linn and Petersen, 1985 ) suggest the following points: (a) gender differences arise in certain types of spatial ability but not in other types; (b) large gender differences exist only on “mental rotation” measures; (c) minor gender differences exist on spatial perception measures; and (d) gender differences that exist can be detected across a life span. In relation to the influence of age on spatial ability (Salthouse, 1987 ), older adults perform at lower accuracy levels than young adults do in each experiment.

2.5. Design, problem solving, and intelligence quotient

Problem solving in design has attracted significant interest among design researchers since the 1960s. Most “design methodology” works have been influenced by the premise that design establishes a “natural” type of problem solving. Nevertheless, this assumption has been insufficiently explored (Goel, 1994 ).

Alexiou et al. (2009) indicated that few ambiguities existed on whether design can be a special type of problem solving or a totally distinct style of thinking; the distinguishing features of design were more or less established in general.

One approach for differentiating design is related to problem space and solution space ideas. Problem space signifies a collection of requirements, whereas solution space represents a set of structures that satisfy these requirements. In problem-solving theory, “problem space is a representation of a set of possible states, a set of ‘legal’ operations, as well as an evaluation function or stopping criteria for the problem-solving task” (e.g., Ernst and Newell, 1969; Newell and Simon, 1972).

Moreover, Resnick and Glaser (1975) argued that an essential part of intelligence is the ability to solve problems, and that a careful study of problem-solving behavior can specify many of the psychological processes that comprise intelligence. Bühner et al. (2008) reviewed ideas that relate problem solving to intelligence.

Although problem-solving abilities have been initially assumed to be possibly independent from intelligence, correlations among these constructs have been frequently demonstrated (Rigas et al., 2002; Kröner et al., 2005; Süß, 1996).

Rigas et al. (2002) applied the Kühlhaus and NEWFIRE scenarios to evaluate problem-solving performance. The correlations between intelligence (measured with the Advanced Progressive Matrices, APM; Raven, 1976) and problem-solving scores were r = 0.43 (Kühlhaus) and r = 0.34 (NEWFIRE) when corrected for attenuation.
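The phrase “corrected for attenuation” refers to adjusting an observed correlation for measurement unreliability. The following is a minimal sketch of the classical (Spearman) correction, not a reproduction of the computation by Rigas et al. (2002); the function name and the reliability values in the example are hypothetical and are not taken from that study.

```python
import math

def disattenuate(r_observed, reliability_x, reliability_y):
    """Classical correction for attenuation: r_xy / sqrt(r_xx * r_yy)."""
    for rel in (reliability_x, reliability_y):
        if not 0 < rel <= 1:
            raise ValueError("reliabilities must lie in the interval (0, 1]")
    return r_observed / math.sqrt(reliability_x * reliability_y)

# Hypothetical illustration: an observed r of 0.30 with reliabilities 0.81 and 1.00.
corrected = disattenuate(0.30, 0.81, 1.00)
print(round(corrected, 3))
```

Because reliabilities are at most 1, the corrected coefficient is never smaller in magnitude than the observed one.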

Leutner (2002) conducted two experiments related to the effect of domain knowledge on the correlation between problem solving and intelligence, and concluded that “With low domain knowledge, the correlation is low; with increasing knowledge, the correlation increases; with further increasing knowledge, the correlation decreases; finally, when the problem has become a simple task, the correlation is again low”.

2.6. Creativity, design, and intelligence quotient

Creativity in design has been discussed through the problem–solution co-evolution (Dorst and Cross, 2001) and based on the topic itself (Sarkar and Chakrabarti, 2011; Demirkan and Afacan, 2012). Creativity is vital for the design of all types of artifacts. Assessing creativity can help recognize innovative products and designers, and can improve both design and products (Sarkar and Chakrabarti, 2011). Creativity is a natural part of the design process, which has been frequently characterized through a “creative leap” that occurs between solution and problem space (Demirkan, 2010). Given the complex nature of creativity, consensus is lacking regarding a definition of creativity that completely covers the concept and recognizes creative solutions. Consequently, a creative “event” cannot be guaranteed to occur within the design process. Thus, the study of creative design seems problematic (Dorst and Cross, 2001).

According to Demirkan (2010) , “In architectural design process the interaction between person, creative process and creative product inside a creative environment should be considered as a total act in assessing creativity”. Hasirci and Demirkan (2003) considered the four elements of creativity (i.e., person, process, product, and environment) while selecting two sixth-grade art rooms as the setting. The authors concluded that three creativity elements (person, process, and product) significantly influence the design process differently. In a later study, the effects of these three creativity elements have been analyzed by focusing on cognition phases in the creative decision making of design studio students (Hasirci and Demirkan, 2007 ).

While criticizing terms such as “creativity test” and “measure of the creative process”, Piffer (2012) indicated that three specific dimensions of creativity (novelty, appropriateness, and impact) constitute a framework that helps define and measure creativity by answering if creativity can be measured.

Squalli and Wilson (2014) argued that the terms intelligence, creativity, and innovation are initially assumed to be well understood generally, but defining, assessing, and measuring the inter-relationships among these terms are controversial. The authors conducted the “first test of the intelligence–innovation hypothesis” that contributed to the creativity–intelligence debate in the psychology literature.

Two different theories exist in terms of the relationship between IQ and creativity in the history of psychological research. In the first theory, IQ and creativity belong to the same mental processes (conjoint theory). In the second theory, IQ and creativity represent two separate mental processes (disjoint theory). Various researchers have presented evidence since the 1950s regarding the correlation between creativity and intelligence. In these previous studies, the correlation between these two concepts is so low that distinguishing the two concepts can be justified (Batey and Furnham, 2006). Several researchers contend that creativity and intelligence originate from the same intellectual cognitive process, and that this process is interpreted as creativity only because of its outcome, for example, when a completely new object is produced. This approach is called the “nothing special” hypothesis (O’Hara and Sternberg, 1999).

The model usually adopted in this type of research is known as the “threshold hypothesis”. This hypothesis indicates that creativity requires a high IQ level. However, only having a high IQ is insufficient (Guilford, 1967). Therefore, although a positive correlation exists between creativity and intelligence, this correlation would either disappear or lose meaning if an individual’s IQ score is higher than 120, which is beyond the threshold. This model is accepted by many researchers, but has also been challenged in different cases (Heilman et al., 2003).

2.7. Architecture education in Iran

The architecture undergraduate program in Iranian universities lasts no less than four years. The program comprises 140 course credits, including 6 credits for the final thesis. The courses are divided into general (20 credits), basic (29 credits), major (60 credits), and professional (27 credits). Thirteen optional courses are also offered, such as ethics in architecture, research methods, design process and methods, new structures, and software applications in architecture. Students should pass two optional courses in the program. The ADS sequence comprises 5 courses (ADS-1, ADS-2, ADS-3, ADS-4, and ADS-5) with 5 credits each. ADS courses begin from the fourth semester (one ADS course in every semester) (Supreme Council for Planning, 2007).

Each design studio course begins with a tutor proposing a design project according to the current syllabus of the Ministry of Science. The students start designing by studying some environmental factors and architectural standards and by considering other similarly designed projects. Semester critique sessions are held, during which students obtain the opinions of a tutor and of fellow students regarding the improvements of the students’ design. ADS assessment is based on the delivered project at the end of the semester (including a 3D model, floor plans, elevations and sections, and internal and external perspectives), and students’ activities during critique sessions.

3. Main hypotheses

This study started from the following hypotheses:

- Does any relationship exist between IQ and mean scores for architecture design studio courses?

- Does any relationship exist between IQ and scores for architecture design studio courses 1 to 5 (ADS-1 to ADS-5)?

4. Test subjects

The statistical population in this study consisted of all Deylaman Institute of Higher Education (Lahijan, Iran) architecture students enrolled in 2011. The Department of Education provided a complete list of students, from which the sample was selected. This population comprised 184 students. Finite population sampling was adopted to estimate the sample size (n) as follows:

$$n = \frac{N Z_{\alpha/2}^{2} P(1-P)}{\varepsilon^{2}(N-1) + Z_{\alpha/2}^{2} P(1-P)}$$

where

- P = 0.5 is the estimated proportion of the trait in this study (gender ratio is considered the trait ratio in this population);

- Zα/2 = 1.96 is the value corresponding to the 95% confidence level in a standard normal distribution;

- ε = 0.1 is the value of allowable error;

- N = 184 is the statistical population size; and

- n is the minimum sample size, calculated as

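$$n = \frac{184 \times 1.96^{2} \times 0.5 \times 0.5}{0.1^{2} \times 183 + 1.96^{2} \times 0.5 \times 0.5} \approx 63.3$$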

This formula shows that the minimum sample size was 64. A larger sample of 69 students was used to increase the confidence level and because access to the student population was available. Simple random sampling was adopted because the sampling frame and population members were predetermined. Participation in this research was voluntary. Individuals who participated in this research were assured that the data would only be used for research purposes, and the data were analyzed collectively.
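The reported minimum of 64 can be checked numerically. The sketch below assumes the standard finite-population formula for estimating a proportion, n = N·Z²·P(1 − P) / (ε²(N − 1) + Z²·P(1 − P)), which reproduces the reported value from the parameters listed above; the function name is illustrative and is not part of the original study.

```python
import math

def finite_population_sample_size(population, p=0.5, z=1.96, error=0.1):
    """Unrounded minimum sample size for a proportion in a finite population."""
    variance_term = z ** 2 * p * (1 - p)
    return population * variance_term / (error ** 2 * (population - 1) + variance_term)

raw_n = finite_population_sample_size(184)  # parameters as stated in this section
min_n = math.ceil(raw_n)                    # round up to a whole participant
print(f"unrounded n = {raw_n:.2f}, minimum sample size = {min_n}")
```

Rounding up rather than to the nearest integer keeps the allowable error at or below the chosen ε.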

Forty-six female students (66.7%) and 23 male students (33.3%) comprised the study sample. The average age of the students was 23.39 years. The youngest student was 21 years old, whereas the oldest was 31 years old.

5. Research methodology

The approach adopted in this research was statistical inference associated with testing statistical hypotheses. The students’ scores were prepared, collected, recorded, and subsequently classified, controlled, and analyzed using SPSS. The students’ IQ was based on Raven’s Progressive Matrices test, whereas the design talent index was based on the design course scores. The reliability and validity of the Raven test in Iran as well as the evaluation and reliability of the design scores are described in the subsequent sections.
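The correlation analysis in this study was run in SPSS. As a rough illustration of the underlying computation only, the sketch below calculates a Pearson correlation coefficient and the t statistic used to test it against zero. The function names and the score values are hypothetical and are not data from this study.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length (at least 3 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)

def t_statistic(r, n):
    """t statistic with n - 2 degrees of freedom; undefined when |r| == 1."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))

# Hypothetical values for illustration only; NOT data from this study.
iq_scores = [95, 102, 110, 118, 125]
ads_scores = [14.0, 16.0, 15.5, 17.0, 18.0]

r = pearson_r(iq_scores, ads_scores)
t = t_statistic(r, len(iq_scores))
print(f"r = {r:.3f}, t = {t:.3f}")
```

For a sample of 69 students, the statistic has 67 degrees of freedom, and the two-tailed 5% critical value of t is approximately 2.0.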

5.1. Raven’s IQ test

Raven’s Progressive Matrices, also referred to simply as Raven’s Matrices, are classified as non-verbal IQ tests employed for educational purposes. These tests are among the most extensively used and comprehensive tests, and can be administered to individuals ranging from five-year-old children to elderly people (Kaplan and Saccuzzo, 2008). The Raven test was created by John C. Raven in England in 1936 (Raven, 1936).

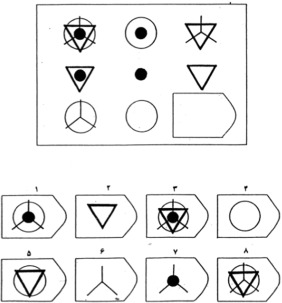

Raven’s Advanced Progressive Matrices (Figure 2) are a specific form of Raven’s Matrices test, designed particularly to distinguish among individuals whose intelligence is above normal. This form of the test consists of two sets of questions in separate booklets. The first booklet contains 12 questions that are designed solely to distinguish among individuals with varying levels of intelligence, whereas the second booklet contains 36 questions that distinguish among individuals more precisely. All of the questions in the second booklet are designed as rectangular matrices with three columns and three rows that contain organized figures and images. The final cell in this matrix is always blank. The figure contents in the eight other cells are specified based on optional and abstract rules. The test taker should discover these rules through trial and error, and subsequently infer the content of the ninth cell based on these rules. Six to eight answer options are provided for every question. This form of the test evaluates an individual’s ability for abstract reasoning, specifically the ability to work out the relationship among the components of each question, identify the fundamental rules by which the cells are constructed, and use these rules to recognize the correct answer (Mackintosh and Bennett, 2005).

Figure 2. Illustrative progressive matrices item. The respondents are asked to recognize the piece required to complete the design based on the corresponding options.

5.2. Reliability and validity of Raven test in Iran

Raven’s Progressive Matrices are among the IQ tests whose reliability and validity are accepted for measuring and evaluating overall intelligence, that is, the “g factor”. An advanced form of this test is employed as a tool for measuring the intelligence of individuals regarded as brilliant and distinguished people, and as top and gifted students. Rahmani (2006) investigated the reliability and validity of this test in research in which students’ intelligence was measured. Rahmani obtained raw data associated with individuals’ IQ. Moreover, 707 individuals were initially selected as samples from a statistical population of students studying in the Khorasgan branch of Azad University (Iran) from school years 2005 to 2008. The samples were tested using Raven’s Progressive Matrices. The results indicated that Raven’s Progressive Matrices were significantly reliable and valid (P < 0.01). The students’ overall intelligence was expressed as IQ equivalents on the Wechsler intelligence scale, with a mean of 100 and a standard deviation of 15, using the standard method to calculate scores (z scores). No significant difference (P < 0.01) was observed in the raw mean scores of the female and male individuals. A comparison of the mean raw scores of the subjects studied, whose age range differed, showed no difference in the mean scores among individuals over 18 years old.
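The conversion of raw scores into IQ equivalents with a mean of 100 and a standard deviation of 15 can be sketched as follows. This is a generic z-score transformation, not Rahmani’s exact procedure; the function name and the norm-group mean and standard deviation in the example are hypothetical.

```python
def deviation_iq(raw_score, norm_mean, norm_sd, iq_mean=100.0, iq_sd=15.0):
    """Map a raw test score onto an IQ scale via its z score in the norm group."""
    if norm_sd <= 0:
        raise ValueError("norm_sd must be positive")
    z = (raw_score - norm_mean) / norm_sd  # distance from the norm mean in SD units
    return iq_mean + iq_sd * z

# Hypothetical norm group: mean raw score 20, standard deviation 5.
print(deviation_iq(25, norm_mean=20, norm_sd=5))  # one SD above the mean
```

A raw score equal to the norm mean maps to 100, and each standard deviation above or below the mean shifts the result by 15 points.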

5.3. Students’ scores in architecture design studio courses

The architecture design studio scores in this study were used as indicators of students’ design competence. However, debates about these issues persist, that is, whether students’ final term scores obtained from design course projects and design critiques represent students’ actual design skills, and whether students with high scores in design courses are likely to be better designers in the future. Design projects, whether professional or academic, are examined to answer issues on whether a systematic mechanism can be developed to evaluate architecture designs. Additionally, how the referees’ judgements are affected by the referees’ presumptions (Carmona and Sieh, 2004 ; Prasad, 2004 ) is examined. These works are designed to identify better assessment methods for design projects. Studies about the reliability of the scores of college design courses are limited.

Table 1 presents the design course titles, purposes, and subjects considered in the Deylaman Institute of Higher Education in Iran for the architecture undergraduate program.

| Course title | Course purpose | Design subject | Land area (m²) |
|---|---|---|---|
| ADS-1 | Learning simple and tangible functions; paying attention to effective factors in design | Fruit market, simple fair exhibit site, small passenger terminal | 1500 |
| ADS-2 | Learning housing concept and factors affecting it | Residential units/buildings in urban context for an extended family | 1000 |
| ADS-3 | Meeting various cultural, artistic, dialect and semantic dimensions with simplicity in functional system | Museum, monument, cultural center, special exhibition sites | 2000–3000 |
| ADS-4 | Learning specific and complicated functional systems and paying attention to installations and structural system | Small hospitals, small airports, port facilities, nursing home for disabled people | 6002 |
| ADS-5 | Micro and macro-scale residential complex designed to suit cultural, climatic and economic conditions | Forty-floor residential complex based on medium or high population density | 8000 |

Considering every ADS syllabus (Supreme Council for Planning, 2007 ) and some related studies, such as studies of experts and novices (Björklund, 2013 ; Ozkan and Dogan, 2013 ), the following differences among ADS-1 to ADS-5 can be recognized:

- Normally in design research, first year students are regarded as novices, whereas final year students are considered experts. From ADS-1 to ADS-5, students transition from novices to experts.

- Based on the attention to form and function in the syllabus, the first design projects (ADS-1 and ADS-2) are less functional, whereas the final design projects (ADS-4 and ADS-5) are highly functional. The ADS-3 project is more conceptual and artistic.

- The complexity of a project in terms of the number of spaces and variations, land area, and required technology increases from ADS-1 to ADS-5.

- Based on the syllabus, required design constraints, such as environmental and economic factors, and numerical standards, increase from ADS-1 to ADS-5.

- The degree to which the design problem is ill-defined decreases in the final design projects (ADS-4 and ADS-5). A difference between ADS-4 and ADS-5 is that ADS-5 students must design an urban network for their buildings in addition to designing the buildings themselves.

6. Testing the main hypotheses

The main hypotheses concern whether a significant relationship exists between IQ and the ADS-1 to ADS-5 scores, and between IQ and the mean of these scores.

Inferential statistical methods, including a correlation test, were used to test the main hypotheses. First, the existence or absence of a significant relationship among these variables was examined; a 5% significance level (95% confidence level) was adopted in this study.

Given that the P-values of the correlation tests between IQ and ADS-1 to ADS-4 are greater than 0.05, the null hypothesis is not rejected and the correlations are not significant at the 95% confidence level (see Table 2 ).

| Hypothesis | Pearson correlation | P-value | Correlation | Type of correlation |
|---|---|---|---|---|
| IQ scores and mean ADS scores | 0.13 | 0.26 | No correlation | – |
| IQ scores and ADS-1 scores | 0.028 | 0.82 | No correlation | – |
| IQ scores and ADS-2 scores | 0.07 | 0.56 | No correlation | – |
| IQ scores and ADS-3 scores | −0.06 | 0.61 | No correlation | – |
| IQ scores and ADS-4 scores | 0.21 | 0.07 | No correlation | – |
| IQ scores and ADS-5 scores | 0.26 | 0.02 | Correlated | Positive |

However, the P -value of the testing correlation between IQ and ADS-5 is 0.02, which is lower than 0.05. Therefore, the null hypothesis is rejected and these two variables are correlated. The correlation value is 0.26.
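The significance test behind a Pearson correlation can be sketched as follows. The coefficient r is converted to a t statistic with n − 2 degrees of freedom, t = r·sqrt((n − 2)/(1 − r²)), and the P-value is then read from the t distribution. The functions `pearson_r` and `t_from_r` and the data are hypothetical illustrations, not the study's SPSS procedure or data. The sample size passed to `t_from_r` has to be supplied by the reader.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def t_from_r(r, n):
    """t statistic (n - 2 degrees of freedom) for testing H0: rho = 0."""
    return r * math.sqrt((n - 2) / (1 - r * r))

# Hypothetical paired observations (e.g. IQ-like and score-like values)
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = pearson_r(x, y)
t = t_from_r(r, len(x))   # compare |t| with the critical value for df = n - 2
```

A larger sample makes the same r more significant, which is why the same threshold on r cannot be used for samples of different sizes.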

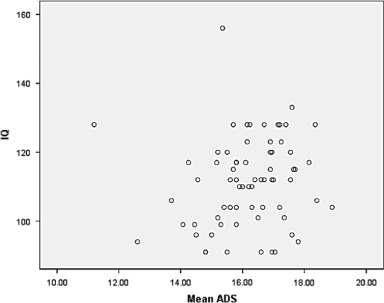

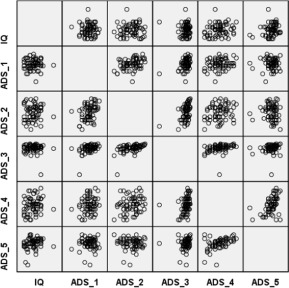

Figure 3 shows the distribution of the mean ADS scores against the IQ scores. The relationship is so weak that it can be disregarded. Figure 4 shows the relationships between IQ and the five design courses. No significant correlations exist between IQ and ADS-1, ADS-2, ADS-3, and ADS-4.

Figure 3. Matrix showing the distribution between the IQ scores and the mean of scores from architecture design studio (ADS-1 to ADS-5).

Figure 4. Distributions of the scores of ADS-1 to ADS-5.

7. Gender difference hypotheses

- Does a significant difference exist between female and male architecture students in terms of intelligence quotient scores?

- Does a significant difference exist between female and male architecture students in terms of mean ADS scores?

7.1. Testing the hypotheses

H0 (null hypothesis) μ1 = μ2: No significant difference exists between the IQ scores of female and male students.

H1 (alternative hypothesis) μ1 ≠ μ2: A significant difference exists between the IQ scores of female and male students.

Descriptive statistics and the t-test for two independent groups (independent-samples t-test) are used in this section to describe the population under study. These statistics are used to investigate the difference in the mean IQ scores and in the mean architecture design scores between the two student samples examined (i.e., female and male individuals).

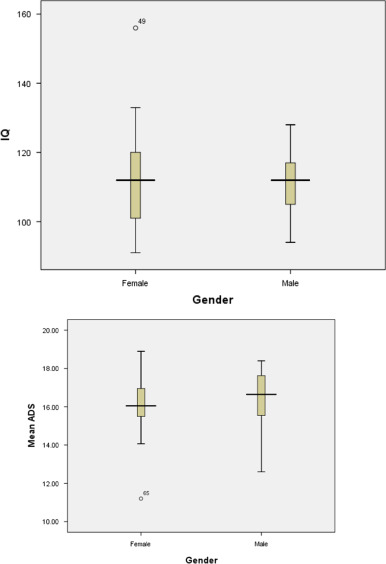

Table 3 shows the data related to both IQ scores and the mean scores for architecture design studio courses of female and male students. Descriptive statistics are individually calculated for each group.

| Variable | Gender | N | Mean | Std. deviation | Std. error mean |
|---|---|---|---|---|---|
| Intelligence quotient | Female | 46 | 111.02 | 13.84 | 2.041 |
| Intelligence quotient | Male | 23 | 111.91 | 10.71 | 2.233 |
| Architecture design studio mean scores | Female | 46 | 16.09 | 1.25 | 0.184 |
| Architecture design studio mean scores | Male | 23 | 16.35 | 1.53 | 0.318 |

Out of the 69 students studied (46 females and 23 males), the mean IQ is 111.02 in the female student group, whereas that in the male student group is 111.91. Moreover, the standard deviation shown in Table 3 indicates that the IQ distribution is wider in the female student group than in the male student group. The mean of the architecture design average scores is 16.09 in the female student group and 16.35 in the male student group. The standard deviation obtained implies that the distribution of the ADS average scores is slightly wider in the male student group than in the female student group. A mean comparison test is required to decide whether these differences are significant.

Two columns of the 95% confidence interval and the P -value are used to conclude whether the means differ from or are similar to each other (significance of the difference in the mean scores). The P -value shows whether the null hypothesis (H0) should be rejected or accepted. This value is compared with 0.05. First, a test for equality of variances is performed, and a test for equality of means is subsequently conducted based on the test for equality of variances. If the variances are proven to be equal to each other, then the first row of means is tested for equality of means; otherwise, the second row is tested.

According to Levene’s test for equality of variances, the P-value is 0.21 for the IQ scores and 0.26 for the mean scores of the architecture design courses. A significance level of 0.05 is used. Given that the P-value for both tests is higher than 0.05, the null hypothesis of equal variances is not rejected. Thus, the variances of the two populations are considered equal for both variables, and the first row (equal variances assumed) is used to test the means of each variable (see Table 4 ).

| Variable | Variance assumption | Levene’s test F | Levene’s test Sig. | t | df | Sig. (2-tailed) | Mean difference | Std. error difference | 95% CI of the difference, lower | 95% CI of the difference, upper |
|---|---|---|---|---|---|---|---|---|---|---|
| Intelligence quotient | Equal variances assumed | 1.58 | 0.21 | −0.27 | 67 | 0.78 | −0.89 | 3.29 | −7.46 | 5.68 |
| Intelligence quotient | Equal variances not assumed | | | −0.29 | 55.24 | 0.76 | −0.89 | 3.02 | −6.95 | 5.17 |
| Mean architecture design studio scores | Equal variances assumed | 1.27 | 0.26 | −0.77 | 67 | 0.44 | −0.26 | 0.343 | −0.95 | 0.41 |
| Mean architecture design studio scores | Equal variances not assumed | | | −0.72 | 37.13 | 0.47 | −0.26 | 0.367 | −1.01 | 0.47 |
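As a rough check, the two IQ rows of Table 4 can be approximated from the summary statistics in Table 3 alone. The sketch below assumes the standard pooled-variance (Student) and unequal-variance (Welch) formulas. The function names are hypothetical, and small deviations from the published figures are expected because the inputs are rounded.

```python
import math

def pooled_t(n1, m1, s1, n2, m2, s2):
    """Student t-test from summary statistics (equal variances assumed)."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df   # pooled variance
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df, se

def welch_t(n1, m1, s1, n2, m2, s2):
    """Welch t-test from summary statistics (equal variances not assumed)."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2
    se = math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return (m1 - m2) / se, df, se

# (N, mean, std. deviation) for IQ, taken from Table 3
female_iq = (46, 111.02, 13.84)
male_iq = (23, 111.91, 10.71)

t_eq, df_eq, se_eq = pooled_t(*female_iq, *male_iq)   # "equal variances assumed" row
t_w, df_w, se_w = welch_t(*female_iq, *male_iq)       # "equal variances not assumed" row
```

The P-values in the table additionally require the cumulative t distribution, which a statistics package would supply.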

The P-value obtained from the test for equality of means for the mean IQ scores is 0.78 > 0.05; hence, the null hypothesis of equal means is not rejected at the 95% confidence level. Therefore, no significant difference exists between the mean IQ scores of female and male students.

The P-value obtained from the test for equality of means for the mean scores of the ADS courses is 0.44 > 0.05; hence, the null hypothesis of equal means is not rejected at the 95% confidence level. Therefore, no significant difference exists between the mean scores for the architecture design courses of female and male students.

In both comparison tests, the 95% confidence interval of the difference contains 0, which is consistent with not rejecting the null hypothesis.
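The confidence-interval argument can be made concrete with a small sketch that rebuilds the interval as mean difference ± t_crit × standard error and checks whether it spans 0. The critical value 1.996 (two-sided, 95%, 67 degrees of freedom) is hard-coded here as an assumption rather than computed, and the helper names are illustrative.

```python
def ci_of_difference(mean_diff, std_err, t_crit):
    """Two-sided confidence interval for a difference in means."""
    half_width = t_crit * std_err
    return mean_diff - half_width, mean_diff + half_width

def contains_zero(ci):
    """True when the interval spans 0, i.e. H0 of equal means is not rejected."""
    lower, upper = ci
    return lower <= 0 <= upper

T_CRIT_DF67 = 1.996   # assumed 97.5th percentile of Student's t with 67 df

# Mean difference and std. error from the "equal variances assumed" rows of Table 4
iq_ci = ci_of_difference(-0.89, 3.29, T_CRIT_DF67)
ads_ci = ci_of_difference(-0.26, 0.343, T_CRIT_DF67)

both_contain_zero = contains_zero(iq_ci) and contains_zero(ads_ci)
```

An interval that spans 0 at the 95% level corresponds to a two-sided P-value above 0.05 for the same test.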

The box charts in Figure 5 show several descriptive statistics, including the maximum and minimum, median, quartiles, and range, along with the distribution of the architecture design course scores and IQ scores in the male and female student groups.

Figure 5. Box charts of IQ and mean ADS with respect to gender.

8. Discussion

This research demonstrates that no significant relationships exist between students’ IQ and variables that include (1) ADS-1 to ADS-4, and (2) mean scores for design courses. Moreover, IQ and ADS-5 scores are correlated.

Given the differences among the ADS syllabi explained in Section 5.3 , the effect of IQ on design skills appears stronger for experts than for novices (the P-value for IQ and ADS-4 is 0.07, which is close to significance). Moreover, when the complexity of a project and the design constraints increase, and the design problem becomes less ill-defined, the influence of IQ on design skills becomes evident. However, the factor (designer experience, complexity and functionality of the design project, or a less ill-defined design problem) that has the largest effect on this correlation cannot be identified because the P-value does not decrease continually from ADS-1 to ADS-5 (the P-value for IQ and ADS-2 is 0.56, lower than the 0.61 for ADS-3).

Testing the second hypothesis, on the effect of gender on IQ and design scores, shows no significant difference between males and females in either variable in this study. This contrasts with other predictors, such as spatial ability, on which males have been reported to outperform females (Newcombe et al., 1983 ). If spatial ability is regarded as an indicator of design ability, then that reported difference is at odds with the absence of a significant gender difference in design scores found here.

The correlation between IQ scores and the total GPA is also measured in this study (P -value=0.217, Pearson correlation=−0.15, no correlation). The results indicate the lack of a significant difference between the average of design scores and students’ GPA.

Furthermore, the threshold theory of creativity–intelligence is tested for design, using an IQ of 120 as the threshold. For students with an IQ above 120, the correlation between IQ and the mean ADS score is not significant (Pearson correlation=−0.073, P-value=0.79); thus, the threshold theory of creativity–intelligence is not confirmed for design ability in this sample.

9. Conclusion

This study examined the correlation between architecture students’ IQs and (1) the students’ ADS-1 to ADS-5 scores, and (2) the mean ADS scores. The results indicated that as project complexity and designer experience increase, the effect of IQ on the design process may become stronger. As the factors that influence designers’ success are identified, more productive and effective design programs may be devised in the future. Furthermore, career guidance for students in this field can be supported by identifying mental talents that empower design ability, particularly talents that can be quantitatively measured.

The study reported in this paper should be repeated in other architectural schools to confirm if a correlation only exists in the final year course. Further research on the correlation between urban design courses and IQ would help clarify this topic.

The major limitation of the research approach was the relevance or accuracy of the evaluation methods in practical architecture courses, specifically design courses. Doubts emerged about whether students’ scores in design courses accurately represent the students’ actual design ability. Therefore, this study encourages further research on this issue. New studies are currently being developed through different assessment methods.

Other concerns that emerged were about creativity versus intelligence tests. Researchers have emphasized the role of creativity in design, but the broad concept of creativity makes its exact role in design difficult to understand. Moreover, the measurability of creativity and the validity of creativity tests are under debate. Certain intelligence innovation tests (Squalli and Wilson, 2014 ) are available and could be used in future studies.

We recommend repeating the present study on larger statistical populations and in different countries or cities. Repeating this research in a broader context and using the new results may help design a new questionnaire or cognitive tests to identify future high potential designers.

Given the many factors that affect design and their outcomes (mental, cognitive, social interaction, collaboration, personality, problem-solving skills), any predictive test should consider all of these aspects and might combine cognitive and personality tests. Success in designing a reliable test or questionnaire would support Cross’ (1999) theory that design is a special and separate type of intelligence.

Acknowledgement

This research was carried out with the financial support of the Portuguese Calouste Gulbenkian Foundation (Fundação Calouste Gulbenkian; Grant number 129645). We most sincerely thank Mr Dariuosh Poordadashi for his cooperation in the analysis of the statistical results and the staff of Deylaman Institute of Higher Education for their cooperation in preparing the research data.

References

- Akin, 1990 Ö. Akin; Necessary conditions for design expertise and creativity; Des. Stud., 11 (2) (1990), pp. 107–113

- Alexiou et al., 2009 K. Alexiou, T. Zamenopoulos, J.H. Johnson, S.J. Gilbert; Exploring the neurological basis of design cognition using brain imaging: some preliminary results; Des. Stud., 30 (6) (2009), pp. 623–647

- Allison, 2008 L.N. Allison; Designerly Ways of Knowing; MIT Press, Cambridge (2008)

- Batey and Furnham, 2006 M. Batey, A. Furnham; Creativity, intelligence, and personality: a critical review of the scattered literature; Genet. Soc. Gen. Psychol. Monogr., 132 (4) (2006), pp. 355–429

- Benavides et al., 2010 F. Benavides, H. Dumont, D. Istance; The Nature of Learning. Using Research to Inspire Practice; OECD Publishing, Paris (2010)

- Björklund, 2013 T.A. Björklund; Initial mental representations of design problems: differences between experts and novices; Des. Stud., 34 (2) (2013), pp. 135–160

- Brouwers et al., 2009 S.A. Brouwers, F.J.R. Van de Vijver, D.A. Van Hemert; Variation in Raven’s progressive matrices scores across time and place; Learn. Individ. Differ., 19 (3) (2009), pp. 330–338

- Bucciarelli, 1984 L.L. Bucciarelli; Reflective practice in engineering design; Des. Stud., 5 (3) (1984), pp. 185–190

- Bühner et al., 2008 M. Bühner, S. Kröner, M. Ziegler; Working memory, visual–spatial-intelligence and their relationship to problem-solving; Intelligence, 36 (6) (2008), pp. 672–680

- Carmona and Sieh, 2004 Carmona, M., L. Sieh, 2004. Measuring Quality in Planning: Managing the Performance Process. Routledge.

- Clements, 1998 Clements, D.H., 1998. Geometric and Spatial Thinking in Young Children.

- Cross, 1999 N. Cross; Natural intelligence in design; Des. Stud., 20 (1) (1999), pp. 25–39

- Cross, 2001 N. Cross; Design cognition: Results from protocol and other empirical studies of design activity; Des. Knowing Learn.: Cogn. Des. Educ. (2001), pp. 79–103

- Cross and Cross, 1995 N. Cross, A.C. Cross; Observations of teamwork and social processes in design; Des. Stud., 16 (2) (1995), pp. 143–170

- Demirbaş and Demirkan, 2007 O.O. Demirbaş, H. Demirkan; Learning styles of design students and the relationship of academic performance and gender in design education; Learn. Instr., 17 (3) (2007), pp. 345–359

- Demirbaş and Demirkan, 2003 O.O. Demirbaş, H. Demirkan; Focus on architectural design process through learning styles; Des. Stud., 24 (5) (2003), pp. 437–456

- Demirkan, 1998 H. Demirkan; Integration of reasoning systems in architectural modeling activities; Autom. Constr., 7 (2) (1998), pp. 229–236

- Demirkan, 2010 Demirkan, H., 2010. From Theory to Practice – 39 Opinions. Creativity, Design and Education. Theories Positions and Challenges. pp. 56–59.

- Demirkan and Afacan, 2012 H. Demirkan, Y. Afacan; Assessing creativity in design education: analysis of creativity factors in the first-year design studio; Des. Stud., 33 (3) (2012), pp. 262–278

- Dorst, 2011 K. Dorst; The core of ‘design thinking’ and its application; Des. Stud., 32 (6) (2011), pp. 521–532

- Dorst and Cross, 2001 K. Dorst, N. Cross; Creativity in the design process: co-evolution of problem–solution; Des. Stud., 22 (5) (2001), pp. 425–437

- Eastman et al., 2001 C. Eastman, W. Newstetter, M. McCracken; Design Knowing and Learning: Cognition in Design Education; Elsevier (2001)

- Eastman, 1969 Eastman, C.M., 1969. Cognitive Processes and Ill-defined Problems: A Case Study from Design.

- Ernst and Newell, 1969 Ernst, G.W., A. Newell, 1969. GPS: A Case Study in Generality and Problem Solving. Academic Press.

- Goel, 1994 V. Goel; A comparison of design and nondesign problem spaces; Artif. Intell. Eng., 9 (1) (1994), pp. 53–72

- Gregory and Zangwill, 1987 R.L. Gregory, O.L. Zangwill; The Oxford Companion to the Mind; Oxford University Press (1987)

- Guilford, 1967 Guilford, J.P., 1967. The Nature of Human Intelligence.

- Guttman, 1974 R. Guttman; Genetic analysis of analytical spatial ability: Raven’s progressive matrices; Behav. Genet., 4 (1974), pp. 273–284

- Hasirci and Demirkan, 2003 D. Hasirci, H. Demirkan; Creativity in learning environments: the case of two sixth grade art rooms; J. Creative Behav., 37 (1) (2003), pp. 17–41

- Hasirci and Demirkan, 2007 D. Hasirci, H. Demirkan; Understanding the effects of cognition in creative decision making: a creativity model for enhancing the design studio process; Creativity Res. J., 19 (2–3) (2007), pp. 259–271

- Heilman et al., 2003 K.M. Heilman, S.E. Nadeau, D.O. Beversdorf; Creative innovation: possible brain mechanisms; Neurocase., 9 (5) (2003), pp. 369–379

- Iivari and Hirschheim, 1996 J. Iivari, R. Hirschheim; Analyzing information systems development: a comparison and analysis of eight IS development approaches; Inf. Syst., 21 (7) (1996), pp. 551–575

- Kamphaus et al., 1997 Kamphaus, R.W., M.D. Petoskey, A.W. Morgan, 1997. A History of Intelligence Test Interpretation.

- Kaplan and Saccuzzo, 2008 R.M. Kaplan, D.P. Saccuzzo; Psychological Testing: Principles, Applications, and Issues; Wadsworth Publishing Company (2008)

- Kim and Maher, 2008 M.J. Kim, M.L. Maher; The impact of tangible user interfaces on spatial cognition during collaborative design; Des. Stud., 29 (3) (2008), pp. 222–253

- Kröner et al., 2005 S. Kröner, J.L. Plass, D. Leutner; Intelligence assessment with computer simulations; Intelligence, 33 (4) (2005), pp. 347–368

- Kunda et al., 2013 M. Kunda, K. McGreggor, A.K. Goel; A computational model for solving problems from the Raven’s progressive matrices intelligence test using iconic visual representations; Cogn. Syst. Res., 22–23 (0) (2013), pp. 47–66

- Kvan and Jia, 2005 T. Kvan, Y. Jia; Students’ learning styles and their correlation with performance in architectural design studio; Des. Stud., 26 (1) (2005), pp. 19–34

- Leutner, 2002 D. Leutner; The fuzzy relationship of intelligence and problem solving in computer simulations; Comput. Hum. Behav., 18 (6) (2002), pp. 685–697

- Linn and Petersen, 1985 M.C. Linn, A.C. Petersen; Emergence and characterization of sex differences in spatial ability: a meta-analysis; Child Dev. (1985), pp. 1479–1498

- Lohman, 1996 Lohman, D.F., 1996. Spatial Ability and G. Human Abilities: Their Nature and Measurement. pp. 97–116.

- Lu, 2015 C.-C. Lu; The relationship between student design cognition types and creative design outcomes; Des. Stud., 36 (2015), pp. 59–76

- Mackintosh and Bennett, 2005 N.J. Mackintosh, E.S. Bennett; What do Raven’s matrices measure? An analysis in terms of sex differences; Intelligence, 33 (6) (2005), pp. 663–674

- Nazidizaji et al., 2014 S. Nazidizaji, A. Tomé, F. Regateiro; Search for design intelligence: a field study on the role of emotional intelligence in architectural design studios; Front. Archit. Res., 3 (4) (2014), pp. 413–423

- Newcombe et al., 1983 N. Newcombe, M.M. Bandura, D.G. Taylor; Sex differences in spatial ability and spatial activities; Sex Roles, 9 (3) (1983), pp. 377–386

- Newell and Simon, 1972 A. Newell, H.A. Simon; Human Problem Solving; Prentice-Hall, Englewood Cliffs, NJ (1972)

- Nguyen and Zeng, 2012 Nguyen, T.A., Y. Zeng, 2012. Clustering designers’ mental activities based on EEG power. Tools and Methods of Competitive Engineering, Karlsruhe.

- O’Hara and Sternberg, 1999 L.A. O’Hara, R.J. Sternberg; Learning styles; Encycl. Creativity, 2 (1999), pp. 147–153

- Ochsner, 2000 J.K. Ochsner; Behind the mask: a psychoanalytic perspective on interaction in the design studio; J. Archit. Educ., 53 (4) (2000), pp. 194–206

- Oh et al., 2013 Y. Oh, S. Ishizaki, M.D. Gross, E.Y.-L. Do; A theoretical framework of design critiquing in architecture studios; Des. Stud., 34 (3) (2013), pp. 302–325

- Oxman, 1995 R. Oxman; Viewpoint observing the observers: research issues in analysing design activity; Des. Stud., 16 (2) (1995), pp. 275–283

- Oxman, 1996 R. Oxman; Cognition and design; Des. Stud., 17 (4) (1996), pp. 337–340

- Oxman, 2001 R. Oxman; The mind in design: a conceptual framework for cognition in design education. In: Eastman, C., McCracken, M., Newstetter, W. (Eds.). Design Knowing and Learning: Cognition in Design Education; Elsevier Science Ltd., Oxford (2001), pp. 269–295

- Oxman, 2004 R. Oxman; Think-maps: teaching design thinking in design education; Des. Stud., 25 (1) (2004), pp. 63–91

- Ozkan and Dogan, 2013 O. Ozkan, F. Dogan; Cognitive strategies of analogical reasoning in design: differences between expert and novice designers; Des. Stud., 34 (2) (2013), pp. 161–192

- Papamichael and Protzen, 1993 Papamichael, K., J.P. Protzen, 1993. The limits of intelligence in design.

- Piffer, 2012 D. Piffer; Can creativity be measured? An attempt to clarify the notion of creativity and general directions for future research; Think. Skills Creativity, 7 (3) (2012), pp. 258–264

- Potter and van der Merwe, 2001 Potter, C., E. van der Merwe, 2001. Spatial Ability, Visual Imagery and Academic Performance in Engineering Graphics.

- Prasad, 2004 S. Prasad; Clarifying intentions: the design quality indicator; Build. Res. Inf., 32 (6) (2004), pp. 548–551

- Rahmani, 2006 J. Rahmani; Reliability, validity and standardization of advanced Raven’s progressive matrices test between students of Azad University Students in Iran; Sci. Res. Psychol., 34 (2006), pp. 61–74

- Raven, 1936 Raven, J., 1936. Mental Tests Used in Genetic Studies: The Performance of Related Individuals on Tests Mainly Educative and Mainly Reproductive (Unpublished Master’s thesis). University of London.

- Raven, 2000 J. Raven; The Raven’s progressive matrices: change and stability over culture and time; Cogn. Psychol., 41 (1) (2000), pp. 1–48

- Resnick and Glaser, 1975 Resnick, L.B., R. Glaser, 1975. Problem Solving and Intelligence.

- Rigas et al., 2002 G. Rigas, E. Carling, B. Brehmer; Reliability and validity of performance measures in microworlds; Intelligence, 30 (5) (2002), pp. 463–480

- Rowe, 1987 P.G. Rowe; Design Thinking; The MIT Press, Cambridge, MA (1987)

- Rüedi, 1996 K. Rüedi; Architectural Education and the Culture of Simulation: history against the Grain; A. Hardy, N. Teymur (Eds.), Architectural Education and the Culture of Simulation: history against the Grain (1996), pp. 109–125

- Salthouse, 1987 T.A. Salthouse; Adult age differences in integrative spatial ability; Psychol. Aging, 2 (3) (1987), p. 254

- Sarkar and Chakrabarti, 2011 P. Sarkar, A. Chakrabarti; Assessing design creativity; Des. Stud., 32 (4) (2011), pp. 348–383

- Schön, 1983 Schön, D.A., 1983. The Reflective Practitioner: How Professionals Think in Action, Basic Books.

- Schön, 1985 Schön, D.A., 1985. The Design Studio: An Exploration of its Traditions and Potentials. RIBA Publications for RIBA Building Industry Trust, London.

- Schweizer et al., 2007 K. Schweizer, F. Goldhammer, W. Rauch, H. Moosbrugger; On the validity of Raven’s matrices test: does spatial ability contribute to performance?; Personal. Individ. Differ., 43 (8) (2007), pp. 1998–2010

- Shih et al., 2006 S.-G. Shih, T.-P. Hu, C.-N. Chen; A game theory-based approach to the analysis of cooperative learning in design studios; Des. Stud., 27 (6) (2006), pp. 711–722

- Snow et al., 1984 R.E. Snow, P.C. Kyllonen, B. Marshalek; The topography of ability and learning correlations; Adv. Psychol. Hum. Intell., 2 (S47) (1984), p. 103

- Sorby, 2005 S.A. Sorby; Assessment of a “new and improved” course for the development of 3-d spatial skills; Eng. Des. Graph. J., 69 (3) (2005), p. 6

- Squalli and Wilson, 2014 J. Squalli, K. Wilson; Intelligence, creativity, and innovation; Intelligence, 46 (2014), pp. 250–257

- Supreme Council for Planning, S, 2007 Supreme Council for Planning, S., 2007. General characteristics Programme and syllabus of courses – B.Sc. of Architecture. Ministry of Science Research and Technology, Tehran.

- Süß, 1996 Süß, H.-M., 1996. Intelligenz, Wissen und Problemlösen. Göttingen: Hogrefe.

- Sutton and Williams, 2010a Sutton, K., A. Williams, 2010a. Implications of Spatial Abilities on Design Thinking. Design & Complexity. Design Research Society, Montreal (Quebec), Canada.

- Sutton and Williams, 2010b Sutton, K., A. Williams, 2010b. Implications of Spatial Abilities on Design Thinking.

- Sutton and Williams, 2007 Sutton, K.J., A.P. Williams, 2007. Spatial cognition and its implications for design. International Association of Societies of Design Research, Hong Kong, China.

- Tate, 1987 A. Tate; The Making of Interiors: An Introduction; HarperCollins (1987)

- Tversky, 2005 Tversky, B., 2005. Functional Significance of Visuospatial Representations. Handbook of Higher-level Visuospatial Thinking, pp. 1–34.

- Verma, 1997 N. Verma; Design theory education: how useful is previous design experience?; Des. Stud., 18 (1) (1997), pp. 89–99

- Vyas et al., 2013 D. Vyas, G. Van der Veer, A. Nijholt; Creative practices in the design studio culture: collaboration and communication; Cogn. Technol. Work., 15 (4) (2013), pp. 415–443

- Yang, 2010 M.C. Yang; Consensus and single leader decision-making in teams using structured design methods; Des. Stud., 31 (4) (2010), pp. 345–362

Document information

Published on 12/05/17

Submitted on 12/05/17

Licence: Other
