Parameter estimation in ODEs. Modelling and computational issues

Abstract. In this work we discuss a variational approach for the determination of the parameters of systems of ordinary differential equations (ODE). We construct a model for fitting observed noisy data into the given dynamical system. Also we explain in detail the advantage of using the adjoint equation method to compute the derivatives or gradients, which are needed for the application of gradient methods and quasi-Newton algorithms to find the minimum of the cost function. In particular, we consider two classic iterative algorithms: the conjugate gradient (CG) algorithm and the Broyden-Fletcher-Goldfarb-Shanno (BFGS) algorithm. For educational purposes we try to explain several numerical and computational issues with some detail and illustrate them with the Susceptible, Exposed, Infected, Recovered, and Deceased (SEIRD) epidemiological model.

Keywords. Inverse problem; noisy data; parameter determination; adjoint method; variational approach.

1 Introduction

Systems of ordinary (and partial) differential equations are an important tool to model the physical state of a real phenomenon that arise in many areas of applied sciences and engineering. Predicting the future behaviour or allowing control of these processes requires not only accurately describing the system but also finding or improving its parameters. Estimation of unknown or inaccurate parameters in turn requires fitting partially observed noisy data or experimental measurements to the model. More generally, in the statistics and machine learning literature various methods have been employed to fit differential equations to data, from maximum likelihood approaches, [13] to Bayesian sampling algorithms, [6] or traditional deterministic approaches. Thus, parameter estimation needs efficient ODE (forward) solvers, optimization routines, statistical and possible stochastic procedures. There are several stochastic, deterministic and hybrid optimization routines [2]. Contrary to stochastic algorithms, deterministic ones are computationally efficient but they tend to converge to local minima. However, they are the departure point in many applications and in the design of better and efficient procedures. For these methods the gradient is combined with information from line searches or methods involving a Newton, quasi-Newton (low-rank) or Fisher information based curvature estimators to update model parameters, [9]. The main computational bottleneck in these algorithms is the computation of the gradient (or the curvature) of the parametric cost function. Then, efficient methods to evaluate gradients or for parametric sensitivity analysis of differential–algebraic models is important, no only for the determination of parameters, but also in other areas of application like model simplification, data assimilation, optimal control, process sensitivity, uncertainty analysis, and experimental design, among others, for a wide range of scientific and engineering problems.

In this work we concentrate in deterministic optimization procedures, mainly those for quadratic non-linear programming and based on gradient methods and quasi-Newton algorithms. Our purpose is to explain in some detail modelling, algorithmic and computational issues to a wide, and possible non-expert, audience. In Section 2 we introduce the quadratic non-linear model that incorporates noisy data into the cost function and it is adapted to the SEIRD epidemiological deterministic model, which is employed to illustrate the algorithms and their related computational issues. Section 3 is devoted to explaining the adjoint equation method for computing gradients of the given cost function and its advantages over other methods. In section 4 we describe the CG and BFGS optimization algorithms and discuss important computational issues. Numerical results are shown in Section 5 and finally, in Section 6, we give some conclusions and perspectives for future work in order to improve the model and overcome some drawbacks and algorithmic problems.

2 The quadratic model

Let be a state variable at time of a continuous time ordinary differential equation (ODE) satisfying the following initial value problem:

|

|

(1) |

where the upper dot on the left hand side denotes derivation with respect to time. The vector function depends on the parameter vector with the number of parameters. The solution at time of this problem is denoted by when its dependence of and is made explicit. Frequently, we use the short notation for simplicity, like in equation (1) above. A set of vector measurements at times in are available:

|

|

(2) |

where are independent random vectors and represent measurement errors with a multivariate Gaussian distribution having zero mean and vector variances . In many problems not all components of the state are observable, so this vector variable is decomposed in observable variables and non-observable variables , which can be regarded as orthogonal projections of over the coordinates of these observable and non-observable variables. A model to estimate the unknown parameter vector and the initial conditions , from the given measurements, relays on the minimization of the least squares objective function

|

|

(3) |

where the state variable is subject to satisfy the ODE (1). The first norm is the euclidean norm in and the norms into the sum are the euclidean norms in , number of observable variables. The fixed vector denotes an experimental measurement of the initial conditions.

Remark 1: We assume that all components of the state variable are observable at the initial time , otherwise the first term in (3) can be modified accordingly. The most used norms, in the construction of objective functions , are those of the form with , , . Choosing the most appropriate norm depends on the particular application and of the properties of the state variable. As mentioned before we use the usual euclidean norm, i.e. .

Remark 2: Observe that the quantities in (3) are vectors, so the quotients are computed component-wise. In general, if is the covariance matrix at experimental time , then

|

|

where is the precision matrix. If the symmetric matrix is positive definite, then it defines a norm in the corresponding subspace of the observable variables, as . Of course, it is possible to define the cost function (3) taking as the unitary vector for every , however the minimization process using iterative gradient methods converge faster when using the weights . The attraction ball around the global minimum is also larger in this case.

Example 1: To illustrate the previous concepts and notation we consider the SEIRD epidemiological deterministic model that describe the dynamics of the propagation of an infections disease, like COVID-19, [12]. If we have a closed constant population of individuals, at each time a compartmental model separates this population in five segments: susceptible, exposed, infectious, recovered and dead, denoted by , , , , , respectively. The system of equations they satisfy is given by

|

|

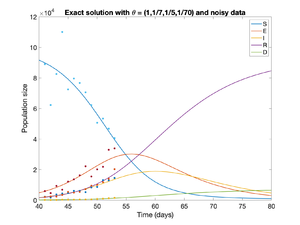

| Figure 1: The solution of the SEIRD model and synthetic measurements with white noise. |

Assuming that the total population is constant or its change is negligible during the time period, then above system must be complemented by the conservation equation

|

|

(5) |

so (1)-(5) describe an differential-algebraic system. Accordingly, we may modify the optimization model (6), adding a penalized term, obtaining the extended model:

|

|

(6) |

The penalty parameter may be proportional to . The added penalized term in (6) helps stabilizing the optimization process to estimate the initial conditions. The constant vector has components all equal to one, so the scalar product is equal to the sum of the components of .

3 Variational approach

Our goal is to find the initial conditions and the parameters that minimize the cost function (6) subject to (1) and (5), given that we have a set of noisy experimental measurements (2) at different times. Since the model is deterministic and all the variables and functions are both continuous and smooth, we can employ gradient descent methods or quasi-Newton algorithms and its variants. The most expensive and delicate task, when applying these methods, is the calculation of the gradient or Jacobians of the cost function at each iteration. Some options are available to compute these quantities as shown in [3], [14], [4], and references therein. Here, we are interested on two approaches which are based on variational calculus.

Let be the vector which contain the unknown initial conditions and the unknown vector of parameters of the dynamical system, then to first order

|

|

(7) |

where is an small increment of . If we want to be more specific we can write

|

|

(8) |

where and denote the gradients with respect and , receptively and the dot is used to denote the corresponding scalar products. Evaluating directly from (6), and simplifying, we obtain

|

|

(9) |

At the limit we obtain the gradient with the above derivatives distributed accordingly. In (9), matrices and are the Jacobians of the observable variables with respect and , respectively. In the literature, their partial derivatives are commonly called sensitivities of the observables, and their evaluation may require considerable computational effort. The first matrix is of size and the second matrix is of size , then the total number of partial derivatives that we must compute in (9) is . For instance, in the above example for the SEIRD model, if the number of observable variables is , and there are experimental measurements, we have to compute sensitivities at each iteration of a typical gradient or quasi-Newton method. The most basic method to compute these derivatives is the finite difference method (see [14]), but it has some disadvantages. As mentioned before, we concentrate only in variational methods, which may be more sophisticated but they are commonly more efficient.

3.1 Variational method to compute the sensitivities

This method is also known as the forward approach, because a forward dynamical systems is solved to compute the sensitivities. We consider first the calculation of the sensitivities with respect to the parameter , so let us denote the Jacobian by for simplicity. Then

|

|

Taking derivatives with respect to , we obtain

|

|

Then, and satisfy the following variational system:

|

Last two relations in (10) form a system of matricial differential equations and its initial condition reflects the fact that does not depend on , because

|

|

A similar variational system is satisfied by the matrix with the sensitivities with respect . Therefore, with this approach, most of the computational work is concentrated in the solution of two matricial systems of differential equations and their evaluation at the experimental times , . The amount of computational work will accumulate at each new iteration of the optimization algorithm, which in some cases may be prohibitive.

3.2 The adjoint method to compute the sensitivities

The total variation of with respect to and is given by

|

|

(11) |

Our goal is to avoid the explicit calculation of the Jacobian matrices on the right hand side. Before we proceed further, let us first introduce the following Hilbert spaces:

|

|

where the is the usual scalar product in . Then a natural inner product in is given by with induced norm given by .

Since satisfies the state equation (1), then the following inner product is null

|

|

Differentiating this expression, we get

|

|

where , and are the Jacobians of with respect to , and , respectively. Integrating by parts the left hand side, and observing that on the right hand side , , and , we obtain

|

|

and, assuming that does not depend explicitly of (it depends only through , but the variation w.r.t. is already accounted in ), then

|

|

(12) |

where is given by (11) and arise in the last term of (9). One way to avoid the explicit computation of (11) is forcing a relation with (12) by introducing the following adjoint equation

|

|

(13) |

with the Dirac measure centred at . This adjoint equation (a backward in time differential equation) contains all the information about the experimental data , and its variational formulation is obtained multiplying by a differentiable test function and integrating:

|

|

(14) |

The integral on the right hand side is equal to . Choosing in (14) and substituting the result in (12), we obtain

|

|

(15) |

where is the solution of the adjoint equation. Finally, the substitution of this equation into (9), gives

|

|

(16) |

Therefore, the gradient of the objective function (6) is , with

|

where solves the state equation (1) and solves the adjoint equation (13).

Remark 3: Formulas (17)-(18) avoid the explicit calculation of the sensitivities, they only requiere the solution of the state equation (1) and of the adjoint equation (13), regardless of the number of experimental data, , the number of parameters, , and of the observable variables, . Furthermore, these same equations must be solved to compute either the gradient with respect or with respect , or both gradients simultaneously.

Remark 4: The solution of the adjoint equation turns out to be the Lagrange multiplier of the optimization problem, with objective function , subject to the constraint (1). To show this property, let us introduce the Lagrangian function

|

|

(19) |

where is the Lagrange multiplier associated to the given constraint, and the last term is the inner product of this multiplier with the restriction. Our goal is to compute from this expression. Formally, the second term on the right hand side of (4) is zero because the state solves the ODE, therefore . However, the differentiation of reveals additional information and gives more freedom. Doing integration by parts of the term in (4) first, and then taking the derivative with respect to , we obtain

|

|

(20) |

The first term on the right hand side is obtained directly from (6) and can be expressed as

|

|

Now, if satisfies the adjoint equation (13) then the sum of first and third terms in (20) vanish, and also the boundary term , since and . Therefore

|

|

A similar development can be applied to obtain .

3.3 Solution of the adjoint equation and computation of the gradient

The adjoint equation (13) is a system of ODE with backward in time propagation. We apply a change of variable from the symmetric relation :

|

|

(21) |

obtaining the equivalent system with forward in time dynamics

|

which can be solved with any usual numerical ODE solver like Runge–Kutta methods.

For the SEIRD model, if the observable variables are , the adjoint equation (22) takes the form

|

|

with , in (1). After solving this forward adjoint equation we recover the solution of the backward adjoint equation (13) with , or by interpolation and using (21) for other values of .

The last step to compute the gradient is the calculation of the integral in (18). Observe that

|

|

Then, using the Simpson's rule in a set of given times (nodes of the mesh or interpolated times) we have

|

|

(24) |

4 Numerical algorithms for the optimization problem

4.1 Conjugate gradient algorithm

This algorithm is one of the most important algorithms for quadratic optimization problems with positive definite Hessians and for unconstrained continuous convex optimization. It may be considered as a variant of gradient descent where the search directions are generated progressively based on the orthogonality of the residuals and conjugacy of the search directions. The conjugate directions are calculated at each iteration a linear combination of the most recent negative gradient and the last conjugate direction, as indicated in step 9 of Algorithm 1 bellow. Its computational cost is comparable to steepest descent, but it has faster convergence, specially for ill conditioned problems, [9].

|

|

Algorithm. 1 Conjugate gradient algorithm |

If for all we recover steepest descent. Some variants for computing , include:

|

|

We use the short notations F–R, P–R, H–S, D–Y, for these variants.

4.2 BFGS algorithm

This is one of the most popular quasi-Newton algorithms for nonlinear optimization. It is usually more effective because it involves information about curvature, besides the information about the gradient. The curvature information is incorporated by approximating the Hessian matrix during the iteration process, which formaly plays the role of preconditioner for the gradient. The general idea about these methods comes from the second order approximation:

|

|

with a matrix close to the Hessian. Then the so called secant condition is obtained:

|

|

Forcing the gradient to be zero (to look for a minimum), we obtain the Newton step

|

|

and the following search direction is obtained;

|

|

The Hessian and its inverse are updated at every iteration adding rank–one or rank–two matrices. The BFGS algorithm updates the approximated Hessian with a rank–two matrix as showing in step 9 of Algorithm 2 bellow.

|

|

Algorithm. 2 BFGS algorithm |

Remark 5: Another very popular method for least squares problems is the Gauss-Newton method and it variant, the Levenverg-Marquadt method (see [9]), where the Jacobian of the residual vector with respect to and must be computed at each iteration. These partial derivatives can also be computed with variational methods.

4.3 Line search

The most critical step in Algorithms 1 and 2 is the solution of the one dimensional optimization problem at step 4. This is not a trivial step and requires careful treatment. There are several algorithms for this problem, the most common are line search methods and trust region methods. Some classic references are [8] and [9], or the more recent one [15], while some publications, e,g, [1] and [10], show that this topic is still under development.

Given that we have a efficient way to compute the derivative , we may use the secant method, whose iteration formula is

|

|

(25) |

with two initial values and , . The initial value takes into account a proper scaling (see step 5). Computing requires solving the state equation (1) with initial conditions and parameter , obtaining a solution that we call , and then solving the corresponding adjoint equation (13) with and , obtaining the solution . Thus

|

|

(26) |

Remark 6: The secant method for line search may be improved, incorporating standard bracketing strategies that keeps track of upper and lower bounds for the location of the root [1]. We will try this enhancement in a future work.

Remark 7: Newton's method for line is also possible. However, it is more costly because we must compute , i.e. the derivative of (26) with respect to . This operation involves the solution of another two systems of ODE at each iteration: one forward-in-time problem (related to the state equation) and a backward-in-time problem (related to the adjoint equation), where the Jacobians and must be evaluated at different values. We leave this task for a future work.

5 Numerical results for the SEIRD model

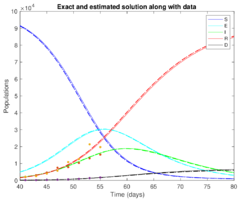

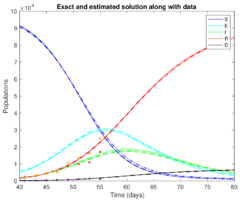

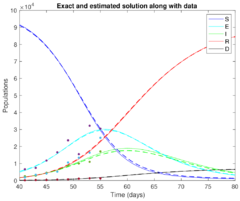

To validate the fitting model and the proposed optimization algorithms we consider the SEIRD model, described in Section 2. Our base or reference true solution is the one obtained numerically in the time interval is (days), parameters , initial condition and total population , shown in Figure 1. Synthetic data is generated adding white noise to the true solution with the random Gaussian generator of Matlab, with zero mean and standard deviations at the times where we suppose to have experimental measurements. The proposed model and numerical algorithms are tested at three levels of noise, 0.05, 0.1, and 0.2. We will divide the experiments in two parts: 1) only the vector parameter is unknown, 2) both the initial conditions and the vector parameter are unknown.

Example 2: Case when is known and is unknown, .

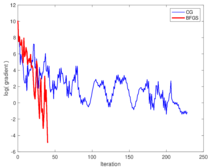

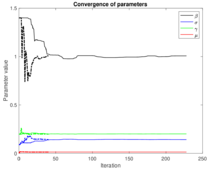

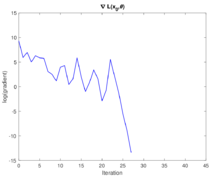

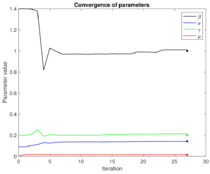

In many problems we are interested in recovering the vector of unknown parameters , assuming that we are given the exact initial conditions and the experimental data at the corresponding times . We consider synthetic experimental noisy data with , where the observable variables are in the time window , . Table 2 shows the numerical results obtained with the CG-algorithm (F-R variant) and the BFGS-algorithms, with initial guess and tolerance to stop the iterations. The relative error is computed component-wise.

| Method | CG (F-R variant) | BFGS |

| Data time window | , | , |

| , no. iters. | , 168 | , 14 |

| Computed | ||

| Relative error |

|

|

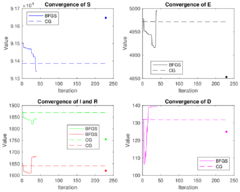

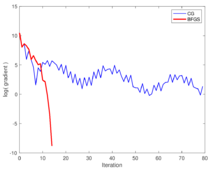

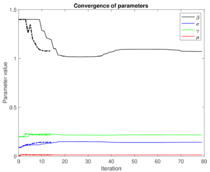

| Figure 2: Left: graph of against iteration value . Right figure: Convergence of with respect to iteration for CG (continuous lines) and for BFGS (dashed lines). | |

|

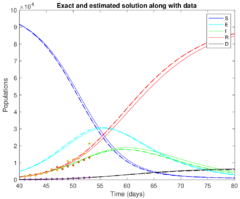

| Figure 3: The dynamics of the true solution (continuous lines) and the one obtained with computed (dashes lines), along with noisy data for the observable variables (points). |

Example 3: Case when both and are unknown, .

This problem needs a more careful treatment, we now have to compute nine parameters instead of four and the scale of both unknowns is different: may have components of the order of while has components of the order at most . The initial guess to start iterations is the same for both algorithms (CG and BFGS). However, the election of the initial guess is more subtle. We first fix the value of in model (6) with the following formula

|

|

(27) |

where is obtained from the noisy data at . The adjustment in (3) ensures that the sum of the components of be equal to . Observe that is not guaranteed to be equal to , because experimental data have inherent noise. Finally, we choose for the CG algorithm and for the BFGS algorithm. The CG algorithm does not always converge properly with an arbitrary initial value like . Table 2 summarize the numerical results.

| Method | CG (P–R variant) | BFGS |

| Data time window | , | , |

| , no. iters. | , 229 | , 41 |

| Computed | ||

| Relative error | ||

| Computed | ||

| Relative error |

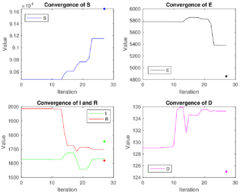

This time the CG algorithm does not admit tolerances smaller than and also the P-R variant to compute at step 8 turn out to be more efficient than F-R. We observe that the window time for experimental data is wider but with less data points for both algorithms. The computed obtained with both algorithms is almost the same, but the computed exhibits more discrepancy. This lack of stability on the estimation of initial conditions from noisy data is already known by the scientific community. In fact, if is Lipschitz continuous with constant , then the sensitivity of the solution of the system (1) with respect to initial conditions is given by .

Figures 4 and 5 show information about the convergence of both methods like in the previous example. We have only added in Figure 5 (left) a plot that shows convergence of . The main difference with respect to the previous example is that convergence not only is slower for both methods but also it is not as smooth as before. The convergence curves oscillate a lot more due to a destabilization effect of the unknown initial conditions. However, Figure 5 (right) shows that the overall numerical reproduction of the true solution is much better than in the previous example (all curves are very close to each other).

|

|

| Figure 4: Left: gradient behaviour obtained with the CG (blue line) and the BFGS (red line) algorithms. Right: convergence of with CG (continuous lines) and BFGS (dashed lines) algorithms. | |

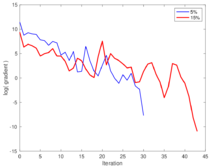

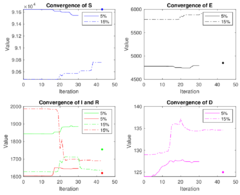

Example 4: This last example includes numerical results obtained with the BFGS algorithm only, but with perturbations for the generation of synthetic noisy measurements. Table 4 summarizes the numerical results.

| Data time window | , | , |

| , no. iters. | , 30 | , 43 |

| Computed | ||

| Relative error | ||

| Computed | ||

| Relative error |

|

|

| Figure 7: Left: convergence of for 5% noisy data (continuous lines) and 15% noisy data (dashed lines). Right: dynamics of the true solution (continuous lines) and of the numerical solution obtained with 5% noisy data (dashed lines) and 15% noisy data (dash-pointed lines). Here we only show the points with 15% noisy data (see Table 2). | |

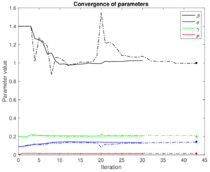

Another way to enhance convergence of the optimization algorithms is adding observable variables. For instance, adding as observable variable for the case of 15% noise and using the same numerical parameters in Table 4, the BFGS algorithm converges in 27 iterations for the given tolerance. The best improvement, besides the faster convergence, is the estimation of the initial conditions, as shown in Table 4

| Parameter | Computed | Relative error |

|

|

| Figure 8: Left: plot of against iteration obtained with the BFGS algorithm for 15% noisy data and observable variables . Right: convergence of . | |

|

|

| Figure 9: Left: convergence of for 15% noisy data and observable variables . Right: dynamics of the true solution (continuous lines) and of the numerical solution (dashed lines). | |

6 Conclusions

We have introduced a deterministic model for fitting observed noisy data into a given dynamical system to find initial conditions and the parameters of the associated system of ordinary differential equations. The classical CG and BFGS optimization algorithms are employed to minimize the quadratic non-linear cost function. It is shown the advantage of using the adjoint equation approach to find the derivatives or gradients. We explain with some detail the implementation of this methods and algorithms with the SEIRD epidemiological model. However, this approach can be equally applied to other problems modelled by ODEs.

Similar numerical results are obtained with both algorithms, CG and BFGS using the same tolerance to achieve a given accuracy, but as expected the BFGS algorithm has better convergence properties and it is more robust. Numerical results show that more experimental data points and more observable variables increase the convergence properties of these algorithms. On the other hand, the higher the noise of the experimental data the slower is the convergence of the optimization algorithms. The main drawback of the proposed methodology is that it is sensitive to the location of noisy data and also to the initial guesses for initial conditions. However, if the algorithms converge properly, then the numerical results obtained are more accurate when is also estimated along with .

For future work, we want to overcome some difficulties or deficiencies that arise with the proposed model and numerical algorithms. First, we must include explicitly into the fitting model (6) the positivity constraint of the unknown parameters, and , specially for those that are relatively small and extend the proposed algorithms accordingly, like in [7]. The inherent instability and difficulty to find the initial conditions may be fixed incorporating the technique of multiple shooting, e.g. [5], [11]. Concerning the efficiency of optimization algorithms, we still need to test the Gauss-Newton method and if necessary its variant, the Levenberg–Marquardt algorithm. Finally, as mentioned before, the line search strategy is crucial for gradient descent algorithms. We may improve the performance of the secant method incorporating bracketing strategies like in [1], or trying the Newton's method as mentioned in remark 7.

Acknowledgements

We want to acknowledge the Department of Mathematics at Universidad Autónoma Metroprolitana – Izatapalapa and to CONACyT for the support for this research work.

BIBLIOGRAPHY

[1] I. K. Argyros, M. A. Hernández-Verón, M. J. Rubio, M. J., On the Convergence of Secant-Like Methods, in Current Trends in Mathematical Analysis and Its Interdisciplinary Applications (2019) 141–183.

[2] J. R. Banga, C. G. Moles, A. A. Alonso, Global optimization of bioprocesses using stochastic and hybrid methods, in: Frontiers in global optimization, Springer (2004) pp. 45–70.

[3] J. Calver and W. Enright, Numerical methods for computing sensitivities for ODEs and DDEs, Numerical Algorithms 74(4) (2017) 1101–1117.

[4] Y. Cao, S. Li,L. Petzold, R. Serban, Adjoint sensitivity analysis for differential algebraic equations: the adjoint DAE system and its numerical solution. SIAM J. Sci. Comput. 3(24) (2003), 1076–1089.

[5] F. Carbonell, Y. Iturria-Medina, J.C. Jimenez, Multiple Shooting-Local Linearization method for the identification of dynamical systems, Communications in Nonlinear Science and Numerical Simulation, 37 C (2016) 292–304.

[6] Calderhead, B., Girolami, M. Estimating Bayes factors via thermodynamic integration and population MCMC Comput. Stat. Data Anal. 53 (12), (2009), 4028–4045.

[7] M. Victoria Chávez, L. Héctor Juárez, Yasmín A. Ríos, Penalization and augmented Lagrangian for OD demand matrix estimation from transit segment counts, Transportmetrica A, Transport Science, 15(2) (2019), 915–943.

[8] J. E. Dennis and Robert B. Schnabel, Numerical Methods for Unconstrained Optimization and Nonlinear Equations, Englewood Cliffs: Prentice-Hall. (1983).

[9] Jorge Nocedal, Stephen J. Wright, Numerical Optimization, New York: Springer, 1999.

[10] I. F. D. Oliveira, R. H. C. Takahashi, An Enhancement of the Bisection Method Average Performance Preserving Minmax Optimality, ACM Transactions on Mathematical Software. 47(1) (2020) 5:1–5:24.

[11] Ozgur Aydogmus, Ali Hakan TOR, A Modified Multiple Shooting Algorithm for Parameter Estimation in ODEs Using Adjoint Sensitivity Analysis, Applied Mathematics and Computation Volume 390(1), (2021) 125644.

[12] Elena L. Piccolomini and Fabiana Zama, Monitoring Italian COVID-19 spread by an adaptive SEIRD model, medRxiv preprint doi: https://doi.org/10.1101/2020.04.03.20049734, April 6, 2020.

[13] Ramsay, J., Hooker, H., Campbell, D., Cao, J. Parameter estimation for differential equations: a generalized smoothing approach. J. R. Stat. Soc. Ser. B 69 (5), (2007) 741–796.

[14] B. Sengupta, K.J. Friston, W.D. Penny, Efficient gradient computation for dynamical models, NeuroImage 98 (2014) 521–527.

[15] Wenyu Sun, Ya-Xiang Yuan, Optimization Theory and Methods:Nonlinear Programming. New York: Springer, 2006.

Document information

Published on 26/11/22

Submitted on 19/09/22

Licence: CC BY-NC-SA license