Abstract

This work explores the use of stabilized finite element formulations for the incompressible Navier-Stokes equations to simulate turbulent flow problems. Turbulence is a challenging problem due to its complex and dynamic nature and its simulation if further complicated by the fact that it involves fluid motions at vastly different length and time scales, requiring fine meshes and long simulation times. A solution to this issue is turbulence modeling, in which only the large scale part of the solution is retained and the effect of smaller turbulent motions is represented by a model, which is generally dissipative in nature.

In the context of finite element simulations for fluids, a second problem is the apparition of numerical instabilities. These can be avoided by the use of stabilized formulations, in which the problem is modified to ensure that it has a stable solution. Since stabilization methods typically introduce numerical dissipation, the relation between numerical and physical dissipation plays a crucial role in the accuracy of turbulent flow simulations. We investigate this issue by studying the behavior of stabilized finite element formulations based on the Variational Multiscale framework and on Finite Calculus, analyzing the results they provide for well-known turbulent problems, with the final goal of obtaining a method that both ensures numerical stability and introduces physically correct turbulent dissipation.

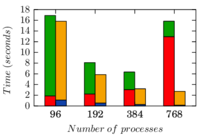

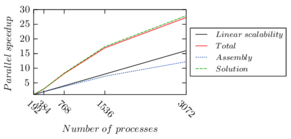

Given that, even with the use of turbulence models, turbulent flow problems require significant computational resources, we also focused on programming and parallel implementation aspects of finite element codes, and in particular in ensuring that our solver can perform efficiently on distributed memory architectures and high-performance computing clusters.

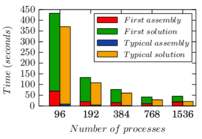

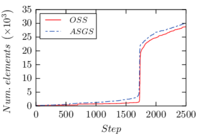

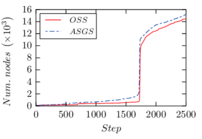

Finally, we have developed an adaptive mesh refinement technique to improve the quality of unstructured tetrahedral meshes, again with the goal of enabling the simulation of large turbulent flow problems. This technique combines an error estimator based on Variational Multiscale principles with a simple refinement procedure designed to work in a distributed memory context and we have applied it to the simulation of both turbulent and non-Newtonian flow problems.

1 Introduction

1.1 Background and motivation

1.1.1 Turbulence modeling

Turbulent flows are a subject of paramount interest in engineering, physics and earth sciences, since they govern many phenomena involving moving fluids like volumes of air or water. Turbulent phenomena appear in flows of all sizes, from large scale processes such as the atmospheric flow of air or oceanic currents to local problems such as wind loads over lightweight structures or the efficiency of a wind turbine. However, the complex nature of turbulent flows means that they can rarely be solved analytically and, as a result, we have to rely on experimental studies or numerical simulations for their analysis.

Experimental studies, which typically involve either campaigns of field measurements or wind tunnel tests, tend to be expensive and complex endeavors (see for example [1]) and, although they are an essential tool in providing a deeper understanding of the problem, tend to provide localized measurements such as point recordings or line averages, which are limited to the regions where sensors are placed, or indirect measurements, such as trajectories of tracers. From this point of view, numerical modeling represents a less costly alternative to experiments with the added advantage of providing a global image of the velocity and pressure fields for the entire volume of interest. Unfortunately, numerical study of turbulent problems is not without difficulty. The fundamental problem in turbulent flow simulations is the wide range of time and spatial scales involved [2], which have to be taken into account in the analysis.

Taking as an example the flow around an obstacle, the largest fluid motions, or eddies, in the flow can be assumed to have a characteristic size comparable to the size of the obstacle itself. Under a turbulent flow regime, coherent flow structures tend to break and the energy associated to these motions is transferred to successively smaller eddies until, for very localized fluctuations, viscous effects dominate and the energy is dissipated. This process can be formalized in terms of the energy associated to motions of a characteristic wavelength in what is known as the Kolmogorov energy cascade [3,4,2] and the size at which viscous dissipation dominates is defined as the Kolmogorov length .

Turbulent flows can be characterized by the Reynolds number , a dimensionless parameter indicative of the balance between inertial and viscous forces in the problem,

|

|

(1.1) |

where is the fluid's density and its dynamic viscosity and is a characteristic velocity of the flow. The Kolmogorov length scale can be related to the largest motions of the flow by means of the Reynolds number [2] as

|

|

(1.2) |

To properly represent the full range of turbulent motions in a numerical simulation, the size of the problem domain would be a multiple of the size of the obstacle on each dimension, while the grid size would have to be small enough to capture motions on the Kolmogorov scale. For a problem, this implies that the total number of elements would be on the order of . Keeping in mind that the Reynolds number in engineering applications is typically on the order of , the number of elements involved in a typical simulation would put it beyond the reach of most computers and scientific computation clusters. As a result, this approach, which is known as Direct Numerical Simulation (DNS) is only applicable to problems with a moderate Reynolds number and is not commonly used in engineering.

The only viable option in most cases is to introduce a turbulence model to reduce the computational requirements of the simulation. This typically involves neglecting the smallest motions and modifying the problem to include terms that model the effect of the neglected motions on the problem. The addition of such model increases the minimum element size required to simulate the problem, extending the range of problems that can be simulated with given computational resources. Turbulence models can be grouped into two broad families, Reynolds Averaged Navier-Stokes (RANS) and Large Eddy Simulation (LES).

RANS models are based on rewriting the problem in terms of time-averaged variables (or time- and space-averaged, if there are spatial directions of homogeneity) and introducing a model for the effect of turbulent motions on the averaged solution. This model has a dissipative effect, removing energy from the average motions, and most commonly takes the form of an added viscosity, called turbulent or eddy viscosity. The temporal and spatial distribution of the eddy viscosity is given by the specific model and is usually determined by the solution of one or more additional equations that describe the production and transport of turbulent quantities. Typical examples of RANS models are the Spalart-Allmaras model [5], which involves one additional equation, or the – [6,7] and – models [8], which introduce two additional variables.

LES models are based on introducing a spatial filter to smooth out high wave number fluctuations in the solution and writing the problem equations in terms of filtered quantities. This allows the separation of larger flow features, influenced by the geometry of the fluid domain and the boundary conditions, from the smaller motions, which are assumed to have an universal behavior and thus can be replaced by local dissipation model, which is problem-independent. The most well known member of this family of models is the Smagorinsky method [9], but many variants and alternatives exist [2].

Note that, while the use of turbulence models makes the simulation of turbulent flows possible in terms of mesh resolution and computational resources, many flows of practical interest still require fine meshes and long simulation times in order to obtain statistically steady solutions and reliable statistical measurements. As a result, the simulation of complex flows at high Reynolds number still requires significant computational resources, frequently beyond what a single computer can provide, and has to be solved using High Performance Computing (HPC) clusters.

1.1.2 Stabilized finite element formulations for turbulent flows

A second problem frequently encountered in the finite element simulation of incompressible flows is the apparition of numerical instabilities, which are a consequence of the incompressibility constraint and the effect of the convective term in the equations for convection-dominated flows (see for example [10] for an introduction to this topic). In the context of finite elements, one possible solution for this issue is the use of stabilization techniques, where the original Galerkin weak form of the problem is modified to obtain a stable formulation. The modified equations are characterized by the addition of new terms that are typically dissipative in nature, in a way that ensures consistency, that is, that the modified problem tends to the original equations as the simulation mesh is refined.

For turbulent flows in particular, the interaction between turbulence models and stabilization terms is an active area of research. While they have clearly different origins and motivations (turbulence models are typically motivated using physical arguments, while stabilization methods are purely numerical in nature), both types of methods have a dissipative effect on the solution, which has raised some questions regarding their interaction or the possibility of using a unified formulation to provide both stability and turbulence modeling. This subject has been studied for example for Streamline-Upwind Petrov-Galerkin (SUPG) stabilization [11,12], Finite Calculus (FIC) based formulations [13,14] and stabilization techniques within the Variational Multiscale (VMS) framework.

In the case of VMS formulations, the stabilized problem is motivated by a separation of scales, differentiating a large scale part of the solution, that can be represented by the finite element mesh, from a small scale part, which remains unresolved, by means of a projection of the solution onto the finite element mesh [15,16]. This concept has intriguing parallels with the spatial filtering introduced in LES methods and can be understood as a mesh-induced filtering. This has motivated multiple investigations studying the use VMS formulations in turbulence modeling [17,18,19,20,21,22,23,24,25,26,27], analyzing their relationship both from the theoretical point of view and through its application to the simulation of turbulent flows of interest.

1.2 Objectives and methodology

The main topic of this monograph is the study of the applicability of stabilized finite element techniques to the solution of turbulent flow problems of practical interest in engineering, with a special focus in analyzing the behavior of different formulations as a LES-like turbulence model. The research is organized around two main lines: The first of them is centered on stabilized finite element formulations and their relationship with turbulence modeling, while the second focuses on computational techniques to facilitate the calculation of complex flows in large simulation domains.

Regarding the relationship between stabilized formulations and turbulence modeling, we choose to focus on two types of methods. The first of them is the VMS framework, where we intend to analyze the behavior of two well-known formulations, algebraic subgrid scales and orthogonal subgrid scales, as well as dynamic subscales, while the second is a new formulation derived from the application of the FIC balance to the incompressible Navier-Stokes equations. We have implemented a fluid solver based on the different techniques and used it to analyze their performance.

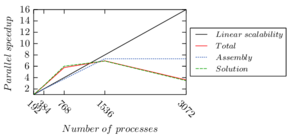

The first topic that will be discussed in terms of the computational aspects of the present work is the use of parallel programming techniques for the simulation of finite element problems in a distributed memory context. In this sense, all developments have been made with a parallel implementation in mind, choosing algorithms and implementations that are suited to a parallel implementation in preference to alternatives that are not, and the parallel capabilities of the implemented code have been evaluated.

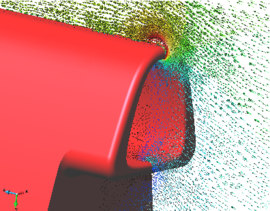

A related topic in relation to the calculation of large problems is the use of adaptive mesh refinement techniques to simplify the mesh generation procedure and reduce the overall number of elements required to perform the simulation. We have developed a technique based on a simple refinement technique that can work in a distributed memory environment, which has been implemented to work in combination with the fluid solver. During the course of the present work we also found the opportunity to apply these refinement techniques to non-Newtonian flow problems. The results of our investigation in this area will also be presented.

The methods presented in this document have been implemented within the open source Kratos Multiphysics finite element framework [28,29], which is based on C++ and Python and has parallel calculation capabilities, some of which have been developed or expanded as part of the present work. This implementation has been used to simulate all the examples considered and generate the presented results.

1.3 Outline of this document

The present document is organized along the main goals outlined above. In Chapter 2 we introduce VMS methods, with a special emphasis on dynamic subscale approximations, and review the arguments that have been used in the literature to relate them to LES formulations. Our tests have driven us to propose a variant of the method characterized by a new model for the pressure subscale. We use both the standard approach and our modified formulation to simulate a turbulent channel flow benchmark problem, which allows us to compare our results to DNS data.

In Chapter 3 we present a new stabilized formulation for incompressible flows based on the FIC balance principles. This formulation is also used to simulate the channel flow problem, as well as other benchmark examples.

Chapter 4 is concerned with different aspects of the parallel implementation of the finite element flow solver that has been used to perform the simulations presented in the previous chapters, including tests of the parallel performance of the solver. In the same chapter we present the approach we used to record spatial averages and variances in a distributed memory context.

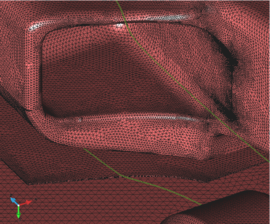

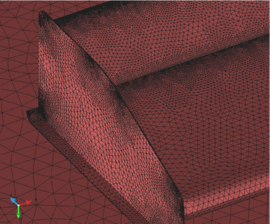

Chapter 5 presents an adaptive mesh refinement technique that combines a parallel refinement algorithm based on edge subdivision with an error indicator motivated by the VMS formulation. This technique is then used to solve both turbulent and non-Newtonian flow cases.

Finally, in Chapter 6 we summarize the work and present its main conclusions, as well as proposing future lines of research.

2 Variational multiscale stabilization for turbulent flow problems

2.1 Introduction

Variational multiscale (VMS) methods [15,16] provide a theoretical framework for the design of stabilized finite element formulations based on the separation of the solution into resolved and unresolved parts, which is achieved through the definition of large scale and small scale solution spaces. The projection of the original equations onto the large scale space gives an equivalent problem that depends on the small scale variables, while the projection of the original equations onto the small scale space is used to motivate a model for the effect of the small scale variables, which are not solved, to the large scale solution.

This methodology has interesting parallels with LES turbulence models, which use a spatial filter to introduce a separation between the resolved and unresolved parts of the solution. This fact has motivated research into the relationship between VMS stabilized formulations and LES methods, and in particular on the possible use of VMS methods as turbulence models. In the present chapter we review some current trends in VMS formulations, including the derivation of algebraic and orthogonal models for the small scales, and the arguments that have been used to relate them to turbulence modeling. We also direct our attention to dynamic subscale models [30,20], which have been shown to provide good results in turbulent flow simulations without requiring the use of an explicit turbulence model [25,31,27].

In spite of the success of the method in the mentioned tests, some aspects of the behavior of VMS methods as turbulence models are not completely understood. In particular, the solution of the problem has a degree of dependency on the precise definition of the stabilization parameters (see for example [31]) and on using or neglecting the pressure small scale [27]. We investigate this issue through the analysis of the results of turbulent channel flow simulations. Additionally, we propose a new model for the pressure small scale, which we found to provide more accurate results in our simulations.

The chapter is organized as follows: in the next pages we review the state of the art for the formulations of interest, introducing the Galerkin weak form of the incompressible Navier-Stokes equations Section 2.2 and using it Section 2.3 to obtain the complete stabilized equations for the different variants that will be considered in this work. We continue by presenting the arguments that have been used to justify their use as a turbulence model in Section 2.4. In Section 2.5 we present the finite element solver that we have implemented based on the described VMS formulations. In Section 2.6 we present our proposal for a new model of pressure small scale and in Section 2.7 we use both this and standard VMS methods to simulate a turbulent channel flow test case, analyzing the solution in terms of different measured statistics of the flow and their comparison with reference DNS data. Finally, we present our conclusions in Section 2.8.

2.2.1 Problem statement

The incompressible Navier-Stokes equations state the balance of linear momentum and mass in a fluid domain , given by

|

where is the fluid velocity, its density, represents the stress tensor and the external forces acting on the domain.

The problem described by Eqs. 2.3 and 2.4 must be completed with suitable initial and boundary conditions. We denote the domain boundary as and introduce its partition into Dirichlet () and Neumann () parts, verifying and . The initial and boundary conditions for the problem can be expressed as:

|

where is the initial velocity field, represents the imposed velocity on the Dirichlet boundary, the outer normal vector and the imposed traction acting along the Neumann boundary. Note that the initial velocity must be chosen to be divergence free to ensure that Eq. 2.4 is verified at all times.

For Newtonian fluids, the stress tensor can be related to the fluid velocity and pressure using the constitutive relation

|

|

(2.8) |

where is the second order identity tensor, the fluid's viscosity and the symmetric gradient of velocity, defined as

|

|

(2.9) |

To obtain a finite element formulation for the Navier-Stokes equations we need to rewrite them in weak form. We multiply Eq. 2.3 by a velocity test function , defined to be zero on the Dirichlet boundary , and Eq. 2.4 by a pressure test function . Integrating the resulting expressions over the fluid domain we obtain

|

The Neumann boundary condition can be introduced in the formulation by using the product rule on the stress term in Eq. 2.10 and expressing the resulting divergence as a boundary integral, which allows us to write

|

|

(2.12) |

Substituting Eq. 2.12 into Eq. 2.10 and introducing the definition of the stress tensor in Eq. 2.8 we obtain

|

Eqs. 2.13 and 2.14, together with the initial and Dirichlet boundary conditions, allow us to state the weak form of the problem. In addition, they also impose regularity requirements on the solution, test functions and problem data, as it must be ensured that all the integrals that appear in the equations remain bounded. This is only briefly discussed here, directing the reader to more specific literature on this topic for a rigorous formulation (see for example [32,33]). In general, for any two given functions we want to ensure that

|

|

We define the norm of a function as

|

|

and functions with bounded norm are said to be square-integrable. The space of functions that are square-integrable in is denoted as .

Although no proof is given here, it can be shown that, for any given time instant , it is sufficient to require that both the momentum test function , the velocity solution and their first order derivatives belong to . The space of functions verifying this property is a Hilbert space commonly denoted as in functional analysis. For the mass conservation test function and the pressure solution , it is enough to require them to be square-integrable, as their spatial derivatives do not appear in Eqs. 2.13 and 2.14.

Considering that must verify the Dirichlet boundary condition when evaluated in the Dirichlet boundary and is zero in by definition, the solutions (for any fixed instant in time) and test functions must be contained in the spaces of functions given by

|

|

Likewise, the external forces must be such that the domain integral in the right hand side of Eq. 2.13 is well defined. Given that , this is equivalent to requiring the forces to belong to the dual of that space, denoted as . There is a similar requirement on the traction , as it has to be integrable when multiplied by the test function restricted to the Neumann boundary, but it will not be formally stated here.

Finally, to make sure that the dynamic problem is well-posed it is sufficient to require that and square-integrable along the time interval of the problem. This is denoted as . In the case of the pressure it is enough to enforce that the norm is square-integrable in time, that is, .

2.2.2 Conservation properties

Before we introduce the variational form of the problem, there is an important remark to be made about the convective term in the momentum equation Eq. 2.3. As long as the velocity field is strongly (point-wise) divergence-free, the following three expressions are identical:

|

|

(2.15) |

or, in variational form,

|

The three expressions in Eq. 2.15 are respectively known as non-conservative, conservative and skew-symmetric forms of the convective term. However, in practice, the expressions in Eq. 2.16 are not equivalent for the discrete problem, as the velocity solution is only divergence-free in a weak (integral) sense. In fact, as shown in [34], each of them gives rise to a variational problem with different conservation properties. While the continuous Navier-Stokes equations enforce the balance of linear and angular momentum, as well as kinetic energy, none of the discrete variants ensures conservation of the three quantities at the same time. According to the analysis in the same reference, the variational form resulting from each expression conserves the quantities listed in Table 1.

| Convective term | Linear momentum | Angular mom. | Kinetic energy |

| Non-conservative | For equal - interpolations | No | No |

| Conservative | Yes | Yes | No |

| Skew-symmetric | For equal - interpolations | No | Yes |

In the present work we have used the skew-symmetric form, as the kinetic energy balance is an important concern in the present study and something that can be measured to to gain insight on turbulent flow simulations. In fact, we can report that using a formulation based on the skew-symmetric form resulted in a better fit to DNS data in our preliminary tests for the channel flow simulations presented in Section 2.7.

2.2.3 Galerkin weak form

Using the skew-symmetric form for the convective term, the Galerkin weak form of the Navier-Stokes problem can be stated as

Find , such that, ,

|

|

Unfortunately, this problem is not straightforward to solve using finite elements, as its discrete version is not numerically stable. In fact, to ensure that that problem of finding a pair that satisfies the above weak form for all suitable choices of has a stable solution, the discrete spaces in which the solution is sought must verify the inf-sup or Ladyzhenskaya-Babuska-Brezzi (LBB) condition, given by

|

|

(2.18) |

where and are spaces containing the velocity and pressure solutions, respectively, and is a positive constant. In practice, satisfaction of the LBB condition implies the use of a higher order interpolation for velocity than for pressure, as is done for example in Taylor-Hood elements [35].

Numerical instabilities in the discrete solution of the Navier-Stokes may also appear in convection-dominated flows, that is, when the convective term is large in relation to the viscous term. In turbulent flows, this issue can be understood from a physical point of view by noting that viscous dissipation occurs predominantly due to high velocity gradients at small scales. If the finite element mesh is coarse, these gradients cannot be reproduced and dissipation is underestimated, which leads to energy accumulation on the larger scales and the eventual loss of convergence of the solution.

Both instabilities can be cured by the use of a stabilized formulation, which involves obtaining a modified weak form not restricted by the inf-sup condition of Eq. 2.18. This has the advantage of allowing the use of equal order interpolations for velocity and pressure. Two classical stabilized formulations for the Navier-Stokes equations are known as Streamline-Upwind Petrov-Galerkin (SUPG) [36] and Galerkin-Least Squares (GLS) [37]. Other alternatives are methods within the VMS framework, which are the main subject of the present chapter, and the Finite Calculus (FIC) approach, presented in Chapter 3.

2.3 Variational multiscale stabilization

2.3.1 Scale separation

The Variational Multiscale (VMS) method, introduced in [15,38,16] is a theoretical framework for the development of stabilized finite element methods that has been used extensively during the last two decades in finite element formulations for fluid problems. The basic premise of this method is the separation of the problem variables into large scale and small scale, or subscale, values , which in our case corresponds to

|

|

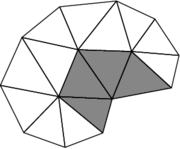

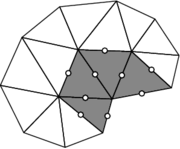

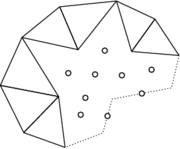

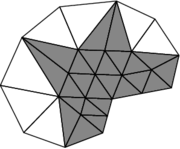

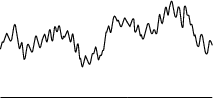

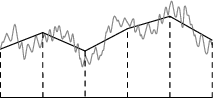

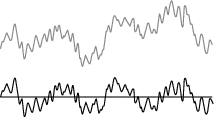

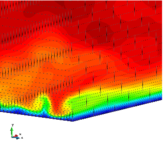

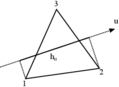

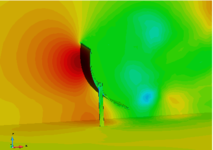

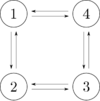

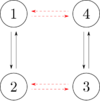

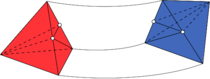

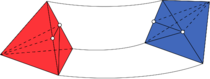

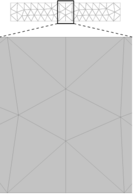

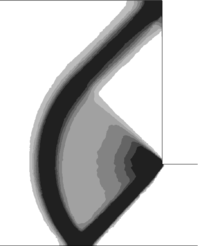

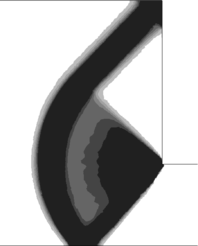

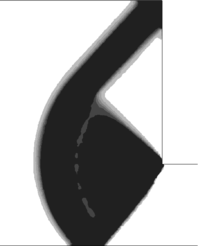

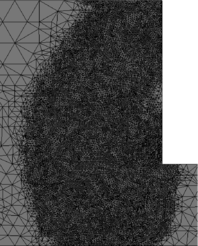

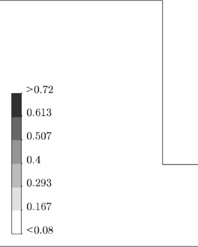

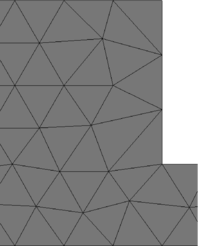

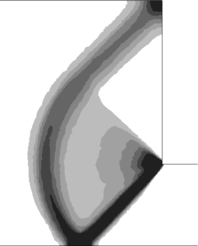

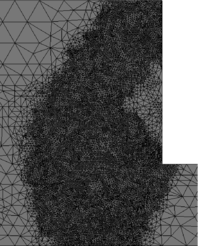

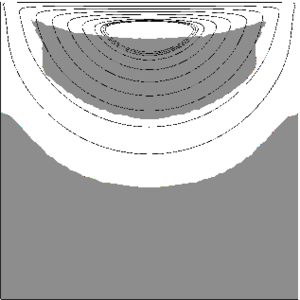

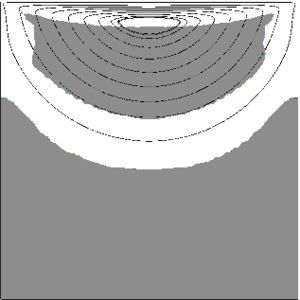

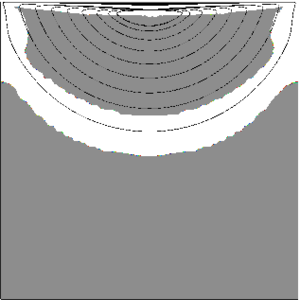

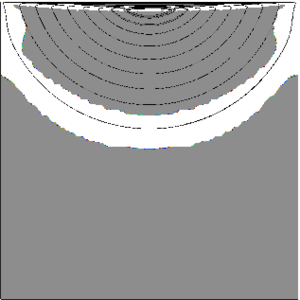

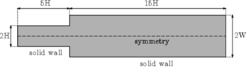

The notation chosen to represent the large scales should be understood as a reference to the finite element mesh size . In practice, scale separation is closely related to the spatial discretization used to solve the problem. This is shown graphically in Figure 1, where the solution along a line is represented. The large scales correspond to the part of the solution that can be described using the finite element interpolation, while the small scales represent fine features of the solution that cannot be reproduced due to the limitations of the discrete interpolation.

|

|

|

| (a) Continuous domain. | (b) Discrete domain. | (c) Continuous solution. |

|

| |

| (d) Large scale solution. | (e) Small scale solution. | |

| Figure 1: Scale separation. | ||

Once a finite element discretization is defined, the space containing the large scale part of the solution can be identified with that of the admissible finite element functions on the discrete domain. This implies that, instead of working with test functions and solutions defined on infinite-dimensional spaces of functions, we now seek a solution on a restricted, finite-dimensional space of admissible solutions, expressed as

|

|

This in turn allows us to define spaces containing the small scale part of the solution , . The subscale spaces complete the corresponding large scale space, that is, , . As a result, it is clear that small scale spaces are infinite-dimensional, unlike the large scale ones, and have to be approximated in order to obtain a solution. There is no single way to approximate them and, in fact, the choice of approximate space for the small scales is one of the defining features of the solution method.

We introduce the following compact notation

|

|

Note that we have modified the convective terms in to introduce a convection velocity . This notation is introduced for convenience, as it will allow us to introduce a linearized version of the operator later. Using the compact notation, the weak form of the problem can be expressed as

|

We introduce the scale separation of the problem variables in Eqs. 2.19 and 2.20. Testing against large scale functions, we can write

|

If the small scale test functions are used instead, the following expression is obtained

|

The large scale problem given by Eqs. 2.21 and 2.22 represents the finite element approximation of the original problem, now containing terms that describe the effect of the unresolved scales on the large scale solution. These additional terms cannot be evaluated in practice, as the small scale variables are not known. VMS methods are based on the definition of a model for the small scale variables, which is motivated by the small scale problem of Eqs. 2.23 and 2.24. This model can then be introduced in the large scale equations, closing the problem.

Before we apply this procedure to our problem, it is convenient to operate on Eqs. 2.21 and 2.22 to eliminate all spatial derivatives of small scale functions, obtaining an expression that depends on and , which will be modeled, but not on their gradients, which will remain unknown. We start by separating the terms involving the large and small scale parts of the solution

|

|

Integrating by parts within each element in the mesh, differential operators acting on and are moved to the test functions. To do so, we introduce the notation of to refer to the part of the problem domain corresponding to element and to denote its boundary.

|

In the present work, the boundary integrals appearing in Eqs. 2.27 and 2.28 will be neglected. This is common in VMS formulations, and can be understood as assuming that the small scale unknowns vanish on element boundaries.

In the following we express this element-by-element integration using the notation

|

|

2.3.2 Small scale equation

Now the question is to define a model for and that can be used to evaluate the domain integrals in Eqs. 2.27 and 2.28. To do this, we start from the small scale problem, given by Eqs. 2.23 and 2.24, which can be developed to read

|

Again, it is convenient to use integration by parts element-by-element on some of the terms in the momentum equation, obtaining the expression

|

|

(2.32) |

Note that the elemental boundary integrals in Eq. 2.32 vanish over internal boundaries because they involve either the exact traction or the exact velocity over the boundary, which are both continuous. Similarly, they cancel out with the corresponding traction in the Neumann boundary. As a result, all boundary terms in Eq. 2.32 can be eliminated in the following.

Rearranging terms in Eqs. 2.32 and 2.31 to separate large and small scale unknowns we can write

|

Eqs. 2.35 and 2.36 can be understood as the projection onto the space of small scales of a differential equation, in the same sense as Eqs. 2.10 and 2.11 represent the projection onto of the Navier-Stokes equations. Moreover, observing the right hand side terms of Eq. 2.35, we can see that it represents the projection of the strong-form linear momentum equation applied to the large scale part of the solution ,. The same can be said about the right hand side of Eq. 2.35, which corresponds to the mass conservation equation applied to the large scale velocity.

Denoting the projection onto the small scale spaces , with and , respectively, Eqs. 2.35 and 2.36 equation can be stated as

|

Since Eqs. 2.39 and 2.40 must hold for all admissible small-scale test functions and , they are equivalent to imposing that the small scale variables , verify the following problem in each element :

|

where and represent the residual form of the Navier-Stokes equations applied to the large scale variables

|

and and are chosen to enforce that right hand sides of Eqs. 2.41 and 2.42 belong to the corresponding small scale space.

As mentioned in the previous pages, the small scale spaces are infinite-dimensional and have to be approximated before the problem given by Eqs. 2.41 and 2.42 can be solved. In practice, the definition of an approximate small scale space corresponds to a choice of projectors and . The most straightforward possibility is to use the entire residual (without projecting to a particular space), which corresponds to considering operators , equal to the identity function or, equivalently, and . This formulation gives rise to the algebraic sub-grid scale (ASGS) method [39].

Another well-known choice consists in taking a small scale space that is orthogonal to the large scale space. If and are the projection onto the large scale spaces and respectively, then the projections in Eqs. 2.39 and 2.40 are defined as , . In this case, and are chosen to subtract from the equation the part of the residuals that belongs to the finite element space, that is,

|

This choice leads to the orthogonal sub-scale (OSS) method, presented in [40,30].

Note that, due to their definition, and belong to the space of finite element functions and can be constructed from their values on mesh nodes using standard finite element interpolation functions.

A second important remark is that the calculation of and requires knowledge of the finite element solutions , and, as a result, it is coupled to the solution of the stabilized Navier-Stokes equations. In principle, this would double the number of nodal degrees of freedom of the problem. However, in practice, given that the Navier-Stokes problem is non-linear and has to be solved iteratively anyway, the projection problem can be implemented in an staggered way, updating the projections after each non-linear Navier-Stokes iteration.

The problem for the small scales, given by Eqs. 2.41 and 2.42, is not usually solved. Instead, it is approximated by an expression of the form

|

|

(2.47) |

where second order tensor and the scalar , known as stabilization parameters, are algorithmic quantities that have to be defined to complete the method.

A motivation for this expression can be found in [30], where the parameters in Eq. 2.47 are designed to ensure that the norm of the modeled subscale variables is the same as that of the exact small scale values. A different justification, based on the approximation of the Green's function of the small scale problem, is provided in [16,21].

2.3.3 Quasi-static small scale models

While Eq. 2.47 represents the complete small scale model derived from the dynamic Navier-Stokes equations, many VMS formulations that can be found in the literature (see for example [39,41,21]) neglect the time variation of the velocity small scale model and define the small scales as

|

|

(2.48) |

This choice corresponds to the assumption that the velocity small scales adapt automatically to the large scale residual. Following the nomenclature of [39], we refer to models based on Eq. 2.48 as quasi-static subscales, as opposed to dynamic subscale models, based on Eq. 2.47.

To complete the formulation, a definition for the stabilization parameters is needed. We follow the approach of [39], where the velocity stabilization parameter is taken to be a diagonal matrix and

|

where is a characteristic length of the element and , are constants, which, for linear finite elements, are usually defined as , (this is the case for example in [42] or [34]). However, the studies presented in [31] for a turbulent channel flow in the low Mach number regime suggest that the choice of values for these parameters can have an impact on the solution. Based on the results presented in that reference and in [27], we have adopted , for our tests.

As pointed out in [20], the use of quasi-static subscales leaves open the possibility of instabilities appearing for small time steps once the problem is discretized in time. The same instability is studied in [43] for the Stokes problem, where it is shown that it can be neutralized if the stabilization parameter satisfies the condition

|

|

(2.51) |

where is the time step and is a constant. To avoid this instability, the stabilization parameter can be replaced by the modified expression

|

|

(2.52) |

although, as remarked in [20], this introduces a dependency of the solution on the time step, even for problems that result in a stationary solution.

2.3.4 Dynamic small-scale models

If, instead of the quasi-static model of Eq. 2.48, we use the dynamic model of 2.47, the time evolution of the velocity small scale has to be taken into account. This was achieved in [30,20] by introducing a time discretization for the small scale acceleration, resulting in the time-discrete small scale model

|

|

(2.53) |

Taking , which corresponds to a backward Euler time scheme, we can write a closed expression for the small scale velocity, given by

|

|

(2.54) |

As remarked in [20], the effective stabilization parameter in Eq. 2.54 is

|

|

(2.55) |

which is precisely the expression introduced as in Eq. 2.52 and prevents the apparition of instabilities due to small time steps. However, unlike in quasi-static approximations, when the problem has a stationary solution, the dependency on the time step is eliminated as, in that case, and the quasi-static model of Eq. 2.48 is recovered.

From the point of view of its implementation, the main difference between the quasi-static model of Eq. 2.48 and Eq. 2.54 is that the latter introduces the old value of the velocity small scale in the model. This means that has to be tracked in time. In practice, this implies evaluating and storing historical values for on the integration points of the finite element mesh.

2.3.5 Complete equations

Once the small scale model is defined, it can be introduced in the large scale equations, given by Eqs. 2.27 and 2.28. The most general formulation that can be obtained from the material presented in the previous section is given by

Momentum equation

|

|

(2.56) |

Mass conservation

|

|

(2.57) |

The first two rows of Eq. 2.56, in combination with its right hand side, constitute the standard Galerkin discretization of the momentum equation. The terms in the third row model the effect of the velocity small scale fluctuations and the velocity small scale itself, respectively, on the large scale equations, while the first term in the last row of Eq. 2.56 represents the effect of pressure small scales.

Analogously, the left hand side of Eq. 2.57 represents the Galerkin weak form of the incompressibility equation, while its right hand side models the effect of the small scales in mass conservation.

While Eqs. 2.56 and 2.57 represent a general VMS formulation, they can be particularized to recover several well-known stabilized methods:

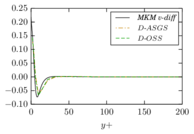

D-ASGS Dynamic algebraic sub-grid scales [20]. The algebraic approximation to the small scales is characterized by using an identity projector to define the small scale space, which implies that the projection terms and are zero. zero.

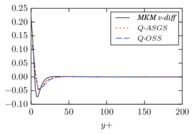

Q-ASGS Quasi-static algebraic sub-grid scales [39]. A quasi-static approximation to the small scales can be recovered by neglecting all terms involving either the small scale acceleration or the old small-scale velocities and replacing by the static stabilization parameter . Additionally, as the small scales are algebraic, projection terms and are also zero.

D-OSS Dynamic orthogonal subgrid-scales [30,20]. If the small scale space is assumed to be orthogonal to the large scale space, the integral involving and is zero, as it corresponds to the product of two terms belonging to orthogonal spaces.

Q-OSS Quasi-static orthogonal subgrid-scales [30] can be recovered from the original expression by neglecting all terms involving or and replacing by .

For OSS formulations, the projections and are defined as the projections of the momentum and mass residuals, respectively, onto the finite element mesh. Applying this definition, they can be obtained as the solution of the projection problem

|

The only question that remains to close the formulation is to provide a formal definition for the auxiliary convection velocity , something that we have been deliberately avoiding up to this point. This variable was introduced to linearize the convective term, and in practice we can identify it with the last known value of velocity, which corresponds to a Picard linearization of the momentum equation. However, in a context of scale separation, should we use the large scale velocity or the full velocity ?

Both choices result in viable stabilized formulations. The classical approach is to use only the large scale velocity , as it corresponds to the finite element solution. However, from a theoretical point of view, using the full velocity has interesting implications for turbulence modeling, which will be the main focus of Section 2.4.

2.4 VMS methods and Large Eddy Simulation

The concept of scale separation introduced by VMS formulations has some parallels with Large Eddy Simulation (LES) methods for the simulation of turbulent flows. LES turbulence models are also based on separating large and small motions in the flow, but in the LES approach this is is typically achieved through the introduction a filtering operation [2], defined as

|

|

(2.61) |

where is a filter function defined on the interval and is known as the filter width.

Applying a filter function to Eqs. 2.3 and 2.4 allows us to write the filtered Navier-Stokes equations, given by

|

where we have used the conservative form of the convective term and is the subgrid stress tensor, defined as

|

|

(2.64) |

The subgrid stress tensor represents the effect of the small scale motions on the large scale part of the solution and and is an unknown, since the quantity cannot be obtained from the filtered velocity . This means that Eqs. 2.62 and 2.63 do not represent a closed expression. However, if the filter width is chosen small enough that the filtered-out small scale motions can be assumed to lie in the inertial subrange, Kolmogorov's hypotheses [3,4] tell us that they have an isotropic, universal (problem-independent) behavior. LES methods use this approach to motivate a model for and justify its introduction in the filtered equations, closing the formulation.

Introducing the subgrid velocity , the subgrid stress tensor can be rewritten using the Leonard decomposition as

|

|

(2.65) |

where each of the individual terms is defined as:

|

The different terms in Eqs. 2.66–2.68 represent subgrid stresses due to the interaction between resolved motions (Leonard stresses), to the interaction between large and unresolved motions (cross stresses) and to the effect of completely unresolved motions (Reynolds stresses).

Like filtering in LES methods, scale separation in VMS formulations introduces a clear division between the resolved and unresolved parts of the solution, which in this case is achieved through the projection to the finite element mesh. This projection to the mesh was introduced in writing the large scale equation, given by Eqs. 2.21 and 2.22, which is rewritten using the conservative form of the convective term to be consistent with the LES expression above, obtaining

|

where boundary terms and terms related to the trace of the viscous stresses have been omitted for clarity and the full velocity has been used for both arguments of the convective term.

An analogy can be established between Eqs. 2.62 and 2.63, which represent the filtered Navier-Stokes equations and Eqs. 2.69 and 2.70, which expresses the projection of the Navier-Stokes equations to the finite element mesh. This was analyzed in [17,20], where it is remarked that the convective term in Eq. 2.69 can be expanded as

|

|

(2.71) |

The last three terms in Eq. 2.71 can be understood as a variational version of the LES subgrid stress tensor . Ignoring the density, they can be rearranged as

|

|

(2.72) |

where we introduced as a variational analogue of the LES subgrid stress tensor.

Furthermore, can be decomposed into Cross and Reynolds terms as:

|

while an analogue of the Leonard stress, representing the contribution of the resolved velocities to the unresolved stresses, appears in the corresponding small scale equation

|

|

(2.75) |

Assuming that the grid size is small enough for the unresolved scales to be in the inertial subrange, we can observe that scale separation and projection to the finite element mesh play a similar role to that of filtering in classical LES methods. It is worth mentioning that the concept of mesh-induced filtering has also been explored by the LES community, where it is known as implicit filtering. This is the basis of the Monotone Integrated Large-Eddy Simulation (MILES) approach of Boris et al. [44], where the authors propose not using an explicit model for the subgrid stresses and relying instead on a specially designed numerical method to introduce the correct dissipation for a given mesh resolution.

A different approach to VMS-based LES, which will only be mentioned here, is based on a three–level scale separation (see for example [41,45] or the review of [19]). In such approaches, the small scales are in turn divided into resolved and unresolved small scales. The presence of two levels of small scales can be used to either introduce explicit LES model terms to represent the effect of the unresolved small scales on the problem or to calibrate the amount of dissipation that is introduced using the variational equivalent of the Germano identity [46,47].

2.4.1 The VMS kinetic energy balance

Given that the derivation of VMS formulations is based exclusively in numerical and mathematical arguments, the physical behavior of VMS methods, in terms of reproducing the expected dissipation rates when the small scales are on the inertial subrange, has to be verified. This topic has been studied in [17,18] for the formulation that we are calling Q-ASGS and in [25,26] for OSS-based methods.

An energy balance for the original Navier-Stokes problem can be obtained by taking in the Galerkin weak form of the momentum equation, given by Eq. 2.13, which produces

|

|

(2.76) |

We are interested in obtaining a balance for the kinetic energy, . Using the fact that the full velocity is incompressible and the equality , Eq. 2.76 can be expressed as

|

|

(2.77) |

Eq. 2.77 expresses the balance of total kinetic energy in the domain, and the individual terms represent energy storage () and convection (), the power exerted by external forces () and boundary tensions () and finally viscous dissipation ().

We can obtain an equivalent expression for the kinetic energy contained in the large scale motions, , by taking in Eq. 2.69, in Eq. 2.70 and adding the two equations, obtaining:

|

|

(2.78) |

Note that in Eq. 2.78, and for the remainder of this section, we neglected all boundary integrals to simplify the discussion. This is equivalent to considering a problem with homogeneous Dirichlet boundary conditions.

It is convenient to integrate some of the terms in Eq. 2.78 by parts and rearrange the convective term as follows:

|

|

where we have used the fact that the exact velocity is divergence free.

With this, Eq. 2.78 can be restated as

|

|

(2.79) |

Analogously to the complete energy balance, Eq. 2.79 can be understood as a balance for the kinetic energy associated to large scale motions. Terms and represent the storage and convection of large scale kinetic energy, while term represents the power exerted by the external forces on large scale motions. Term is the viscous dissipation associated to the large scale motions, which can be assumed to be negligible for high Reynolds numbers. The remaining terms represent the transfer of energy between large scale and residual motions, playing an analogous role to production terms in the filtered Navier-Stokes equations.

Finally, we define the residual kinetic energy as . A balance statement for can be obtained by subtracting Eq. 2.79 from Eq. 2.77, which results in

|

|

(2.80) |

In Eq. 2.80, terms and represent the storage and convection of residual kinetic energy, while term represents the power exerted by the external forces on small scale (high wavenumber) motions. The next two terms represent the difference between the total viscous dissipation () and the large scale viscous dissipation (), which was already accounted for in the large scale energy balance of Eq. 2.79. Again, we remark that, in practice, term is expected to be negligible in comparison to term , since viscous dissipation occurs predominantly for motions in the range of the Kolmogorov length scale, while will contain only motions on a much larger scale , lying on the inertial subrange. Finally, terms and represent the production of residual energy due to the pressure and velocity small scales, respectively, and are exactly the same (but now with opposite sign) as the production terms in Eq. 2.79.

Terms and can be modified by noting that, since and ,

|

The last step to obtain the residual kinetic energy balance is to introduce the small scale models for velocity and pressure in Eqs. 2.81 and 2.82. Noting that represents the large scale viscous dissipation and will be negligible for high Reynolds numbers, we can write

|

|

(2.83) |

It is clear that terms and , since the stabilization parameters and were defined as strictly positive. They play the role of energy sinks in the large scale energy balance of Eq. 2.79, while acting as sources on the residual energy equation Eq. 2.80. Term is problem dependent but, if an OSS small scale model is used, it will vanish unless the external forces have a high-frequency (small scale) component. Finally, term only exists for dynamic small scale models. This fact was used in [25] to justify that OSS formulations can account for backscatter (energy transfer from the small scales to the large ones) if a dynamic small scale model is used.

Starting from a similar reasoning, Guasch and Codina [26] use statistical and scaling arguments to show that OSS formulations extract energy from the large scale equations at the correct rate, provided that some constraints on the behavior of stabilization parameters are respected.

As a final remark on this topic, we compare the large scale energy balance to the filtered energy balance used in filter-based LES formulations. If the kinetic energy associated to the filtered velocity field is defined as , the balance for can be obtained by multiplying the filtered linear momentum equation Eq. 2.62 by and integrating over the fluid domain (see [2]), resulting in

|

|

(2.84) |

where boundary fluxes have been omitted.

We can introduce the definition of the VMS subgrid stress tensor in Eq. 2.78, obtaining

|

|

(2.85) |

where the conservative form of the convective term has been used. Rearranging some terms, Eq. 2.85 can be rewritten as

|

|

(2.86) |

where we have used the fact that is a symmetric tensor to write . Terms to have an analogous interpretation to their counterparts in Eq. 2.79, but here we have obtained an additional term, , which explicitly represents the contribution of the residual subgrid stresses in the energy transfer. Comparing Eq. 2.86 to Eq. 2.84, we see that both LES and VMS approaches extract energy from the large scale problem through subgrid stresses, but that the variational approach gives rise to two additional energy transfer mechanisms, represented by terms and , compared to filter-based LES.

2.5 Discrete problem

To obtain a finite element solver based on the VMS formulation introduced in Section 2.3 we need to discretize the simulation domain, both in space and in time, and linearize the problem to obtain a system of equations that can be inverted using a linear solver. We start by introducing a finite element partition for the problem domain . Given the discrete domain , the large scale interpolation spaces and can be identified with the standard finite element interpolation functions and the large scale part of the solution, and , can be represented using a finite element interpolation as

|

|

(2.87) |

where represents the number of nodes in the finite element mesh, and are the nodal values of the large scale variables and respectively, represents the standard finite element basis function associated to node and its counterpart for vectorial variables, given by

|

|

Additionally, we introduce the following notation for the gradient and divergence of the finite element shape functions, which will be used to write the discrete form of the differential operators involved in the problem

|

|

(2.88) |

We also introduce the following operator to describe convection

|

|

(2.89) |

and the strain rate matrix, which will be used to write the discrete version of the viscous term

|

|

(2.90) |

Additionally, we define , and as the vectors of nodal values of large scale velocity , acceleration and pressure , respectively.

We introduce the finite element discretization of Eq. 2.87 in the variational formulation given by Eqs. 2.56 and 2.57 to obtain the matrix form of the problem. We will analyze the resulting expression for each of the variants we are considering in turn.

Note that in the present work we use linear finite elements, which can not be used to write second derivatives of the variables or test functions. As a result, terms involving in Eq. 2.56 or the strong-form viscous term that appears in the residual introduced in Eq. 2.43 will be neglected in the discrete form. It must be remarked that all terms lost in this way are related to viscous stresses, which should be small in turbulent flow problems.

2.5.1 Quasi-static ASGS formulation

The quasi-static ASGS formulation is in some sense the classical VMS formulation for the Navier-Stokes equations. It is also relatively simple, as it does not involve dynamic terms or projections, so we will present it first.

Starting from Eqs. 2.56 and 2.57, we can neglect all terms involving the projections or . As the small scales are described using Eq. 2.48, terms involving or in these equations can be ignored and the momentum stabilization parameter is , given by Eq. 2.49. After these simplifications, we introduce the finite element interpolation of Eq. 2.87 to describe the problem variables , . Testing against each nodal basis function in turn we obtain a system of equations that can be expressed in matrix form as

|

|

(2.91) |

|

|

(2.92) |

Again, we have used a generic convection velocity in all terms that have a non-linear dependence of velocity. Doing so, we leave open the possibility of using either the full velocity or the only large scale part . Note that, for linear finite elements, the latter choice is equivalent to the Galerkin-Least Squares (GLS) method [37].

The different matrices in Eqs. 2.91 and 2.92 represent the discrete version of the operators in Eqs. 2.56 and 2.57 and can be built from the assembly of elemental contributions. In general, matrix is constructed by the finite element assembly of elemental matrices of the form . For a finite element with nodes, can be defined using blocks , where and are local node indices. Using this notation, the standard Galerkin terms in the variational form of the problem give rise to the following elemental matrices

|

When defining the viscous matrix in Eq. 2.96 we introduced the constitutive matrix , which is given by

|

|

(2.101) |

In the same way, the stabilization terms in the Q-ASGS give rise to additional elemental matrices, expressed here as

|

2.5.2 Quasi-static OSS formulation

The next variant to be presented is the quasi-static OSS formulation. Compared to the Q-ASGS formulation, OSS is characterized by the inclusion of the projections and , which make the small scale variables orthogonal to the large scale unknowns and should reduce the overall amount of numerical diffusion introduced in the problem. As in the previous case, we leave open the possibility of using either or for convection and the corresponding stabilization terms. The matrix form of the Q-OSS formulation can be expressed as

|

|

(2.111) |

|

|

(2.112) |

where the following three new terms terms, involving the projections, have been introduced:

|

The calculation of the projections involves the solution of an additional problem, given by Eqs. 2.59 and 2.60, which can be expressed in discrete form as

|

where and represent the vectors of nodal values of and respectively and the remaining matrices and vectors are given by

|

Eqs. 2.116 and 2.117 represent an additional problem, coupled to Eqs 2.111 and 2.112, which effectively doubles the number of nodal unknowns in the problem. However, since the system matrices for the projection problem, defined by Eqs. 2.118 and 2.119, are effectively mass matrices, they can be replaced by the corresponding diagonal mass matrix, which allows us to obtain an approximate projection while avoiding the solution of an additional system.

Note that the matrices and that appear in Eqs. 2.111 and 2.112 are not strictly necessary in OSS based formulations, since they involve the acceleration term that appears in the residual . As noted in [39], the acceleration of the large scale lies in the large scale space and therefore its projection should be itself. As a result, we could neglect both these terms if we also not take into account when evaluating the residual that appears in the projection right hand side vector . However, as the projection is only calculated approximately using a diagonal mass matrix, we have found it convenient to take these terms into account to improve stability.

2.5.3 Dynamic ASGS formulation

When we use a dynamic approximation for the subscales we have keep track and update the small scale values at each integration point of the mesh. The dynamic small scale velocities are defined by the local problem given by Eq. 2.47, which was discretized in time using a Backward Euler time scheme to produce Eq. 2.53. Eq. 2.53 provides an expression for in terms of the residual and the old subscale value . The same Backward Euler scheme can also be used to obtain the following time-discrete expression for the small scale acceleration that appears in the variational form of the problem:

|

|

(2.122) |

Introducing Eq. 2.122 in Eqs. 2.56, we obtain a modified momentum equation with time-discrete small scales, given by

|

|

(2.123) |

where the first term in the third row and the last term on the right hand side appear from the time discretization of the small scale acceleration. Note that all terms without an index are evaluated at the current time step. Although they play no role in ASGS stabilization, we have included the projections and both in Eq. 2.122 and in Eq. 2.123, since the same expressions will be used as a starting point for the dynamic OSS method.

The matrix form of the D-ASGS formulation can be written as

|

|

(2.124) |

|

|

(2.125) |

where we have introduced three new terms on the right hand side involving the old subscale velocity, defined as

|

The terms that arise from the residual in the expression for the small scale acceleration, Eq. 2.122, have been grouped with the corresponding terms in the small scale velocity model, resulting in the following modified stabilization matrices:

|

Note that, unlike in the quasi-static variants, we will only consider using the full velocity to calculate convection for dynamic subscale models.

2.5.4 Dynamic OSS formulation

Finally, we consider the discrete form of the dynamic OSS formulation. Here, contrary to what happened for the D-ASGS formulation, the integral involving and can be eliminated a priori, since it corresponds to the inner product of two terms that belong to orthogonal subspaces. This results in the following matrix formulation:

|

|

(2.133) |

|

|

(2.134) |

where all involved elemental matrices have already been defined in the previous sections.

Note that, as presented for the Q-OSS method, the projections can be solved using a diagonal mass matrix to avoid the solution of an additional system. In this case, there is also the possibility of re-introducing in Eq. 2.133 and 2.134 all terms neglected using an orthogonality argument. To do so, we can replace , , and by their D-ASGS variants, given by Eqs. 2.129–2.132, and subtracting , given by Eq. 2.127, from the right hand side of Eq. 2.133.

2.5.5 Time integration

Regardless of the VMS variant used, once the problem has been discretized in space we obtain an equivalent matrix problem written in terms of the vectors of nodal velocities , pressures and accelerations that can be expressed in general form as

|

|

(2.135) |

We need to introduce a time discretization to write the accelerations in terms the velocities. For this we use the Bossak time integration method (see [48]), which can be described as a member of the generalized- Newmark family of methods with second order accuracy in time. The basic expression of the Newmark method, which is commonly used in solid mechanics problem and written in terms of displacements , velocities and accelerations , is

|

where and are constant parameters. In fluid dynamics it is convenient to rewrite Eq. 2.136 and 2.137 in terms of velocities, as these are the main variables of the problem, resulting in

|

Note that for the velocity based formulation, the displacements only appear in the equation for the new displacements, Eq. 2.139. As a result, both the displacements and the equation to obtain them can be omitted from the problem if we are not interested in their values.

The Bossak method is a generalization of the Newmark method based on introducing a relaxation factor on the acceleration of the system

|

|

(2.140) |

In the Bossak scheme, Eq. 2.138 is used to discretize in time Eq. 2.140, obtaining a system that depends exclusively on velocities, pressures and their spatial gradients. After rearranging some terms, this yields the following time-discrete problem

|

|

(2.141) |

A common choice for the Bossak parameter is , which provides maximum damping of high-frequency oscillations. The Newmark parameters are then chosen to be

|

|

2.5.6 Linearization of the large scale problem

The system described in Eq. 2.141 is non-linear due to the fact that the convective operator, the stabilization parameters, multiple stabilization matrices and the projections all depend on the current value of velocity. We define the residual of the problem at time step after non-linear iterations as

|

|

(2.142) |

The problem now consists in finding , such that . Denoting the increment between two successive iterations with , we use a first order Taylor decomposition to write the zero of Eq. 2.142 as

|

|

(2.143) |

We use Picard iterations, evaluating all matrices and vectors using the last known values of the variables. With this approximation, the system matrix can be written as

|

|

(2.144) |

which means that the linear system of equations that is assembled and solved at each iteration is

|

|

(2.145) |

The problem given by Eq. 2.145 is solved iteratively until the increments of the system variables and or the residual vector are smaller than a predefined tolerance.

2.5.7 Tracking of dynamic subscales

The dynamic small scale problem was discretized in time as Eq. 2.54. Using the full velocity, which is divergence-free, as the convective velocity, and neglecting the viscous stress term, since we are restricting ourselves to linear finite elements, we expand the residual that appears in Eq. 2.54 to obtain the following expression for the small scale velocity:

|

|

(2.146) |

Eq. 2.146 is non-linear, since and both depend on when using . Moreover, it is coupled to the large scale problem through its dependence to and . Similarly, under these assumptions, all terms that depend on or the convection velocity in the large scale problem require a value for to be computed.

To update the value of the small scale velocity we follow the procedure presented in [49,31]. Given known values of the large scale variables, and , we define a target function

|

|

(2.147) |

where we use to denote computed using in Eq. 2.52. We use Newton-Raphson iterations to find a zero of , resulting in

|

|

(2.148) |

The tangent matrix in Eq. 2.148 is computed neglecting the dependence of on , resulting in:

|

|

(2.149) |

With this we can obtain new values for by iteratively solving Eq. 2.148 on each integration point. These can be used to evaluate the values of the convective velocity and in the next iteration of the large scale problem. Similarly, once the large scale problem is converged and we advance to the next time step, Eq. 2.148 is solved once more to obtain the historical values for the small scale.

Note that, for OSS formulations, also depends on the value of , as it represents the projection of . However, this dependence is ignored in the procedure outlined in this section. Taking it into account would imply solving the (global) projection problem, given by Eqs. 2.116 and 2.117, every time a new is obtained.

2.5.8 Finite element solution algorithm

As a summary of the formulation described in this section, we present the full solution procedure for the method as Algorithm 1, including the calculation of nodal projections and tracking of dynamic small scales. For variants where they are not necessary, the corresponding step in the algorithm can be skipped.

|

|

Algorithm. 1 VMS incompressible flow solver. |

This procedure was implemented within the Kratos Multiphysics finite element framework and used to compute all numerical test cases presented in this chapter.

2.6 A new model for the pressure subscale

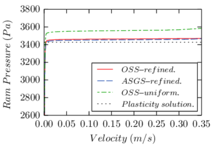

As described in the previous pages, the VMS formulation provides a stabilized method for the simulation of the Navier-Stokes equations that can be understood as a turbulence model, as justified in Section 2.4. However, its application in numerical simulations shows that the results have a strong dependency on the choice of the stabilization parameters. This is observed both in our own results and in the literature (see for example [31,27], which use basically the same formulation we have described), where it can be observed that the obtained mean velocity profile in the turbulent channel flow changes depending on the precise definition of and on whether the pressure small scale is considered or taken to be zero. In particular, the results in [27] suggest that the optimal choice is problem-dependent, since some test cases provide a better fit to the reference data when the pressure small scale is kept, while in other cases neglecting it yields better results.

All this suggests that the current design for the stabilization parameters does not capture the correct behavior for at least some turbulent flow problems. To further investigate this issue, we study an alternate design for the pressure subscale. We start by motivating the design for the stabilization parameters we have been using up to this point and follow by presenting an alternative.

2.6.1 On the design of the stabilization parameters

Recall that the small scale model is motivated by the small scale problem, given by Eqs. 2.41 and 2.42, repeated here in simplified form as

|

where we have written the convective term in non-conservative form and neglected the trace of . The motivation for the stabilization parameters used up to now, (or ) and , is given in [30], where the response of Eqs. 2.150 and 2.151 to high wave number excitations (which we are most interested in, since the small scales represent highly fluctuating motions) is analysed. In such circumstances, it is observed that

|

The design for given in Eq. 2.49 can be justified by noting that has the same limit behavior as the term in Eq. 2.152 when either the convective or the viscous terms are significantly larger than the other. The justification for is given by introducing Eq. 2.152 in the static version of Eq. 2.150 and taking the divergence. Assuming that and are divergence-free, we obtain

|

|

(2.154) |

or, equivalently,

|

|

(2.155) |

The analysis in [30] also shows that the pressure Laplacian in Eq. 2.155 behaves as , resulting in

|

|

(2.156) |

where the algorithmic constant is adopted by analogy with the viscous term in Eq. 2.152. From this expression, and using Eq. 2.151 the usual formulation for the pressure subscale is recovered

|

|

(2.157) |

Finally, we introduce the scaling argument of Eq. 2.152 back to Eq. 2.150 and obtain

|

|

(2.158) |

The small scale model we have been using up to this point, given by Eq. 2.47, is then recovered by neglecting , which effectively uncouples the small scale velocity and pressure models.

2.6.2 Alternative design for the pressure subscale

As an alternative, we propose a formulation which keeps the pressure gradient in Eq. 2.150 and uses it to introduce the pressure subscale. We start by analyzing how keeping the small scale pressure gradient modifies the large scale problem. Retaining means that Eq. 2.158 can be rewritten as

|

|

(2.159) |

hence the corresponding time-discrete small scale velocity model is

|

|

(2.160) |

Introducing Eq. 2.160 as our small scale model in Eqs. 2.56 and 2.57 we can obtain the full problem corresponding to this formulation. The resulting formulation, particularized for the quasi-static subscale case to reduce the number of terms involved, can be expressed as

|

|

(2.161) |

|

|

(2.162) |

where the terms including contributions from have been underlined. Note that the same reasoning could be applied to dynamic subscale formulations.

If we could define an approximate space for and discretize Eqs. 2.161 and 2.162, we would be able to write a matrix problem of the type

|

|

(2.163) |

where matrix and vector represent the matrix problem resulting from either Eqs. 2.91 and 2.92 for the Q-ASGS formulation or Eqs. 2.111 and 2.112 for the Q-OSS formulation, and are the (large scale) vectors of nodal unknowns and results from the contribution of to the underlined terms in Eqs. 2.161 and 2.162. Its precise definition, if was known at the integration points of each element, would be given by

|

We need to provide a model for before we can complete the formulation. To do so, we take a little detour. Consider that the finite element velocity solution , which is not divergence-free, can be decomposed as

|

|

where is a solenoidal field verifying and is a potential field. We identify the pressure small scale with the potential part of the solution , with the goal of obtaining a velocity field that is more divergence-free in some weak sense.

Taking the divergence of and integrating over the element's domain, we write

|

|

(2.166) |

Integrating by parts both sides of Eq. 2.166 we obtain

|

|

(2.167) |

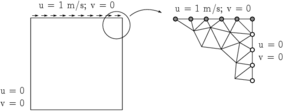

Eq. 2.167 can not be evaluated in practice, since the exact small scale space is infinite-dimensional, but can be estimated using an approximate small scale space. Our proposal is to assume that the the space for the small scale pressure can be approximated by the discontinous version of the large scale space, that is, functions that are linear within each element and discontinuous across element boundaries. The fact that our interpolation functions are discontinuous across element boundaries allows us to write a local problem on each element, given by the discrete form of Eq. 2.167. Denoting with the small scale shape function for node , we can write

|

|

(2.168) |

where and represent the nodal values of and on the element and the matrices are build from nodal contributions of the type

|

Note that, for the shape functions we are proposing, on a given element, is equivalent to the elemental discrete divergence matrix of the large scale problem, given by Eq. 2.98.

Matrix represents the discrete form of a Laplacian problem and can be explicitly inverted on each element if additional restrictions are imposed on the variable . In our tests, we have imposed that is zero on average over the element, which allows us to write

|

|

(2.171) |

Going back to the enhanced matrix problem of Eq. 2.163, the small scale shape functions can be used to rewrite the additional terms and in terms of the nodal vector

|

|

(2.172) |

where we introduced the new finite element matrices

|

Finally, we substitute Eq. 2.171 in Eq. 2.172, eliminating the additional variables from the problem algebraically

|

|

(2.175) |

To complete the formulation we would need to define a model for (as opposed to its gradient), which also appears in Eq. 2.161. However, since we imposed that is zero on average in each element to ensure that can be inverted and we are using linear shape elements (which means that is constant within each element), it can be verified that, for the proposed formulation,

|

|

(2.176) |

where is a constant that depends on the shape of the element.

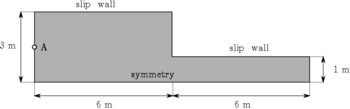

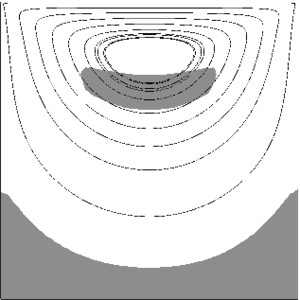

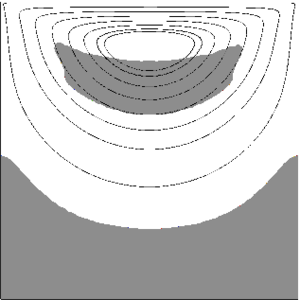

2.7 Application to the turbulent channel flow

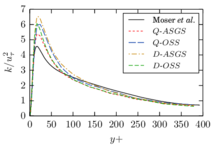

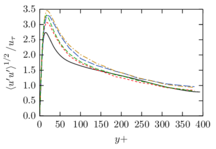

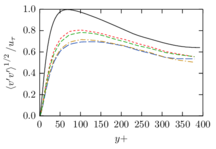

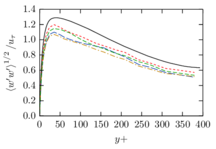

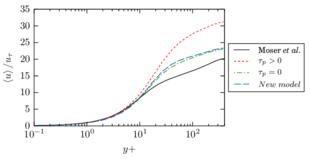

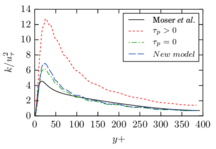

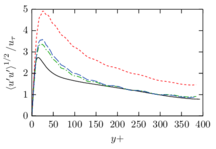

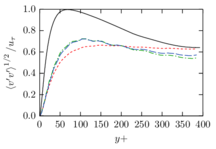

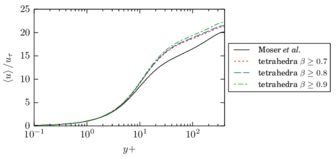

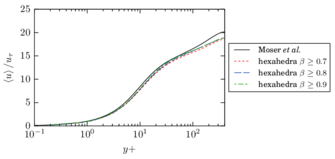

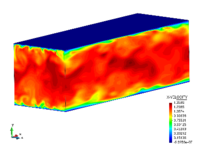

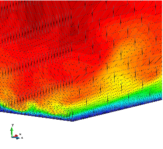

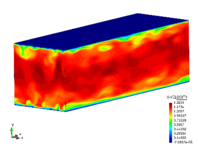

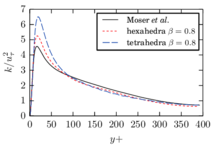

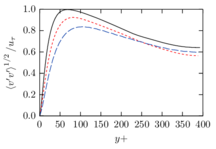

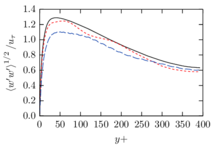

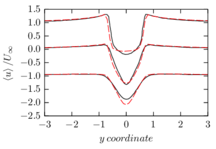

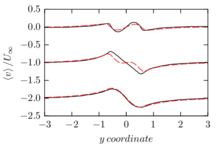

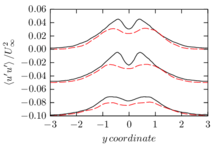

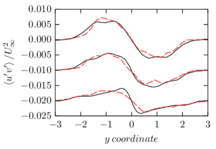

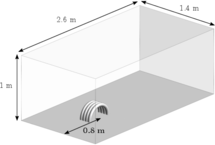

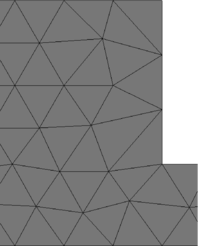

The turbulent channel flow is a classical benchmark for LES formulations, in which a fluid circulates between two parallel walls. In the turbulent regime, the flow is characterized by a transfer of energy from the central regions to the zones close to the wall, achieved through turbulent motions, where it is dissipated through viscous friction. This problem represents a challenge for turbulence models in general and LES methods in particular and is well studied in the literature [50,2]. For moderate Reynolds numbers, it is also within reach of direct numerical simulation (DNS), which means that simulations representing all scales of the flow are available in the literature. In particular, we will use data from the simulations of Moser et al. [51] to validate our results. Note that, while this particular problem could be simulated using DNS, we are not interested in fully resolving all scales of the flow, since we want to study the behavior of the formulation as a LES method.

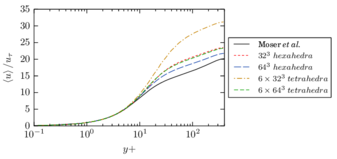

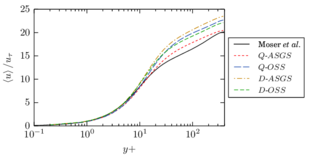

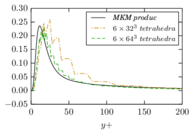

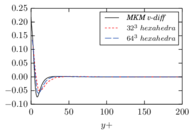

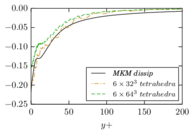

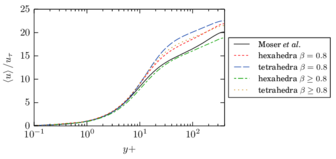

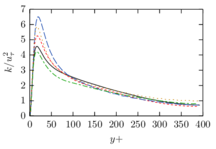

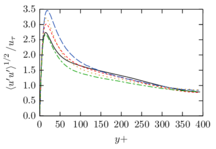

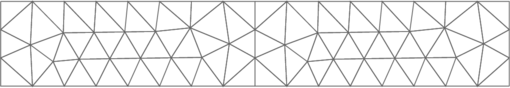

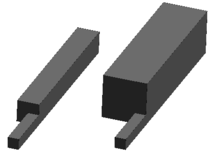

There is abundant literature validating VMS (and other) formulations on this particular benchmark, which was simulated using dynamic subscales in [27], and in [31] for the low Mach number regime; with and without explicit LES (Smagorinsky) modelling terms in [45], using VMS methods in combination with isogeometric finite element formulations [21,23] and using SUPG stabilization by itself [12] or in combination with the Smagorinsky model [11]. As a result, this example allows us to validate our implementation and test our new approach for the pressure subscale. However, we also note that all previous studies, as far as we know, have used linear hexahedra or higher order interpolations. In contrast with previous studies, we want to use this example to compare the results obtained with tetrahedral and hexahedral elements since, while hexahedra provide a richer interpolation, tetrahedra are in many cases the only practical choice to discretize complex geometries, and we want to quantify the impact of using tetrahedral interpolation on the solution.

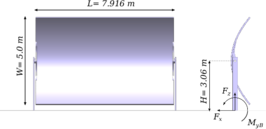

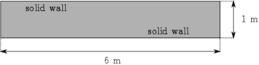

The simulation consists in modeling the flow between two parallel flat plates that are separated a distance . The flow is driven by a pressure gradient applied on the streamwise direction, , which is balanced by the friction produced by the wall, . The wall friction is conventionally expressed as , where is defined as the friction velocity. Given that the forces acting on the problem must be in equilibrium, the pressure gradient and the wall friction are related by the following expressions (see [50] for example):

|

|

The Reynolds number can be given in terms of the friction velocity and the channel width as

|

|

which is denoted by to distinguish it from the bulk Reynolds number, computed using the average streamwise velocity of the flow (see [2]).

For our tests, the Reynolds number is set to , which can be obtained by setting the problem parameters to

|

|

The problem domain is restricted to in the stream-wise, wall-normal and cross-stream directions respectively, which corresponds to the domain used in [21]. Zero Dirichlet conditions are applied on the solid walls and periodic boundary conditions are used for the remaining sides.

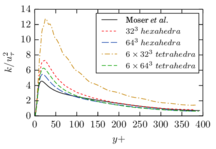

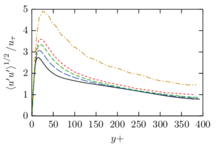

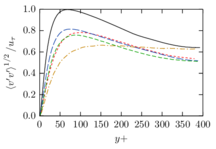

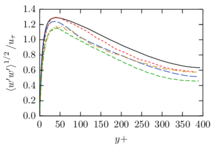

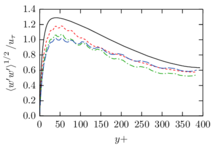

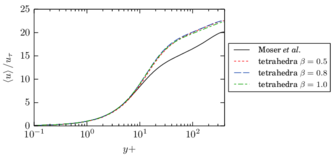

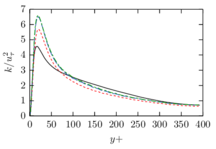

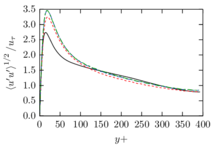

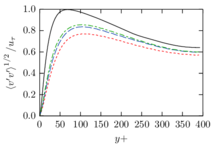

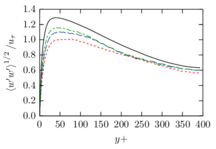

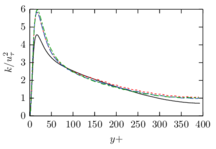

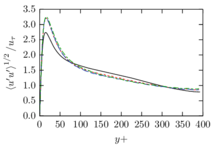

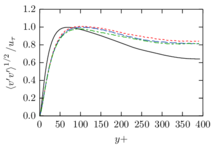

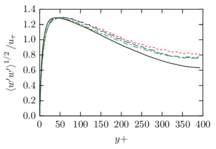

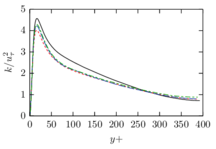

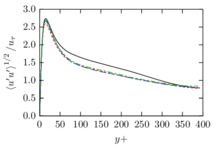

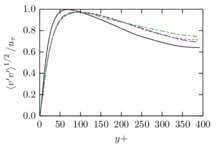

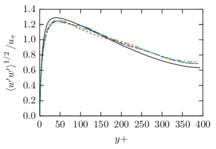

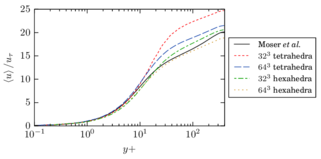

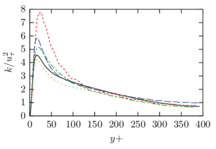

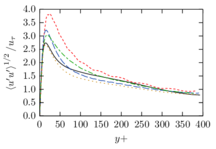

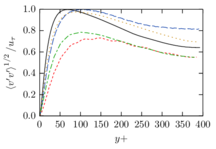

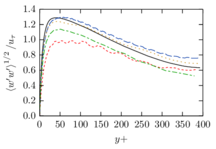

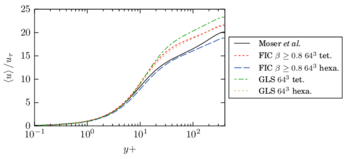

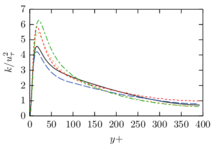

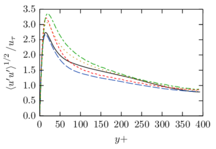

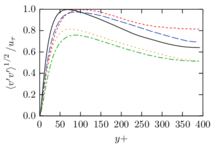

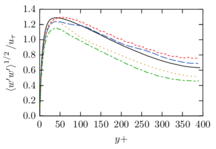

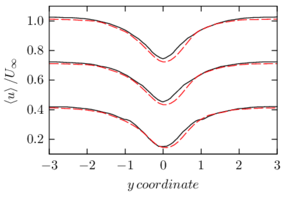

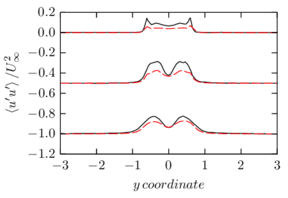

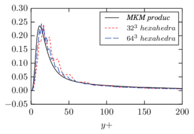

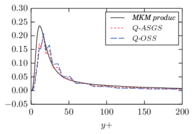

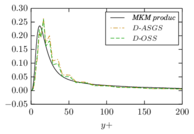

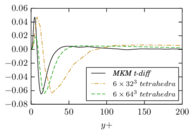

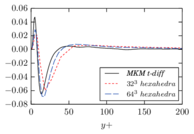

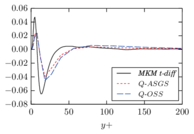

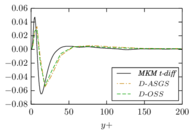

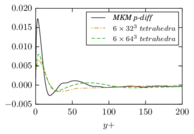

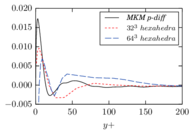

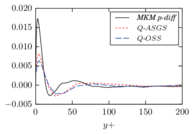

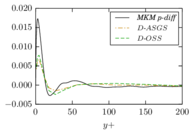

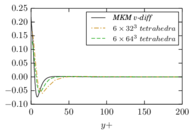

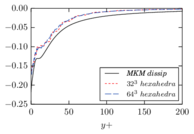

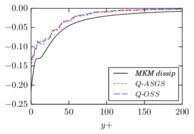

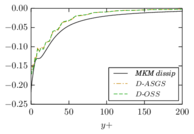

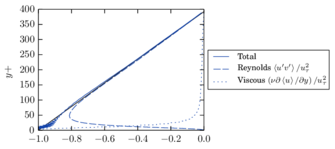

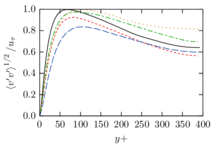

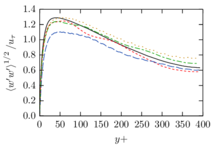

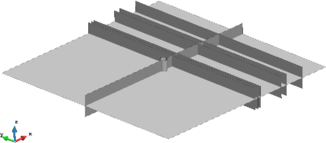

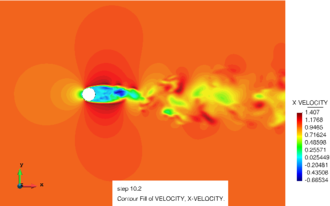

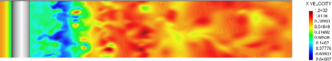

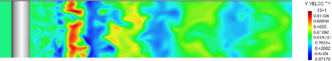

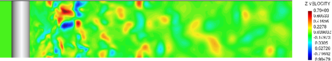

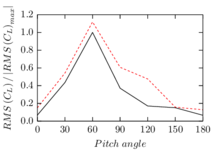

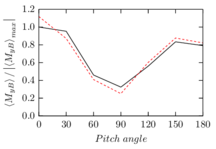

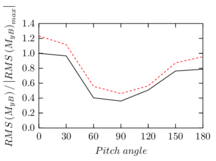

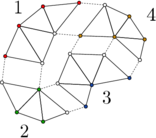

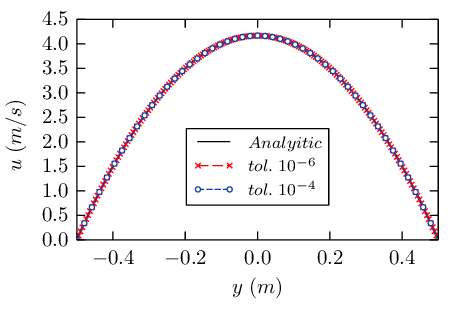

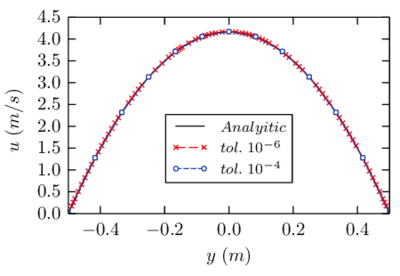

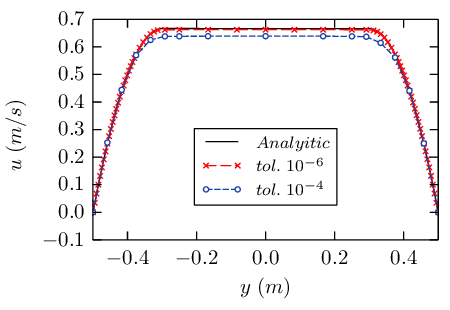

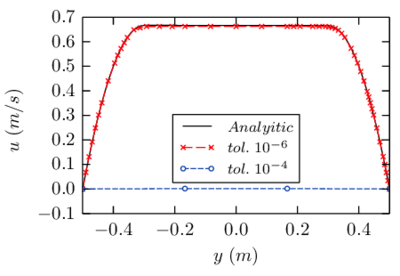

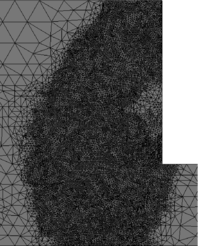

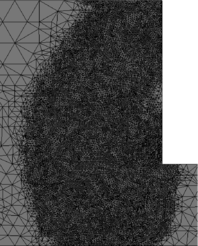

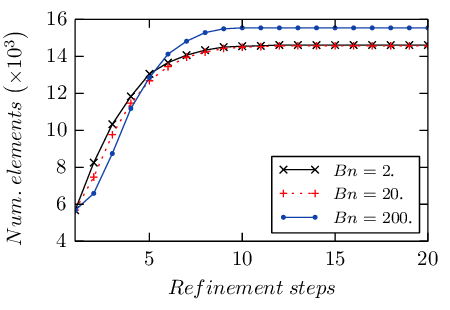

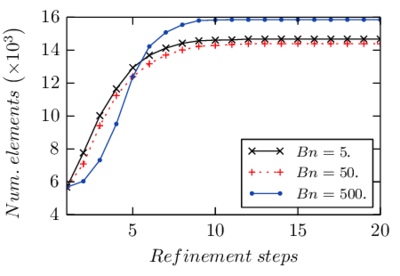

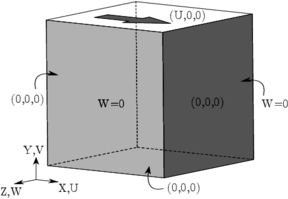

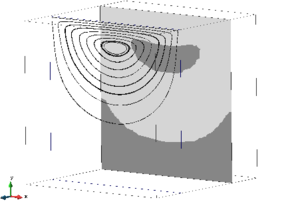

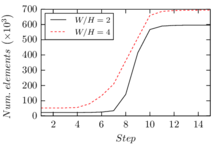

2.7.1 Effect of the simulation mesh