I dedicate this work, the greatest I have ever done, to my parents, Ana and Juan Ignacio, and to Vera, my love

Declaration

I hereby declare that except where specific reference is made to the work of others, the contents of this dissertation are original and have not been submitted in whole or in part for consideration for any other degree or qualification in this, or any other university. This dissertation is my own work and contains nothing which is the outcome of work done in collaboration with others, except as specified in the text and Acknowledgements.

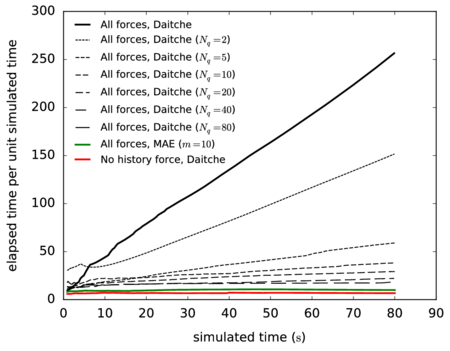

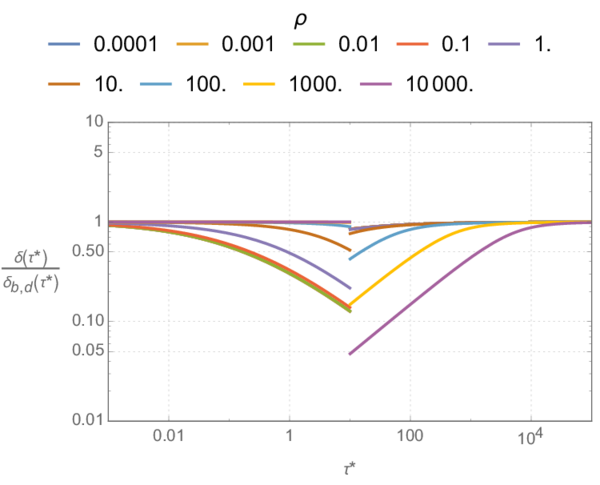

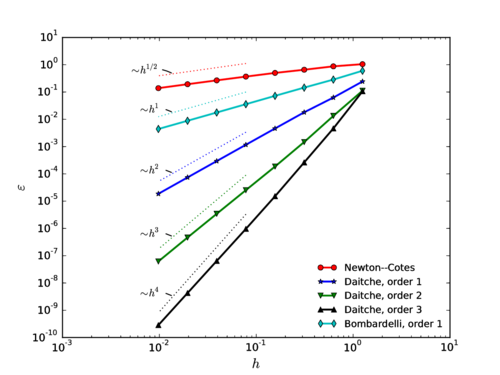

Acknowledgements

Writing this doctoral dissertation often felt like writing a book, perhaps a long one: a somewhat lonely activity, a fight to keep focus for a long time while chasing a quality standard that keeps getting away. And, as writers so often explain, this is best achieved in solitude: it is a lonely job.

On the other hand, one is not ready to write it the first day. I arrived at CIMNE knowing very little about programming, or physics, or about how to do research. I must thank Eugenio Oñate for, in spite of this, giving me the opportunity to enter the world of numerical methods and science in general. Eugenio has taken care of my career and has cultivated my confidence in a very generous way over these years. I am especially grateful for his welcoming me a second time after I came back from my failed entrepreneurial adventures.

A year into my doctorate, Riccardo Rossi kindly took me on as his candidate, acting as co-director. He has been the one to listen to my worries and patiently help me every time I got stuck.

An advantage of working at CIMNE is that there is no shortage of smart researchers, always ready to discuss your problems. And I certainly took advantage of this during the course of this work. First of all, thank you Jordi Cotela. I owe most of my work on the extension of the finite element code to you (and also my understanding of subscale stabilization). You provided me with code, lessons and fruitful discussions. I am sorry I could not finish the work on turbulence we were collaborating on in time. I must also thank my other lab-mates: Julio Marti, Pavel Ryzhakov and Ricardo Reyes for their many discussions and advice.

Even before I began my doctorate, I had entered the DEMPack team. I owe a lot to my colleagues there too: Miguel Ángel Celigueta, with whom I have collaborated in many developments and held numerous stimulating discussions on programming and on the discrete element method; to Salvador Latorre, with whom I have shared many jobs; and Ferran Arrufat, who helped me with crucial developments at the end of this work. I am indebted to these people, as well as to Merce López and Joaquin Irazábal for covering my back so many times when I was working on this manuscript. It has been a pleasure sharing all those good times during the lunch breaks with these friends.

I must thank several other researchers who have offered their help. I do not want to forget any of them: Roberto Flores, a friend and such a talented scientist, who helped me sort out my ideas on several occasions; Enrique Ortega, who gave me valuable ideas on how to use polynomial smoothers; Ramón Codina and Joan Baiges, who helped me understand concepts on subscale stabilization and other questions; Prashanth Nadukandi, who helped me with matrix manipulations and with whom I enjoyed discussing possible research topics; and Pooyan Dadvant and Carlos Roig, who gave me programming solutions I would have taken an eternity to find.

I am convinced that the best research is done in collaboration. I am thankful for the very fruitful (and pleasant) collaborations I have had with Alex Ferrer, who played a crucial role in the optimization process described in the third chapter, and Ignasi Pouplana, with whom I worked on particle impact drilling.

On occasion, I encountered difficulties when reading crucial sections of the literature. Thankfully, most times the authors were kind enough to answer my questions. I want to thank Benoit Pouliot, Ksenia Guseva and Andrew Bragg for their patient and elaborate answers.

I spent two extremely productive months at UC Berkeley thanks to Tarek Zohdi, who kindly hosted me twice and collaborated with me in writing a paper. I thank him and Eugenio for making that exchange possible.

My research would not have been possible without financial support from the Generalitat de Catalunya, under the Doctorat Industrial program 2013 (DI 024). I also acknowledge the support received from the project PRECISE - Numerical methods for PREdicting the behaviour of CIvil StructurEs under water natural hazards (MINECO - BIA2017-83805-R - 01/01/2018 – 31/12/2020).

Finally, I want to thank my family for their extreme patience and constant love and support.

Abstract

In this work we study the numerical simulation of particle-laden fluids, with an emphasis on Newtonian fluids and spherical, rigid particles.

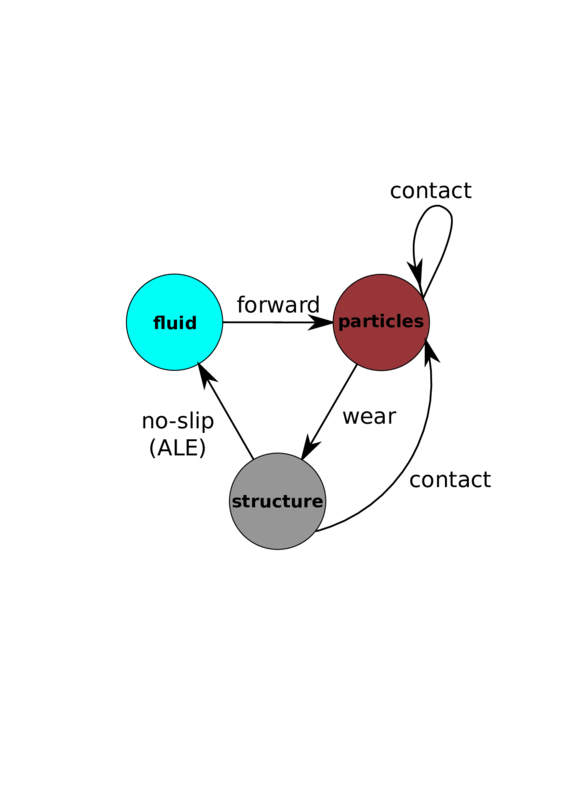

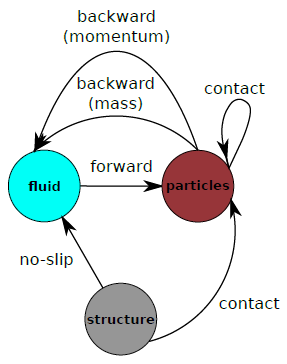

Our general strategy consists in using the discrete element method (DEM) to model the particles and the finite element method (FEM) to discretize the continuous phase, such that the fluid is not resolved around the particles, but rather averaged over them. The effect of the particles on the fluid is taken into account by averaging (filtering) their individual volumes and particle-fluid interaction forces.

In the first part of the work we study the Maxey–Riley equation (MRE) of motion for an isolated particle in a nonuniform flow, which is the equation used to calculate the trajectory of the DEM particles. In particular, we perform a detailed theoretical study of its range of applicability, reviewing the initial effects of breaking its fundamental hypotheses, such as small Reynolds number, sphericity of the particle, isolation, etc. The output of this study is a set of tables containing order-of-magnitude inequalities to assess the validity of the method in practice.

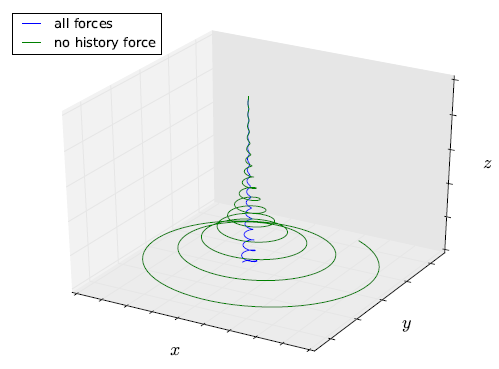

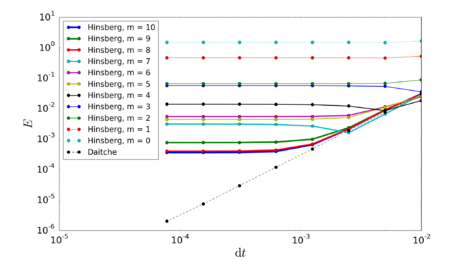

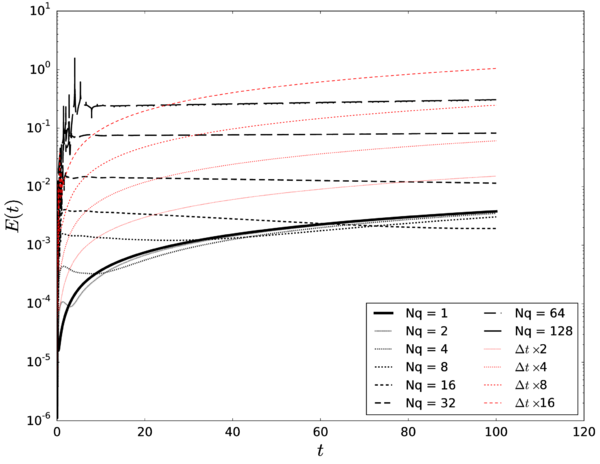

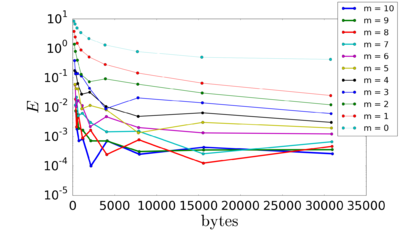

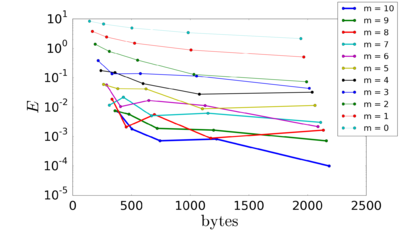

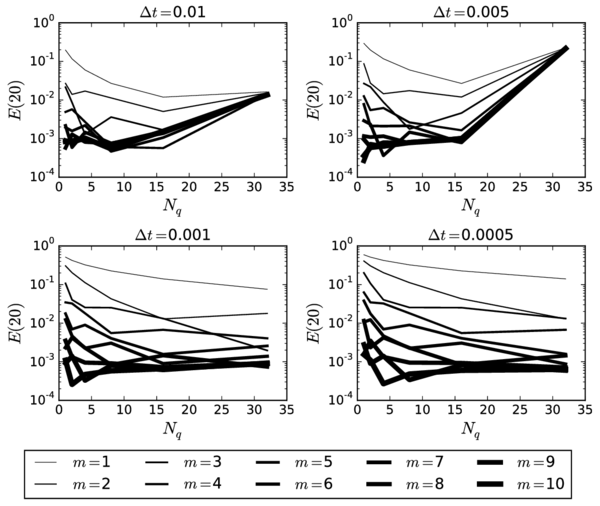

The second part of the work deals with the numerical discretization of the MRE and, in particular, the study of different techniques for the treatment of the history-dependent term, which is difficult to calculate efficiently. We provide improvements on an existing method, proposed by van Hinsberg et al. (2011), and demonstrate its accuracy and efficiency in a sequence of tests of increasing complexity.

In the final part of the work we give three application examples representative of different regimes that may be encountered in industry, demonstrating the versatility of our numerical tool. For that, we describe the necessary generalizations of the MRE to cover problems outside its range of applicability. Furthermore, we give a detailed account of the stabilized FEM algorithm used to discretize the fluid phase and compare several derivative recovery tools necessary to calculate some of the interphase coupling terms. Finally, we generalize the algorithm to include the backward-coupling effects according to the theory of multicomponent continua, allowing the code to deal with arbitrarily dense flow regimes.

Nomenclature

Roman Symbols

- particle's radius

- particle cross-sectional area

- body (continuum mechanics)

- body belonging to component (continuum mechanics)

- drag coefficient in Shah's (2007) model

- local sound speed

- Stokes–Einstein–Smoluchowski diffusivity

- three-dimensional affine Euclidean space

- friction factor (boundary layer)

- added mass force

- characteristic scale of

- total body work

- sum of contact forces

- steady drag force

- characteristic scale of

- Boussinesq–Basset history force

- characteristic scale of

- hydrodynamic force

- Momentum exchange between component and the rest of components

- Herron lift force

- Rubinow and Keller lift force

- characteristic value of

- Saffman lift force

- Froude number

- total surface work

- undisturbed-flow force

- characteristic scale of

- static submerged weight

- gravity acceleration

- space of functions with square integrable derivatives in

- space of functions in vanishing on

- space of vectors in fulfilling the Dirichlet boundary conditions

- spheric particle's moment of inertia

- consistency index

- Boltzmann's constant

- Boussinesq–Basset kernel function

- Knudsen number

- characteristic length scale of the smallest flow structures

- space of square integrable functions in

- characteristic filter length scale

- total momentum

- displaced fluid's mass

- particle mass

- equivalent particle mass

- behaviour index

- total moment of momentum

- number of space dimensions

- number density

- total number of mesh nodes

- number of time steps between quadrature steps

- number of space dimensions

- neighbourhood function

- power set of set

- fluid pressure

- Péclet number associated to the settling velocity

- heat flux

- space of pressure scalars

- discrete counterpart of

- particle position

- field of the real numbers

- strictly positive real numbers

- internal energy source/sink

- Reynolds number

- rotational Reynolds number

- particle Reynolds number

- Reynolds number for power-law fluids

- particle Reynolds number for power-law fluids (Metzner and Reed, 1955)

- Shear-related particle Reynolds number

- Cartesian product involving copies of

- strain rate tensor

- settling coefficient

- Brinkman over Oseen length scales squared

- Schmidt number

- sphere centred at zero with radius equal to

- Stokes number

- Stokes number for bubble rebound

- collisional Stokes number

- rotational Stokes number

- scale-dependent Stokes number

- surface traction on

- characteristic time scale of the smallest flow structures

- mass balance-related stabilization parameter

- momentum balance-related stabilization parameter

- matrix of stabilization parameters

- total torque due to body actions

- sum of contact torques

- discrete time variable for the particles

- characteristic filter time scale

- hydrodynamic torque

- total torque due to surface actions

- fluid velocity

- characteristic velocity scale of the smallest flow structures

- internal energy

- total energy

- particle velocity

- space of velocity vectors

- discrete counterpart of

- particle volume

- slip velocity

- power spent by the body forces

- power spent by the surface forces

- space of solutions

- space of subscales

- space of subscales that vanish at the boundary

- discrete counterpart of

- dimensionless distance for power-law fluids

Greek Symbols

- particle mass fraction

- Basset slip coefficient

- configuration (continuum mechanics)

- motion of a body (continuum mechanics)

- motion of a body of component (continuum mechanics)

- configuration at time (continuum mechanics)

- configuration of component at time (continuum mechanics)

- continuous phase time step

- disperse phase time step

- Levi–Civita symbol

- amplitude of surface irregularities

- radial distribution function

- local strain rate

- subset of where Dirichlet boundary conditions apply

- subset of where Neumann boundary conditions apply

- mean free path of the fluid molecules

- fluid's dynamic viscosity

- yield viscosity

- fluid's kinematic viscosity

- molecular collision frequency

- continuum phase domain

- particle's angular velocity

- characteristic value of

- characteristic scale of the vorticity in pure-shear flow

- moment exchange of component with the rest of components

- relative density of the disperse component

- fluid's density

- Cauchy's stress tensor

- tangential accommodation coefficient

- deviator stress tensor

- sonic time scale

- molecular diffusion time scale

- viscous diffusion time scale

- rotational particle relaxation time

- particle response time

- shear stress at the wall

- dimensionless frequency normalized by

- absolute temperature

- inverse of the particle mobility

- activation coefficient for term

Other symbols

- filtering operator over the component

- filtering operator per unit component volume over the component

- material derivative of the fluid continuum

- Laplacian operator

- boundary of set

- inner product

- integral of the product of two functions

- -component variable over -volume fraction

Acronyms / Abbreviations

ALE - arbitrary Lagrangian-Eulerian method

ALU - arithmetic logic unit

ASGS - algebraic sub-grid scales

BEM - boundary element method

CFD - computational fluid dynamics

CFL - Courant–Friedrichs–Lewy number

COR - coefficient of normal restitution

DEM - discrete element method

DNS - direct numerical simulation

DOF - degree of freedom

EE - Euler–Euler (method)

EL - Euler–Lagrange (method)

FDE - fractional differential equations

FEM - finite element method

FFC - recovery method of Pouliot et al. (2012)

FFT - fast Fourier transform

FLOP - floating point operations

FPU - floating point unit

FSI - fluid-structure interaction

FVM - finite volume method

LBM - lattice Boltzmann method

LES - large eddy simulation

MAE - method of approximation by exponentials

MFP - mean free path

MPM - material point method

MRE - Maxey–Riley equation

MSD - mean square displacement

OSS - orthogonal subscales

PFEM - particle finite element method

PIC - particle-in-cell

PID - particle impact drilling

PPC - particles per cell

PPR - recovery method of Zhang and Naga (2005)

PSM - pseudo-spectral method

RDF - radial distribution function

RVE - representative elemental volume

SC - standard case

SM - streaming multiprocessors

VMS - variational multiscale (method)

1 Introduction

1.1 Background and Motivation

How is a moving particle affected by its surrounding fluid? This question has intrigued scientists since the Scientific Revolution and earlier, going back to Galileo [325], Leonardo da Vinci [144], and even Aristotle [293]; perhaps because it refers to a simple system, ideal for thought experiments. The problem attracted attention for practical reasons early on as well, as it is central to the study of ballistics (the science of predicting the trajectory of projectiles), which began to be systematized as a discipline by Tartaglia in the sixteenth century. Despite his ignorance of Newtonian dynamics, Tartaglia was able to provide useful range tables and instructions, although his understanding of the problem was still very rudimentary. For instance, influenced by earlier tradition, he considered the trajectory of a projectile to be formed by a straight segment, followed by a circular arc and a final straight segment [335]. About a century later, Galileo provided the parabolic solution for a projectile in a vacuum, noting, in 1638, that he expected his solution to be valid only for slow-moving projectiles, while for faster-moving ones the beginning of the trajectory should be less tilted and curved than its end. Thus, for a very long time, the intuitive notion that a resistance existed proved too difficult to translate into scientific knowledge.

In 1671 Newton wrote the following text in passing, as an analogy to describe the motion of his light corpuscles, when explaining the breakdown of a white ray into its different colors [259] (1):

…I remembred that I had often seen a Tennis-ball struck with an oblique Racket describe such a curve line. for a circular as well as a progressive motion being communicated to it by that stroak, its parts on that side where the motions conspire must presse & beat the contiguous air more violently then on the other, & there excite a reluctancy & reaction of the air proportionally greater.

The quotation reflects Newton's very early insight into the fundamental mechanisms of fluid-particle interaction, made possible only thanks to the idea of force, the notion of continued action (as opposed to discrete) and its necessity to have the ball deviate from a straight line (law of inertia), and the action-reaction postulate; all of which were recent discoveries at the time. Moreover, it exemplifies the reductionist approach, the process of continued zooming in that would ultimately give the scientific method its modern power and that particularly resonates with the common theme of numerical methods: 1) break down into simpler entities, 2) model the interactions, 3) aggregate the result of all these interactions.

By running experiments on pendulums with spherical bobs, Newton himself was able to establish, in his Principia [260], that the air resistance was approximately proportional to the square of the velocity, a result still standing today (for fast-moving particles, in the so-called Newton-drag regime; see e.g., [218]). Together with his laws of motion, this finding was to set the science of ballistics on much more solid ground (2).

Still, little progress could be made from the theoretical point of view for many years, due to the limited understanding of fluid dynamics. Famously, in 1752 d'Alembert proved the paradoxical result that inviscid flows, for which Euler had correctly given the equations of motion, yielded zero resistance on submerged objects. Almost a century later, Saint-Venant (who had actually derived the Navier–Stokes equations before Stokes) took the first steps toward its resolution by suggesting that it was necessary to take the fluid viscosity into account, as it would surely introduce some tangential resistance [7].

But it was not until the work of Stokes [326] that the first (correct) quantitative solution to the problem could be derived from first principles, for the case of very small Reynolds number (3). Stokes calculated the drag force on a small sphere moving at steady speed in an infinite Newtonian fluid using a simplified version of his own discovery, the Navier–Stokes equations. The force turned out to depend linearly on the velocity in this regime, in contrast to the theory of Newton, whose pendulums operated at higher Reynolds numbers. Insightfully, Stokes observed that

Since in the case of minute globules falling with their terminal velocity the part of the resistance depending upon the square of the velocity, as determined by the common theory, is quite insignificant compared with the part which depends on the internal friction of the air, it follows that were the pressure equal in all directions in air in the state of motion, the quantity of water which would remain suspended in the state of cloud would be enormously diminished. The pendulum thus, in addition to its other uses, affords us some interesting information relating to the department of meteorology.

The reference to a pendulum is due to Stokes being in fact primarily interested in solving that problem, from which he derived his famous drag relation as the limiting case of an infinite oscillation period. As it turns out, the problem of cloud formation is still a subject of current studies, most often based on his equation [70,150].

Indeed, Stokes' result became (and remains) very important to fundamental as well as applied science, having led to at least three Nobel prizes, according to Dusenbery [111]. For instance, Einstein used it to calculate the Brownian diffusion rate (see [290]), a result that provided the first strong evidence of the existence of atoms (i.e., strictly speaking, water molecules) as well as a practical means to calculate their mass!

Most analytical successes in studying this problem have been achieved in the realm of the tiny to the very small; in a world where viscosity counteracts all external forces and where inertia is, to all effects, negligible (or at most a small correction). Neglecting inertia renders the Navier–Stokes equations linear, which removes the tremendous difficulties associated with nonlinear effects, including the analytical intractability of problems and the onset of turbulent chaos. For large particles, when the Reynolds number grows much past one, we have not been able to go too far past the empirical solution of Newton.

But even under low Reynolds number conditions, analytical difficulties do appear, and it took many years until a complete picture was available. Successive advances were made over several decades by many prominent scientists, such as Boussinesq (1885), who derived the unsteady terms that arise when the particle decelerates in otherwise the same conditions as Stokes; Oseen (1910, 1913), who correctly found the first effects of inertia on the steady drag (although not in an entirely satisfactory way, see Section 2.2.1); Faxén, who accounted for the non-uniformity of the background flow; and Saffman (1965), who calculated the lift force in the presence of a linear shear flow (see the historical account by [248]). The equation of motion that we will study in the following two chapters, the Maxey–Riley equation, which generalizes the works of Boussinesq and Faxén, was not derived until the year 1983.

With this brief historical account we hope to motivate our interest in studying the motion of a single particle in a fluid. One of the lessons learned during the course of these years of research is to what extent this is a fundamental theoretical piece towards understanding particle-laden flows. Although initially unplanned, such an endeavour has ended up taking a large part of our work (Chapters 2 and 3).

(1) Specifically, Newton is addressing the origins of the lateral force induced by simultaneous rotation and translation of a spherical particle, causing it to drift, curving its trajectory sideways. This phenomenon would later be rediscovered and empirically studied by Robins in the eighteenth century (although the phenomenon is commonly known as the 'Magnus effect', after Magnus, who studied it much later and who, in fact, cites Robins in his work [229]) [219,325].

(2) Newton was, however, unable to improve upon Galileo's parabolic solution by introducing the quadratic air resistance into the equations. The first accurate ballistics tables were produced around the mid-eighteenth century by Robins, who obtained them empirically, and by Euler, who used Robins' data to fix the required empirical constants and a numerical method to integrate the nonlinear equation describing the trajectories based on Newton's squared-velocity drag law [325].

(3) This still did not resolve d'Alembert's paradox, since the fact that the Navier–Stokes equations coincide with Euler's equations in the limit of very large Reynolds number (large velocities over viscosity ratio), which yielded zero drag, seemed inconsistent with the known empirical observation that the drag did not actually tend to zero with viscosity at high velocities. A resolution of this problem did not come until 1904 with the work of Prandtl (see [6]), who introduced the concept of the boundary layer, which provided a sound transition between both conflicting models.

1.1.1 Numerical simulations

With the growing computational power available and the development of multiphase solvers, one may be tempted to drop the analytical route altogether and simply calculate the forces on individual particles by solving the Navier–Stokes equations around them. A particle-laden flow system is, after all, a set of solid bodies submerged in a fluid, and could in principle be simulated by using their surfaces to impose the corresponding boundary conditions on the fluid, as in any fluid-structure interaction problem.

In fact, researchers are increasingly following this route, producing fully-resolved simulations to study systems of submerged particles (the number of particles in each study is indicated between parentheses): in Johnson and Tezduyar [186] the settling of a sphere in a container was studied with a body-fitted mesh (); in [273] the fluidization of a bed of spheres was studied numerically and experimentally (); in [138] the lattice-Boltzmann method was used to simulate spheres settling in a stationary fluid (); while in [349] an embedded-body approach was used to study particle clustering in turbulent flows (). These methods are particularly helpful in basic research, as they can be used to calibrate empirical models [384], to study qualitative aspects of the flow, including turbulent effects [344], and to study complex systems including non-Newtonian flow models and particle interactions in exquisite detail [107,250].

But the numbers in the previous paragraph are still clearly far too small for the vast majority of particle-laden flows of interest: sand grains or pebbles in a river bed, soil slurries, avalanches and pyroclastic flows; sedimentation tanks and vessels used to clarify water or separate small particles by size; pneumatic conveying systems carrying sugar, flour, coal, seeds, nuts and conglomerate pellets and, in general, solid matter in gas or liquid ducts; fluidized beds, used in the chemical industries to enhance chemical reactions and homogenize and dry particulates; bubbles in nuclear reactors; oil, rock and gas bubbles in oil wells; microscopic biological systems like blood cells and platelets in small vessels or suspended particles in the bronchioles, such as contaminants or vaporised sprays; plankton and organic matter in the ocean [231]; drops in a forming cloud, snow crystals and solid contaminants, like rubber particles, in the atmosphere; and even rocky particles in low-density atmospheres, relevant to the study of planet formation [358].

Thus, one soon realizes that this approach remains hopeless as a tool to study most systems as a whole; and it is expected to remain so for a long time, unless some unexpected, revolutionary discovery leads to a jump of several orders of magnitude in computational power.

On the other hand, note that if we give up resolving the flow around the particles and instead model the hydrodynamic interactions using a coarse-scale description of the flow, we immediately obtain a radical reduction in the computational cost.

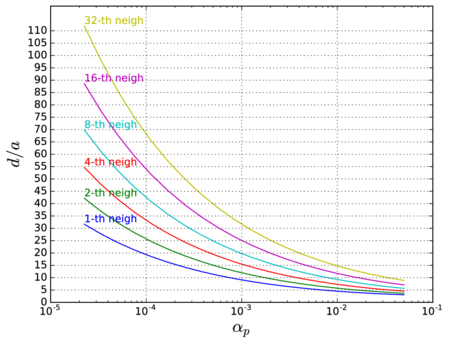

A quick calculation illustrates this point very convincingly. First, take a suspension of particles that we will consider to be of uniform size for simplicity. Let us assume that the discretization size required to run a fully resolved simulation (where all the scales present in the fluid motion are considered) is one tenth of the diameter of the particles. Note that it is unreasonable to think that a substantially coarser size should be sufficient to capture the dynamics of the flow around the particles in sufficient detail.

On the other hand, consider the same problem solved by an alternative, coarse-grained approach, where the hydrodynamic interactions are modelled as a distributed effect on the fluid, and where only the details of the flow much larger than the particles (say, ten times as big) are numerically resolved. Note that with such an approach it would hardly make sense to consider finer resolutions at all because, at scales comparable to the particle size, the real flow would be locally distorted by the presence of the individual particles that are being averaged over. Without the information about the individual distortions, the solution would necessarily be very poorly modelled at such fine scales. In other words, it would just not pay off to consider that level of detail.

Comparing both situations, one has that the number of computational cells required per unit volume scales as $(10/d)^3$ in the first case and as $(1/(10d))^3$ in the second case, where $d$ denotes the particle diameter. That is a factor of $10^6$ in the number of cells between the two approaches, which amounts to a significantly greater number in terms of computational resources (1).
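The estimate can be checked with a few lines of Python. This is only a minimal sketch: the function name `cells_per_unit_volume` and the particle diameter value are illustrative assumptions, not part of the original work, and the cells are assumed to be cubic and uniform.

```python
def cells_per_unit_volume(cell_size: float, dim: int = 3) -> float:
    """Number of uniform cubic cells needed to tile a unit cube (hypothetical helper)."""
    return (1.0 / cell_size) ** dim


# Assumed, purely illustrative particle diameter (in metres).
d = 1.0e-3

# Fully resolved simulation: cell size equal to one tenth of the particle diameter.
resolved = cells_per_unit_volume(d / 10.0)

# Coarse-grained simulation: cell size equal to ten particle diameters.
coarse = cells_per_unit_volume(10.0 * d)

# The ratio does not depend on d: (100)^3 in three dimensions.
ratio = resolved / coarse
print(f"resolved/coarse cell count ratio: {ratio:.3g}")
```

Note that the ratio is independent of the chosen diameter, since only the quotient of the two cell sizes (a factor of one hundred) enters the result.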

Note that such a strategy corresponds to a mixed-scale method, where one phase (the particles) is described to a finer level of detail (individual particles) than the other (the fluid), which is described to a coarser level. This work is concerned with precisely this type of mixed method.

Clearly, one can continue the process of coarsening the description level further by homogenizing the particle phase as well, giving rise to a multicomponent continuum description of the particulate system. The method most consistent with this philosophy is the so-called Euler–Euler (EE) method [180], also called the Eulerian-Eulerian or two-fluid model; as opposed to the Euler–Lagrange (EL) method, which refers to the mixed method discussed above. In the EE method the motion of the particles is determined by a set of differential conservation equations, analogous to those of the fluid phase. Thus, both the particle and the fluid phases have their associated systems of conservation equations. The equations that correspond to each phase are coupled to each other through the averaged volume fractions (volumetric proportions), whose unknown values vary pointwise, as well as by momentum exchange terms (see Appendix H).

The equations involved can be derived following a purely formal procedure that relates the different averaged (large-scale) variables of interest. Several averaging methods exist, including time, volume and ensemble averages, but they all lead to similar equations [109]. As a consequence, and in a completely analogous situation to that encountered in turbulence modelling, the resulting equations include a number of terms that depend on finer-scale details, and that must be closed (expressed as a function of the averaged variables) to obtain a well-posed problem. This closure is normally based on the introduction of new physical models that most often include unknown parameters to be calibrated from experiments [182]. These methods have been applied to the description of particle-laden flows for many years; especially in the chemical [332,139,192] and the nuclear [196,158] industries.

In fact, EE-type methods remain the only realistic approach in many industrial settings. The reason is found again in the large numbers involved, which penalize the explicit description of each and every particle in the domain. This is a limitation common to all particle-based methods, including the discrete element method (DEM), since most industrial problems involve a much larger number of particles than what is computationally feasible.

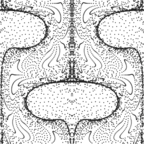

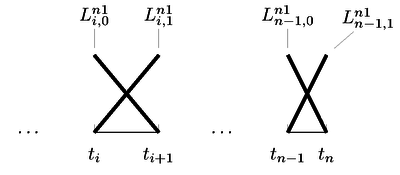

The latter point is illustrated in Fig. 1, where a number of industrial applications are located in a graph of domain size versus number of particles. As a reference, simulations involving several million particles (green curve) are representative of what can typically be achieved on a personal computer, while a few billion particles (red curve) correspond to what can ordinarily be achieved on powerful computer clusters, using massive parallelization techniques. The violet $2\times10^{12}$ curve is representative of the numbers of particles included in today's largest cosmological simulations [281] and can be interpreted as an extremely optimistic upper bound. By mentally locating one's preferred application in the plot of Fig. 1 one quickly arrives at an intuitive grasp of the limitations of particle-based methods as a numerical tool to simulate real systems with realistic numbers of particles.

Figure 1: Number of particles associated with cubic domains for a fixed proportion of volume occupied by the particles ($10^{-1}$) as a function of the equivalent side length of the domain. The curves corresponding to different numbers of particles are included, as well as the upper limit sizes for clay, silt and sand, as representative granular materials. The scattered points correspond to different industrial application examples: coal, salt and nuts pneumatic conveying (volume per linear meter for typical pipe diameters); fluidized bed experiments of Geldart [143], showing the different Geldart categories; and catalytic cracking (FCC) for the production of gasoline.
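The relation behind a plot of this kind can be sketched in Python as follows. This is a rough illustration under stated assumptions: the particles are taken to be monodisperse spheres, the function names `particle_count` and `max_side_length` are hypothetical, the 2 mm upper size for sand follows common soil classifications, and the particle budgets are round illustrative figures rather than values read from the figure.

```python
import math


def particle_count(side_length: float, diameter: float,
                   solid_fraction: float = 0.1) -> float:
    """Number of equal spheres filling a given volume fraction of a cubic domain."""
    particle_volume = math.pi * diameter ** 3 / 6.0
    return solid_fraction * side_length ** 3 / particle_volume


def max_side_length(n_particles: float, diameter: float,
                    solid_fraction: float = 0.1) -> float:
    """Side of the cubic domain that a given particle budget can cover (inverse relation)."""
    return diameter * (n_particles * math.pi / (6.0 * solid_fraction)) ** (1.0 / 3.0)


# Assumed upper size limit for sand grains, in metres (illustrative).
sand_diameter = 2.0e-3

# Illustrative particle budgets: workstation, cluster, extreme upper bound.
for budget in (1.0e6, 1.0e9, 2.0e12):
    side = max_side_length(budget, sand_diameter)
    print(f"{budget:.0e} particles -> cubic domain of side {side:.2f} m")
```

Because the side length grows only with the cube root of the number of particles, increasing the particle budget by three orders of magnitude enlarges the affordable domain by just a factor of ten.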

Nonetheless, it must be mentioned at this point that there exists the possibility of using a coarse-grained description, where a smaller-than-real number of (larger) particles is used instead of the one-to-one description [125,304,271] in analogy to what is common practice in molecular dynamics [295,176]. And, while the level of maturity of this approach is still low, with several problems still unresolved [200,258], the technology seems to be yielding promising results with spectacular computational gains [225].

Furthermore, even if we ignore the possibility of coarse-graining, hybrid methods and, in particular, CFD-DEM methods retain an interest due to their many advantages over exclusively continuum-based methods:

- One avoids the complications associated with a continuum-based description of granular matter, with still several open theoretical questions [329,297,22]; despite recent promising advances, mainly in the area of dry granular matter [163,110].

- The non-uniform granulometry of real materials can be reproduced naturally, while the same is extremely difficult to include in a continuum model. Often, the only practical alternative is to simplify the granulometry into a finite number of fixed sizes with different proportions and include as many phases as there are different sizes, with the associated multiplicity of laws and computational resources required. This point is actually included in the point above but deserves special mention.

- A lower degree of empiricism is expected, thanks to the lower-scale description of the solid phase.

- The dynamics of the particles are not averaged over, so that the actual mechanisms that dictate the behaviour of the particles can be understood directly, providing a more valuable qualitative picture that does not need to be reconstructed (which would be nontrivial or impossible) as a post-process. This advantage is particularly important close to the domain boundaries, where averaged methods tend to perform worst, due to the sharp transitions.

- The consideration of additional effects such as new types of interaction laws (e.g. electrostatic interaction) is natural and does not require the overall modification of the models in place, but usually only a straightforward additive term.

- One can take advantage of existing programs (e.g. DEM programs) and modify them in a simple way to adapt them to the interaction effects with the surrounding fluid.

So, although the number of real-world applications where hybrid methods can be applied is in practice limited, it is important to recognize their value as validation tools that operate at an intermediate scale between fully resolved models and continuum-only models. Therefore, in addition to their value as direct simulation tools for the industry, they are useful to feed the empirical data needed to close EE-type equations and to understand the full-size objects of study through the analysis of simplified, small-size systems [132].

(1) In computational fluid dynamics, the computational cost is mostly determined by the resolution of the system of equations resulting from the spatial discretization, whose size scales as the number of unknowns. The computational cost of solving the system can at the very best be linear (that is, grow proportionally to the number of unknowns) for the latest-generation iterative solvers [146], although such scaling is difficult to achieve in practice. Furthermore, the smallest time step also needs to be modified, typically decreased proportionally to the element size, in order to preserve the desired accuracy increase; see the discussion around the Courant–Friedrichs–Lewy condition in Section 4.7.7.
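As a rough illustration of the argument in this footnote, the sketch below estimates how the total cost of a simulation grows under uniform mesh refinement. It assumes a uniform mesh in `dim` dimensions, a solver whose cost grows as a power of the number of unknowns (with exponent 1 for the optimal, linear case) and a time step proportional to the element size; the function name and its parameters are hypothetical.

```python
def refinement_cost_factor(refinement, dim=3, solver_exponent=1.0):
    """Cost multiplier when the element size h is divided by `refinement`.

    Assumptions: unknowns ~ h**(-dim), solver cost ~ unknowns**solver_exponent
    per time step, and time step ~ h (so the number of steps ~ 1 / h).
    """
    unknowns_factor = refinement ** dim   # more unknowns per time step
    steps_factor = refinement             # more time steps for the same time span
    return unknowns_factor ** solver_exponent * steps_factor

for r in (2, 4, 8):
    print(f"h -> h/{r}: cost multiplied by {refinement_cost_factor(r):.0f}")
```

Even in the best case of a linear solver, halving the element size in three dimensions multiplies the cost by sixteen under these assumptions.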

1.1.2 Industrial Doctorate

This dissertation has been completed with financial support from the Doctorat Industrial grant from the Generalitat de Catalunya, which supports research carried out as part of the work of a company in collaboration with a research center and with a focus on applied research. In our case, the role of the research center was played by the International Center for Numerical Methods in Engineering (CIMNE) and the role of the company was played by Computational and Information Technologies S.A. (CITECHSA).

The main objective of CITECHSA was to start the development of a computational fluid dynamics (CFD) tool able to simulate a wide range of particle-laden flows. When the work began, a new DEM application was at an early stage of development by the DEM group of Kratos (see next section), which motivated our choice of the DEM for the simulation of the particles' phase. This choice also created a strong dependence between the DEM developments and those concerning the coupling, which explains the author's enrolment in the DEM group at CIMNE at around that time.

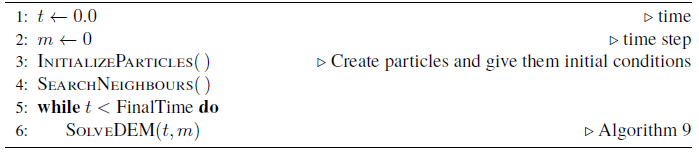

As a consequence, DEM-related tasks have occupied a substantial part of the research time. However, most of the resulting algorithmic developments do not go beyond the state of the art, which is sufficiently documented. Furthermore, the application examples and analyses of interest are too detached from the main thread of this work to include them. Thus, only a very brief account of some DEM-related elements is given in Appendix A. Nonetheless, the reader should keep in mind the DEM background of the author, and the (perhaps) clear emphasis given to the particles' side of the research.

1.2 Objectives and methodology

The objectives of the research reported in this document are the following:

- To develop an algorithm that combines the discrete element method and the finite element method to simulate particle-laden flows with the following list of requisites:

  - capability of dealing with a wide range of regimes, including the possibility to have regions with dense and dispersed suspensions simultaneously

  - use of the finite element method to discretize the fluid

  - use of the discrete element method to model the particles

- To study the range of applicability of the Maxey–Riley equation as a model for the motion of the individual particles submerged in a fluid, improving the current knowledge on the subject and generating, where possible, practical estimates of direct application to numerical modelling.

- To study current alternatives for the numerical treatment of the history term in the equation of motion and compare them.

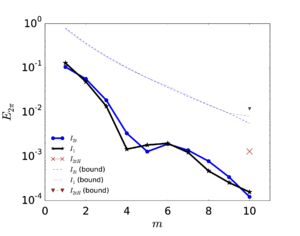

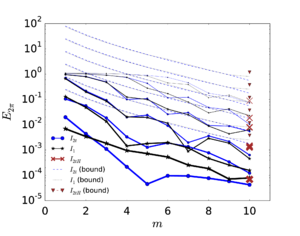

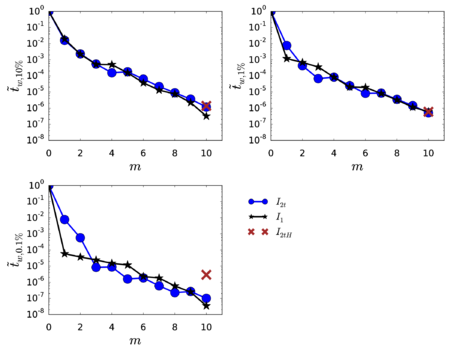

- To improve on the method of quadrature (the history term involves a time integral, as will be explained later) proposed by van Hinsberg et al. [353] and provide a detailed study of its efficiency and accuracy, providing convincing evidence that it is not necessary to neglect this term to have an efficient numerical method.

- To report an account of relevant application examples of the proposed strategy with interest to the industry, as well as of the different technologies developed for their particular requirements.

- To generalize a stabilized finite element method and use it to discretize the backward-coupled flow equations.

- To develop a suitable inter-phase coupling strategy.

Our research activities have consisted in a combination of bibliographical investigation, numerical analysis (designing and running numerical experiments using existing or new code, mainly on a personal computer and sometimes on a small cluster) and programming.

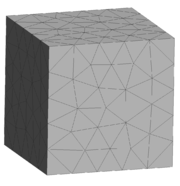

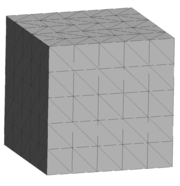

The bulk of the programming work has been carried out within the framework Kratos Multiphysics [88], or Kratos for short. Kratos is based on C++, with a Python-based external layer. It is organized according to a marked object-oriented philosophy that, at its coarsest level, can be modelled as a central core (Kratos Core) connected to a list of independent applications (FluidDynamicsApplication, StructuralApplication, DEMApplication, etc.).

The core contains the definition of the fundamental abstract classes common to a large part of the implementations. It provides a common language and a set of generic protocols and algorithmic tools like linear solvers, search algorithms or input/output utilities.

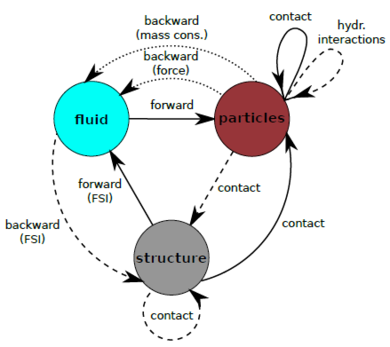

On the other hand, the developments related to specific problems, such as solving the Navier–Stokes equations or performing structural analyses, are coded in the corresponding specialized applications (in this case FluidDynamicsApplication and StructuralApplication). Moreover, an application can relate any subset of other, existing applications to solve a more complex problem. In order to fulfil our particular goals, we have created SwimmingDEMApplication, which couples FluidDynamicsApplication [82,311] (1) to DEMApplication [64,177].

FluidDynamicsApplication is an Eulerian-description, finite element method-based CFD simulator and it is in charge of solving the fluid equations of motion. DEMApplication is a general-purpose discrete element method simulation suite that is responsible for tracking the particles, computing contacts between them and evolving their motion in time.

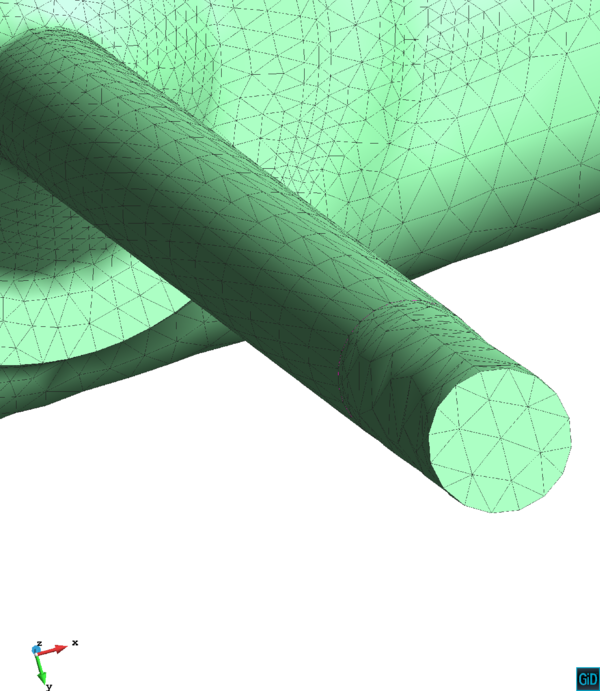

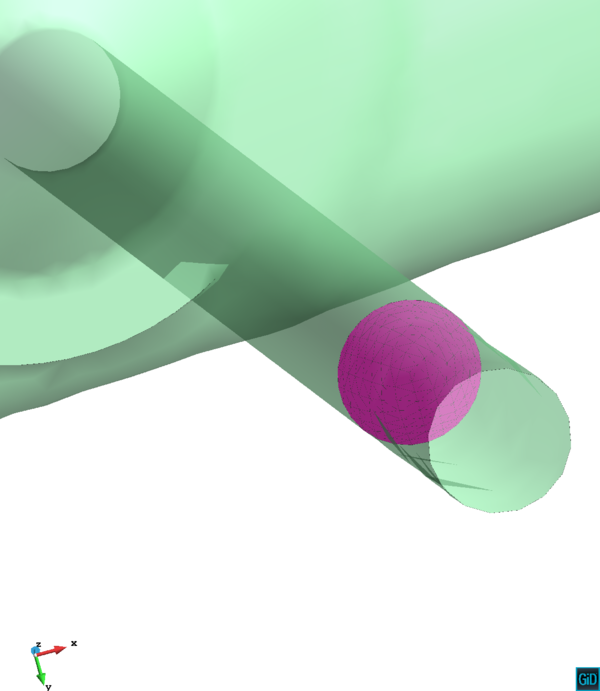

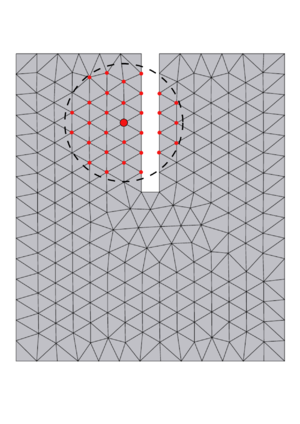

SwimmingDEMApplication contains all the inter-phase coupling tools, the hydrodynamic calculation algorithms, the modified fluid finite elements necessary to solve the modified backward-coupled flow equations (see Chapter 4) and several utilities that were developed where no similar tool was found in the core, but that have not yet reached a stage of maturity to make them suitable for their implementation in the Kratos core (for instance, the derivative recovery tools, see Section 4.4).

The whole of Kratos, including all the applications mentioned above and many others, is freely available for download (2). FluidDynamicsApplication has an interface usable from the pre-/post-processor GiD [244], which, by default, is included in Kratos. Moreover, DEMApplication powers the four free packages grouped under the name DEMPack [65], also based on the GiD interface and also included in Kratos. Among these, FDEMPack gives access to most of the capabilities of SwimmingDEMApplication, developed during the course of the research reported in this work.

(1) Our application has been designed so as to facilitate changing the fluid-solving application. Incidentally, it has already been coupled to PFEMApplication, a Lagrangian description fluid solver based on the particle finite element method (PFEM) [268,66].

(2) The code can be retrieved from https://github.com/KratosMultiphysics/Kratos (retrieved on June 15, 2018).

1.3 Outline of this document

The core of this work is contained in Chapters 2 to 4. Of these, Chapter 2 is the most theoretical in character. It is devoted to the analysis of the Maxey–Riley equation, as a fundamental model for the description of the motion of individual particles in a fluid, which at this point is assumed to be described by a known field. Section 2.2 systematically explores the range of validity of the model with respect to several criteria, first in terms of the nondimensional values that appear in the equation itself and later in terms of additional variables involving a selection of simplifications introduced a priori in the development of the theory. In Section 2.3 we apply a scaling analysis to the different terms in the model, providing estimates of their relative magnitude that may be applied in practice to simplify the basic model by neglecting the less important terms. Section 2.4 contains a summary of the main results of the chapter, including Tables 2, 4 and 5, which list the most important numerical estimates.

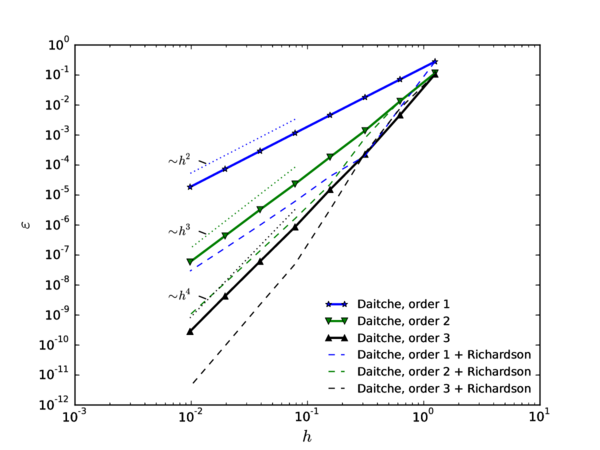

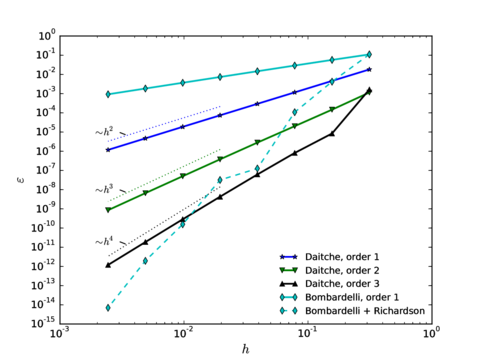

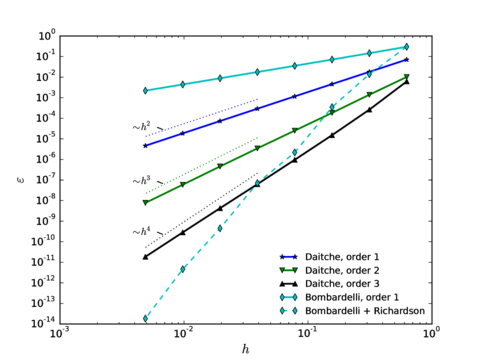

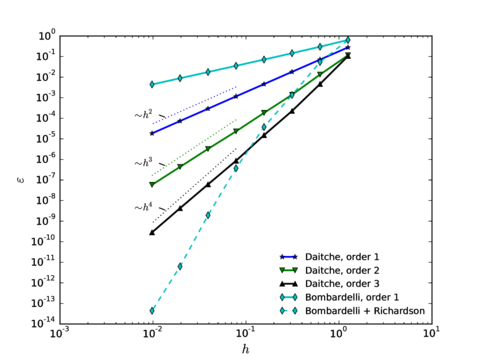

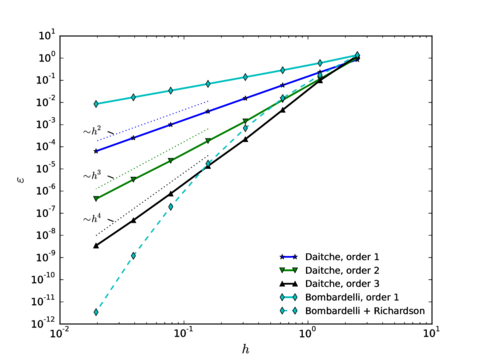

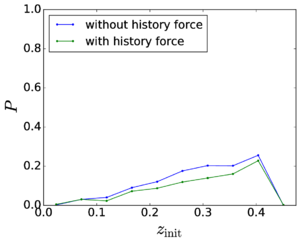

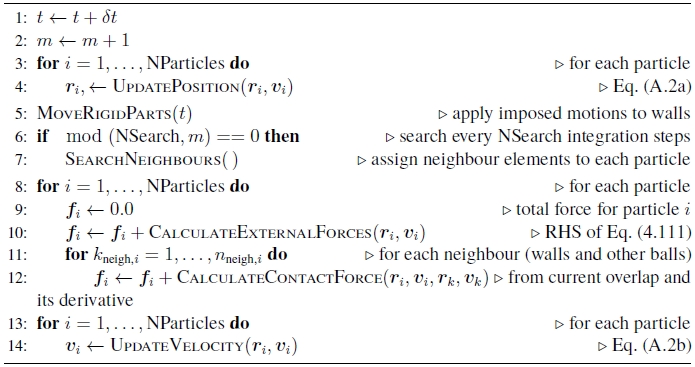

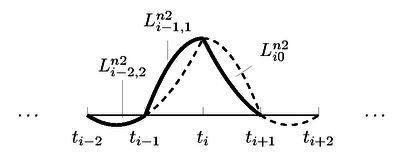

Chapter 3 shifts from the analytic point of view of Chapter 2 to the study of different numerical techniques to solve the Maxey–Riley equation, providing a detailed description of the algorithms involved. We emphasize the treatment of the history force term, whose numerical solution remains challenging, and which is often ignored for this reason. In Section 3.2 we review the state of the art concerning the numerical treatment of this term, comparing the accuracies of several methods of quadrature. Section 3.3 contains the description of our method of choice for the quadrature, proposed by van Hinsberg et al. [353], and of the extensions and improvements we have developed based on it. Section 3.4 contains the description of the overall algorithm for the time integration of the equation of motion including the history term. In Section 3.5 we pause to connect the general theory of fractional calculus to the Maxey–Riley equation, a connection that had only been very superficially sketched before. This section appears at this point to take advantage of the terminology and concepts introduced in the previous developments. Section 3.6 is devoted to a systematic analysis of the accuracy and efficiency of the numerical method of solution, applying it to a sequence of benchmarks of increasing complexity. We close the chapter with a summary of the most important results and developments.

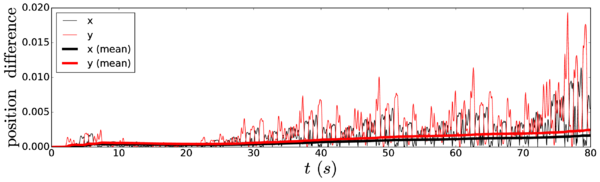

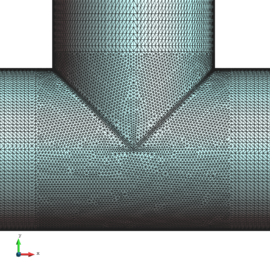

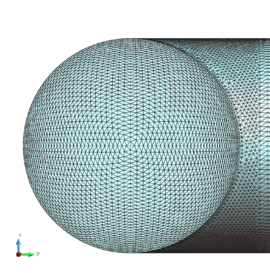

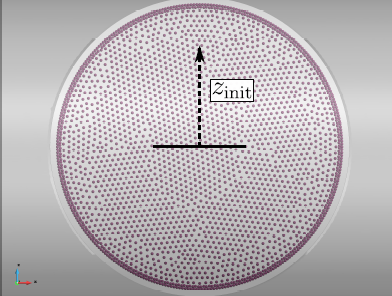

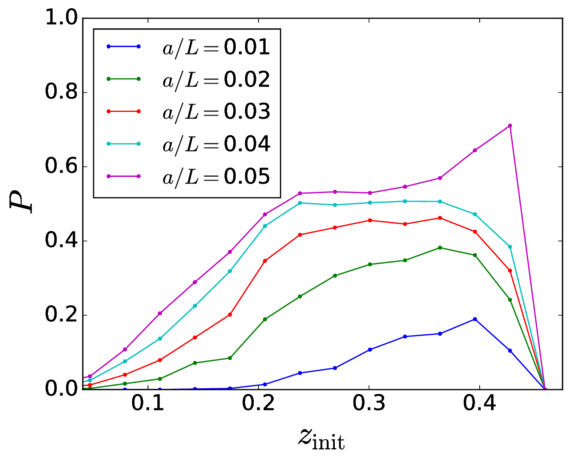

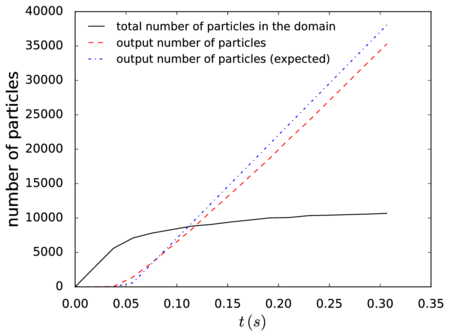

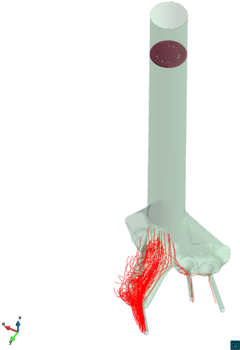

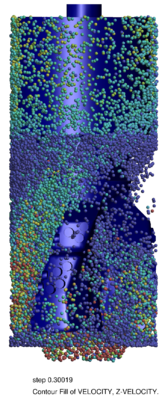

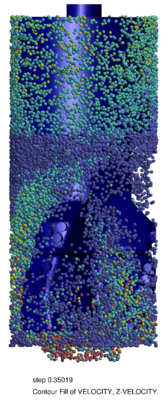

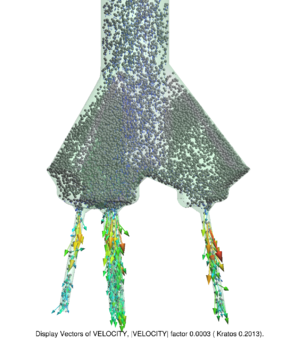

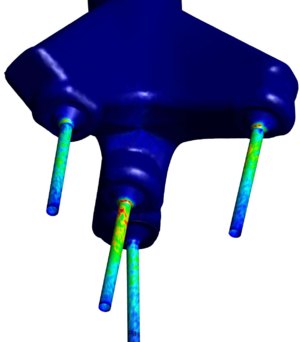

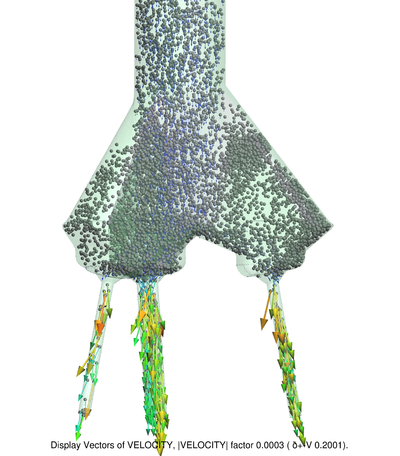

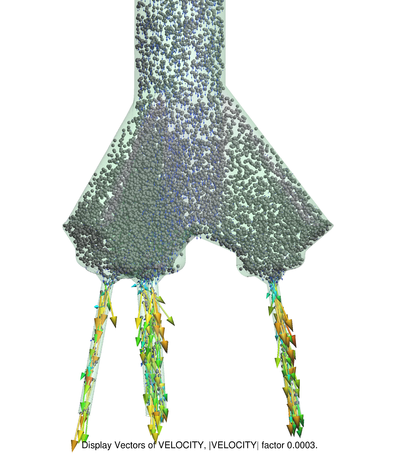

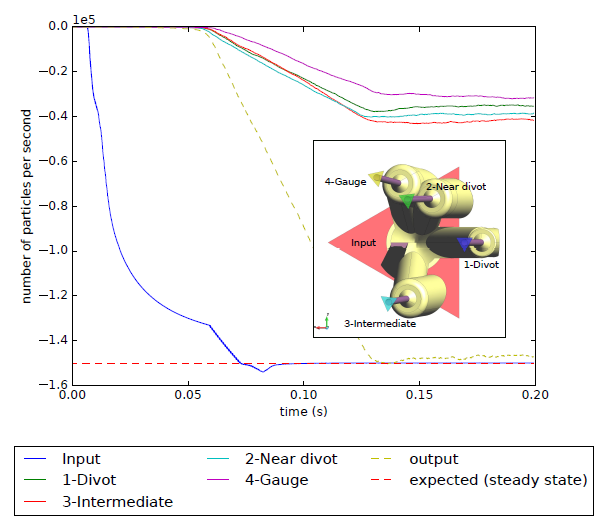

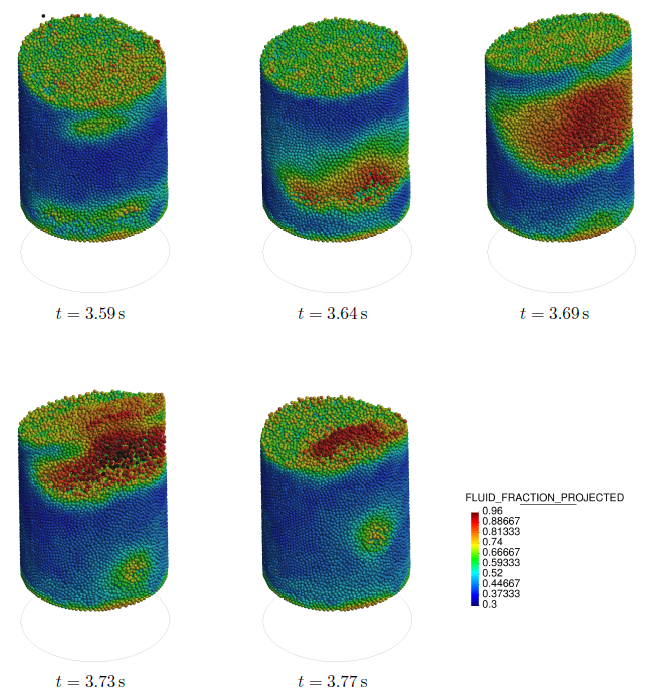

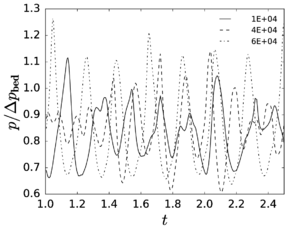

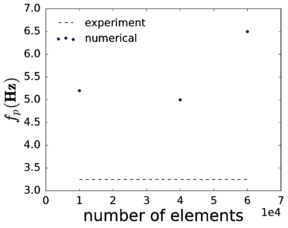

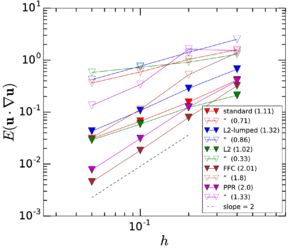

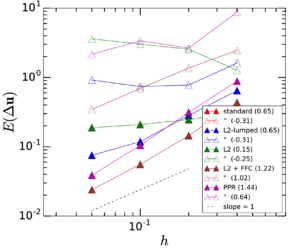

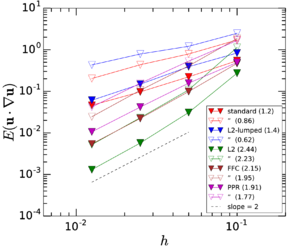

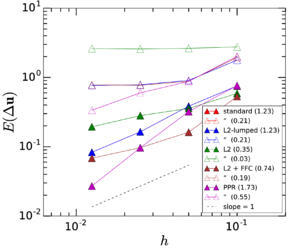

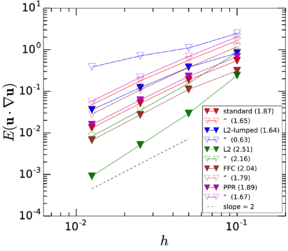

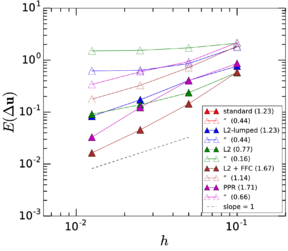

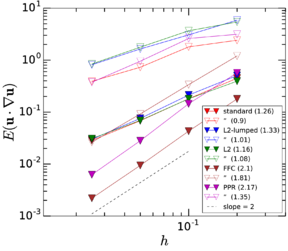

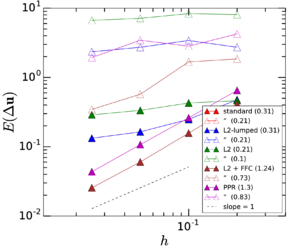

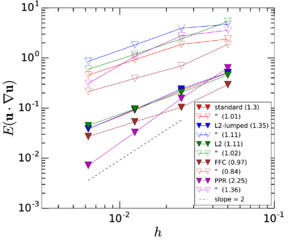

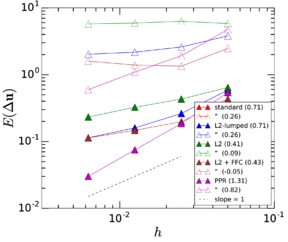

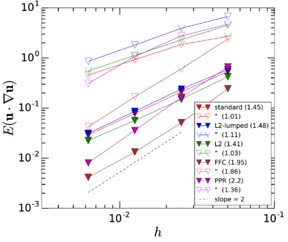

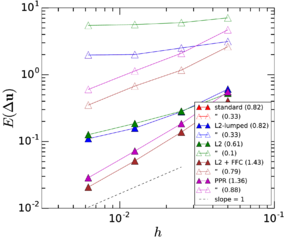

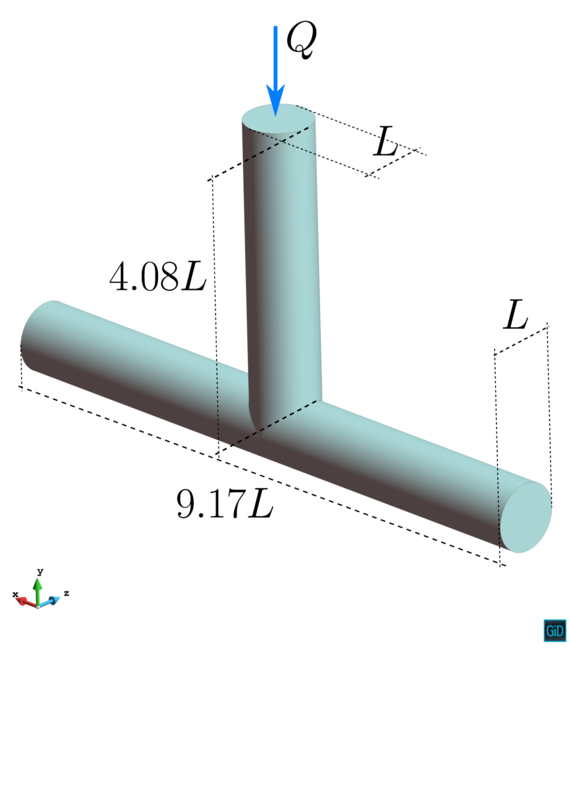

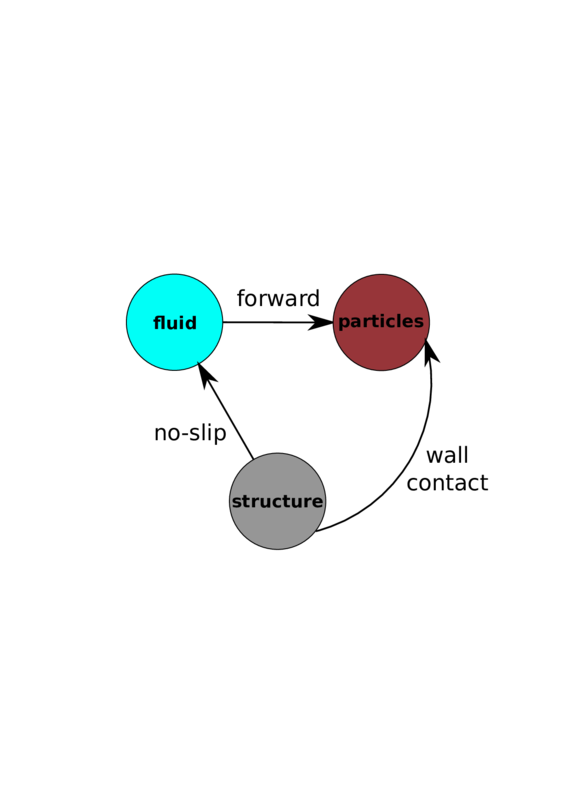

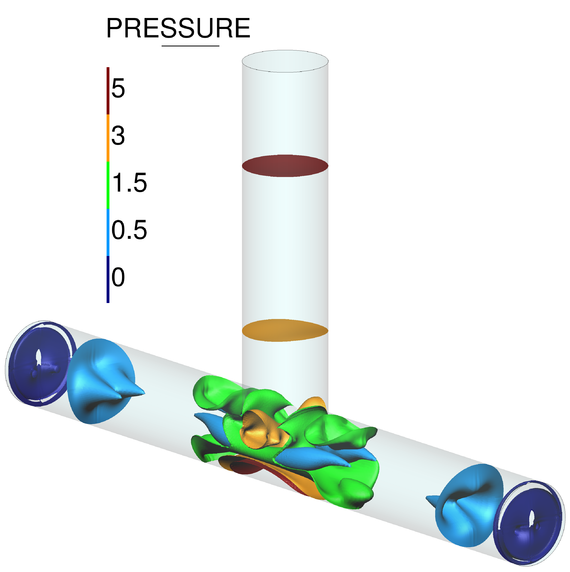

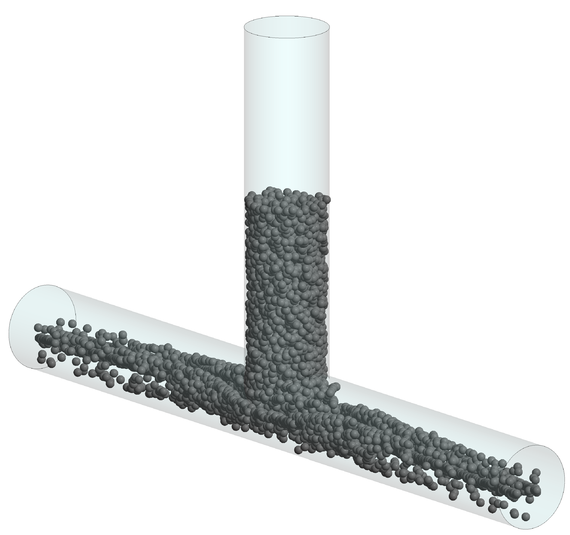

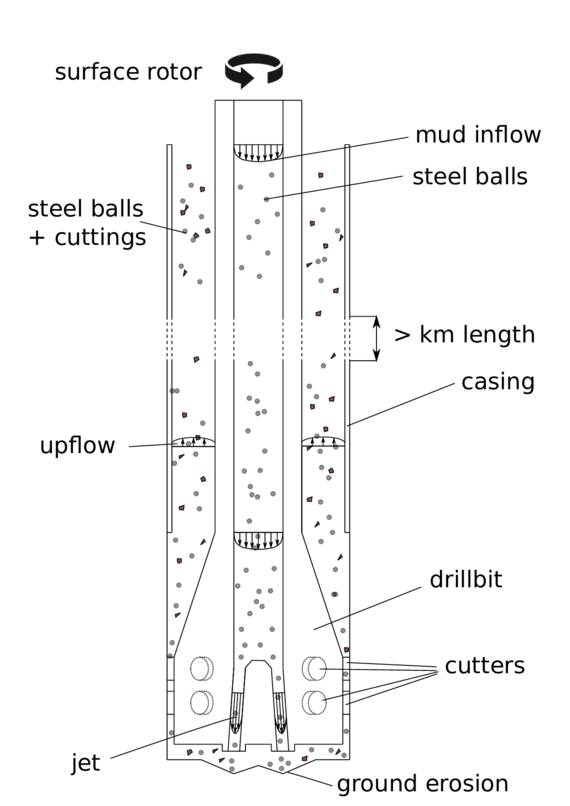

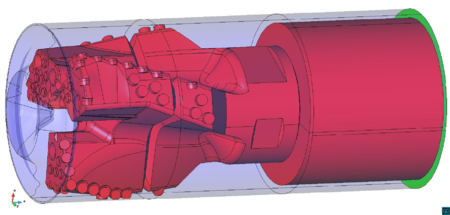

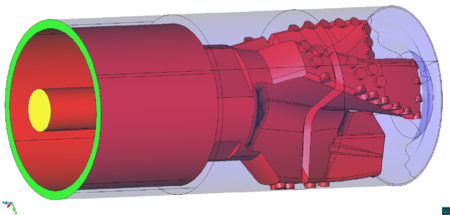

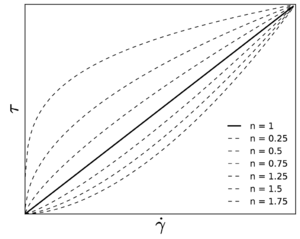

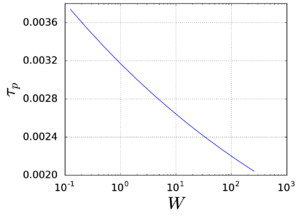

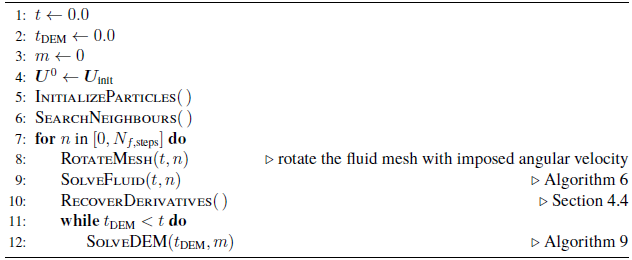

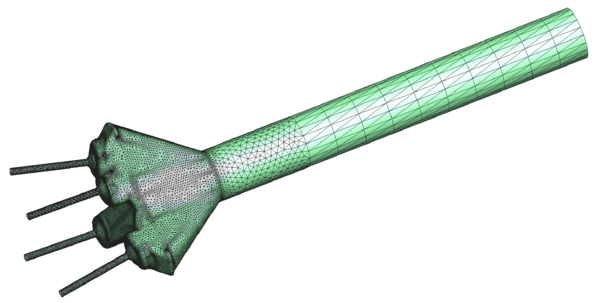

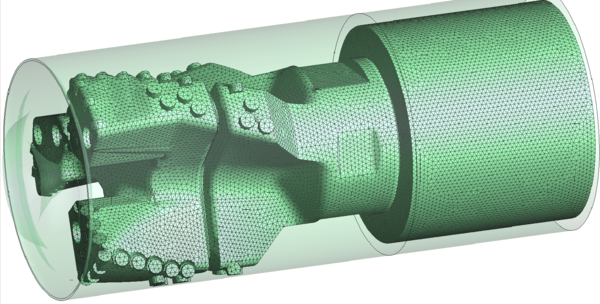

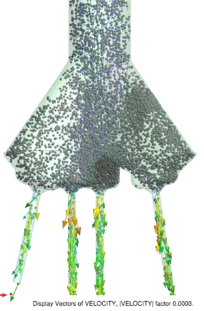

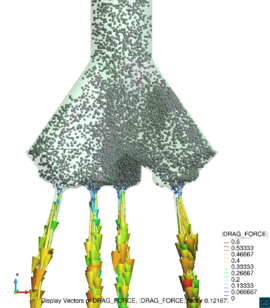

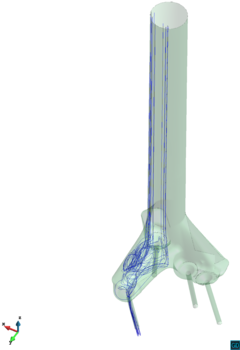

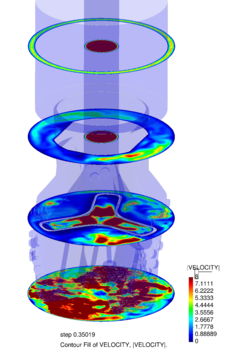

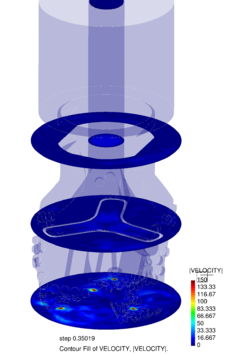

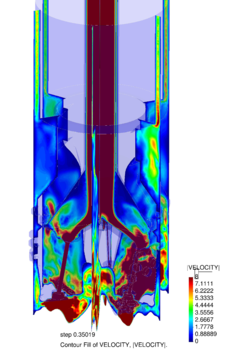

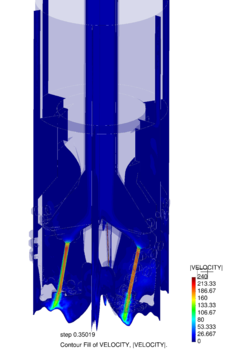

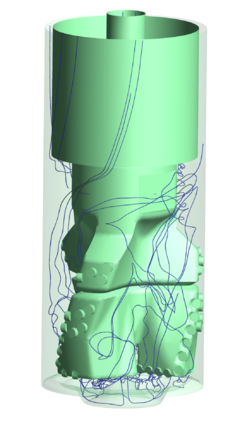

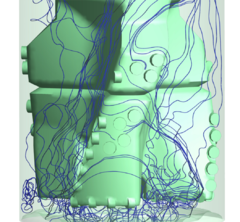

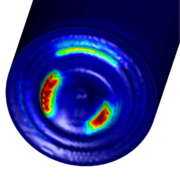

Chapter 4 moves even further toward practice, being eminently applied in nature. We consider a series of applications representative of different families of industrial problems, describing several developments involved in their solution as we go along. Section 4.2 discusses how the equation of motion considered in the previous chapters can be modified using empirical relations to extend its range of applicability beyond the limits studied in Chapter 2, as will be necessary in the industrial applications considered later on. In Section 4.3 we introduce the fluid phase as a problem to be solved for the first time, for which we make use of a well-established stabilized finite element formulation. We describe it in sufficient detail to clarify the terminology and set the stage for the generalizations introduced later in the chapter. In Section 4.4 we discuss the problem of derivative recovery, as a step necessary to obtain accurate estimates of the derivatives of the fluid field, once a solution is produced by the fluid solver. We give a brief state of the art and compare several alternatives compatible with the finite element method. The particle-fluid coupling is described very briefly in Section 4.5, where we basically bring together the different algorithmic parts involved. We then turn to the first application example, which is the subject of Section 4.6. It consists of the numerical simulation of the phenomenon of air bubble trapping in T-shaped pipe junctions, which was first studied only recently by Vigolo et al. [356]. This test exemplifies the use of the one-way coupled strategy, with no inter-particle interactions, representative of low-Reynolds number internal flows with low-density suspensions of small particles. We continue with the next test application in Section 4.7, where we study particle impact drilling, a technology used in the oil and gas industries to produce high penetration rate drilling systems. Here we again make use of a one-way coupled strategy for the fluid-particles coupling, although this time we consider the inter-particle contact as well. This is by far the most detailed of the examples provided and corresponds to consultancy work carried out during the Doctorat Industrial. Before moving on to the final example, the theory related to the backward-coupled flow must be introduced, based on the theory of multicomponent flows (its rudiments can be found in Appendix H) and the general finite element formulation introduced in Section 4.3, which we specialize for this problem. Following this theoretical interlude, we present the last application example, a fluidized bed of Geldart-D particles, in Section 4.9. We close with a summary of the chapter in Section 4.10.

We end the work with Chapter 5, where we give a schematic summary of our developments. In Section 5.2 we discuss some of our current and future work. There is indeed a considerable number of research lines that have been opened with this work, but that we have not followed as far as we would have liked due to the breadth of our scope.

We provide a series of appendices that carry substantial content. Our intent in doing so has been more to lighten the main text than to expand it with additional content. Perhaps the idea of modularity, so central to programming, has contaminated our writing style, but we hope it helps comprehension. For instance, Appendix A contains most of the little text devoted to the discrete element method, while Appendix H is devoted to the description of the continuum theory related to the backward-coupled flow. The other appendices include formulas and numerical data of interest mainly to the reader willing to program the associated algorithms.

Finally, the Nomenclature section provides a quick guide for the symbols used throughout. Only the symbols that are exclusively used in a single context, close to their definitions, have been left out of the list, to avoid overpopulating it.

2 The Maxey–Riley equation

2.1 Introduction

The Maxey–Riley Equation (MRE) [238] describes the motion of a small, rigid, spherical particle immersed in an incompressible, Newtonian fluid. It is an expression of Newton's Second Law, relating the acceleration of the particle, taken as a point-mass, to the sum of the external forces (usually just its weight) plus the total force applied on it by its containing fluid. The smallness of the particle justifies the two fundamental assumptions made in its derivation: 1) that the relative flow can be described by the Stokes equations near the particle; and 2) that the flow is well represented by its second-order Taylor expansion about the particle's center (near the particle). The equation is thus applicable to the study of disperse particulate flows, for systems where the low volume fractions justify the consideration of each particle as an isolated body. Examples of such systems can be found in the study of warm-cloud rain initiation [122,314,363], turbulent dispersion of microscopic organisms [275,365], the dynamics of suspended sediments [324,155] and also in more fundamental areas, such as the study of turbulent dispersion [324,27,53].

The hypotheses involved in the derivation of the MRE are very often too stringent for the applications of interest. For such cases, there exists a wide variety of models applicable outside its strict range of applicability. These models, which usually involve a high degree of empiricism, are nonetheless very often constructed on the basis of the MRE (e.g. they are asymptotically convergent to it), showing similar mathematical properties and physical significance. Examples of such extensions apply to higher particle Reynolds numbers, nonspherical shape or finite-sized particles; see Section 4.2. The analysis and numerical treatment of the MRE is therefore of major importance from both the applied and the fundamental perspectives. This chapter is devoted to the study of several aspects related to the MRE, more specifically to its range of applicability and scaling properties. Our goal is to provide a solid ground on which to base engineering decisions concerning its use.

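Let us recall the exact formulation of the MRE. Let $\mathbf{u}(\mathbf{x}, t)$, with $\mathbf{x} \in \Omega \subset \mathbb{R}^d$, define the background fluid velocity field, where $\Omega$ is some spatial open domain contained in the fluid and $d$ is the ambient spatial dimension (either two or three). Let $\mathbf{r}(t)$ be the trajectory of a particle through this fluid, and $\mathbf{v}(t)$ its velocity. The MRE states:

$$
\begin{aligned}
m_p \frac{d\mathbf{v}}{dt} ={}& \underbrace{m_f \frac{D\mathbf{u}}{Dt}}_{\mathbf{F}_U}
+ \underbrace{\frac{1}{2} m_f \left( \frac{D}{Dt}\left(\mathbf{u} + \frac{a^2}{10}\nabla^2\mathbf{u}\right) - \frac{d\mathbf{v}}{dt} \right)}_{\mathbf{F}_A}
+ \underbrace{6\pi a \mu \left(\mathbf{u} - \mathbf{v} + \frac{a^2}{6}\nabla^2\mathbf{u}\right)}_{\mathbf{F}_D} \\
&+ \underbrace{6 a^2 \sqrt{\pi \rho_f \mu}\, \frac{d}{dt}\int_{t_0}^{t} \frac{\mathbf{u} - \mathbf{v} + \frac{a^2}{6}\nabla^2\mathbf{u}}{\sqrt{t - \tau}}\, d\tau}_{\mathbf{F}_H}
+ \underbrace{(m_p - m_f)\,\mathbf{g}}_{\mathbf{F}_W}
\end{aligned}
\tag{2.1}
$$

where $m_p$ is the mass of the particle, $m_f$ is the mass of the displaced fluid volume, $a$ is the particle radius, $\rho_f$ is the density of the fluid, $\mu$ is the dynamic viscosity of the fluid ($\nu = \mu/\rho_f$ is the kinematic viscosity) and $\mathbf{g}$ the acceleration due to gravity. The notation $D/Dt$ refers to the material derivative of the fluid, and all fluid quantities are evaluated at the position of the particle. Eq. 2.1, together with $d\mathbf{r}/dt = \mathbf{v}$ and the initial conditions $\mathbf{r}(t_0) = \mathbf{r}_0$ and $\mathbf{v}(t_0) = \mathbf{v}_0$, forms an initial value problem that must be solved to obtain the trajectory of the particle.

The terms on the right-hand side of Eq. 2.1 have distinct physical interpretations and can be identified as: the force applied to the volume displaced by the particle in the undisturbed flow ($\mathbf{F}_U$), the added mass or virtual mass term ($\mathbf{F}_A$), the Stokes drag term ($\mathbf{F}_D$), the Boussinesq–Basset history term ($\mathbf{F}_H$) and the term due to the weight of the particle minus its (hydrostatic) buoyancy ($\mathbf{F}_W$).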

The terms bearing second order derivatives are the second order corrections, known as Faxén corrections, that take into account the finite size of the particle with respect to the second-order spatial variations of the flow field around it, see Section 2.2.2.

The exact formulation of the MRE as originally given by Maxey [238] differs slightly from the version presented here, which adopts the Auton et al. [14] form of the added mass force. This formulation contains the material acceleration of the fluid continuum (i.e. the derivative following the fluid), evaluated at the current position of the particle. The original formulation presented instead the total derivative (i.e. the rate of change of the fluid velocity when following the particle). The former form, derived under the assumption of inviscid flow in [14], is however valid to the same order as the other terms derived by Maxey and Riley within their assumptions, while remaining accurate at higher Reynolds numbers [361].
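The difference between the two derivatives mentioned above can be written out explicitly. Writing $\mathbf{u}$ for the fluid velocity and $\mathbf{v}$ for the particle velocity (symbols assumed here for illustration), the derivative following the fluid and the derivative following the particle are

$$
\frac{D\mathbf{u}}{Dt} = \frac{\partial \mathbf{u}}{\partial t} + \mathbf{u}\cdot\nabla\mathbf{u},
\qquad
\frac{d\mathbf{u}}{dt} = \frac{\partial \mathbf{u}}{\partial t} + \mathbf{v}\cdot\nabla\mathbf{u},
\qquad
\frac{D\mathbf{u}}{Dt} - \frac{d\mathbf{u}}{dt} = (\mathbf{u} - \mathbf{v})\cdot\nabla\mathbf{u},
$$

so the two forms of the added mass force differ by a term proportional to the slip velocity times the velocity gradient, which is small when both the slip velocity and the gradient are small.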

Another difference with respect to the original formulation involves the Boussinesq–Basset term, in which the time derivative appears outside the integral sign in Eq. 2.1, as opposed to inside, as in the original Maxey and Riley paper. As pointed out in [89], this is a more general formulation, which allows for a nonzero initial relative velocity (and is also equivalent to the generalization later given by Maxey [240], see [222]; see also Appendix C).
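The role of the initial relative velocity can be seen from a short calculation. As a sketch, let $\mathbf{w}(\tau)$ denote the (sufficiently smooth) relative velocity appearing in the numerator of the history integral and $t_0$ the initial time (both symbols are assumed here for illustration). The change of variable $s = t - \tau$ followed by differentiation under the integral sign gives

$$
\frac{d}{dt}\int_{t_0}^{t} \frac{\mathbf{w}(\tau)}{\sqrt{t-\tau}}\,d\tau
= \frac{d}{dt}\int_{0}^{t-t_0} \frac{\mathbf{w}(t-s)}{\sqrt{s}}\,ds
= \frac{\mathbf{w}(t_0)}{\sqrt{t-t_0}} + \int_{t_0}^{t} \frac{1}{\sqrt{t-\tau}}\frac{d\mathbf{w}}{d\tau}\,d\tau .
$$

The form with the derivative inside the integral is thus recovered only when $\mathbf{w}(t_0) = \mathbf{0}$; otherwise the extra term, singular at $t = t_0$, accounts for the initial slip.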

2.2 Range of validity

Let us rewrite the MRE in dimensionless form. This will serve to introduce some fundamental dimensionless numbers. For the sake of notational simplicity, the same symbols have been overloaded to designate the dependent and independent variables, although here they represent nondimensional quantities.

|

|

(2.2) |

where

|

|

(2.3) |

where and , and are the dimensional scalars by which (and ), and have been normalized. These quantities are conventionally defined such that is a characteristic length scale of the flow and is defined such that is the characteristic magnitude of the gradient of the unperturbed velocity near the particle location, while is defined such that . By the term unperturbed we refer to the flow resulting from subtracting the (Stokes) flow produced by the presence of the particle under the same far-field boundary conditions from the actual flow. The application of Buckingham's Pi Theorem to this equation reveals that the set of dimensionless parameters that describe it is in fact minimal. By defining , we obtain the relation

|

|

(2.4) |

The quantity is commonly referred to as the particle's response time [216]. It is equal to the time it takes for a static particle to accelerate to (where ) parts of the surrounding fluid velocity, under the action of a constant, uniform flow (and neglecting ). The Stokes number can thus be interpreted as the ratio of the particle's characteristic response time to the fluid's characteristic time, and it measures the dynamical importance of the particle's inertia. It is useful to additionally define

|

|

(2.5) |

where is the characteristic magnitude of the relative (or slip) velocity . The quantity is known as the particle's Reynolds number and it characterizes the importance of inertial effects versus viscous effects in the flow produced by the relative motion between the particle and the background flow.
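The interpretation of the response time given above can be checked numerically. The following is a minimal sketch, assuming that only the Stokes drag acts on the particle and that the response time takes its standard form $\tau_p = m_p/(6\pi a\mu) = 2\rho_p a^2/(9\mu)$, with the Stokes number taken as the ratio of $\tau_p$ to the flow time scale $L/U$; the function names and the sand-in-water values are hypothetical.

```python
import math

def response_time(radius, particle_density, dynamic_viscosity):
    """Stokes response time tau_p = 2 rho_p a^2 / (9 mu) (assumed standard form)."""
    return 2.0 * particle_density * radius ** 2 / (9.0 * dynamic_viscosity)

def velocity_fraction(t, tau_p):
    """Fraction of a constant, uniform fluid velocity reached at time t by a
    particle released at rest, retaining only the Stokes drag."""
    return 1.0 - math.exp(-t / tau_p)

def stokes_number(tau_p, length_scale, velocity_scale):
    """Ratio of the particle response time to the flow time scale L / U."""
    return tau_p * velocity_scale / length_scale

# Hypothetical case: sand grain (radius 50 um, 2650 kg/m^3) in water.
tau_p = response_time(radius=50e-6, particle_density=2650.0, dynamic_viscosity=1.0e-3)
print(f"tau_p = {tau_p:.3e} s")
print(f"v/u at t = tau_p: {velocity_fraction(tau_p, tau_p):.4f}")
print(f"St for L = 1 cm, U = 0.1 m/s: {stokes_number(tau_p, 1e-2, 0.1):.3e}")
```

At $t = \tau_p$ the particle has reached a fraction $1 - 1/e$ of the fluid velocity, independently of the material properties chosen.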

Eq. 2.1 is derived from the full Navier–Stokes problem as an asymptotic relation, valid as [238]

|

|

(2.6) |

In practice, these asymptotic relations are interpreted as requiring the left-hand sides to be much smaller than one (1), that is

|

Note that the LHS of Eq. 2.7 can be understood as a sort of downscaled version of the ambient to the size of the particle, since the velocity variation seen by the particle scales as . This condition, along with Eq. 2.8, can be used to simplify the Navier–Stokes equations to the Stokes equation in the derivation of the MRE by resorting to scaling analysis of the full Navier–Stokes equations [238]. The approximation affects all the forces except , which is treated independently and only relies on Eq. 2.9 (and a sufficient degree of smoothness of the flow).

The condition Eq. 2.9 is necessary to ensure that the disturbance flow caused by the presence of the sphere is well approximated at the surface by its second order Taylor expansion around its center. This approximation remains good as long as the mean flow around the particle is approximately quadratic, and the error is expected to drop very fast with decreasing (as its third power). Note that, in practice, an accurate representation of the fluid velocity field will require a sufficient resolution of whichever numerical method is employed in its computation. The calculation of the spatial derivatives requires special attention. This issue will be addressed in Chapter 4.
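The smallness conditions discussed above lend themselves to a quick numerical check. The sketch below assumes that they take the form found in Maxey and Riley's derivation [238], namely that the shear Reynolds number $a^2U/(\nu L)$, the particle Reynolds number $aw/\nu$ (based here on the radius, which may differ by a constant factor from the definition used in this text) and the size ratio $a/L$ are all much smaller than one. The threshold of $10^{-2}$ is one possible reading of "much smaller than one"; all names and numerical values are hypothetical.

```python
def mre_smallness_parameters(radius, length_scale, velocity_scale,
                             slip_speed, kinematic_viscosity):
    """Return the three parameters assumed to control the validity of the MRE."""
    return {
        "shear Reynolds number a^2 U / (nu L)":
            radius ** 2 * velocity_scale / (kinematic_viscosity * length_scale),
        "particle Reynolds number a w / nu":
            radius * slip_speed / kinematic_viscosity,
        "size ratio a / L":
            radius / length_scale,
    }

def within_mre_range(parameters, threshold=1e-2):
    """True if every parameter is at most `threshold` (an assumed convention)."""
    return all(value <= threshold for value in parameters.values())

# Hypothetical case: 10 um particle in water, L = 1 cm, U = 0.1 m/s, slip 0.5 mm/s.
params = mre_smallness_parameters(radius=1e-5, length_scale=1e-2,
                                  velocity_scale=0.1, slip_speed=5e-4,
                                  kinematic_viscosity=1e-6)
for name, value in params.items():
    print(f"{name}: {value:.2e}")
print("within assumed range:", within_mre_range(params))
```

Since the shear Reynolds number grows with the square of the radius, it is typically the first condition to fail as the particle size increases for a fixed flow.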

Our main goal in this section is to gain a more complete understanding of the limits of applicability of the MRE than that directly offered by Eqs. 2.7-2.9. The original motivation for such an endeavour was to provide a bounded parametric space for the subsequent discussions about the relative importance of the different terms in the MRE, so as to make the analysis simpler and more precise. But we have come to recognize that such analysis should also be useful in itself, as a reference for the engineer or investigator concerned about the suitability of the equation for their particular problems. We are thus interested in answering questions such as:

- What kind of effects are likely to appear first when moving away from its range of applicability?

- At what approximate pace are these effects expected to grow?

The survey we present is inevitably incomplete, though this kind of analysis is best realized through an iterative, cumulative process to which we hope to contribute a part. There have certainly been some notable efforts in this direction, the most important of which is the systematic analysis that E. Loth has undertaken over the last fifteen years [216,219,217,218,221]. However, his interest was mainly focussed outside the strict range of applicability of the MRE, exploring the ways in which it should be extended rather than its limits of applicability. Other relevant works are the best practice guideline [334] and the book [85].

Let us start by deriving some bounds on the dimensionless parameters in Eq. 2.3. First, the relative density can be extremely large for gas flows, so that in practice it can take any positive value. For liquid flows, however, it is bounded by a value of order $1\times10^{1}$ for any commonly encountered materials (for a suspension of osmium particles in gasoline one has , most likely an upper value for ordinary applications). Note that for atmospheric air instead of water this value would be about a thousand times greater.

At the other extreme, the relative density of air bubbles in water can be practically taken to be zero with a minimal change in the value of (typically, small bubbles and low Reynolds numbers lead to spherical bubbles; see [47] for an in-depth discussion on the circumstances under which bubbles can be modelled as rigid and spherical).

Moving on to the Stokes number, we find that it can also be bounded above by relating it to the particle Reynolds number as

|

|

(2.10) |

where we have interpreted Eq. 2.9 as and used the fact that for very large the relative velocities will be of the order of or larger than the absolute velocities of the smallest scales of the flow. We will come back to this issue in Section 2.3. Using Eq. 2.10 and the above argument for liquids allows us to bound by the particle Reynolds number and in many cases (e.g. water-suspended mineral particles) by an additional order of magnitude, so that if , then , speaking in order-of-magnitude terms. In atmospheric air, a similar argument would lead to bound below two to three orders of magnitude above . Using the more conservative would lead to lower upper bounds. We summarize the situation in Table 1, which includes some estimates for the upper bounds corresponding to different representative material combinations.

| Continuous phase | Dispersed phase: air | Dispersed phase: water | Dispersed phase: sand | Dispersed phase: copper |
| --- | --- | --- | --- | --- |
| air | 1 | 10 | 50 | 100 |
| water | 1 | 1 | 1 | 5 |

Apart from Eqs. 2.7-2.9 and Eq. 2.10, there are additional assumptions involved in the derivation of Eq. 2.1 that we want to visit. To begin with, the problem is posed for a single sphere in an infinite domain. That is, the presence of nearby particles and of solid boundaries is not contemplated, though these elements are most often present in the applications. Mere qualitative restrictions are of no practical use to the engineer, other than as a reminder of them being a source of error. Thus, there is a strong need to provide predictive quantitative measures too. Unfortunately, to the best of our knowledge, these are still open issues, and not much more than a few rough rules of thumb can be found in the literature.

It is also of interest to assess the range of validity of the different terms in the MRE, one at a time. The reason is that each term describes a distinct physical effect, with its own characteristic response to variations in the dimensionless parameters that characterize the flow. Indeed, in a given situation it might be of utmost importance to correctly calculate the steady drag force and not, say, the added mass force. Take for example the study of the deposition rate of microscopic particles in a container. Due to their tiny inertia the particles reach their terminal velocity very quickly. After the short transient the added mass force vanishes, having a negligible impact on the much larger deposition time. In such cases, it is common practice to simply neglect the added mass force altogether, making the range of applicability of its particular formulation irrelevant to all effects. This sort of argument has made it possible to justify the application of the MRE to a remarkably wide range of situations, which has been stretched even further by tweaking specific terms. A paradigmatic example of this practice is the use of empirical drag force laws combined with the usual formulation for the other terms (and usually neglecting the history force). We will revisit this type of approach in Chapter 4, where we will need to move outside the range of applicability of the MRE.
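The deposition example can be quantified with a short calculation. The sketch below assumes a sphere settling in a quiescent fluid under Stokes drag, added mass and weight minus buoyancy only (the history force is neglected), so that the velocity relaxes toward the Stokes terminal velocity $2(\rho_p - \rho_f) g a^2/(9\mu)$ over a time of order $2(\rho_p + \rho_f/2)a^2/(9\mu)$. The function names, the material values and the container height are hypothetical.

```python
G = 9.81  # gravitational acceleration in m/s^2

def terminal_velocity(radius, rho_p, rho_f, mu, g=G):
    """Stokes settling velocity of a sphere in a quiescent fluid."""
    return 2.0 * (rho_p - rho_f) * g * radius ** 2 / (9.0 * mu)

def transient_time(radius, rho_p, rho_f, mu):
    """Relaxation time including added mass: (m_p + m_f / 2) / (6 pi a mu)."""
    return 2.0 * (rho_p + 0.5 * rho_f) * radius ** 2 / (9.0 * mu)

# Hypothetical case: silica particle of radius 1 um settling 10 cm in water.
radius, rho_p, rho_f, mu, height = 1e-6, 2650.0, 1000.0, 1.0e-3, 0.1
v_t = terminal_velocity(radius, rho_p, rho_f, mu)
t_transient = transient_time(radius, rho_p, rho_f, mu)
t_deposition = height / v_t

print(f"terminal velocity: {v_t:.3e} m/s")
print(f"transient time:    {t_transient:.3e} s")
print(f"deposition time:   {t_deposition:.3e} s")
print(f"ratio:             {t_transient / t_deposition:.1e}")
```

For this hypothetical case the transient is many orders of magnitude shorter than the deposition time, which is the sense in which the added mass force can be neglected.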

It is important to realize that the use of this kind of empirical extension almost invariably relies on the validity of the same additive structure of the different forces present in the MRE. Encouragingly, there has been an important body of research supporting such additive division from both a theoretical [223,14] and an empirical [222] point of view, in a variety of situations and well beyond the range of applicability of the MRE.

All these questions are the subject of the following sections. We will look at the different directions in which the hypotheses involved in the derivation of the MRE can fail, in a systematic way. Since we are interested in the first effects, we will assume that it is adequate to look at each term independently. Furthermore, the additive nature of the different forces suggests that each one can be treated independently. We will therefore do so when possible, making an effort to set specific numerical bounds to the applicability ranges.

(1) The imprecision in this requirement must be supplemented by experience or by some conventional rule adapted to the particular fields of application. A common criterion is to interpret as meaning at least two orders of magnitude smaller than one or [197].

2.2.1 Inertial effects: first finite-Reₚ effects

The well-known Stokes solution of the steady, low Reynolds number flow past a sphere (see Eq.~2.31) is obtained by completely neglecting fluid inertia. By applying the no-slip boundary conditions on the particle surface and the far-field velocity conditions at infinity, and expanding the stream function in powers of $Re_p$, the hydrodynamic force on the particle can be calculated to leading order, yielding the drag force of the MRE without the Faxén terms (this is the problem solved by Stokes in 1851). However, if one wishes to increase the order of this approximation following the same procedure, one soon realizes that there is no way to fulfil the far-field boundary conditions, since the higher-order contributions to the perturbation caused by the particle do not vanish at infinity. This phenomenon is known as Whitehead's paradox [248].

Its resolution was made possible by ideas from Oseen, who noted that the assumption by which inertial terms are disregarded (under Eq.~2.8) is only valid near the particle, where viscous effects dominate. Far from the particle (in particular at distances of the order of the particle radius divided by $Re_p$, see [284]), the approximation breaks down. This implies that there is an inherent inconsistency in requiring that the Stokes solution be valid in an infinite domain, and that it is necessary to consider inertia far from the particle in order to calculate the higher-order corrections to the drag force. The final word on the issue was nonetheless given by Proudman and Pearson [284], who, using the technique of matched asymptotic expansions, re-derived Oseen's inertial, first-order correction to the steady drag force, corrected a flaw in Oseen's original reasoning and added a further term to the expansion:

(2.11)

This way, the contribution from the outer region becomes a correction of the steady drag force, whose leading term, given by , could be used to provide the order of magnitude of this correction.

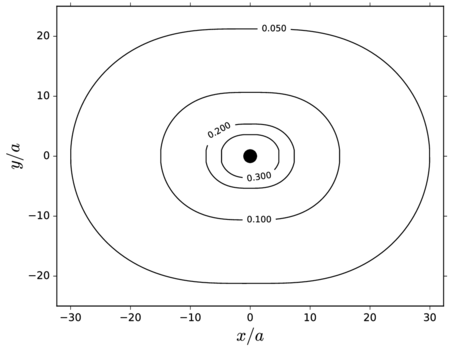

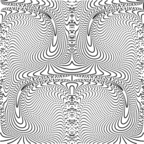

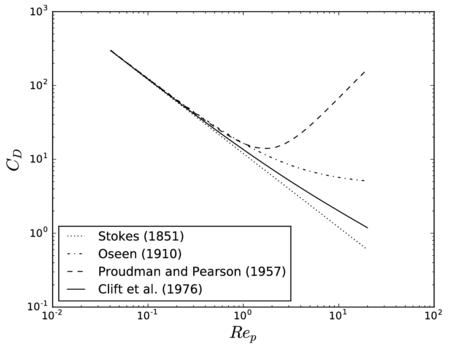

In order to illustrate how it could be used in practice, let us assume that we wish to establish the upper limit to the particle Reynolds number that ensures that the correction is smaller than 5%, as a convention to fix the range of validity of the MRE. Then the leading term in Eq.~2.11 predicts this to happen for , and by this point the error in this expression is only , taking as a reference the empirical drag coefficient by Clift et al. (1978) (which has a root mean square error of with respect to the empirical data gathered by Brown and Lawler [50] covering the range ). This means that its range of validity is large enough to estimate the first effects of inertia for error tolerances lower than 5%. In Fig. 2 all these different approximations to the drag force are compared.

Figure 2: Drag force predicted by different approximations, as measured by the drag coefficient $C_D$.
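As a rough illustration of how the leading inertial term can be turned into a validity bound, the following minimal sketch evaluates the first two inertial corrections to the Stokes drag and inverts the leading one for a given tolerance. It assumes the classical form of the expansion written with a Reynolds number based on the particle radius, in which the relative correction is $\frac{3}{8}Re + \frac{9}{40}Re^2 \ln Re$. If the particle Reynolds number is based on the diameter, the coefficients must be rescaled accordingly. All function names are hypothetical.

```python
import math

# Assumption: Reynolds number based on the particle RADIUS, Re = a*|w|/nu,
# as in the classical form of the Proudman-Pearson expansion. For a
# diameter-based definition the two coefficients below must be rescaled.
OSEEN_COEFFICIENT = 3.0 / 8.0
LOG_COEFFICIENT = 9.0 / 40.0


def oseen_correction(reynolds):
    """Leading-order relative correction to the Stokes drag."""
    return OSEEN_COEFFICIENT * reynolds


def two_term_correction(reynolds):
    """Relative correction including the Re^2 ln(Re) term."""
    if reynolds <= 0.0:
        return 0.0
    log_term = LOG_COEFFICIENT * reynolds**2 * math.log(reynolds)
    return oseen_correction(reynolds) + log_term


def max_reynolds_for_tolerance(tolerance):
    """Largest Re for which the leading correction stays below `tolerance`."""
    return tolerance / OSEEN_COEFFICIENT


if __name__ == "__main__":
    re_max = max_reynolds_for_tolerance(0.05)
    print(f"Re_max for a 5% leading correction: {re_max:.4f}")
    print(f"two-term correction at Re_max: {two_term_correction(re_max):.4f}")
```

Since $\ln Re < 0$ for $Re < 1$, the second term reduces the correction, so a bound obtained from the leading term alone is slightly conservative under these assumptions.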

The above expression of the force is only valid for long-term steady motions. Lovalenti and Brady [223] studied the motion of a small sphere immersed in an arbitrary space- and time-varying flow, although considering the particle small enough that the undisturbed flow can be taken as uniform over the diameter of the particle. The problem is thus the generalization of the MRE (excluding Faxén terms, as these authors assume flow uniformity; see Section 2.2.1) for small, though non-zero, particle Reynolds number. Their analysis is based on a different approach from the matched asymptotic expansions used by Proudman and Pearson [284]. In fact, they find an expression for the flow that is uniformly valid over the whole domain to first order in $Re_p$. The hydrodynamic force on the particle is then computed to first order in $Re_p$ by approximating the resulting integrals of the stress tensor. The result is an expression for the steady and unsteady forces at finite Reynolds number. The inertial effects include the steady Oseen correction to the drag, as well as corrections to the unsteady forces, namely the Basset force and the added mass force. These corrections confirm the observation from fully resolved numerical simulations [243] of a higher rate of convergence of the particle velocity to terminal conditions (i.e. it varies but is for smoothly accelerating motions where the slip velocity tends to increase) than that predicted by the MRE, which is $t^{-1/2}$, as given by the Boussinesq–Basset kernel. According to Lovalenti and Brady [223]: "This fact may explain why experimentalists have measured a steady terminal velocity when the length of their apparatus would not have permitted this if the Basset force was correct."

This change in the decay regime of unsteady forces only applies at long times. For short-time-scale motions the unsteady force from the MRE is accurate. The boundary between both regimes is marked by the time it takes for vorticity to diffuse away from the particle up to the so-called Oseen distance, where inertial and viscous contributions become of the same order. At that point, convective transport takes over as the dominant physical mechanism for the transport of vorticity, explaining the change in regime. From the application point of view it is important to determine whether the particle motion is dominated by high frequencies, so that it is mainly explained by the MRE unsteady forces, or whether its characteristic frequencies are low and thus the (substantially more complicated) expressions provided by Lovalenti and Brady apply. The key is to compare the characteristic frequencies of the particle's motion with the quantity , the viscous diffusion time, which corresponds to the order of magnitude of the time it takes vorticity to diffuse away to the Oseen length. In other words, the MRE applies whenever [224]

(2.12)

After a little algebra, this condition can be rewritten as a function of the usual nondimensional parameters as

(2.13)

As the value of increases, the condition above may cease to be fulfilled and the form of the history term must be corrected using, for example, the formulation given by Lovalenti and Brady [223]; see also Lovalenti and Brady [224]. In turbulent dispersion, the importance of this correction will not be critical for most flows such that , except perhaps for solid particles in gas. The same conclusion was reached by [211] based on the empirical formulation for the history force of Mei and Adrian [243].
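The time-scale comparison behind this criterion can be sketched numerically. The snippet below is only an order-of-magnitude illustration under stated assumptions: the Oseen length is estimated as $\nu/|w|$ (kinematic viscosity over slip speed), the vorticity diffusion time as that length squared over $\nu$, and "much smaller than" is interpreted as a factor of 100, following the two-orders-of-magnitude convention mentioned earlier. Function names and the `margin` parameter are hypothetical.

```python
import math


def oseen_length(kinematic_viscosity, slip_speed):
    """Order-of-magnitude distance at which inertia balances viscosity."""
    if slip_speed == 0.0:
        return math.inf  # no slip: the Stokes region extends indefinitely
    return kinematic_viscosity / abs(slip_speed)


def vorticity_diffusion_time(kinematic_viscosity, slip_speed):
    """Time for vorticity to diffuse out to the Oseen length."""
    length = oseen_length(kinematic_viscosity, slip_speed)
    return length**2 / kinematic_viscosity


def mre_history_force_applicable(motion_time_scale, kinematic_viscosity,
                                 slip_speed, margin=100.0):
    """True if the motion is fast compared with the diffusion time.

    Assumption: 'much smaller' means smaller by at least `margin`.
    """
    diffusion_time = vorticity_diffusion_time(kinematic_viscosity, slip_speed)
    return motion_time_scale * margin <= diffusion_time


if __name__ == "__main__":
    nu_water = 1.0e-6  # m^2/s, illustrative value
    slip = 1.0e-3      # m/s, illustrative value
    print(vorticity_diffusion_time(nu_water, slip))
    print(mre_history_force_applicable(0.005, nu_water, slip))
    print(mre_history_force_applicable(0.1, nu_water, slip))
```

With these illustrative values the diffusion time is of the order of one second, so motions with time scales of a few milliseconds fall within the short-time regime, while motions on the scale of a tenth of a second do not.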

The effect of vorticity

As stated, the derivations in Lovalenti and Brady [223] assume a uniform flow at the scale of the particle. If one allows for some non-uniformity to be present in the flow, additional effects appear. In particular, this allows for a break in the symmetry of the flow on the plane orthogonal to the direction of , which gives rise to lateral forces on the particle that are known as lift forces. Famously, Saffman [299] (with the corrections in [300]) gave the correct expression, to first order in , for the lift force that an isolated, non-rotating particle experiences under constant shear (linear non-uniformity of the flow).

(2.14)

where is the slip velocity. This result is valid under the restrictions , and ; where the shear Reynolds number is defined by

(2.15)

that is, the particle Reynolds number multiplied by a dimensionless shear rate gradient.

Unfortunately, the typical values for in turbulence are often smaller than those of [364], invalidating the application of the formulation. However, in this case the expression is in fact overestimating the lift force [364] and so, without loss of generality, we may apply the formulation as an upper bound for the remaining discussion.

But the fluid vorticity can arise due to the solid rotation of the fluid as well, also giving rise to a lift. Its low- analytical formula was provided by Herron et al. [164] and reads

(2.16)

which has a coefficient about 2x101 larger compared to . This expression is subject to the restriction . Note that and are incompatible and are only strictly valid for their corresponding ideal fluid motions. In fact, Candelier and Angilella [56] have proved analytically that for a particle settling in a solid-body rotation fluid, the lift force can even take the opposite sign as that indicated by Eq.~2.14, which is valid only for pure shear, steady flows. In this case, furthermore, the relative motion is not stationary, due to the migration of the particle in the radial directions.

Indeed, as with rectilinear motion, the relative flow unsteadiness also generates history-dependent contributions. These have been studied in [60,55] for pure shear (generalizing Saffman's result) and solid-body rotation, respectively. Nonetheless, the order of magnitude of the Saffman lift force compared to the steady drag force gives an idea of the magnitude of this correction. An analogous estimate can be obtained if the fluid motion is closer to that assumed in Eq.~2.16.

(2.17)

We will thus consider the lift force to be very small within the range of applicability of the MRE. Nonetheless, one must keep in mind that the correction is orthogonal to the drag force and thus its effect can introduce important systematic changes in the particles' trajectories, especially in flows with a predominant direction. Since the largest values of the shear rate are usually found close to the boundaries of the domain, this is very often the case anyway, for instance in internal flows. When considering the flow of particles along ducts, it is therefore important to consider the possibility that the inertial lift force might play a role, except for extremely small particles. In any case, we leave the study of the effect of boundaries for future work, see Section 2.2.6, and thus we will not elaborate on this point any further here.
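To get a feeling for the size of the shear-induced lift relative to the steady drag, the following minimal sketch evaluates their ratio. It assumes the classical magnitude of the Saffman lift, $6.46\,\mu a^2 |w| \sqrt{\kappa/\nu}$, with $a$ the particle radius, $\kappa$ the shear rate and $w$ the slip velocity, together with the Stokes drag $6\pi\mu a |w|$. The shear Reynolds number is taken here as $\kappa a^2/\nu$, which may differ by a constant factor from the definition in Eq.~2.15. All names are hypothetical.

```python
import math

# Assumption: numerical coefficient of the corrected Saffman lift formula
# when written in terms of the particle radius.
SAFFMAN_COEFFICIENT = 6.46


def shear_reynolds(shear_rate, radius, kinematic_viscosity):
    """Shear Reynolds number based on the particle radius (assumed form)."""
    return abs(shear_rate) * radius**2 / kinematic_viscosity


def saffman_to_stokes_ratio(shear_rate, radius, kinematic_viscosity):
    """Ratio |F_lift| / |F_drag|; the slip speed cancels out."""
    re_shear = shear_reynolds(shear_rate, radius, kinematic_viscosity)
    return SAFFMAN_COEFFICIENT / (6.0 * math.pi) * math.sqrt(re_shear)


if __name__ == "__main__":
    # Illustrative values: 10-micron-radius particle in water, shear 100 1/s.
    ratio = saffman_to_stokes_ratio(100.0, 1.0e-5, 1.0e-6)
    print(f"lift/drag = {ratio:.4f}")
```

Under these assumptions the ratio scales with the square root of the shear Reynolds number, so it decreases only slowly as the particle size is reduced.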

The effect of particle rotation

The linearity of Stokes flow means that, for a spherical particle, rotation and translation are decoupled. Indeed, the combined motion is the result of adding the effects of one and the other, and it is clear that, by symmetry, the relative rotation of the particle alone cannot produce a force in any particular direction. It is however necessary to examine how fast this rotation can be before the first inertial effects appear and invalidate the MRE.

The first-effects of the force modification due to non-zero particle angular velocity were calculated by Rubinow and Keller [294], to zeroth-order accuracy in . This is enough for our arguments, since the MRE assumes this effect to be very small. This formulation predicts that due to the rotation of the particle, there arises a lift force given by

(2.18)

where is the angular velocity of the particle. In other words: the Reynolds number defined by the slip velocity produced by rotation should be small enough. The lift force that arises due to the rotation of the particle relative to the fluid is generically called the Magnus effect [219].

The condition of having a negligible contribution to the force could then be expressed as a function of the particle Reynolds number associated with the rotational motion, given by

(2.19)

where . Note that , and so this is assumed to be very small within the theory of the MRE.

Maxey [238] argues that in turbulent flows the particle will tend to acquire a rotational velocity of the same order as the local shear rate. Taking into account that the first-order rotation-induced effect of inertia is a lift force with a magnitude of order [294], the effect is of order ; more precisely, we have

(2.20)

where give the estimation of local shear rate; and being the characteristic velocity and distance of the flow, which is certainly a small quantity by Eq.~2.7.
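For orientation, the order-of-magnitude argument can be written out as follows. This is a sketch rather than a restatement of Eq.~2.20. It assumes the classical magnitude of the Rubinow–Keller lift, $\pi a^3 \rho_f \Omega |w|$, and the Stokes drag $6\pi\mu a |w|$, with $a$ the particle radius, $\Omega$ the magnitude of the particle angular velocity, $w$ the slip velocity, and $U$ and $L$ the characteristic velocity and length of the flow (symbols chosen here for illustration):

```latex
\frac{|F_{\Omega}|}{|F_{D}|}
  \sim \frac{\pi a^{3} \rho_f \,\Omega\, |w|}{6 \pi \mu a \,|w|}
  = \frac{\Omega a^{2}}{6 \nu}
  \sim \frac{1}{6}\,\frac{a}{L}\,\frac{a U}{\nu},
  \qquad \text{with } \Omega \sim \frac{U}{L}.
```

The ratio is thus a Reynolds number based on the rotational velocity scale $\Omega a$, and it is small whenever the particle is small compared with the flow length scale and the Reynolds number $aU/\nu$ is not large.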

There are circumstances in which the particle angular velocity might be substantially higher. For instance, a collision against a wall or another particle might transform a good part of the translational kinetic energy into rotational energy, leading to high angular velocities, especially for the smaller particles (1). The fact that this force is perpendicular to the direction of translation makes this effect even more important. Nevertheless, collisions are not expected to be too frequent in this regime (see Section 2.2.4), which substantially alleviates this effect.

In this chapter we ignore the rotational degrees of freedom of the particle, except for the present discussion, on the grounds that these effects are, to first order of approximation, decoupled. Nonetheless, it is worth considering briefly what the rotational dynamics of a particle within the range of applicability of the Maxey–Riley regime looks like. Feuillebois and Lasek [128] derived the equation for the unsteady motion of a small, rigid sphere spinning in a viscous, Newtonian fluid, providing history-dependent terms that decay much faster than their translational analogues. In the steady-state limit, the equation reads

(2.21)

This equation allows us to calculate the rotational relaxation time as

(2.22)

from which a rotational Stokes number can be defined (assuming the steady-state forces are dominant)

(2.23)

which shows that this Stokes number is of the same order as the translational Stokes number, under the assumption that the fluid time scales are similar. Note that for large Stokes numbers the assumption above, namely that the particle's rotation will be of the order of the fluid vorticity most of the time (in fact equal to the local angular velocity, or one half of the vorticity, in the limit of zero inertia), can be violated, as the particles may not have time to adapt to the fluid vorticity. In such cases the analysis becomes more involved and it is likely that finite Reynolds number effects must be taken into account for the angular dynamics. Nonetheless, for this order-of-magnitude analysis the present formulation will suffice, especially since the rate at which the steady-state formulation becomes inaccurate is quite slow, and it still yields reasonable results at well past unity, see [219].
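The comparison between rotational and translational relaxation can be sketched as follows. The snippet is a minimal illustration under stated assumptions, not a transcription of Eqs.~2.22-2.23: it uses the steady Stokes torque $8\pi\mu a^3$ per unit relative angular velocity, the moment of inertia of a homogeneous sphere, $\frac{2}{5} m a^2$, and a translational relaxation time that neglects the added mass. All names are hypothetical.

```python
def rotational_relaxation_time(particle_density, radius, dynamic_viscosity):
    """I / (8*pi*mu*a^3) for a homogeneous sphere: rho_p * a^2 / (15 * mu)."""
    return particle_density * radius**2 / (15.0 * dynamic_viscosity)


def translational_relaxation_time(particle_density, radius, dynamic_viscosity):
    """m / (6*pi*mu*a): 2 * rho_p * a^2 / (9 * mu), added mass neglected."""
    return 2.0 * particle_density * radius**2 / (9.0 * dynamic_viscosity)


def stokes_number(relaxation_time, fluid_time_scale):
    """Ratio of a particle relaxation time to a fluid time scale."""
    return relaxation_time / fluid_time_scale


if __name__ == "__main__":
    # Illustrative values: 50-micron-radius glass bead in water.
    rho_p, a, mu = 2500.0, 50.0e-6, 1.0e-3
    tau_rot = rotational_relaxation_time(rho_p, a, mu)
    tau_tr = translational_relaxation_time(rho_p, a, mu)
    print(f"tau_rot = {tau_rot:.3e} s, tau_tr = {tau_tr:.3e} s")
    print(f"tau_rot / tau_tr = {tau_rot / tau_tr:.2f}")
```

Under these assumptions the ratio of the two relaxation times is the constant 3/10, independent of particle size and density, which is consistent with both Stokes numbers being of the same order when the fluid time scales are similar.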

(1) Indeed, a fixed percentage of energy conversion from translation to rotation leads to higher angular velocities for smaller particles. Specifically, the rotational kinetic energy is and the translational kinetic energy is . Thus, after a collision we have , for light and/or small particles. Furthermore, this gives ; and the ratio can become quite large.

2.2.2 Finite radius effects