Shinsm2021 (talk | contribs) (Tag: Visual edit) |

(→References) |

||

| (150 intermediate revisions by 3 users not shown) | |||

| Line 4: | Line 4: | ||

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | <div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | ||

''' Integration of Game theory and Response Surface Method for Robust Parameter Design'''</div> | ''' Integration of Game theory and Response Surface Method for Robust Parameter Design'''</div> | ||

| − | -->== Abstract == | + | --> |

| − | + | == Abstract == | |

| + | Robust parameter design (RPD) is to determine the optimal controllable factors that minimize the variation of quality performance caused by noise factors. The dual response surface approach is one of the most commonly applied approaches in RPD that attempts to simultaneously minimize the process bias (i.e., the deviation of the process mean from the target) as well as process variability (i.e., variance or standard deviation). In order to address this tradeoff issue between the process bias and variability, a number of RPD methods are reported in literature by assigning relative weights or priorities to both the process bias and variability. However, the relative weights or priorities assigned are often subjectively determined by a decision maker (DM) who in some situations may not have enough prior knowledge to determine the relative importance of both the process bias and variability. In order to address this problem, this paper proposes an alternative approach by integrating the bargaining game theory into an RPD model to determine the optimal factor settings. Both the process bias and variability are considered as two rational players that negotiate how the input variable values should be assigned. Then Nash bargaining game solution technique is applied to determine the optimal, fair, and unique solutions (i.e., a balanced agreement point) for this game. This technique may provide a valuable recommendation for the DM to consider before making the final decision. This proposed method may not require any preference information from the DM by considering the interaction between the process bias and variability. To verify the efficiency of the obtained solutions, a lexicographic weighted Tchebycheff method which is often used in bi-objective optimization problems is utilized. Finally, in two numerical examples, the proposed method provides non-dominated tradeoff solutions for particular convex Pareto frontier cases. 
Furthermore, sensitivity analyses are also conducted for verification purposes associated with the disagreement and agreement points. | ||

| − | '''Keywords''': | + | '''Keywords''': Robust parameter design, lexicographic weighted Tchebycheff, bargaining game, response surface methodology, dual response model |

| − | + | ==1. Introduction== | |

| − | + | Due to fierce competition among manufacturing companies and an increase in customer quality requirements, robust parameter design (RPD), an essential method for quality management, is becoming ever more important. RPD was developed to decrease the degree of unexpected deviation from the requirements that are proposed by customers or a DM and thereby helps to improve the quality and reliability of products or manufacturing processes. The central idea of RPD is to build quality into the design process by identifying an optimal set of control factors that make the system impervious to variation [1]. The objectives of RPD are set out to ensure that the process mean is at the desired level and process variability is minimized. However, in reality, a simultaneous realization of those two objectives sometimes is not possible. As Myers et al. [2] stated there are circumstances where the process variability is robust against the effects of noise factors but the mean value is still far away from the target. In other words, a set of parameter values that satisfies these two conflicting objectives may not exist. Hence, the tradeoffs that exist between the process mean and variability are undoubtedly crucial in determining a set of controllable parameters that optimize quality performance. | |

| − | + | The tradeoff issue between the process bias and variability can be associated with assigning different weights or priority orders. Weight-based methods assign different weights to the process bias and variability, respectively, to establish their relative importance and transform the bi-objective problem into a single objective problem. The two most commonly applied weight-based methods are the mean square error model [3] and the weighted sum model [4,5]. Alternatively, priority-based methods sequentially assign priorities to the objectives (i.e., minimization of the process bias or variability). For instance, if the minimization of the process bias is prioritized, then the process variability is optimized with a constraint of zero-process bias [6]. Other priority-based approaches are discussed by Myers and Carter [7], Copeland and Nelson [8], Lee et al. [9], and Shin and Cho [10]. In both weight-based and priority-based methods, the relative importance can be assigned by the decision maker’s (DM) preference, which is obviously subjective. Additionally, there are situations in which the DM could be unsure about the relative importance of the process parameters in bi-objective optimization problems. | |

| − | + | Therefore, this paper aims to solve this tradeoff problem from a game theory point of view by integrating bargaining game theory into the RPD procedure. First, the process bias and variability are considered as two rational players in the bargaining game. Furthermore, the relationship functions for the process bias and variability are separately estimated by using the response surface methodology (RSM). In addition, those estimated functions are regarded as utility functions that represent players’ preferences and objectives in this bargaining game. Second, a disagreement point, signifying a pair of values that the players expect to receive when negotiation among players breaks down, can be defined by using the minimax-value theory which is often used as a decision rule in game theory. Third, Nash bargaining solution techniques are then incorporated into the RPD model to obtain the optimal solutions. Then, to verify the efficiency of the obtained solutions, a lexicographic weighted Tchebycheff approach is used to generate the associated Pareto frontier so that it can be visually observed if the obtained solutions are on the Pareto frontier. Two numerical examples are conducted to show that the proposed model can efficiently locate well-balanced solutions. Finally, a series of sensitivity analyses are also conducted in order to demonstrate the effects of the disagreement point value on the final agreed solutions. | |

| − | This research is laid out as follows: Section 2 discusses | + | This research paper is laid out as follows: Section 2 discusses existing literature for RPD and game theory applications. In Section 3, the dual response optimization problem, the lexicographic weighted Tchebycheff method, and the Nash bargaining solution are explained. Next, in Section 4, the proposed model is presented. Then in Section 5, two numerical examples are addressed to show the efficiency of the proposed method, and sensitivity studies are performed to reveal the influence of disagreement point values on the solutions. In Section 6, a conclusion and further research directions are discussed. |

| − | == <span id="_Hlk58849949"></span>2. | + | <span id="OLE_LINK4"></span><span id="OLE_LINK5"></span><span id="OLE_LINK6"></span><span id="OLE_LINK7"></span><span id="cite-1"></span>[[Draft Shin 691882792|<span id="cite-2"></span>]]<span id="OLE_LINK2"></span><span id="OLE_LINK22"></span> |

| + | |||

| + | <span id="_Hlk58849949"></span> | ||

| + | ==2. Literature review == | ||

===2.1 Robust parameter design === | ===2.1 Robust parameter design === | ||

| − | Taguchi | + | Taguchi introduced both experimental design concepts and parameter tradeoff issues into the quality design process. In addition, Taguchi developed an orthogonal-array-based experimental design and used the signal-to-noise (SN) ratio to measure the effects of factors on desired output responses. As discussed by Leon et al. [11], in some situations the SN ratio is not independent of the adjustment parameters, so using the SN ratio as a performance measure may often lead to design parameter settings that are far from optimal. Box [12] also argued that statistical analyses based on experimental data should be introduced, rather than relying only on the maximization of the SN ratio. The controversy about the Taguchi method is further discussed and addressed by Nair et al. [13] and Tsui [14]. |

| − | + | Based on Taguchi’s philosophy, further statistically based methods for RPD have been developed. Vining and Myers [6] introduced a dual response method, which takes zero-process bias as a constraint and minimizes the variability. Copeland and Nelson [15] proposed an alternative method for the dual response problem by introducing a predetermined upper limit on the deviation from the target. Similar approaches related to the upper limit concept are further discussed by Shin and Cho [10] and Lee et al. [9]. For the estimation phase, Shoemaker et al. [16] and Khattree [17] suggested using response surface model approaches. However, when the homoscedasticity assumption for regression is violated, other methods, such as the generalized linear model, can be applied [18]. Additionally, in cases where there is incomplete data, Lee and Park [19] suggested an expectation-maximization (EM) algorithm to provide an estimation of the process mean and variance, while Cho and Park [20] suggested a weighted least squares (WLS) method. However, Lin and Tu [3] pointed out that the dual response approach had some deficiencies and proposed an alternative method called the mean-squared-error (MSE) model. Jayaram and Ibrahim [21] modified the MSE model by incorporating capability indexes and considered the minimization of total deviation of capability indexes to achieve a multiple response robust design. More flexible alternative methods that could obtain Pareto optimal solutions based on a weighted sum model were introduced by many researchers [4,5,22]. In fact, this weighted sum model is more flexible than conventional dual response models, but it cannot be applied when a Pareto frontier is nonconvex [23]. In order to overcome this problem, Shin and Cho [23] proposed an alternative method called lexicographic weighted Tchebycheff by using an <math display="inline">L_{\infty}</math> norm. | |

| − | More recently, RPD has become more widely used not only in manufacturing but also in | + | More recently, RPD has become more widely used not only in manufacturing but also in other science and engineering areas including pharmaceutical drug development. New approaches such as simulation, multiple optimization techniques, and neural networks (NN) have been integrated into RPD. For example, Le et al. [24] proposed a new RPD model by introducing a NN approach to estimate dual response functions. Additionally, Picheral et al. [25] estimated the process bias and variance function by using the propagation of variance method. Two new robust optimization methods, the gradient-assisted and quasi-concave gradient-assisted robust optimization methods, were presented by Mortazavi et al. [26]. Bashiri et al. [27] proposed a robust posterior preference method that introduced a modified robust estimation method to reduce the effects of outliers on functions estimation and used non-robustness distance to compare non-dominated solutions. However, the responses are assumed to be uncorrelated. To address the correlation among multiple responses and the variation of noise factors over time, Yang et al. [28] extended offline RPD to online RPD by applying Bayesian seemingly unrelated regression and time series models so that the set of optimal controllable factor values can be adjusted in real-time. |

===2.2 Game Theory === | ===2.2 Game Theory === | ||

| − | + | The field of game theory presents mathematical models of strategic interactions among rational agents. These models can become analytical tools to find the optimal choices for interactional and decision-making problems. Game theory is often applied in situations where the "roles and actions of multiple agents affect each other" [29]. Thus, game theory serves as an analysis model that aims at helping agents to make the optimal decisions, where agents are rational and those decisions are interdependent. Because of this interdependence, each agent has to consider other agents’ possible decisions when formulating a strategy. Based on these characteristics, game theory is widely applied in multiple disciplines, such as computer science [30], network security and privacy [31], cloud computing [32], cost allocation [33], and construction [34]. Because game theory has a degree of conceptual overlap with optimization and decision-making, these three concepts (i.e., game theory, optimization, and decision-making) can often be combined. According to Sohrabi and Azgom [29], there are three kinds of basic combinations associated with those three concepts: game theory and optimization; game theory and decision-making; and game theory, optimization, and decision-making. | |

| − | + | The first type of these combinations (i.e., game theory and optimization) further has two possible situations. In the first situation, optimization techniques are used to solve a game problem and prove the existence of equilibrium [35,36]. In the second situation, game theory concepts are integrated to solve an optimization problem. For example, Leboucher et al. [37] used evolutionary game theory to improve the performance of a particle swarm optimization (PSO) approach. Additionally, Annamdas and Rao [38] solved a multi-objective optimization problem by using a combination of game theory and a PSO approach. The second type of combination (i.e., game theory and decision-making) integrates game theory to solve a decision-making problem, as discussed by Zamarripa et al. [39], who applied game theory to assist with decision-making problems in supply chain bottlenecks. More recently, Dai et al. [40] attempted to integrate the Stackelberg leadership game into an RPD model to solve a dual response tradeoff problem. The third type of combination (i.e., game theory, optimization, and decision-making) integrates game theory and optimization to solve a decision-making problem. For example, a combination of linear programming and game theory was introduced to solve a decision-making problem [41]. Doudou et al. [42] used a convex optimization method and game theory to settle a wireless sensor network decision-making problem.<span id="_Ref76149866"></span><span id="OLE_LINK20"></span><span id="OLE_LINK21"></span><span id="cite-20"></span><span id="cite-21"></span><span id="cite-22"></span><span id="cite-23"></span><span id="cite-24"></span><span id="cite-25"></span><span id="cite-_Ref76149866"></span><span id="cite-26"></span><span id="cite-27"></span><span id="cite-28"></span><span id="cite-29"></span><span id="cite-30"></span><span id="cite-31"></span><span id="cite-32"></span><span id="cite-33"></span><span id='OLE_LINK16'></span><span id='OLE_LINK17'></span><span id='cite-34'></span> | |

| − | ===2.3 Bargaining | + | ===2.3 Bargaining game=== |

| − | A | + | A bargaining game can be applied in a situation where a set of agents have an incentive to cooperate but have conflicting interests over how to distribute the payoffs generated from the cooperation [43]. Hence, a bargaining game essentially has two features: cooperation and conflict. Because the bargaining game considers cooperation and conflicts of interest as a joint problem, it is more complicated than a simple cooperative game that ignores individual interests and maximizes the group benefit [44]. Three typical bargaining game examples are a price negotiation problem between product sellers and buyers, a union and firm negotiation problem over wages and employment levels, and a simple cake distribution problem. |

| − | + | Significant discussions of the bargaining game were presented by Nash [45,46]. Nash [45] presented a classical bargaining game model aimed at solving an economic bargaining problem and used a numerical example to prove the existence of multiple solutions. In addition, Nash [46] extended his research to a more general form and demonstrated that there are two possible approaches to solve a two-person cooperative bargaining game. The first approach, called the negotiation model, is used to obtain the solution through an analysis of the negotiation process. The second approach, called the axiomatic method, is applied to solve a bargaining problem by specifying axioms or properties that the solution should satisfy. For the axiomatic method, Nash identified four axioms that the agreed solution, called the Nash bargaining solution, should satisfy. Based on Nash’s philosophy, many researchers attempted to modify Nash's model and proposed a number of different solutions based on different axioms. One famous modified model replaces one of Nash’s axioms in order to reach a fairer unique solution, which is called the Kalai-Smorodinsky solution [47]. Later, Rubinstein [48] addressed a bargaining problem by specifying a dynamic model which explains a bargaining procedure. <span id="cite-35"></span><span id="cite-36"></span><span id='OLE_LINK3'></span><span id='cite-37'></span><span id='cite-38'></span><span id='cite-39'></span><span id='cite-40'></span> | |

| − | ==3. | + | ==3. Models and methods == |

===3.1 Bi-objective robust design model=== | ===3.1 Bi-objective robust design model=== | ||

| − | + | A general bi-objective optimization problem involves simultaneous optimization of two conflicting objectives (e.g., <math>f_1({\boldsymbol{\text{x}}})</math> and <math>f_2({\boldsymbol{\text{x}}})</math>) that can be described in mathematical terms as <math>\min[f_1({\boldsymbol{\text{x}}}), f_2({\boldsymbol{\text{x}}})]</math>. The primary objective of RPD is to minimize the deviation of performance of the production process from the target value and the variability of the performance, where this performance deviation can be represented by process bias and the performance variability can be represented by standard deviation or variance. For example, Koksoy [49], Goethals and Cho [50], and Wu and Chyu [51] utilized estimated variance functions to represent process variability. On the other hand, Shin and Cho [10,52] and Tang and Xu [53] used estimated standard deviation functions to measure process variability. Steenackers and Guillaume [54] discussed the effect of different response surface expressions on the optimal solutions, and they concluded that both standard deviation and variance can capture the process variability well but can lead to different optimal solution sets. Since it can be infeasible to minimize the process bias and variability simultaneously, a simultaneous optimization of these two process parameters, which are separately estimated by applying RSM, is then transformed into a tradeoff problem between the process bias and variability. This tradeoff problem can be formally expressed as a bi-objective optimization problem [23] as:<span id="cite-_Ref76152586"></span> | |

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 53: | Line 57: | ||

|- | |- | ||

|<math>min</math> | |<math>min</math> | ||

| − | | <math | + | | <math>\left[ \left\{ \hat{\mu }\left( \boldsymbol{\text{x}}\right) -\tau \right\}^{2},\, \hat{\sigma }^{2} (\boldsymbol{\text{x}})\right]^{T}</math> |

|- | |- | ||

|<math>s.t.</math> | |<math>s.t.</math> | ||

| − | |<math display="inline"> \boldsymbol{x}\in X\,</math> | + | |<math display="inline"> {\boldsymbol{\text{x}}}\in X\,</math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (1) | | style="width: 5px;text-align: right;white-space: nowrap;" | (1) | ||

|} | |} | ||

| − | where <math display="inline">\boldsymbol{x}</math> | + | where <math display="inline">{\boldsymbol{\text{x}}}</math>, <math display="inline">X</math>, <math display="inline">\tau</math>, <math display="inline">{\left\{ \hat{\mu }\left( {\boldsymbol{\text{x}}}\right) -\tau \right\} }^{2}</math>, and <math display="inline">{\hat{\sigma }}^{2}({\boldsymbol{\text{x}}})</math> represent a vector of design factors, the set of feasible solutions under specified constraints, the target process mean value, and the estimated functions for process bias and variability, respectively. |
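The two objectives in Eq.(1) can be evaluated numerically once response surfaces for the mean and variance have been fitted. The following is a minimal sketch, assuming second-order (quadratic) surfaces in two design factors; the coefficients, the target value <code>TAU</code>, and the helper names are hypothetical and are not taken from the paper.

```python
import numpy as np

def quadratic_surface(coef):
    """Return f(x) = b0 + b1*x1 + b2*x2 + b11*x1^2 + b22*x2^2 + b12*x1*x2."""
    b0, b1, b2, b11, b22, b12 = coef

    def f(x):
        x1, x2 = x
        return b0 + b1 * x1 + b2 * x2 + b11 * x1**2 + b22 * x2**2 + b12 * x1 * x2

    return f

# Hypothetical fitted coefficients (illustrative only, not from the paper).
mu_hat = quadratic_surface([50.0, 4.0, -2.0, 1.0, 0.5, 0.0])   # estimated process mean
var_hat = quadratic_surface([3.0, 0.5, 0.5, 1.0, 1.0, 0.0])    # estimated process variance
TAU = 50.0  # assumed target value for the process mean

def objectives(x, tau=TAU):
    """Objective vector of Eq.(1): [squared process bias, estimated variance]."""
    bias_sq = (mu_hat(x) - tau) ** 2
    return np.array([bias_sq, var_hat(x)])
```

Evaluating <code>objectives</code> at several candidate settings shows the tradeoff directly: a setting that drives the squared bias to zero does not necessarily minimize the variance.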

| − | ===3.2 Lexicographic weighed Tchebycheff method | + | ===3.2 Lexicographic weighted Tchebycheff method=== |

| − | + | A bi-objective robust design problem is generally addressed by introducing a set of parameters, determined by a DM, which represents the relative importance of those two objectives. With the introduced parameters, the bi-objective functions can be transformed into a single integrated function, so the bi-objective optimization problem can be solved by simply optimizing the integrated function. One way to construct this integrated function is by using the weighted sum of the distance between the optimal solution and the estimated function. Different ways of measuring distance can lead to different solutions, and one of the most common methods is the <math display="inline">{L}_{p}</math> metric, where <math>p=1,2,\, \mbox{or}\, \infty</math>. When <math>p=1</math>, the metric is called the Manhattan metric, whereas when <math display="inline">p=\infty</math>, it is called the Tchebycheff metric [47]. The utopia point represents the initial point for applying the <math>L_{\infty}</math> metric in the weighted Tchebycheff method and can be obtained by minimizing each objective function separately. The weak Pareto optimal solutions can be obtained by introducing different weights: <span id='cite-41'></span> | |

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 72: | Line 76: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | + | <math>\mathrm{min}\,\left(\displaystyle\sum _{i=1}^{2}{w}_{i}\left| {f}_{i}\left( \boldsymbol{\text{x}}\right) -{u}_{i}^{\ast }\right| ^{p}\right)^{\frac{1}{p}}</math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (2) | | style="width: 5px;text-align: right;white-space: nowrap;" | (2) | ||

|} | |} | ||

| − | + | where <math display="inline">{u}_{i}^{\ast }</math> and <math display="inline">{w}_{i}</math> denote the utopia point values and weights associated with objective functions, respectively. When <math>p=\infty</math>, the above function (i.e., Eq.(2)) will only consider the largest deviation. Although the weighted Tchebycheff method is an efficient approach, its main drawback is that only weak non-dominated solutions can be guaranteed [56], which is obviously not optimal for the DM. So, Steuer and Choo [57] introduced an interactive weighted Tchebycheff method, which can generate every non-dominated point provided that weights are selected appropriately. Shin and Cho [23] introduced the lexicographic weighted Tchebycheff method to the RPD area. This method is proved to be efficient and capable of generating all Pareto optimal solutions when the process bias and variability are treated as a bi-objective problem. The mathematical model is shown below [23]:<span id='cite-42'></span>[[Draft Shin 691882792|<span id="cite-43"></span>]]<span id='cite-_Ref76152586'></span><span id='cite-_Ref76152586'></span> | |

| − | where <math display="inline">{u}_{i}^{\ast }</math> | + | |

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 86: | Line 89: | ||

|- | |- | ||

|<math>min</math> | |<math>min</math> | ||

| − | | <math | + | | <math>\left[\,\xi ,\, \left[ {\left\{ \hat{\mu }\left( {\boldsymbol{\text{x}}}\right) -t\right\} }^{2}-{u}_{1}^{\ast }\right] +\left[{\hat{\sigma }}^{2}\left( {\boldsymbol{\text{x}}}\right) -{u}_{2}^{\ast }\right]\right]</math> |

|- | |- | ||

|<math>s.t.</math> | |<math>s.t.</math> | ||

| − | |<math> \, \lambda [{\left\{ \hat{\mu }\left( \boldsymbol{x}\right) -t\right\} }^{2}-{u}_{1}^{\ast }]\leq \xi</math> | + | |<math> \, \lambda \left[{\left\{ \hat{\mu }\left( {\boldsymbol{\text{x}}}\right) -t\right\} }^{2}-{u}_{1}^{\ast }\right]\leq \xi</math> |

|- | |- | ||

| | | | ||

| − | |<math>\left( 1-\lambda \right) [\hat{\sigma }\left( \boldsymbol{x}\right) -{u}_{2}^{\ast }]\leq \xi</math> | + | |<math>\left( 1-\lambda \right) \left[\hat{\sigma }\left({\boldsymbol{\text{x}}}\right) -{u}_{2}^{\ast }\right]\leq \xi</math> |

|- | |- | ||

| | | | ||

| − | |<math>\boldsymbol{x}\in X</math> | + | |<math>{\boldsymbol{\text{x}}}\in X</math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (3) | | style="width: 5px;text-align: right;white-space: nowrap;" | (3) | ||

|} | |} | ||

| − | where <math>\xi</math> | + | where <math>\xi</math> and <math>\lambda</math> represent a non-negative variable and a weight term associated with process bias and variability, respectively. The lexicographic weighted Tchebycheff method is utilized as a verification method in this paper. |
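The weighted metric of Eq.(2) and the lexicographic selection rule of Eq.(3) can be illustrated on a finite set of candidate objective vectors. This is a rough sketch under stated assumptions rather than the solver used in the paper: the feasible region is replaced by a hypothetical list of precomputed objective pairs, the function names are illustrative, and for <math>p=\infty</math> the conventional weighted Tchebycheff form (the largest weighted deviation) is used.

```python
import numpy as np

def weighted_lp(f, u, w, p):
    """Weighted L_p distance between objective values f and utopia values u.

    For p = inf the conventional weighted Tchebycheff form max_i w_i*|f_i - u_i|
    is returned, i.e. only the largest weighted deviation counts.
    """
    dev = np.abs(np.asarray(f, dtype=float) - np.asarray(u, dtype=float))
    w = np.asarray(w, dtype=float)
    if np.isinf(p):
        return float(np.max(w * dev))
    return float(np.sum(w * dev**p) ** (1.0 / p))

def lex_tchebycheff(points, utopia, lam):
    """Index of the lexicographic minimizer over a finite candidate set.

    First level: xi = max(lam*dev_1, (1-lam)*dev_2), the smallest xi that
    satisfies both constraints of Eq.(3). Second level: dev_1 + dev_2,
    which breaks ties in favour of non-dominated candidates.
    """
    dev = np.asarray(points, dtype=float) - np.asarray(utopia, dtype=float)
    xi = np.maximum(lam * dev[:, 0], (1.0 - lam) * dev[:, 1])
    total = dev.sum(axis=1)
    order = np.lexsort((total, xi))  # last key (xi) is the primary sort key
    return int(order[0])
```

With <code>lam = 1</code> the candidates <code>[2, 1]</code> and <code>[2, 0]</code> have the same first-level value, and only the second-level sum rejects the weakly non-dominated <code>[2, 1]</code>; this is the situation the lexicographic step is meant to handle.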

===3.3 Nash bargaining solution=== | ===3.3 Nash bargaining solution=== | ||

| − | A two-player bargaining game can be represented by a pair <math display="inline">\, (U,d)</math>, where <math display="inline">U\subset {R}^{2}</math>and <math display="inline">d\subset {R}^{2}</math>. | + | A two-player bargaining game can be represented by a pair <math display="inline">\, (U,d)</math>, where <math display="inline">U\subset {R}^{2}</math> and <math display="inline">d\in {R}^{2}</math>. <math display="inline">U=({u}_{1}({\boldsymbol{\text{x}}}),{u}_{2}({\boldsymbol{\text{x}}}))</math> denotes a pair of obtainable payoffs of the two players, where <math display="inline">{u}_{1}({\boldsymbol{\text{x}}})</math> and <math display="inline">{u}_{2}\left({\boldsymbol{\text{x}}}\right)</math> represent the utility functions for players 1 and 2, respectively, and <math display="inline">{\boldsymbol{\text{x}}}=({x}_{1},\, {x}_{2})</math> denotes a vector of actions taken by the players. <math display="inline">d</math> (<math display="inline">d=({d}_{1},{d}_{2})</math>), defined as a disagreement point, represents the payoffs that each player will gain from this game when the two players fail to reach a satisfactory agreement. In other words, the disagreement point values are the payoffs that each player can expect to receive if a negotiation breaks down. Assuming <math display="inline">{u}_{i}({\boldsymbol{\text{x}}})>{d}_{i}</math> where <math display="inline">{u}_{i}({\boldsymbol{\text{x}}})\in U</math> for <math display="inline">\, i\, =1,2</math>, the set <math display="inline">U\cap \left\{ \left( {u}_{1}({\boldsymbol{\text{x}}}),{u}_{2}({\boldsymbol{\text{x}}})\right) \in \, {R}^{2}:\, {u}_{1}({\boldsymbol{\text{x}}})\geq {d}_{1};\, {u}_{2}({\boldsymbol{\text{x}}})\geq {d}_{2}\right\}</math> is non-empty. 
As suggested by the expression of the Nash bargaining game <math display="inline">(U, d)</math>, the Nash bargaining solution is affected by both the reachable utility range (<math display="inline">U</math>) and the disagreement point value (<math display="inline">d</math>). Since <math display="inline">U</math> cannot be changed, rational players will choose a disagreement point value to optimize their bargaining position. According to Myerson [59], there are three possible ways to determine the value of a disagreement point. One standard way is to calculate the minimax value for each player: |

| − | {| class="formulaSCP" style="width: 100%; text-align: | + | |

| − | + | {| class="formulaSCP" style="width: 100%; text-align: left;" | |

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | | + | | |

| − | | <math>{d}_{2}=\mathrm{min}\,\mathrm{max}\,{u}_{2}({x}_{1},{x}_{2})</math> | + | {| style="text-align: center; margin:auto;width: 100%;" |

| − | | | + | |- |

| + | style="text-align: center;" | <math>{d}_{1}=\min_{{x}_{2}}\,\max_{{x}_{1}}\,{u}_{1}({x}_{1},{x}_{2})\,\quad \mbox{and} \,\quad{d}_{2}=\min_{{x}_{1}}\,\max_{{x}_{2}}\,{u}_{2}({x}_{1},{x}_{2})</math> | ||

| + | |} | ||

| + | | style="width: 5px;text-align: right;white-space: nowrap;" |(4) | ||

|} | |} | ||

| + | To be more specific, Eq.(4) states that, given each possible action for player 2, player 1 has a corresponding best response strategy. Then, among all those best response strategies, player 1 chooses the one that returns the minimum payoff which is defined as a disagreement point value. Following this logic, player 1 can guarantee to receive an acceptable payoff. Another possible way of determining the disagreement point value is to derive the disagreement point value as an effective and rational threat to ensure the establishment of an agreement. The last possibility is to set the disagreement point as the focal equilibrium of the game. | ||
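The minimax rule described above can be sketched for a game with finitely many actions. The payoff matrices below are hypothetical, and the function name <code>minimax_value</code> is illustrative; in the RPD setting the actions are continuous factor levels, so this discretized version is only meant to show the order of the two operations.

```python
import numpy as np

def minimax_value(payoff, own_axis):
    """Minimax value of one player for a finite two-player game.

    payoff[i, j] is this player's utility when player 1 plays action i and
    player 2 plays action j. own_axis is the axis indexed by the player's
    own action (0 for player 1, 1 for player 2).
    """
    # Best-response payoff against each possible action of the opponent.
    best_response = payoff.max(axis=own_axis)
    # The opponent's action that makes this best-response payoff smallest.
    return float(best_response.min())

# Hypothetical utilities: rows are actions of player 1, columns of player 2.
u1 = np.array([[3.0, 1.0],
               [0.0, 2.0]])
u2 = np.array([[2.0, 4.0],
               [1.0, 0.0]])

d1 = minimax_value(u1, own_axis=0)  # min over x2 of max over x1
d2 = minimax_value(u2, own_axis=1)  # min over x1 of max over x2
```

For these matrices player 1 can guarantee 2 and player 2 can guarantee 1, so the disagreement point of this toy game is <code>(2, 1)</code>.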

| − | + | Nash proposed four possible axioms that should be possessed by the bargaining game solution [<span id='cite-44'></span><span id='cite-45'></span>58,59]: | |

| − | + | ||

| − | Nash proposed four possible axioms that should be possessed by the solution | + | |

:* Pareto optimality | :* Pareto optimality | ||

| Line 128: | Line 131: | ||

:* Independence of irrelevant alternatives (IIA) | :* Independence of irrelevant alternatives (IIA) | ||

| − | The first axiom states that the solution should be Pareto optimal, which means it should not be | + | The first axiom states that the solution should be Pareto optimal, which means it should not be dominated by any other point. If the notation <math display="inline">f\left( U,d\right) =\left( {f}_{1}\left( U,d\right) ,\, {f}_{2}\left( U,d\right) \right) \,</math> stands for the Nash bargaining solution to the bargaining problem <math display="inline">(U,d)</math>, then the solution <math display="inline">{u}^{\ast }=</math><math>\left( {u}_{1}\left( {{\boldsymbol{\text{x}}}}^{\mathit{\boldsymbol{\ast }}}\right) ,{u}_{2}\left( {{\boldsymbol{\text{x}}}}^{\boldsymbol{\ast }}\right) \right)</math> can be Pareto efficient if and only if there exists no other point <math display="inline">{u}^{'}=</math><math>\left( {u}_{1}\left( {{\boldsymbol{\text{x}}}}^{\mathit{\boldsymbol{'}}}\right) ,\, {u}_{2}\left( {{\boldsymbol{\text{x}}}}^{\boldsymbol{'}}\right) \right) \in U</math> such that <math display="inline">{u}_{1}\left( {{\boldsymbol{\text{x}}}}^{\boldsymbol{'}}\right) >{u}_{1}\left( {{\boldsymbol{\text{x}}}}^{\boldsymbol{\ast }}\right) ;{u}_{2}\left( {{\boldsymbol{\text{x}}}}^{\mathit{\boldsymbol{'}}}\right) \geq {u}_{2}\left( {{\boldsymbol{\text{x}}}}^{\boldsymbol{\ast }}\right)</math> or <math display="inline">{\, u}_{1}\left( {{\boldsymbol{\text{x}}}}^{\boldsymbol{'}}\right) \geq {u}_{1}\left( {{\boldsymbol{\text{x}}}}^{\boldsymbol{\ast }}\right) ;</math> <math display="inline">{u}_{2}\left( {{\boldsymbol{\text{x}}}}^{\boldsymbol{'}}\right) >{u}_{2}\left( {{\boldsymbol{\text{x}}}}^{\boldsymbol{\ast }}\right)</math>. This implies that there is no alternative feasible solution that is better for one player without worsening the payoff for other players. |
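The dominance condition in the first axiom can be checked directly on a finite set of payoff pairs. The sketch below is a minimal illustration with hypothetical payoffs, where larger utilities are better, matching the inequalities stated above.

```python
def dominates(a, b):
    """True if payoff pair a Pareto-dominates b (larger utilities are better).

    a dominates b when a is at least as good for every player and strictly
    better for at least one player.
    """
    at_least_as_good = all(x >= y for x, y in zip(a, b))
    strictly_better = any(x > y for x, y in zip(a, b))
    return at_least_as_good and strictly_better

def pareto_efficient(points):
    """Keep only the payoff pairs that no other pair in the list dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical payoff pairs (u1, u2).
candidates = [(1, 3), (2, 2), (3, 1), (1, 1), (2, 1)]
efficient = pareto_efficient(candidates)
```

Here <code>(1, 1)</code> and <code>(2, 1)</code> are removed because <code>(2, 2)</code> is at least as good for both players and strictly better for one of them.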

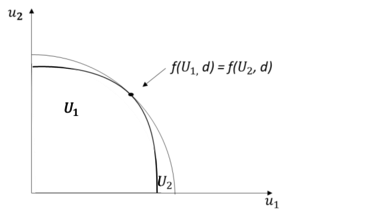

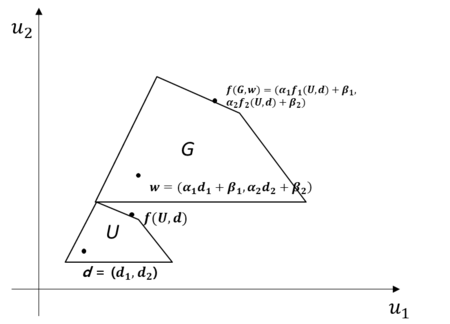

| − | The second axiom, IEUR also referred to as scale covariance, states that the solution should be independent of positive affine transformations of utilities. | + | The second axiom, IEUR, also referred to as scale covariance, states that the solution should be independent of positive affine transformations of utilities. In other words, if a new bargaining game <math display="inline">(G,w)</math> exists, where <math>G=\left\{ {\alpha }_{1}{u}_{1}({\boldsymbol{\text{x}}})+ {\beta }_{1},{\alpha }_{2}{u}_{2}({\boldsymbol{\text{x}}})+{\beta }_{2}\right\}</math> and <math>w=\left({\alpha }_{1}{d}_{1}+{\beta }_{1},{\alpha }_{2}{d}_{2}+{\beta }_{2}\right)\,</math> and where <math>\left({u}_{1}({\boldsymbol{\text{x}}}),{u}_{2}({\boldsymbol{\text{x}}})\right)\in U</math> and <math> {\alpha }_{1}>0,{\alpha }_{2}>0</math>, then the solution for this new bargaining game (i.e., <math display="inline">f(G,w)</math>) can be obtained by applying the same transformations, which is demonstrated by Eq.(5) and [[#img-1|Figure 1]]: |

| − | + | ||

| − | + | {| class="formulaSCP" style="width: 100%; text-align: left;" | |

| − | + | ||

| − | | | + | |

|- | |- | ||

| − | | | + | | |

| − | | <math>w=({\alpha }_{1}{ | + | {| style="text-align: center; margin:auto;width: 100%;" |

| − | | | + | |- |

| + | | style="text-align: center;" |<math>\, \, f(G,\, w)=({\alpha }_{1}{f}_{1}(U,d)+{\beta }_{1},\, {\alpha }_{2}{f}_{2}(U,\, d)+{\beta }_{2})\, | ||

| + | </math> | ||

| + | |} | ||

| + | | style="width: 5px;text-align: right;white-space: nowrap;" |(5) | ||

|} | |} | ||

| − | + | <div id='img-1'></div> | |

| + | {| style="text-align: center; border: 1px solid #BBB; margin: 1em auto; width: auto;max-width: auto;" | ||

| + | |- | ||

| + | |style="padding:10px;"| [[File:Dail2.png|450px]] | ||

| + | |- style="text-align: center; font-size: 75%;" | ||

| + | | colspan="1" style="padding:10px;"| '''Figure 1'''. Explanation of IEUR axiom | ||

| + | |} | ||

| − | + | ||

| − | + | The third axiom, symmetry, states that the solution should be symmetric when the bargaining positions of the two players are completely symmetric. In other words, if there is no information that distinguishes one player from the other, then the solution should also be indistinguishable between the players [46]. |

| − | |<math | + | |

| − | </math> | + | As shown in [[#img-2|Figure 2]], the last axiom states that if <math display="inline">{U}_{1}\subset {U}_{2}</math> and <math display="inline">f({U}_{2},d)</math> is located within the feasible area <math display="inline">{U}_{1}</math>, then <math display="inline">f\left( {U}_{1},d\right) =f({U}_{2},d)</math> [59]. |

| − | | | + | |

| + | <div id='img-2'></div> | ||

| + | {| style="text-align: center; border: 1px solid #BBB; margin: 1em auto; width: auto;max-width: auto;" | ||

| + | |- | ||

| + | |style="padding:10px;"| [[File:Draft_Shin_691882792-image2.png|centre|374x374px|]] | ||

| + | |- style="text-align: center; font-size: 75%;" | ||

| + | | colspan="1" style="padding:10px;"| '''Figure 2'''. Explanation of IIA axiom | ||

|} | |} | ||

| − | |||

| − | |||

| − | |||

| − | + | The solution function introduced by Nash [46], which satisfies all four axioms identified above, can be defined as follows: |

| − | + | ||

| − | + | {| class="formulaSCP" style="width: 100%; text-align: center;" | |

| + | |- | ||

| + | | | ||

| + | {| style="text-align: center; margin:auto;" | ||

| + | |- | ||

| + | | <math display="inline">f\left( U,\, d\right) =Max\prod _{i=1,2}^{}({u}_{i}({\boldsymbol{\text{x}}})-{d}_{i})=</math><math>Max\, ({u}_{1}({\boldsymbol{\text{x}}})-{d}_{1})({u}_{2}({\boldsymbol{\text{x}}})-{d}_{2})</math> | ||

| + | |} | ||

| + | | style="width: 5px;text-align: right;white-space: nowrap;" | (6) | ||

| + | |} | ||

| − | + | where <math display="inline">{u}_{i}({\boldsymbol{\text{x}}})>{d}_{i},\, i=1,2</math>. Intuitively, this function is trying to find solutions that maximize each player’s difference in payoffs between the cooperative agreement point and the disagreement point. In simpler terms, Nash selects an agreement point <math display="inline">({u}_{1}\left( {{\boldsymbol{\text{x}}}}^{\ast }\right) ,{u}_{2}({{\boldsymbol{\text{x}}}}^{\ast }))</math> that maximizes the product of utility gains from the disagreement point <math display="inline">\, ({d}_{1},{d}_{2})</math>. | |
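The product rule in Eq.(6) can be illustrated with a minimal sketch over a finite set of candidate payoff pairs. The function name `nash_bargaining_point`, the candidate frontier, and the disagreement points below are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
def nash_bargaining_point(payoffs, d):
    """Return the payoff pair that maximizes (u1 - d1) * (u2 - d2).

    Only pairs with u_i > d_i for both players are considered, as in Eq.(6).
    """
    feasible = [(u1, u2) for u1, u2 in payoffs if u1 > d[0] and u2 > d[1]]
    if not feasible:
        raise ValueError("no payoff pair strictly improves on the disagreement point")
    return max(feasible, key=lambda u: (u[0] - d[0]) * (u[1] - d[1]))


# Hypothetical candidate agreements on the linear frontier u1 + u2 = 10.
candidates = [(float(k), 10.0 - k) for k in range(11)]

# Symmetric disagreement point: the gains are split evenly.
symmetric = nash_bargaining_point(candidates, d=(0.0, 0.0))

# Raising player 1's disagreement payoff shifts the agreement in that player's favor.
shifted = nash_bargaining_point(candidates, d=(2.0, 0.0))
```

In this toy setting the symmetric case selects the midpoint of the frontier, which is consistent with the symmetry axiom described above.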

| − | + | == 4. The proposed model == | |

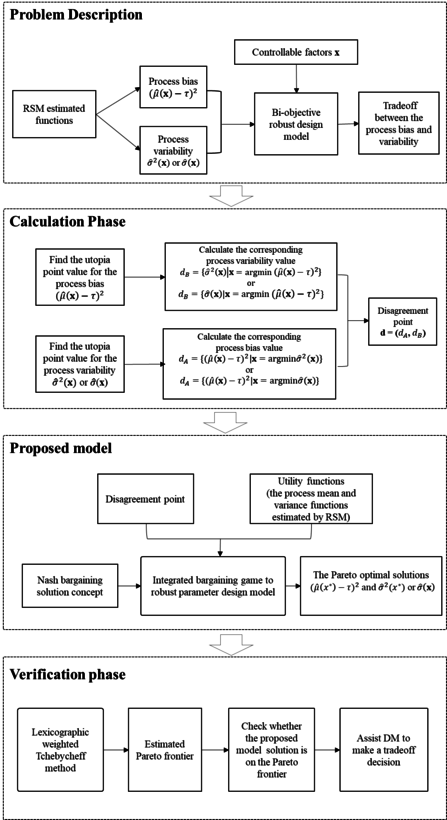

| − | + | The proposed method integrates bargaining game concepts into the tradeoff between the process bias and variability, so that the interaction between the two can be incorporated and a unique optimal solution can be obtained. The detailed procedure, which includes the problem description, the calculation of response functions and disagreement points, the bargaining game based RPD model, and verification, is illustrated in [[#img-3|Figure 3]]. As illustrated in [[#img-3|Figure 3]], the objective of the proposed method is to address the tradeoff between process bias and variability. In the calculation phase, a utopia point can be calculated based on separately estimated functions for the process bias and variability. However, this utopia point is in an infeasible region, which means that a simultaneous minimization of the process bias and variability is unachievable. The disagreement point is calculated by first optimizing only one of the objective functions (i.e., the estimated process variability or the process bias function) to obtain a solution set, and then inserting the obtained solution set into the other objective function to generate a corresponding value. In the proposed model, based on the obtained disagreement point, the Nash bargaining solution concept is applied to solve the bargaining game. In the verification phase, the lexicographic weighted Tchebycheff method is applied to generate the associated Pareto frontier, so that the obtained game solution can be compared with other efficient solutions. |

| − | + | ||

| − | <div | + | <div id='img-3'></div> |

| − | ''' | + | {| style="text-align: center; border: 1px solid #BBB; margin: 1em auto; width: auto;max-width: auto;" |

| + | |- | ||

| + | |style="padding:10px;"| [[File:New2.png|centre|820x820px|]] | ||

| + | |- style="text-align: center; font-size: 75%;" | ||

| + | | colspan="1" style="padding:10px;"| '''Figure 3'''. The proposed procedure integrating the bargaining game into RPD | ||

| + | |} | ||

| − | |||

| − | Nash | + | Integrating the Nash bargaining game model involves three steps. In the first step, the two players and their corresponding utility functions are defined. The process bias can be defined as player A, and the variability can be regarded as player B. The RSM-based estimated functions of both responses are regarded as the players’ utility functions in this bargaining game (i.e., <math display="inline">u_{A}({\boldsymbol{\text{x}}})</math> and <math display="inline"> u_{B}({\boldsymbol{\text{x}}})</math>), where <math>{\boldsymbol{\text{x}}}</math> stands for a vector of controllable factors. The goal of each player is then to choose a set of controllable factors that minimizes its own utility function. In the second step, a disagreement point is determined by applying minimax-value theory, as identified in Eq.(7). Based on the tradeoff between the process bias and variability, the modified disagreement point functions can be defined as follows: |

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 179: | Line 203: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | <math | + | | <math>{d}_{A}=\mathrm{max}\,\mathrm{min}\,{u}_{A}({\boldsymbol{\text{x}}})\,\quad \mbox{and}\,\quad {d}_{B}=\mathrm{max}\,\mathrm{min}\,{u}_{B}({\boldsymbol{\text{x}}})</math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (7) | | style="width: 5px;text-align: right;white-space: nowrap;" | (7) | ||

|} | |} | ||

| − | + | In this way, both player A (i.e., the process bias) and player B (i.e., the process variability) are guaranteed to receive the worst acceptable payoffs. In that case, the disagreement point, defined as the maximum minimum utility value, can be calculated by minimizing only one objective (process variability or bias). The computational functions for the disagreement point values can be formulated as: | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 198: | Line 215: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | <math | + | | <math>\left\{ {d}_{A}={u}_{A}({\boldsymbol{\text{x}}})|{\boldsymbol{\text{x}}}=\arg\min{u}_{B}({\boldsymbol{\text{x}}})\qquad \mbox{and}\qquad {\boldsymbol{\text{x}}}\in X\right\} </math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (8) | | style="width: 5px;text-align: right;white-space: nowrap;" | (8) | ||

|} | |} | ||

| − | |||

and | and | ||

| Line 211: | Line 227: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | <math | + | | <math>\left\{ {d}_{B}={u}_{B}({\boldsymbol{\text{x}}})|{\boldsymbol{\text{x}}}=\arg\min{u}_{A}({\boldsymbol{\text{x}}})\qquad \mbox{and}\qquad{\boldsymbol{\text{x}}}\in X\right\} </math> |

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (9) | | style="width: 5px;text-align: right;white-space: nowrap;" | (9) | ||

|} | |} | ||
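The disagreement point calculation in Eqs.(8) and (9) can be sketched as follows, assuming a one-dimensional feasible area discretized on a grid. The utility functions `u_A` and `u_B` are hypothetical stand-ins rather than the fitted response surfaces, and the helper name `disagreement_point` is introduced only for this illustration.

```python
def disagreement_point(u_A, u_B, X):
    """Compute (d_A, d_B) in the sense of Eqs.(8)-(9) over a finite candidate set X.

    d_A is player A's utility at the setting that minimizes u_B alone,
    and d_B is player B's utility at the setting that minimizes u_A alone.
    """
    x_min_B = min(X, key=u_B)  # arg min of u_B over X
    x_min_A = min(X, key=u_A)  # arg min of u_A over X
    return u_A(x_min_B), u_B(x_min_A)


# Hypothetical utilities (both to be minimized), not the paper's fitted models.
def u_A(x):
    return (x - 1.0) ** 2        # stand-in for the process bias


def u_B(x):
    return (x + 1.0) ** 2 + 0.5  # stand-in for the process variability


# Feasible area X = [-2, 2], discretized with step 0.01.
X = [i / 100 - 2 for i in range(401)]

d_A, d_B = disagreement_point(u_A, u_B, X)
```

A grid search is used here only to keep the sketch dependency-free; for the quadratic response surfaces of the paper a constrained nonlinear solver would be the more natural choice.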

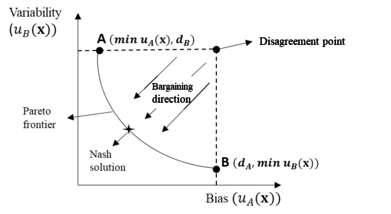

| + | Thus, the idea of the proposed method is to find the optimal solutions by continuously performing bargaining games from the specified disagreement point <math display="inline">({d}_{A} ,\, {d}_{B})</math> toward the Pareto frontier, as illustrated in [[#img-4|Figure 4]]. More specifically, as demonstrated in [[#img-4|Figure 4]], if the convex curve represents all Pareto optimal solutions, then each point on the curve can be regarded as a minimum utility value for one of the two process parameters (i.e., the process variability or bias). For example, at point A, when the process bias is minimized within the feasible area, the corresponding variability value is the minimum utility value for the process variability, since other utility values would be either dominated or infeasible. These solutions may provide useful insight for a DM when the relative importance between process bias and variability is difficult to identify. | ||

| − | < | + | <div id='img-4'></div> |

| + | {| style="text-align: center; border: 1px solid #BBB; margin: 1em auto; width: auto;max-width: auto;" | ||

| + | |- | ||

| + | |style="padding:10px;"| [[File:New.png|centre|391x391px|]] | ||

| + | |- style="text-align: center; font-size: 75%;" | ||

| + | | colspan="1" style="padding:10px;"| '''Figure 4'''. Solution concepts for the proposed bargaining game based RPD method<br> by integrating the tradeoff between process bias and variability | ||

| + | |} | ||

| + | |||

| + | |||

| + | In the final step, the Nash bargaining solution function <math display="inline">Max\left( {u}_{A}({\boldsymbol{\text{x}}})- {d}_{A}\right) \left( {u}_{B}({\boldsymbol{\text{x}}})-{d}_{B}\right)</math> is utilized. Because the objective of an RPD problem is to minimize both process bias and variability, a constraint of <math display="inline">{u}_{i}({\boldsymbol{\text{x}}})\leq {d}_{i},\, i=A,B</math> is applied. After the players, their utility functions, and the disagreement point are identified, the Nash bargaining solution function is applied as below: | ||

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

| Line 224: | Line 250: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | <math display="inline">\left\{ {d}_{A} | + | |<math>\max </math> |

| + | | <math display="inline"> \left( {u}_{A}({\boldsymbol{\text{x}}})-{d}_{A}\right) \left( {u}_{B}({\boldsymbol{\text{x}}})-\right.\left. {d}_{B}\right) </math> | ||

| + | |- | ||

| + | |<math>s.t. </math> | ||

| + | |<math>{u}_{A}({\boldsymbol{\text{x}}})\leq {d}_{A},{u}_{B}({\boldsymbol{\text{x}}})\leq {d}_{B}, \qquad \mbox{and} \qquad {\boldsymbol{\text{x}}}\in \,X</math> | ||

|} | |} | ||

| style="width: 5px;text-align: right;white-space: nowrap;" | (10) | | style="width: 5px;text-align: right;white-space: nowrap;" | (10) | ||

|} | |} | ||

| + | where | ||

| − | and | + | {| style="text-align: center; margin:auto;" |

| − | + | |- | |

| + | |<math>u_A({\boldsymbol{\text{x}}})=\bigl(\widehat{\mu}({\boldsymbol{\text{x}}})-\tau\bigr)^2,\,u_B({\boldsymbol{\text{x}}})=\hat{\sigma}^2({\boldsymbol{\text{x}}})\,or\,\hat{\sigma}({\boldsymbol{\text{x}}})</math> | ||

| + | |- | ||

| + | |<math>\hat{\mu}({\boldsymbol{\text{x}}})=\alpha_0+{\boldsymbol{\text{x}}}^T\boldsymbol{\alpha_1}+{\boldsymbol{\text{x}}}^T\boldsymbol{\Gamma}{\boldsymbol{\text{x}}},\quad \mbox{and}\quad \hat{\sigma}^2({\boldsymbol{\text{x}}})=\beta_0+{\boldsymbol{\text{x}}}^T\boldsymbol{\beta_1}+{\boldsymbol{\text{x}}}^T\Delta{\boldsymbol{\text{x}}}</math> | ||

| + | |- | ||

| + | |<math>\hat{\sigma}({\boldsymbol{\text{x}}})=\gamma_0+{\boldsymbol{\text{x}}}^T\boldsymbol{\gamma_1}+{\boldsymbol{\text{x}}}^T\Epsilon{\boldsymbol{\text{x}}}</math> | ||

| + | |} | ||

| + | and, where | ||

{| class="formulaSCP" style="width: 100%; text-align: center;" | {| class="formulaSCP" style="width: 100%; text-align: center;" | ||

|- | |- | ||

| Line 237: | Line 275: | ||

{| style="text-align: center; margin:auto;" | {| style="text-align: center; margin:auto;" | ||

|- | |- | ||

| − | | <math display="inline">\left\{ { | + | | <math display="inline">{\boldsymbol{\text{x}}}=\left[ \, \begin{matrix}{x}_{1}\\{x}_{2}\\\, \begin{matrix}\vdots \\{x}_{n-1}\\{x}_{n}\end{matrix}\end{matrix}\right], \,{\mathit{\boldsymbol{\alpha }}}_{\mathit{\boldsymbol{1}}}=\left[ \, \begin{matrix}{\hat{\alpha }}_{1}\\{\hat{\alpha }}_{2}\\\, \begin{matrix}\vdots \\{\hat{\alpha }}_{n-1}\\{\hat{\alpha }}_{n}\end{matrix}\end{matrix}\right],\,{\mathit{\boldsymbol{\beta }}}_{\mathit{\boldsymbol{1}}}=\, \left[ \begin{matrix}{\hat{\beta }}_{1}\\\begin{matrix}{\hat{\beta }}_{2}\\\vdots \end{matrix}\\\begin{matrix}{\hat{\beta }}_{n-1}\\{\hat{\beta }}_{n}\end{matrix}\end{matrix}\right], \,{\mathit{\boldsymbol{\gamma }}}_{\mathit{\boldsymbol{1}}}=\, \left[ \begin{matrix}{\hat{\gamma }}_{1}\\\begin{matrix}{\hat{\gamma }}_{2}\\\vdots \end{matrix}\\\begin{matrix}{\hat{\gamma }}_{n-1}\\{\hat{\gamma }}_{n}\end{matrix}\end{matrix}\right],\quad \mbox{and}\quad \boldsymbol{\Gamma}=\, \left[ \begin{matrix}\begin{matrix}{\hat{\alpha }}_{11}&{\hat{\alpha }}_{12}/2\\{\hat{\alpha }}_{12}/2&{\hat{\alpha }}_{22}\end{matrix}&\cdots &\begin{matrix}{\hat{\alpha }}_{1n}/2\\{\hat{\alpha }}_{2n}/2\end{matrix}\\\vdots &\ddots &\vdots \\\begin{matrix}{\hat{\alpha }}_{1n}/2&{\hat{\alpha }}_{2n}/2\end{matrix}&\cdots &{\hat{\alpha }}_{nn}\end{matrix}\right] </math> |

|} | |} | ||

| − | | | + | |- |

| + | |<math>\boldsymbol{\Delta}=\, \left[ \begin{matrix}\begin{matrix}{\hat{\beta }}_{11}&{\hat{\beta }}_{12}/2\\{\hat{\beta }}_{12}/2&{\hat{\beta }}_{22}\end{matrix}&\cdots &\begin{matrix}{\hat{\beta }}_{1n}/2\\{\hat{\beta }}_{2n}/2\end{matrix}\\\vdots &\ddots &\vdots \\\begin{matrix}{\hat{\beta }}_{1n}/2&{\hat{\beta }}_{2n}/2\end{matrix}&\cdots &{\hat{\beta }}_{nn}\end{matrix}\right],\quad \mbox{and}\quad\boldsymbol{\Epsilon}=\, \left[ \begin{matrix}\begin{matrix}{\hat{\gamma }}_{11}&{\hat{\gamma }}_{12}/2\\{\hat{\gamma }}_{12}/2&{\hat{\gamma }}_{22}\end{matrix}&\cdots &\begin{matrix}{\hat{\gamma }}_{1n}/2\\{\hat{\gamma}}_{2n}/2\end{matrix}\\\vdots &\ddots &\vdots \\\begin{matrix}{\hat{\gamma}}_{1n}/2&{\hat{\gamma }}_{2n}/2\end{matrix}&\cdots &{\hat{\gamma }}_{nn}\end{matrix}\right]</math> | ||

|} | |} | ||

| − | + | where <math>(d_A, d_B)</math>, <math>u_A({\boldsymbol{\text{x}}})</math>, <math>u_B({\boldsymbol{\text{x}}})</math>, <math>\hat{\mu}({\boldsymbol{\text{x}}})</math>, <math>\hat{\sigma}^2({\boldsymbol{\text{x}}})</math>, <math>\hat{\sigma}({\boldsymbol{\text{x}}})</math>, <math>\tau</math>, <math>X</math> and <math>\bf x</math> represent the disagreement point, the utility functions for players A and B, the estimated process mean function, the process variance function, the standard deviation function, the target value, the feasible area, and the vector of controllable factors, respectively. In Eq.(10), <math>\boldsymbol{\alpha_1} </math>, <math>\boldsymbol{\beta_1} </math>, <math>\boldsymbol{\gamma_1} </math>, <math>\boldsymbol{\Gamma} </math>, <math>\boldsymbol{\Delta} </math>, and <math>\boldsymbol{\Epsilon} </math> denote the vectors and matrices of estimated regression coefficients for the process mean, variance, and standard deviation, respectively. Here, the constraint <math>u_i({\boldsymbol{\text{x}}})\leq d_i </math>, where <math>i=A,B </math>, ensures that the obtained agreement point payoffs will be at least as good as the disagreement point payoffs. Otherwise, there is no reason for the players to participate in the negotiation. |
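The model in Eq.(10) can be sketched end to end on a toy problem, assuming a single controllable factor and a grid approximation of the feasible area. The utilities `u_A` and `u_B` below are hypothetical stand-ins for the fitted bias and variability functions, and the grid search replaces whatever nonlinear solver would be used in practice.

```python
# Hypothetical utilities (both to be minimized), not the paper's fitted models.
def u_A(x):
    return (x - 1.0) ** 2        # stand-in for the process bias


def u_B(x):
    return (x + 1.0) ** 2 + 0.5  # stand-in for the process variability


# Feasible area X = [-2, 2], discretized with step 0.01.
X = [i / 100 - 2 for i in range(401)]

# Disagreement point, following Eqs.(8)-(9).
d_A = u_A(min(X, key=u_B))
d_B = u_B(min(X, key=u_A))

# Constraints of Eq.(10): each player does at least as well as at disagreement.
feasible = [x for x in X if u_A(x) <= d_A and u_B(x) <= d_B]

# Objective of Eq.(10): both factors are non-positive on the feasible set,
# so their product is non-negative and grows as both utilities fall below d.
x_star = max(feasible, key=lambda x: (u_A(x) - d_A) * (u_B(x) - d_B))
agreement = (u_A(x_star), u_B(x_star))
```

Because both utilities are minimized, the two bracketed terms are negative in the interior of the feasible set, which is why maximizing their product still rewards improvements for both players.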

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | Here, the constraint | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

== 5. Numerical illustrations and sensitivity analysis == | == 5. Numerical illustrations and sensitivity analysis == | ||

| Line 298: | Line 287: | ||

===5.1 Numerical example 1=== | ===5.1 Numerical example 1=== | ||

| − | <span id='_Hlk60583940'></span>Two numerical examples are conducted to | + | <span id='_Hlk60583940'></span> |

| + | Two numerical examples are conducted to demonstrate the efficiency of the proposed method. As explained in section 3.1, the process variability can be measured in terms of either the estimated standard deviation or the variance function, but the optimal solutions can differ if different response surface expressions are used. Therefore, the equations estimated in the original example were utilized for better comparison. Example 1 investigates the relationship between the coating thickness of bare silicon wafers (<math>y </math>) and three control variables: mould temperature <math display="inline">({x}_{1})</math>, injection flow rate <math display="inline">({x}_{2})</math>, and cooling rate <math display="inline">{(x}_{3})</math> [10]. A central composite design and three replications were conducted, and the detailed experimental data with coded values are shown in [[#tab-1|Table 1]]. | ||

| + | <span id='cite-46'></span> | ||

| − | <div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | + | <div class="center" style="width: auto; margin-left: auto; margin-right: auto;font-size: 75%;"> |

| − | ''' | + | '''Table 1'''. Data for numerical example 1</div> |

| − | {| style=" | + | <div id='tab-1'></div> |

| + | {| class="wikitable" style="margin: 1em auto 0.1em auto;border-collapse: collapse;font-size:85%;width:auto;" | ||

| + | |-style="text-align:center" | ||

| + | ! Experiments number !! <math>x_1</math> !! <math>x_2</math> !! <math>x_3</math> !! <math>y_1</math> !! <math>y_2</math> !!<math>y_3</math> !! <math>y_4</math> !! <math>\overline{\mathit{y}}</math> !! <math>\mathit{\sigma }</math> | ||

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |1 |

| − | + | | style="text-align: center;" |-1 | |

| − | + | | style="text-align: center;" |-1 | |

| − | | | + | | style="text-align: center;" |-1 |

| − | | | + | | style="text-align: center;" |76.30 |

| − | | | + | | style="text-align: center;" |80.50 |

| − | | | + | | style="text-align: center;" |77.70 |

| − | | | + | | style="text-align: center;" |81.10 |

| − | | | + | | style="text-align: center;" |78.90 |

| − | | | + | | style="text-align: center;" |2.28 |

| − | | | + | |

| − | | | + | |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |2 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |-1 |

| − | | | + | | style="text-align: center;" |-1 |

| − | | | + | | style="text-align: center;" |79.10 |

| − | | | + | | style="text-align: center;" |81.20 |

| − | | | + | | style="text-align: center;" |78.80 |

| − | | | + | | style="text-align: center;" |79.60 |

| − | | | + | | style="text-align: center;" |79.68 |

| − | | | + | | style="text-align: center;" |1.07 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |3 |

| − | | | + | | style="text-align: center;" |-1 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |-1 |

| − | | | + | | style="text-align: center;" |82.50 |

| − | | | + | | style="text-align: center;" |81.50 |

| − | | | + | | style="text-align: center;" |79.50 |

| − | | | + | | style="text-align: center;" |80.90 |

| − | | | + | | style="text-align: center;" |81.10 |

| − | | | + | | style="text-align: center;" |1.25 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |4 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |-1 |

| − | | | + | | style="text-align: center;" |72.30 |

| − | | | + | | style="text-align: center;" |74.30 |

| − | | | + | | style="text-align: center;" |75.70 |

| − | | | + | | style="text-align: center;" |72.70 |

| − | | | + | | style="text-align: center;" |73.75 |

| − | | | + | | style="text-align: center;" |1.56 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |5 |

| − | | | + | | style="text-align: center;" |-1 |

| − | | | + | | style="text-align: center;" |-1 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |70.60 |

| − | | | + | | style="text-align: center;" |72.70 |

| − | | | + | | style="text-align: center;" |69.90 |

| − | | | + | | style="text-align: center;" |71.50 |

| − | | | + | | style="text-align: center;" |71.18 |

| − | | | + | | style="text-align: center;" |1.21 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |6 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |-1 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |74.10 |

| − | | | + | | style="text-align: center;" |77.90 |

| − | | | + | | style="text-align: center;" |76.20 |

| − | | | + | | style="text-align: center;" |77.10 |

| − | | | + | | style="text-align: center;" |76.33 |

| − | | | + | | style="text-align: center;" |1.64 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |7 |

| − | | | + | | style="text-align: center;" |-1 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |78.50 |

| − | | | + | | style="text-align: center;" |80.00 |

| − | | | + | | style="text-align: center;" |76.20 |

| − | | | + | | style="text-align: center;" |75.30 |

| − | | | + | | style="text-align: center;" |77.50 |

| − | | | + | | style="text-align: center;" |2.14 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |8 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |1 |

| − | | | + | | style="text-align: center;" |84.90 |

| − | | | + | | style="text-align: center;" |83.10 |

| − | | | + | | style="text-align: center;" |83.90 |

| − | | | + | | style="text-align: center;" |83.50 |

| − | | | + | | style="text-align: center;" |83.85 |

| − | | | + | | style="text-align: center;" |0.77 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |9 |

| − | | | + | | style="text-align: center;" |-1.682 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |74.10 |

| − | | | + | | style="text-align: center;" |71.80 |

| − | | | + | | style="text-align: center;" |72.50 |

| − | | | + | | style="text-align: center;" |71.90 |

| − | | | + | | style="text-align: center;" |72.58 |

| − | | | + | | style="text-align: center;" |1.06 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |10 |

| − | | | + | | style="text-align: center;" |1.682 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |76.40 |

| − | | | + | | style="text-align: center;" |78.70 |

| − | | | + | | style="text-align: center;" |79.20 |

| − | | | + | | style="text-align: center;" |79.30 |

| − | | | + | | style="text-align: center;" |78.40 |

| − | | | + | | style="text-align: center;" |1.36 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |11 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |-1.682 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |79.20 |

| − | | | + | | style="text-align: center;" |80.70 |

| − | | | + | | style="text-align: center;" |81.00 |

| − | | | + | | style="text-align: center;" |82.30 |

| − | | | + | | style="text-align: center;" |80.80 |

| − | | | + | | style="text-align: center;" |1.27 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |12 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |1.682 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |77.90 |

| − | | | + | | style="text-align: center;" |76.40 |

| − | | | + | | style="text-align: center;" |76.90 |

| − | | | + | | style="text-align: center;" |77.40 |

| − | | | + | | style="text-align: center;" |77.15 |

| − | | | + | | style="text-align: center;" |0.65 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |13 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |-1.682 |

| − | | | + | | style="text-align: center;" |82.40 |

| − | | | + | | style="text-align: center;" |82.70 |

| − | | | + | | style="text-align: center;" |82.60 |

| − | | | + | | style="text-align: center;" |83.10 |

| − | | | + | | style="text-align: center;" |82.70 |

| − | | | + | | style="text-align: center;" |0.29 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |14 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |1.682 |

| − | | | + | | style="text-align: center;" |79.70 |

| − | | | + | | style="text-align: center;" |82.40 |

| − | | | + | | style="text-align: center;" |81.00 |

| − | | | + | | style="text-align: center;" |81.20 |

| − | | | + | | style="text-align: center;" |81.08 |

| − | | | + | | style="text-align: center;" |1.11 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |15 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |70.40 |

| − | | | + | | style="text-align: center;" |70.60 |

| − | | | + | | style="text-align: center;" |70.80 |

| − | | | + | | style="text-align: center;" |71.10 |

| − | | | + | | style="text-align: center;" |70.73 |

| − | | | + | | style="text-align: center;" |0.30 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |16 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |70.90 |

| − | | | + | | style="text-align: center;" |69.70 |

| − | | | + | | style="text-align: center;" |69.00 |

| − | | | + | | style="text-align: center;" |69.90 |

| − | | | + | | style="text-align: center;" |69.88 |

| − | | | + | | style="text-align: center;" |0.78 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |17 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |70.70 |

| − | | | + | | style="text-align: center;" |71.90 |

| − | | | + | | style="text-align: center;" |71.70 |

| − | | | + | | style="text-align: center;" |71.20 |

| − | | | + | | style="text-align: center;" |71.38 |

| − | | | + | | style="text-align: center;" |0.54 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |18 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |70.20 |

| − | | | + | | style="text-align: center;" |71.00 |

| − | | | + | | style="text-align: center;" |71.50 |

| − | | | + | | style="text-align: center;" |70.40 |

| − | | | + | | style="text-align: center;" |70.78 |

| − | | | + | | style="text-align: center;" |0.59 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |19 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |0 |

| − | | | + | | style="text-align: center;" |71.50 |

| − | | | + | | style="text-align: center;" |71.10 |

| − | | | + | | style="text-align: center;" |71.20 |

| − | | | + | | style="text-align: center;" |70.00 |

| − | | | + | | style="text-align: center;" |70.95 |

| − | | | + | | style="text-align: center;" |0.66 |

|- | |- | ||

| − | | | + | | style="text-align: center;vertical-align: top;" |20 |

| − | + | | style="text-align: center;" |0 | |

| − | + | | style="text-align: center;" |0 | |

| − | + | | style="text-align: center;" |0 | |

| − | + | | style="text-align: center;" |71.00 | |

| − | + | | style="text-align: center;" |70.40 | |

| − | + | | style="text-align: center;" |70.90 | |

| − | | | + | | style="text-align: center;" |69.90 |

| − | + | | style="text-align: center;" |70.55 | |

| − | + | | style="text-align: center;" |0.51 | |

| − | | | + | |

| − | + | ||

| − | + | ||

| − | | | + | |

| − | | | + | |

| − | + | ||

| − | | | + | |

| − | | | + | |

| − | | | + | |

| − | | | + | |

| − | | | + | |

|} | |} | ||
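The summary columns of [[#tab-1|Table 1]] can be reproduced from the raw observations. The sketch below recomputes the mean and standard deviation for the first two experiments only; it assumes the tabulated <math>\sigma</math> is the sample standard deviation (divisor <math>n-1</math>), which matches the rows checked here.

```python
from statistics import mean, stdev

# Observations y1..y4 for experiments 1 and 2, copied from Table 1.
runs = {
    1: [76.30, 80.50, 77.70, 81.10],
    2: [79.10, 81.20, 78.80, 79.60],
}

# stdev() uses the n - 1 divisor (sample standard deviation).
summary = {run: (mean(values), stdev(values)) for run, values in runs.items()}

for run, (y_bar, sigma) in summary.items():
    print(f"experiment {run}: mean = {y_bar:.2f}, sd = {sigma:.2f}")
```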

| − | The | + | The fitted response functions for the process mean and standard deviation of the coating thickness are estimated by using LSM through the MINITAB software package as: |

| − | + | {| class="formulaSCP" style="width: 100%; text-align: center;" | |

| − | <math | + | |- |

| + | | | ||

| + | {| style="text-align: center; margin:auto;" | ||

| + | |- | ||

| + | | <math>\hat{\mu }\left({\bf x}\right) =\,72.21 +\, {\bf x}^{T} \boldsymbol{\alpha }_{1}+{\bf x}^{T}\boldsymbol{\Gamma} {\bf x}</math> | ||

| + | |} | ||

| + | | style="width: 5px;text-align: right;white-space: nowrap;" | (11) | ||

| + | |} | ||

| − | + | where | |

| − | + | {| class="formulaSCP" style="width: 100%; text-align: center;" | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | | + | | |

| + | {| style="text-align: center; margin:auto;" | ||

| + | |- | ||

| + | |<math>\boldsymbol{\alpha }_{1}=\, \left[ \begin{matrix}0.59\\-0.35\\-0.01\end{matrix}\right], \qquad \mbox{and} \qquad \boldsymbol\Gamma =\, \left[ \begin{matrix}0.28&0.045&0.83\\0.045&1.29&0.755\\0.83&0.755&1.85\end{matrix}\right] </math> | ||

| + | |} | ||

| + | |} | ||

| − | + | {| class="formulaSCP" style="width: 100%; text-align: center;" | |

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | | + | | |

| − | | | + | {| style="text-align: center; margin:auto;" |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | | + | | <math>\hat{\sigma }\left( {\bf x}\right)=\, 2.55\,+ {\bf x}^T\boldsymbol{\gamma}_1+{\bf x}^T \boldsymbol{\mathrm{E}} {\bf x}</math> |

| − | | | + | |} |

| − | | | + | | style="width: 5px;text-align: right;white-space: nowrap;" | (12) |

| − | + | |} | |

| − | + | ||

| − | | | + | where |

| − | + | ||

| − | + | {| class="formulaSCP" style="width: 100%; text-align: left;" | |

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | | + | | |

| − | | | + | {| style="text-align: center; margin:auto;width: 100%;" |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | | + | | style="text-align: center;" |<math>\boldsymbol\gamma_1=\, \left[ \begin{matrix}0.38\\-0.43\\0.56\end{matrix}\right], \qquad \mbox{and} \qquad \boldsymbol\mathrm{E}=\, \left[ \begin{matrix}0.49&-0.235&0.36\\-0.235&0.61&-0.06\\0.36&-0.06&0.85\end{matrix}\right] </math> |

| − | + | |} | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | | | + | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

|} | |} | ||

| + | Based on the proposed RPD procedure described in [[#img-3|Figure 3]], the two functions in Eqs.(11) and (12) (i.e., process bias and standard deviation) are regarded as the two players, and also as their associated utility functions, in the bargaining game. The disagreement point shown in [[#img-4|Figure 4]] is computed as <math display="inline">d=({d}_{A}, {d}_{B})=(1.2398, 3.1504)</math> by using Eqs.(8) and (9). The optimization problem is then solved by applying Eq.(10) under the additional constraint <math display="inline">\sum _{l=1}^{3}{{x}_{l}}^{2}\leq 3</math>, which represents the feasible experimental region. | ||
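The fitted models in Eqs.(11) and (12) can be evaluated directly at any candidate setting. The following is a minimal sketch in plain Python, not the authors' MINITAB or MATLAB code: the coefficients are copied from Eqs.(11) and (12), while the function names (<code>quadratic_model</code>, <code>mu_hat</code>, <code>sigma_hat</code>, <code>is_feasible</code>) are illustrative assumptions. The target value is not restated in this section, so the squared bias is not computed here.

```python
# Coefficients copied from Eqs. (11) and (12); names are illustrative only.
MU_0 = 72.21
ALPHA_1 = [0.59, -0.35, -0.01]
GAMMA = [
    [0.28, 0.045, 0.83],
    [0.045, 1.29, 0.755],
    [0.83, 0.755, 1.85],
]

SIGMA_0 = 2.55
GAMMA_1 = [0.38, -0.43, 0.56]
E = [
    [0.49, -0.235, 0.36],
    [-0.235, 0.61, -0.06],
    [0.36, -0.06, 0.85],
]


def quadratic_model(x, intercept, linear, quad):
    """Evaluate intercept + x^T linear + x^T quad x for a coded factor vector x."""
    linear_part = sum(b * xi for b, xi in zip(linear, x))
    quadratic_part = sum(
        x[i] * quad[i][j] * x[j] for i in range(len(x)) for j in range(len(x))
    )
    return intercept + linear_part + quadratic_part


def mu_hat(x):
    """Fitted process mean, Eq. (11)."""
    return quadratic_model(x, MU_0, ALPHA_1, GAMMA)


def sigma_hat(x):
    """Fitted process standard deviation, Eq. (12)."""
    return quadratic_model(x, SIGMA_0, GAMMA_1, E)


def is_feasible(x, radius_sq=3.0):
    """Check the experimental-region constraint sum(x_l^2) <= 3."""
    return sum(xi * xi for xi in x) <= radius_sq


if __name__ == "__main__":
    # Factor setting reported for the proposed model in Table 2.
    x_star = [-0.8473, 0.0399, 0.2248]
    print("feasible:", is_feasible(x_star))
    print("sigma_hat:", round(sigma_hat(x_star), 4))
```

Evaluating <code>sigma_hat</code> at the setting reported for the proposed model in Table 2 gives approximately 2.6101, which agrees with the tabulated variability value.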

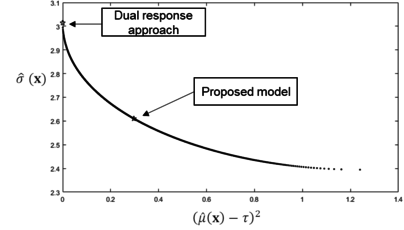

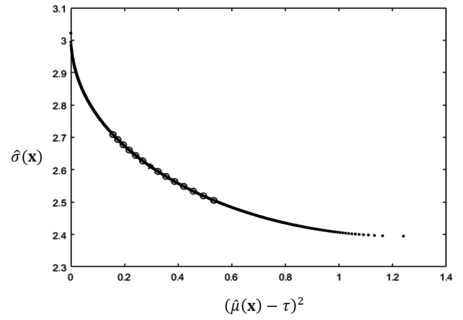

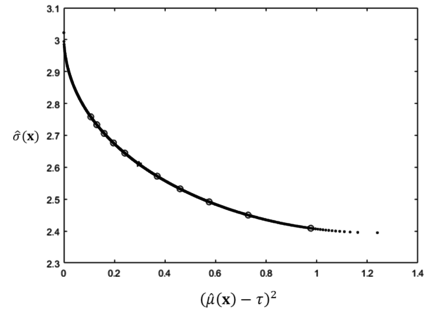

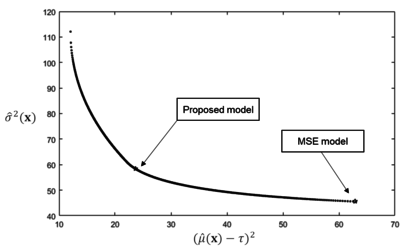

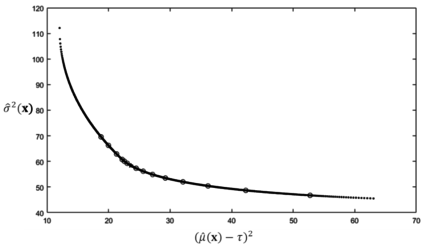

| − | + | The solution (i.e., <math display="inline">\left( \hat{\mu }({\bf x}^* ) -\tau \right)^{2}= 0.2967</math> and <math display="inline">\hat\sigma ({\bf x}^*) = 2.6101</math>) is calculated using the MATLAB software package. For comparison, the optimization results of the proposed method and the conventional dual response approach are summarized in [[#tab-2|Table 2]]. Based on [[#tab-2|Table 2]], the proposed method yields a lower MSE (7.1093 versus 9.0854) in this particular numerical example. To check the efficiency of the obtained results, the lexicographic weighted Tchebycheff approach is used to generate the associated Pareto frontier, which is shown in [[#img-5|Figure 5]]. |

| − | + | ||

| − | + | ||

| − | <math display="inline">\hat{\mu } | + | |

| − | + | ||

| − | + | ||

| − | <math display="inline"> | + | |

| − | + | ||

| − | + | ||

| − | <div style=" | + | <div class="center" style="width: auto; margin-left: auto; margin-right: auto;font-size: 75%;"> |

| − | + | '''Table 2'''. The optimization results of example 1</div> | |

| − | + | <div id='tab-2'></div> | |

| − | + | {| class="wikitable" style="margin: 1em auto 0.1em auto;border-collapse: collapse;font-size:85%;width:auto;" | |

| − | + | |-style="text-align:center" | |

| − | + | ! !! <math>{x}_{1}^{\ast }</math> !! <math>{x}_{2}^{\ast }</math> !! <math>{x}_{3}^{\ast }</math> !! <math>{\left( \hat{\mu }\left( {\bf x}^*\right) -\tau \right) }^{2}</math> !! <math>\hat{\sigma }({\bf x}^*)</math> !! MSE |

| − | + | ||

| − | + | ||

|- | |- | ||

| − | | style=" | + | | style="text-align: left;"|'''Dual response model with WLS''' |

| − | | style=" | + | | style="text-align: center;"|-1.4561 |

| − | | style=" | + | | style="text-align: center;"|-0.1456 |

| − | | style=" | + | | style="text-align: center;"|0.5596 |

| − | | style=" | + | | style="text-align: center;"|0 |

| − | | style=" | + | | style="text-align: center;"|3.0142 |

| + | | style="text-align: center;"|9.0854 | ||

|- | |- | ||

| − | | style=" | + | | style="text-align: left;"|'''Proposed model''' |

| − | | style=" | + | | style="text-align: center;"|-0.8473 |

| − | | style=" | + | | style="text-align: center;"|0.0399 |

| − | | style=" | + | | style="text-align: center;"|0.2248 |

| − | | style=" | + | | style="text-align: center;"|0.2967 |

| − | + | | style="text-align: center;"|2.6101 | |

| − | + | | style="text-align: center;"|7.1093 | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | | style=" | + | |

| − | | style=" | + | |

| − | + | ||

|} | |} | ||
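The MSE column of Table 2 can be reproduced by adding the tabulated squared bias to the square of the tabulated variability value (3.0142 and 2.6101, respectively). The short sketch below only rechecks that arithmetic; the function name <code>mse</code> and the dictionary layout are illustrative assumptions.

```python
def mse(squared_bias, std_dev):
    """Mean squared error as squared bias plus variance (std_dev squared)."""
    return squared_bias + std_dev ** 2


# (squared bias, variability value) pairs copied from Table 2.
TABLE_2 = {
    "Dual response model with WLS": (0.0, 3.0142),
    "Proposed model": (0.2967, 2.6101),
}

if __name__ == "__main__":
    for name, (squared_bias, std_dev) in TABLE_2.items():
        print(name, round(mse(squared_bias, std_dev), 4))
```

The two results round to 9.0854 and 7.1093, matching the MSE column, which indicates that the tabulated variability values are standard deviations rather than variances.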

| − | + | <div id='img-5'></div> | |

| + | {| style="text-align: center; border: 1px solid #BBB; margin: 1em auto; width: auto;max-width: auto;" | ||

| + | |- | ||

| + | |style="padding:10px;"| [[File:News.png|alt=|centre|404x404px|]] | ||

| + | |- style="text-align: center; font-size: 75%;" | ||

| + | | colspan="1" style="padding:10px;"| '''Figure 5'''. The optimization results plot with the Pareto frontier of example 1 | ||

| + | |} | ||

| − | |||

| − | |||

| − | + | As exhibited in [[#img-5|Figure 5]], the obtained Nash bargaining solution, which is plotted as a star, lies on the Pareto frontier. By using the concept of bargaining game theory, the interaction between process bias and variability can be incorporated while identifying a unique tradeoff result. As a result, the proposed method might provide a well-balanced optimal solution with respect to the process bias and variability in this particular example. |

| − | + | ||

| − | ===5. | + | === 5.2 Sensitivity analysis for numerical example 1=== |

| − | + | Based on the optimization results, a sensitivity analysis for different disagreement point values is then conducted for verification purposes, as shown in [[#tab-3|Table 3]]. While changing <math>d_A</math> by 10% increments and decrements with <math>d_B</math> fixed at 3.1504, the changing patterns of the process bias and variability values are investigated. |

| − | <div class="center" style=" | + | <div class="center" style="font-size: 75%;">'''Table 3'''. Sensitivity analysis results for numerical example 1 by changing <math display="inline">{d}_{A}</math></div> |

| − | ''' | + | |

| − | {| style=" | + | <div id='tab-3'></div> |

| + | {| class="wikitable" style="margin: 1em auto 0.1em auto;border-collapse: collapse;font-size:85%;width:auto;" | ||

| + | |-style="text-align:center" | ||

| + | !<math>{d}_{A}</math> !! <math>{d}_{B}</math> !! <math>\left(\left( \hat{\mu }({\bf x}^*) -\tau \right)^2 -{d}_{A}\right)\ast \left( \hat{\sigma }({\bf x}^*) -{d}_{B}\right)</math> !! <math>{\bf x}^*</math> !! <math>\left( \hat{\mu }({\bf x}^*) -\tau \right)^2</math> !! <math>\hat{\sigma }({\bf x}^*)</math> | ||

|- | |- | ||

| − | | style=" | + | | style="text-align: center;"|0.6589 |

| − | + | | style="text-align: center;"|3.1504 | |

| − | + | | style="text-align: center;"|0.2218 | |

| − | + | | style="text-align: center;"|[-1.0281 -0.0159 0.3253] | |

| − | + | | style="text-align: center;"|0.157 | |

| − | + | | style="text-align: center;"|2.7085 | |

| − | + | ||

| − | + | ||

| − | | style=" | + | |

| − | | style=" | + | |

| − | | style=" | + | |

| − | | style=" | + | |

| − | | style=" | + | |

|- | |- | ||

| style="text-align: center;"|0.7321 | | style="text-align: center;"|0.7321 | ||

| Line 860: | Line 708: | ||

| style="text-align: center;"|2.5191 | | style="text-align: center;"|2.5191 | ||

|- | |- | ||

| − | | style=" | + | | style="text-align: center;"|2.4160 |

| − | | style=" | + | | style="text-align: center;"|3.1504 |

| − | | style=" | + | | style="text-align: center;"|1.2148 |

| − | | style=" | + | | style="text-align: center;"|[-0.6002 0.1180 0.0847] |

| − | | style= | + | | style=text-align: center;"|0.5331 |

| − | | style=" | + | | style="text-align: center;"|2.5052 |

|} | |} | ||
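The Nash product in the third column of Table 3 follows from the other tabulated quantities. The sketch below rechecks two rows of the table in plain Python; the function name <code>nash_product</code> and the tuple layout are illustrative assumptions, and only values printed in the table are used.

```python
def nash_product(squared_bias, std_dev, d_a, d_b):
    """Product of each player's deviation from its disagreement value."""
    return (squared_bias - d_a) * (std_dev - d_b)


# (d_A, d_B, squared bias, sigma) copied from two rows of Table 3.
ROWS = [
    (0.6589, 3.1504, 0.157, 2.7085),
    (2.4160, 3.1504, 0.5331, 2.5052),
]

if __name__ == "__main__":
    for d_a, d_b, squared_bias, std_dev in ROWS:
        product = nash_product(squared_bias, std_dev, d_a, d_b)
        print(d_a, d_b, round(product, 4))
```

The products round to 0.2218 and 1.2148, as tabulated. In both rows each factor is negative, that is, the squared bias lies below <math display="inline">{d}_{A}</math> and the standard deviation lies below <math display="inline">{d}_{B}</math>, so both players improve on the disagreement point.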

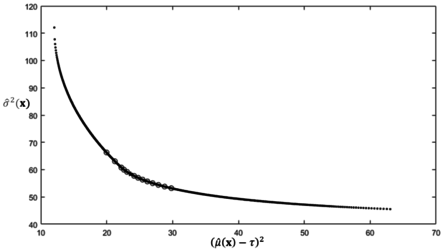

| − | As shown in Table | + | As shown in [[#tab-3|Table 3]], if only <math display="inline">{d}_{A}</math> increases, the optimal squared bias <math display="inline">(\hat{\mu }({\bf x}^*)-\tau )^{2}</math> increases while the process variability <math display="inline">\hat{\sigma }\left( {\bf x}^*\right)</math> decreases. All of the optimal solutions obtained by using the proposed method are plotted as circles and compared with the Pareto optimal solutions generated by using the lexicographic weighted Tchebycheff method. The obtained solutions lie on the Pareto frontier, as shown in [[#img-6|Figure 6]]. |

| − | <div | + | <div id='img-6'></div> |

| − | + | {| style="text-align: center; border: 1px solid #BBB; margin: 1em auto; width: auto;max-width: auto;" | |

| + | |- | ||

| + | |style="padding:10px;"| [[File:Draft_Shin_691882792-image7.png|centre|463x463px]] | ||

| + | |- style="text-align: center; font-size: 75%;" | ||

| + | | colspan="1" style="padding:10px;"| '''Figure 6'''. Plot of sensitivity analysis results with the Pareto frontier for numerical example 1 by changing <math display="inline">{d}_{A}</math> | ||

| + | |} | ||

| − | |||

| − | |||

| − | On the | + | On the other hand, if <math display="inline">{d}_{A}</math> is held constant and <math display="inline">{d}_{B}</math> is changed by 5% each time, the results are summarized in [[#tab-4|Table 4]] and plotted in [[#img-7|Figure 7]]. |

| − | <div class="center" style=" | + | <div class="center" style="font-size: 75%;">'''Table 4'''. Sensitivity analysis results for numerical example 1 by changing <math display="inline">{d}_{B}</math></div> |

| − | ''' | + | |

| − | {| style=" | + | <div id='tab-4'></div> |

| + | {| class="wikitable" style="margin: 1em auto 0.1em auto;border-collapse: collapse;font-size:85%;width:auto;" | ||

| + | |-style="text-align:center" | ||