| Line 1: | Line 1: | ||

<!-- metadata commented in wiki content | <!-- metadata commented in wiki content | ||

| − | + | ||

<div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | <div class="center" style="width: auto; margin-left: auto; margin-right: auto;"> | ||

<big>'''Information release right of scientific research institutions in health emergencies: Taking China as the research object'''</big></div> | <big>'''Information release right of scientific research institutions in health emergencies: Taking China as the research object'''</big></div> | ||

Revision as of 02:25, 30 July 2025

Abstract

The integration of autonomous robotic systems is pivotal for advancing agricultural mechanization. A critical challenge impeding their widespread adoption is the achievement of reliable, self-sufficient navigation, particularly in environments where conventional positioning systems are compromised. While Global Navigation Satellite Systems (GNSS), often fused with auxiliary sensors, represent a primary solution for outdoor robot guidance, their susceptibility to signal occlusion necessitates alternative, stable methodologies for consistent operation. Addressing this limitation, this paper presents a novel, model-based navigation algorithm engineered specifically for orchard robots operating under prolonged GNSS denial. The core methodology leverages a deterministic kinematic model, deliberately neglecting higher-order dynamic effects justified by the inherently low operational speeds mandated in precision agricultural settings. This model directly processes commanded trajectory coordinates and real-time vehicle state estimates derived solely from incremental wheel encoders and a steering angle sensor. Within the MATLAB/Simulink environment, this transformation is implemented to generate precise longitudinal velocity and angular steering rate commands necessary for path tracking. Empirical validation was conducted through rigorous simulation and field trials employing a non-holonomic mobile robot platform in representative outdoor conditions. Performance evaluation confirmed the robot’s capability for satisfactory autonomous navigation. Quantitatively, the normalized root mean square error (NRMSE) for lateral path deviation during turning maneuvers ranged between 0.2 and 0.4, while straight-line travel exhibited minimal steering offset, typically within ±0.05 degrees. Furthermore, the correlation coefficient between the model’s predicted steering output and the actual commanded input consistently approached 0.99. Collectively, the results demonstrate that the proposed sensor-minimized, model-driven approach provides a viable and realistic foundation for achieving resilient vehicle navigation in structurally complex agricultural domains where GNSS reliability cannot be assured.

Keywords: Autonomous Navigation; Agricultural Robotics; GNSS-Denied Environments; Kinematic Modeling; Path Tracking Control

1. Introduction

The deployment of self-governing robotic platforms within agriculture has accelerated, driven by the need for novel vehicle solutions that elevate farm output, operational efficiency, and the capacity to execute diverse tasks within inherently variable agricultural settings. This trend offers a promising mitigation strategy for the growing scarcity of agricultural labor. Fundamental to the realization of such autonomous mobile systems is the capability for self-directed navigation. While the conceptual foundation for agricultural robotics was established as early as the mid-1980s [1], practical implementation has surged significantly in recent times. This acceleration is largely attributable to concurrent breakthroughs in computational capabilities, sensor technologies [2], and the advent of powerful, affordable processors. Progress in electronics and the commercialization of cost-effective sensors have rendered the development of economically viable robotic platforms for farm environments increasingly feasible. Nevertheless, the aspiration for fully autonomous robotic or vehicular movement remains partially unrealized within contemporary robotics, constrained by persistent technical hurdles. Outdoor navigation for agricultural robots presents particular difficulties, stemming from the challenge of obtaining precise environmental perception amidst fluctuating weather patterns, diverse terrain, and dynamic vegetation. Consequently, developing robust sensing and control strategies capable of accommodating these environmental characteristics is paramount. Supporting this, research by Bac et al. specifically investigated the detrimental impact of environmental variability on the operational efficacy of a harvesting robot [3]. Achieving a precise interpretation of environmental features and deploying resilient technologies for navigation and environmental detection under demanding conditions is crucial for striking an optimal balance between system cost and technological sophistication [4].

Affordability is a critical consideration in agricultural technology development, given the primary end-users are farmers operating within constrained financial frameworks compared to other vehicle markets. System complexity inherently drives cost escalation, making the specific operational environment a vital factor in design philosophy. Orchards, characterized by their semi-structured spatial organization and defined biological architecture, present a highly conducive environment for the initial large-scale adoption of robotics and automation in farming [5]. This suitability is further amplified by a pressing concern: the diminishing availability of skilled seasonal labor poses a substantial risk to orchard sustainability, thereby establishing orchard automation as a critical priority [5].

Ongoing research and development efforts signal positive growth indicators for the agricultural robotics sector and the vital transition of these technologies from laboratory prototypes to practical field deployment, irrespective of current manufacturing volumes. Indeed, the pace of innovation in autonomous navigation specifically within agricultural robotics parallels trends observed in the broader automotive market, though the absolute scale of development may currently differ.

Accurate localization constitutes a fundamental pillar for autonomous navigation in mobile robots, demanding sophisticated sensor integration and algorithmic processing. It remains a highly active area demanding continued advancement. Wang et al. explored the utilization of machine vision for robotic positioning and pathfinding [6]. Blok et al. demonstrated a combined Particle Filter and Kalman Filter approach for probabilistic localization, employing a 2D LIDAR scanner to enable in-row navigation for orchard robots [7]. Their empirical findings indicated superior performance of the Particle Filter model over the Kalman Filter in this specific context. The critical influence of the environment on localization efficacy is well-recognized; Schwarting et al. detailed the principal localization challenges encountered by terrestrial robots operating in diverse settings [8]. They offered a comprehensive analysis of existing methodologies tackling these challenges, their inherent limitations, and prospective future directions. Bechar and Vigneault provided a parallel overview of design, development, and operational considerations for agricultural robots, including their constraints, emphasizing the necessity for sensor fusion to achieve adequate localization and environmental awareness, alongside the development of adaptive path planning and navigation algorithms for variable conditions [9]. Contemporary research frequently focuses on enhancing navigation reliability through the fusion of Global Positioning System (GPS) data with complementary sensor modalities. For instance, Winterhalter et al. proposed an approach combining GNSS-referenced maps with GNSS signals for in-field localization and autonomous traversal along crop rows [10]. Thomasson et al. documented progress in automatic guidance and steering control, highlighting commercially available systems primarily reliant on Global Navigation Satellite Systems (GNSS) [11]. However, these commercial solutions are predominantly tailored for tractors and often necessitate human intervention when encountering unexpected field or navigation anomalies. Alternative configurations include the use of dual RTK-GPS receivers for navigating 4-wheel-steering agricultural rovers, or localization relying on laser scanners combined with wheel and steering encoders, albeit without integrated obstacle avoidance [12,13]. Zaidner and Shapiro presented a model-based state estimation approach for a vineyard robot utilizing sensor fusion. Recognizing the complexity of real-world testing [14], Linz et al. developed a 3D simulation environment using Gazebo for preliminary virtual validation of robotic navigation algorithms employing image-based sensors, laser scanners, and GPS, prior to outdoor deployment [15].

As evidenced by the literature, agricultural machinery and automated guidance systems extensively leverage GNSS for navigation and positioning. However, a significant limitation of GNSS-centric approaches is their inability to dynamically perceive and respond to the immediate environment [16]. Furthermore, sole reliance on GPS proves unreliable within orchards due to signal degradation and multipath interference caused by dense tree canopies during in-row operation [17]. Standard GPS offers limited positional accuracy (typically 1-2 meters), insufficient for precise autonomous navigation. While centimeter-level accuracy solutions (e.g., RTK-GNSS) exist, their high cost presents a barrier. The economic viability of systems is further compromised when integrating other expensive sensors.

Beyond localization, achieving full autonomy necessitates integrated environmental mapping and motion planning modules. Understanding the seamless interaction between these sub-systems is essential for the effective verification of overall control software. The inherent complexity of agricultural robotic vehicles, arising from diverse subsystems and demanding operational requirements, suggests that a model-based design (MBD) methodology offers a promising framework for current development efforts. While MBD is well-established and continually evolving within the automotive industry, particularly for embedded systems, its application in agricultural contexts presents distinct modeling and simulation challenges. Therefore, this paper details the development of a navigation control system for an orchard mobile robot utilizing a model-based design paradigm. The core objective is to create a robot capable of autonomous navigation in GNSS-restricted zones, employing minimal sensor interactions, computationally efficient algorithms, and requiring only minor modifications to its base platform configuration. The system leverages a localization strategy predicated on the provision of a pre-existing environmental map.

2. System modelling and simulation

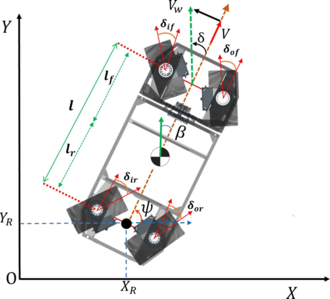

The robotic system depicted in Figure 1 is configured as a four-wheel steering (4WS) vehicle, conceptually akin to a conventional automobile. Its operation is constrained to a two-dimensional (2D) planar domain, wherein idealized rolling motion is presumed in the absence of skidding. The robot’s state is formally defined within a configuration space described by:

|

|

(1) |

|

| Figure 1. Schematic representation of the kinematic configuration employed in the four-wheel steering robotic platform |

In this study, a kinematic representation of the 4WS robotic platform has been adopted. Considering the regime of low-velocity traversal, and under the assumption that wheel velocity vectors align with the instantaneous direction of travel while tire forces remain negligible, a non-dynamic kinematic framework has been employed. The resultant formulation is expressed as:

|

|

(2) |

Here, and designate the Cartesian coordinates of the geometric center located along the rear axle, while v denotes the linear forward speed. The angular orientation of the vehicle is symbolized by , with corresponding to the yaw rate. The angular displacements of the front and rear wheels are represented by and , respectively, and their combined effect is consolidated in the equivalent steering angle . These steering angles are designed to be equal in magnitude but directed oppositely across the front and rear wheel assemblies. The parameters and indicate the longitudinal distances from the vehicle’s center of mass to the respective axles. The parameter , denoting the slip angle, is regarded as negligible in the context of low-speed locomotion, with similar assumptions applied to individual wheel side-slip angles.

The range of admissible values for the steering configuration is constrained within the following interval: , which defines the upper and lower bounds of the equivalent steering angle. This angle serves as a surrogate for representing the aggregate steering effect of both front and rear wheels as if a single wheel were positioned at each end. The equivalent angle is formulated as:

|

|

(3) |

where and denote the steering angles of the robot’s inner and outer wheels, respectively. This formulation remains valid under the assumption of symmetrical steering inputs, and the value of is constrained to lie within a specified range, expressed as:

|

|

(4) |

3. Controller design architecture

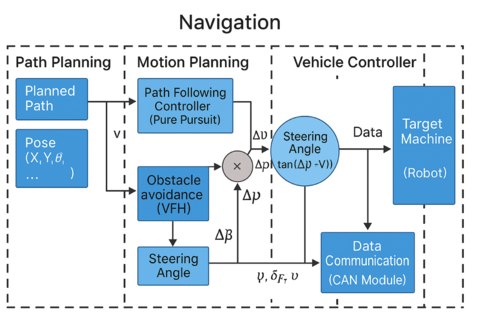

The controller software architecture delineates the high-level structure of constituent modules and their inter-module interactions. As illustrated in Figure 2, the robot’s navigational control system is fundamentally structured around three core computational modules: the path planner, the motion planner, and the vehicle controller. This integrated navigation algorithm is implemented exclusively within the MATLAB® and Simulink® simulation environment.

|

| Figure 2. Hierarchical control architecture devised for autonomous navigational decision-making |

The path planning module processes environmental imagery, translating it into a binary probabilistic map representing navigable space. Specifically, satellite imagery sourced from providers like Google is converted into this occupancy grid format, which subsequently serves as the foundational spatial representation for both robot navigation and self-localization tasks. This generated map explicitly demarcates traversable regions from non-drivable areas (e.g., obstacles, boundaries) within the operational environment. Leveraging this grid map, the module derives an optimal navigable trajectory for the robot. Detailed exposition of the specific path generation algorithms employed is omitted here, as it constitutes a distinct research domain beyond the present article’s scope. Upon successful path computation, this module outputs the requisite sequence of driving coordinates essential for guiding the robot through the designated environment.

The vehicle controller actuates the commands generated by the motion planner module. It translates the computed linear and angular velocity setpoints into specific translational velocity and steering angle commands appropriate for the target robotic platform. These actionable directives are transmitted to the physical robot via a dedicated data communication interface. Crucially, sensor feedback – including wheel encoder data and steering angle measurements – is relayed back to the path tracking controller, enabling continuous closed-loop correction to maintain the desired trajectory under kinematic constraints.

3.1 Lateral control design strategy

To ensure strict adherence to the pre-defined navigation path, the implementation of an effective lateral control strategy is indispensable for governing vehicular steering dynamics. In the present study, robotic trajectory tracking has been facilitated through a pure pursuit control scheme, integrated with vehicle odometry data and a two-dimensional reference map. The realization of accurate navigation necessitates the simultaneous orchestration of both lateral and longitudinal control actions. Specifically, the steering control law—mathematically represented in Eq. (5)—is composed of a heading error correction (yaw/orientation compensation) and lateral position deviation suppression relative to the designated path:

|

|

(5) |

The heading error is defined as the angular deviation between the target path orientation and the robot’s actual heading , whereas the lateral error quantifies the perpendicular displacement between the vehicle’s center of gravity and the reference trajectory:

|

|

(6) |

Here, and respectively represent the vehicle’s longitudinal and lateral velocity components. As detailed in Section 2, under the employed four-wheel steering (4WS) configuration—illustrated in Figure 3—the lateral velocity component is considered negligible at low speeds to facilitate optimal steering precision. Consequently, Eq. (7) may be reexpressed as:

|

|

(7) |

|

| Figure 3. Depiction of the elWobot’s inverse four-wheel steering configuration under zero lateral slip conditions |

This specific control regime, wherein the lateral slip is eliminated, is termed the Zero-side-slip maneuver, a condition characteristically achieved under reduced velocity conditions. Under such circumstances, the heading angle is equated with the vehicle’s course direction , i.e., the actual trajectory orientation:

|

|

(8) |

However, due to physical constraints imposed by steering geometry and the attainable side-slip angle , the permissible steering angle required for trajectory realization is bounded as follows:

|

|

(9) |

Alternatively, Eq. (10) provides the steering angle condition necessary to achieve the desired orientation alignment for trajectory following.

|

|

(10) |

The configuration illustrated in Figure 3 represents the elWobot in a 4WS-negative alignment during the Zero-side-slip maneuver.

Due to limitations in sensor-based localization, the robot’s actual heading 𝜓 is inferred indirectly via its steering angle and velocity. The heading error is regulated by the PID controller, which is structured as a nonlinear, discrete-time single-input single-output (SISO) system constrained by predefined saturation bounds:

3.2 Model-based PID controller tuning

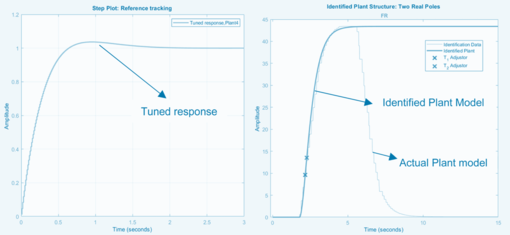

Effective controller implementation necessitates precise calibration of gain parameters to achieve optimal system performance. Post-design PID tuning constitutes a critical phase for determining ideal proportional, integral, and derivative coefficients that govern controller behavior. While conventional manual tuning remains prevalent, this approach demands considerable expertise from control engineers and frequently evolves into a protracted, iterative process. To circumvent these limitations, our research adopts an automated model-based tuning framework leveraging MATLAB/Simulink’s computational ecosystem. Specifically, we employ Simulink Control Design™ alongside the System Identification Toolbox™ to implement this data-driven methodology. The technique requires a linearized plant approximation, presenting implementation challenges for our inherently nonlinear robotic system. To address this, operational open-loop response datasets are acquired under controlled input conditions. These experimental datasets enable localized linearization within defined operational envelopes, permitting the PID tuner to construct region-specific transfer function approximations. Our implementation utilizes reference trajectory tracking with bounded output constraints during optimization, applying this standardized approach to both steering and velocity control subsystems. Figure 4 illustrates this model-based workflow for steering control, where a dedicated plant model is empirically derived from robotic steering response characteristics. Subsequent system identification procedures generate the foundational transfer function model essential for PID coefficient optimization.

|

| Figure 4. Steering controller calibration via model-informed PID parameter tuning |

The velocity control subsystem undergoes specialized tuning within the navigational velocity spectrum (0.45–2.25 m/s), reflecting operational boundary conditions. Strategic tuning prioritizes lower velocity regimes to prevent destabilization during slow-speed maneuvers while maintaining acceptable higher-speed responsiveness. As documented in Table 1, the finalized gain parameters reflect platform-specific optimization for both control dimensions. Analysis of tuned responses reveals an intentional design compromise: Minor overshoot (observable in steering response plots) is deliberately preserved to enhance transient performance characteristics. Complete overshoot elimination induces excessive system lethargy, extending stabilization periods beyond functional requirements. Consequently, controller calibration embodies a deliberate equilibrium between dynamic responsiveness and stabilization precision—a fundamental tradeoff in transient performance optimization. This balance ensures adequate reference tracking agility without compromising operational stability, particularly crucial during trajectory execution under variable inertial conditions.

Table 1. Optimized gain parameters for PID-based regulation of velocity and steering dynamics

| Control | P | I | D |

| Velocity | 0.5106 | 2.153 | -0.005 |

| Steering | 1.35 | 3.537 | 0.30 |

3.3 Model integration

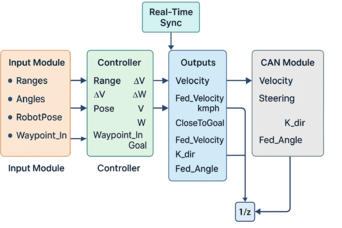

The comprehensive navigation framework synthesizes unit-level and subsystem components into an integrated operational model. This architectural integration establishes deterministic data exchange protocols across functional segments, enabling holistic system validation. A significant advantage of this modular design lies in its cross-project reusability: Pre-validated components accelerate development cycles for future autonomous platforms. Debugging efficiency is substantially enhanced through fault isolation capabilities, allowing targeted module inspection rather than full-system analysis. As visualized in Figure 5, the implemented robotic navigation system partitions functionality into four principal domains:

|

| Figure 5. Fully integrated and operational model of the robotic navigation framework |

(1) Sensor Fusion Interface: Processes both live sensor streams and simulated inputs essential for localization. This includes ingestion of trajectory waypoint sequences generated by path planning algorithms.

(2) Motion Control Core: Embeds the Pure Pursuit tracking controller that computes steering directives using egocentric localization estimates and navigational waypoints. Pose data originates from odometric measurements correlated against prior path planning operations on occupancy grid maps. These environmental representations derive from georeferenced 2D raster workspace models.

(3) Kinematic Output Hub: Transmits derived velocity setpoints and steering directives to drive systems. Continuously monitors Euclidean distance to target coordinates, issuing null motion commands when the robot enters predefined terminal proximity thresholds.

(4) CAN Communication Gateway: Translates kinematic directives into Controller Area Network messages for actuator control. Incorporates diagnostic telemetry channels and failsafe triggers for real-time system health monitoring.

All computational processes execute within a rigorously enforced 10ms deterministic cycle. Since native Simulink® lacks inherent real-time capability, temporal synchronization is achieved through dedicated Real-Time Sync blocks. These enforce hardware-timed execution intervals that emulate embedded deployment conditions during simulation. The fail-safe subsystem autonomously triggers protective shutdown protocols upon detecting critical anomalies, including actuator faults or deviation beyond safe operating envelopes. Diagnostic messaging provides continuous state observability through standardized SAE J1939 telemetry frames. This temporal formalization ensures controller outputs maintain phase alignment with sensor inputs - a critical requirement for closed-loop stability during high-speed navigation. The synchronized execution environment further enables valid performance benchmarking against real-world timing constraints prior to physical deployment.

4. Simulation results and discussion

This segment presents empirical validation results for the elWObot robotic platform’s navigation controller through co-simulation and field trials. The integrated development framework leveraged MATLAB/Simulink for model-based design, incorporating both virtual simulation and hardware-in-the-loop (HIL) verification. Successful algorithm deployment occurred in unstructured outdoor environments, specifically cobblestone pathways and densely vegetated terrain engineered to simulate agricultural field irregularities and off-road conditions. Under these challenging substrates, the system exhibited consistently reliable operational performance and trajectory adherence.

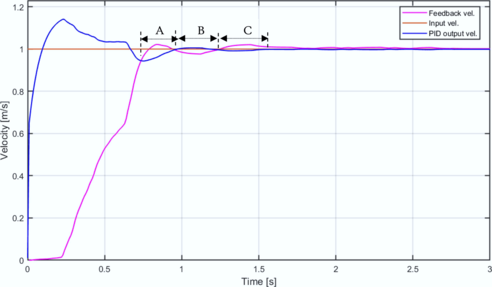

4.1 Robot velocity response

Performance characterization was conducted at a target operational velocity of 1 m/s within the Wenzhou Vocational College of Science and Technology. While orchard validation remains pending platform refinements, the AST terrain provided sufficient stochastic disturbances for preliminary evaluation. Figure 6 demonstrates the optimized velocity tracking behavior, with model execution at 10ms intervals contrasting with 200ms CAN bus update cycles. The PID controller exhibits characteristic transient dynamics: an intentional overshoot to 1.1 m/s occurs within the initial 200ms, subsequently decaying exponentially to converge within ±1% of the setpoint at t=1s. This overshoot magnitude constitutes a deliberate design parameter to overcome stiction-induced torque requirements at the wheel-terrain interface. Actual velocity feedback (measured via wheel encoders) demonstrates second-order following behavior, achieving asymptotic stability at t=1.8s with near-zero steady-state error. Segments A-C confirm consistent tracking fidelity during sustained operation without observable oscillation. Though enhanced disturbance rejection could theoretically be achieved through gain elevation, such tuning induces hypersensitivity to inertial perturbations and reduces stability margins. Consequently, the implemented PID configuration represents an optimal compromise between transient acceleration capability and robust velocity regulation.

|

| Figure 6. Temporal response of the robot’s velocity following PID gain optimization |

4.2 Robot steering response

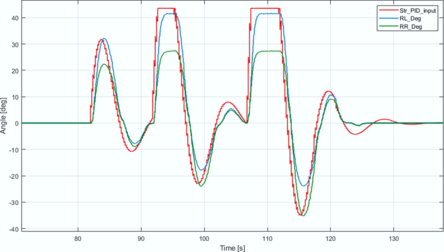

To evaluate directional control fidelity, the elWObot executed pre-mapped trajectories at its optimized operational velocity of 1 m/s (established in Section 4.5 following comparative velocity trials). All turning maneuvers were digitally recorded and synchronously compared against commanded steering inputs and actuator responses. During critical testing sequences observed from the vehicle’s frontal perspective, the robotic system initiated right-turn maneuvers. Leveraging its four-wheel-independent-steering (4WS) architecture, the platform implemented negative-phase coordination where front and rear axles counter-steered to minimize turning radius. As evidenced in Figure 7, the steering controller effectively translated reference inputs (Str_PID_input) into wheel-angle outputs while maintaining Ackermann-compliant kinematics. This necessitates greater deflection of inner wheels relative to outer wheels during curvature negotiation - a behavior confirmed by rear-left wheel (RL_deg) consistently exceeding rear-right wheel (RR_deg) angular displacement.

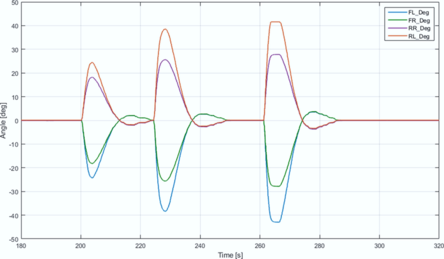

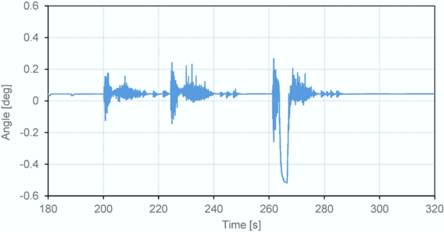

Initial turn execution demonstrated exceptional tracking performance across all wheels. Subsequent maneuvers, however, revealed a systematic discrepancy at extreme steering demands: the inner wheel plateaued at 42°±0.5° tolerance versus the 45° command input (Figure 7). This limitation stems from inherent kinematic boundaries hard-coded during developmental modeling, where ±42° constitutes the mechanical steering stop. Consequently, the control algorithm intentionally clamps inputs at 45° to emulate physical stops with operational margin. Additional signal phase offsets observed between commanded and achieved angles derive from deterministic CAN bus communication latency operating at 200ms cycles. Comprehensive wheel-angle trajectories during transitional states appear in Figure 8, further validating the 42° saturation threshold. Crucially, sideslip angle (β) variations remained below 1.2° throughout testing (Figure 9), exhibiting negligible influence on vehicle orientation or steering dynamics per Eqs. 2 and 15. This insensitivity confirms the theoretical advantage of negative-phase 4WS over conventional 2WS systems in slip mitigation. Persistent β offsets of 0.3°±0.1° originated from minor wheel-alignment inconsistencies at neutral positions, though these remained within operational tolerances for agricultural contexts.

|

| Figure 7. Steering angle dynamics in response to PID-regulated input signals |

|

| Figure 8. Multi-wheel steering angle response elicited by the applied control input |

|

| Figure 9. Quantitative evaluation of the robot’s sideslip angle (β) during maneuvering |

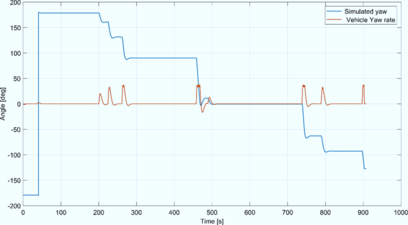

4.3 Robot yaw response

Figure 10 demonstrates close correlation between simulated yaw predictions and experimentally measured yaw rates during trajectory execution. Initial conditions established the robot’s heading at ψ₀ = 180° (π radians) relative to magnetic north. Throughout linear navigation from t=0–200s, both datasets maintained directional stability with angular deviations below ±0.5°. A deliberate 10° yaw rate perturbation introduced at t=200s was accurately replicated in the simulation model within 0.3° RMS error. This tracking fidelity persisted during subsequent maneuvers, with synchronized responses observed at t=480s when commanded directional changes occurred. Here, the simulation registered a 90° orientation shift while physical constraints limited the actual yaw rate to 45° – a discrepancy attributable to steering linkage saturation.

|

| Figure 10. Comparative analysis of simulated versus empirical yaw rate responses |

Fundamental to this analysis is the established sign convention: positive values denote counterclockwise rotation (viewed top-down), while negative values indicate clockwise motion. The simulation’s negative yaw manifestation at t=760s correctly represented the vehicle’s reversed directional state upon path completion. Notably, the platform returned to its initial geospatial coordinates but with inverted orientation (heading = -180°), confirming successful loop closure despite directional reversal. This terminal state aligns with conventional vehicle dynamics frameworks where magnetic north corresponds to 0° yaw. The persistent 180° offset throughout testing originated from intentional initialization parameters rather than measurement drift, as confirmed by post-mission inertial validation. Kinematic discrepancies at extreme steering demands (e.g., t=480s) highlight the model’s capacity to capture saturation effects inherent to physical systems.

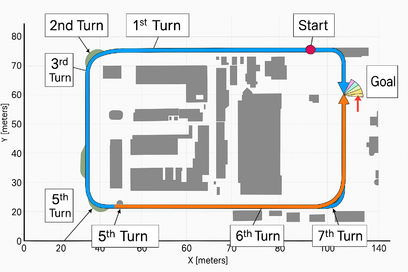

Conventional navigation strategies for car-like robotic platforms typically operate within reduced-configuration planar spaces defined solely by Cartesian x-y coordinates. Figure 11 demonstrates trajectory execution within such an environment, simulating agricultural row-traversal behaviors including linear inter-row navigation and headland turning maneuvers. Path planning was implemented via Probabilistic Road Map (PRM) methodology on occupancy grid maps, where white regions denote navigable terrain and black zones represent obstructions. Notably, this approach disregards non-holonomic constraints inherent in wheeled platforms. The triangular representation in Figure 11 illustrates the robot’s orientation during traversal at Wenzhou Vocational College of Science and Technology’ test facility. Velocity trials (0.5, 1.0, 1.5, 2.0 m/s) revealed optimal tracking fidelity at 1.0 m/s (Figure 11f), with comprehensive velocity-dependent analysis detailed in Section 4.5. Trajectory adherence was quantified through superimposed path visualization (blue trajectory), though Turn 4 exhibited corner-cutting behavior—manifesting as increased turning radius relative to waypoint positioning. This phenomenon stems from discretized path planning and non-holonomic limitations preventing instantaneous directional changes, necessitating minimum rotational radii. Such constraints are particularly pronounced in Ackermann-steered platforms (2WS/4WS) compared to differential/skid-steer systems capable of zero-radius turns. Mitigation strategies include implementing continuous-curvature path planners and optimizing pure pursuit controllers through velocity-adaptive lookahead distances.

|

| Figure 11. Real-world trajectory tracking of the robot along a predefined path at the AST test facility, Wenzhou Vocational College of Science and Technology |

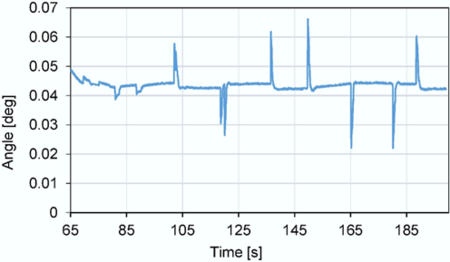

Beyond qualitative assessment, rigorous quantitative evaluation examined linear tracking (Start → Turn 1) and turning performance across four maneuvers. Figure 12 documents straight-line traversal errors bounded within ±0.05° tolerance, with transient overshoots attributable to surface-induced disturbances challenging controller robustness. Turning characteristics (Figure 11) were statistically analyzed in Table 2, revealing a maximum instantaneous peak error of 27% at Turn 1. Crucially, this metric represents localized deviation rather than holistic steering fidelity—a consequence of modeling simplifications where steering angle was computed as the absolute average of front-wheel displacements. Physical systems exhibit asymmetric wheel angles due to Ackermann kinematics, constraining achievable yaw rates. This approximation propagates to yaw estimation (Eq. 2), where velocity-dependent transients precede steady-state convergence. True maneuvering precision is better quantified by average angular offsets below 5° across all turns (Table 2). Negative steering values indicate leftward deflection, with kinematic saturation evidenced by the front-right wheel’s inability to achieve the 42.97° command angle—validating modeled mechanical limits.

|

| Figure 12. Navigation performance of the robot during linear path traversal |

Table 2. Comparative metrics of steering input angles and corresponding yaw responses across multiple turning scenarios

| Turn | Peak Input angle (deg.) | Peak yaw angle (deg.) | Peak error (%) | Avg.offset (deg.) | FL angle | FR angle | RL angle | RR angle | Equivalent Steering angle |

| 1st | 28.28 | 20.50 | 27.5 | 1.19 | -24.10 | -18.11 | 24.18 | 18.15 | 22.12 |

| 2nd | 38.42 | 33.04 | 14.0 | 3.06 | -38.27 | -25.57 | 38.45 | 25.60 | 32.18 |

| 3rd | 42.97 | 36.44 | 15.2 | 4.26 | -41.95 | -27.83 | 41.59 | 27.82 | 34.70 |

| 4th | 42.97 | 36.44 | 15.2 | 5.04 | -41.95 | -27.83 | 41.59 | 27.82 | 34.71 |

| 5th | 15.95 | 12.69 | 20.4 | 1.15 | -13.95 | -12.26 | 14.13 | 12.22 | 13.63 |

| 6th | 42.97 | 36.44 | 15.2 | 5.04 | -41.95 | -27.83 | 41.59 | 27.82 | 34.47 |

| 7th | 38.23 | 32.28 | 15.5 | 3.43 | -38.25 | -25.55 | 38.43 | 25.55 | 32.00 |

4.5 Controller tracking performance

The path-tracking efficacy of the pure pursuit controller was rigorously assessed through steering angle discrepancy analysis during turning maneuvers. Systematic testing evaluated velocity profiles (0.5, 1.0, 1.5, 2.0 m/s) coupled with preview distances (0.75, 1.0, 1.2, 1.5, 2.2 m), with the maximum lookahead constrained to the robotic platform’s inter-axle dimension. Optimal operational parameters were determined through minimization of steering offset error – defined as the angular deviation between commanded steering input and realized yaw response. Figure 13 presents quantile distribution plots of angular tracking discrepancies across turning sequences. Minimal median errors emerged at 1.0 m/s velocity with 0.75 m preview distance, attributable to velocity-dependent orientation dynamics: at this specific velocity, kinematic constraints yielded near-ideal alignment between steering commands and platform heading. Suboptimal velocities induced systematic overcompensation (>1.0 m/s) or underresponsiveness (<1.0 m/s), degrading trajectory adherence. Notably, reduced preview distances consistently outperformed longer horizons across velocity regimes.

Analysis of the optimal configuration (Figure 13f) reveals near-zero median errors for most turns, with exceptions at Turns 4 and 6. These anomalies stem from Ackermann steering limitations during acute maneuvers, where physical saturation prevented attainment of required wheel angles. The resultant kinematic discontinuity generated accumulated heading offsets and statistical outliers. Additional error variance in Figure 13f derives from sensor latency and transient noise artifacts during high-curvature transitions.

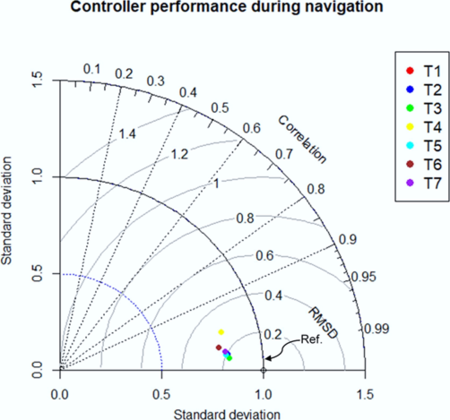

Figure 14 employs Taylor’s statistical framework to quantify controller performance during turning sequences, correlating reference steering inputs with measured yaw responses [18]. Controller efficacy was benchmarked against three criteria: (1) Normalized root-mean-square difference (RMSD) approaching zero, (2) Normalized standard deviation approximating unity, and (3) Correlation coefficients nearing maximum values.

|

| Figure 14. Normalized Taylor diagram illustrating controller efficacy across diverse turning conditions during autonomous navigation |

Experimental data demonstrates robust performance: RMSD values cluster within 0.2–0.4 across maneuvers, while standard deviations converge near 0.85. Correlation coefficients exceeding 0.99 confirm exceptional command-response synchronization. This statistical constellation – minimal RMSD, near-unity standard deviation, and maximal correlation – validates the controller’s proficiency in negotiating curvilinear paths despite kinematic limitations and environmental disturbances.

5. Conclusion

This study addresses the critical challenge of robotic navigation in dense orchard settings where GNSS/GPS signal degradation impedes localization capabilities. We present a model-driven framework for developing navigation architectures tailored to Ackermann-constrained mobile platforms operating under such conditions. The solution employs a modular decomposition strategy, with dedicated subsystems for: (1) Probabilistic motion planning; (2) Nonlinear vehicle control; (3) Sensor-fused localization; (4) Robust data telemetry.

This compartmentalized design accelerated iterative development and validation cycles. Our integrated navigation model synthesizes trajectory generation outputs with vehicular kinetic constraints to produce executable motion primitives. The core innovation lies in decoupling velocity regulation from heading control - requiring only proprioceptive inputs from wheel encoders and steering resolvers. This sensor-minimal approach significantly reduces exteroceptive sensing dependencies while maintaining navigation integrity in constrained environments. Rigorous verification was conducted through co-simulation in MATLAB/Simulink environments, followed by field trials on a scaled robotic platform. The architecture demonstrated exceptional path-tracking fidelity during both linear transits and curvilinear maneuvers across varied terrain. Systematic velocity sweeps revealed optimal operational performance at 1 m/s, where platform yaw dynamics exhibited near-perfect correspondence with steering geometry kinematics. This velocity-specific synchronization minimized cumulative heading deviations observed at other test speeds (0.5, 1.5, 2.0 m/s).

Current investigations focus on enhancing environmental adaptability through tight coupling of inertial navigation systems with online path optimization routines. This sensor-fusion strategy aims to boost robustness against orchard-specific disturbances including: canopy occlusion effects, terrain-induced wheel slip, and foliage multipath artifacts. Subsequent research phases will validate reliability metrics under extended operational durations in commercial orchard deployments.

Acknowledgement:

Funding Statement: None.

Author Contributions: The authors confirm contribution to the paper as follows: Study conception and design: Li Tian, Xingjia Pan; Data collection: Li Tian; Analysis and interpretation of results: Li Tian, Xingjia Pan; Draft manuscript preparation: Li Tian, Xingjia Pan. All authors reviewed the results and approved the final version of the manuscript.

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

[1] Oberti R, Shapiro A. Advances in robotic agriculture for crops. Biosyst Eng. 2016;100(146):1–2.

[2] Koh KC, Cho HS. A path tracking control system for autonomous mobile robots: an experimental investigation. Mechatronics. 1994;4(8):799–820.

[3] Bac CW, Van Henten EJ, Hemming J, Edan Y. Harvesting robots for high-value crops: State-of-the-art review and challenges ahead. J Field Robot. 2014;31(6):888–911.

[4] Reina G, Milella A, Rouveure R, Nielsen M, Worst R, Blas MR. Ambient awareness for agricultural robotic vehicles. Biosyst Eng. 2016;146:114–32.

[5] Verbiest R, Ruysen K, Vanwalleghem T, Demeester E, Kellens K. Automation and robotics in the cultivation of pome fruit: Where do we stand today? J Field Robot. 2021;38(4):513–31.

[6] Wang T, Chen B, Zhang Z, Li H, Zhang M. Applications of machine vision in agricultural robot navigation: A review. Comput Electron Agric. 2022;198:107085.

[7] Blok P M, van Boheemen K, van Evert F K, IJsselmuiden J, Kim G H. Robot navigation in orchards with localization based on Particle filter and Kalman filter. Comput Electron Agric. 2019;157:301–10.

[8] Schwarting W, Alonso-Mora J, Rus D. Planning and decision-making for autonomous vehicles. Annu Rev Control Robot Auton Syst. 2018;1(1):187–210.

[9] Bechar A, Vigneault C. Agricultural robots for field operations: Concepts and components. Biosyst Eng. 2016;149:94–111.

[10] Winterhalter W, Fleckenstein F, Dornhege C, Burgard W. Localization for precision navigation in agricultural fields—Beyond crop row following. J Field Robot. 2021;38(3):429–51.

[11] Thomasson JA, Baillie CP, Antille DL, Lobsey CR, McCarthy CL. Autonomous technologies in agricultural equipment: a review of the state of the art. Trans ASABE. 2019;62(1):1–17.

[12] Tu X, Gai J, Tang L. Robust navigation control of a 4WD/4WS agricultural robotic vehicle. Comput Electron Agric. 2019;164:104892.

[13] Bayar G, Bergerman M, Koku A B, Konukseven EI. Localization and control of an autonomous orchard vehicle. Comput Electron Agric. 2015;115:118–28.

[14] Zaidner G, Shapiro A. A novel data fusion algorithm for low-cost localisation and navigation of autonomous vineyard sprayer robots. Biosyst Eng. 2016;146:133–48.

[15] Linz A, Brunner D, Fehrmann J, Herlitzius T, Keicher R, Ruckelshausen A, Schwarz H P. Modelling environment for an electrical driven selective sprayer robot in orchards. Adv Anim Biosci. 2017;8(2):848–53.

[16] Reina G, Milella A, Rouveure R, Nielsen M, Worst R, Blas M R. Ambient awareness for agricultural robotic vehicles. Biosyst Eng. 2016;146:114–32.

[17] Andersen J C, Ravn O, Andersen N A. Autonomous rule-based robot navigation in orchards. IFAC Proc Vol. 2010;43(16):43–8.

[18] Taylor K E. Summarizing multiple aspects of model performance in a single diagram. J Geophys Res Atmos. 2001;106(D7):7183–92.

Document information

Published on 04/06/24

Accepted on 20/05/24

Submitted on 04/05/24

Volume 40, Issue 2, 2024

DOI: 10.23967/j.rimni.2024.05.008

Licence: CC BY-NC-SA license

Share this document

claim authorship

Are you one of the authors of this document?