Acknowledgments

The research leading to these results has received funding from, on the one hand, the European Research Council under the European Union's Seventh Framework Programme (FP/2007-2013) / ERC Grant Agreement n. 320815, Advanced Grant Project COMP-DES-MAT, and, on the other hand, the Spanish Ministry of Science and Innovation under grant BIA2011-24258.

Abstract

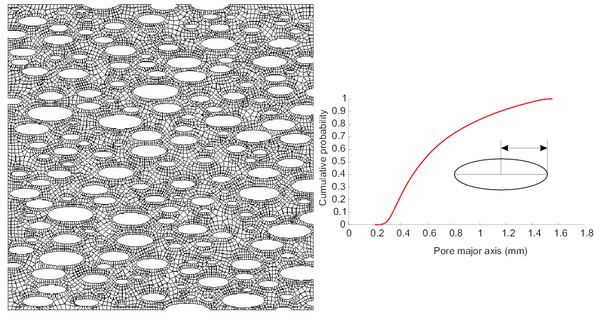

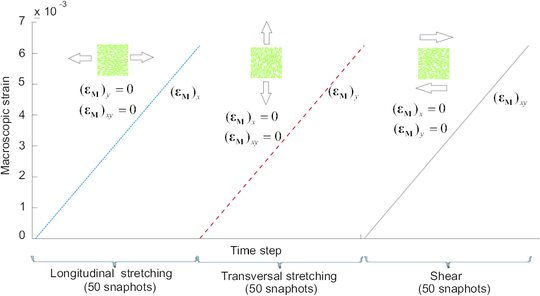

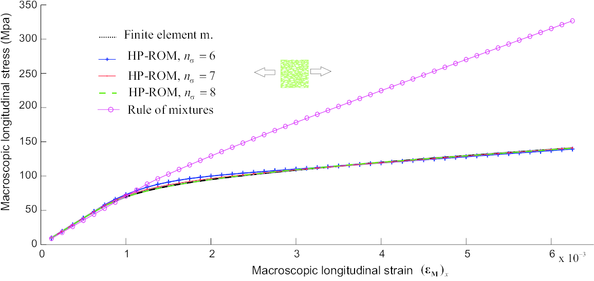

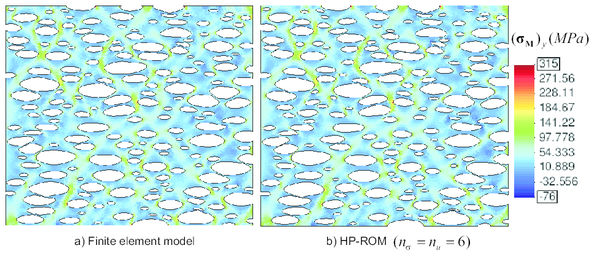

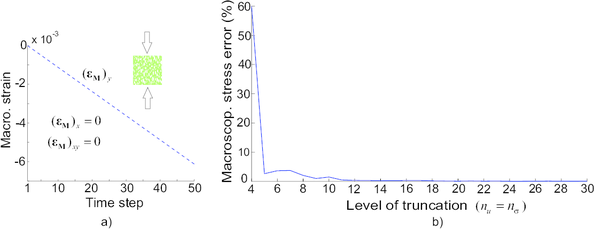

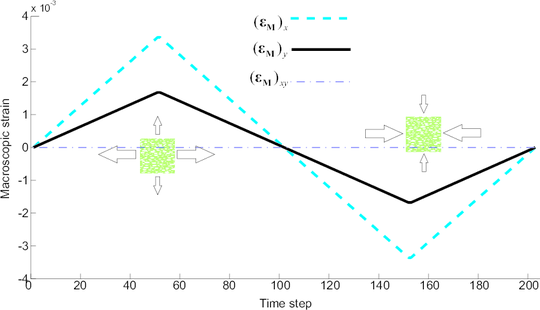

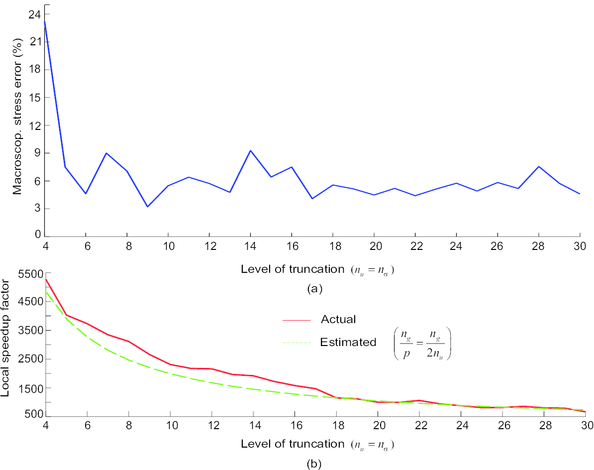

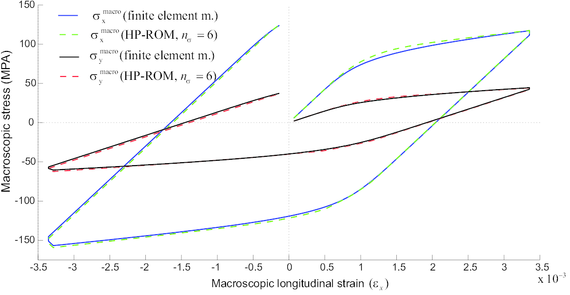

The present work is concerned with the application of projection-based, model reduction techniques to the efficient solution of the cell equilibrium equation appearing in (otherwise prohibitively costly) two-scale, computational homogenization problems. The main original elements of the proposed Reduced-Order Model (ROM) are fundamentally three. Firstly, the reduced set of empirical, globally-supported shape functions are constructed from pre-computed Finite Element (FE) snapshots by applying, rather than the standard Proper Orthogonal Decomposition (POD), a partitioned version of the POD that accounts for the elastic/inelastic character of the solution. Secondly, we show that, for purposes of fast evaluation of the nonaffine term (in this case, the stresses), the widely adopted approach of replacing such a term by a low-dimensional interpolant constructed from POD modes, obtained, in turn, from FE snapshots, leads invariably to ill-posed formulations. To safely avoid this ill-posedness, we propose a method that consists in expanding the approximation space for the interpolant so that it embraces also the gradient of the global shape functions. A direct consequence of such an expansion is that the spectral properties of the Jacobian matrix of the governing equation becomes affected by the number and particular placement of sampling points used in the interpolation. The third innovative ingredient of the present work is a points selection algorithm that does acknowledge this peculiarity and chooses the sampling points guided, not only by accuracy requirements, but also by stability considerations. The efficiency of the proposed approach is critically assessed in the solution of the cell problem corresponding to a highly complex porous metal material under plane strain conditions. Results obtained convincingly show that the computational complexity of the proposed ROM is virtually independent of the size and geometrical complexity of the considered representative volume, and this affords gains in performance with respect to finite element analyses of above three orders of magnitude without significantly sacrificing accuracy –-hence the appellation High-Performance ROM.

1 Introduction

1.1 Motivation

1.1.1 Moore's and Parkinson's laws in computational modeling

A basic precept in constructing a successful computational model –-one that strikes the right balance between accuracy and simplicity–- is, quoting M.Ashby [1], ``to unashamedly distort the inessentials in order to capture the features that really matter. Yet with the general availability of fast computers with large memories, and the exponential growth presaged by Moore's law of such capacities, it is becoming increasingly difficult to resist the temptation to violate this basic precept and, in the quest for higher accuracy, indiscriminately include features that do not contribute significantly to the overall response of the modeled system. As pointed out by Venkataraman et al.[2], this had led to the paradox that certain engineering problems that were computationally tackled for the first time 40 years ago appear to remain comparatively costly, even though the capacity of computers have increased one trillion-fold during this period. This apparent paradox is of course not exclusive of computational physical modeling, but rather just another manifestation of the general adage “software applications will invariably grow to fill up increased computer memory, processing capabilities and storage space”, known as the computerized Parkinson's law [3].

1.1.2 The two-scale homogenization problem

An engineering research area that seems to be falling into this paradigmatic trend is, as we intend to argue in the ensuing discussion, the two-scale modeling, via homogenization, of materials such as composites and polycrystalline metals, characterized by exhibiting a clear heterogeneous composition at some lower length scale –-the micro-, or meso-, scale–-, but that can be regarded as homogeneous at the length scale at which engineering predictions are needed –-the macro-scale. The actual challenge in the macro-scale continuum description of the mechanical behavior of such materials lies in the determination of a constitutive connection between macro-stresses and macro-strains that accurately reflects the material properties and geometrical arrangement of the distinct phases at the micro-/meso-scale. Under the hypotheses of either periodicity or statistical homogeneity, on the one hand; and scale separation, on the other hand, it is well-known [4] that this constitutive link can be systematically established by solving, for each point at the coarse scale, a boundary value problem (BVP) on a certain representative microscopic subdomain (the cell equilibrium problem). In a strain-driven formulation of this BVP, the macro-strain at a given point acts as “loading parameter”, in the form of appropriate essential boundary conditions, whereas the associated macro-stress is obtained through volume averaging –-i.e., homogenization–- of the corresponding micro-stress field.

1.1.3 Evolution of homogenization approaches

When the discipline of continuum micromechanics began to flourish in the 60's and 70's of the last century, research focus was fundamentally directed, absent powerful computational tools (if any), towards the development of approximated, closed-form solutions of this BVP for certain types of geometrically and constitutively simple micro-structures. To arrive at these solutions, pioneers such as R. Hill [5], Z. Hashin [6], and K. Tanaka [7] struggled to identify and retain, guided sometimes by an uncanny physical intuition, only those features of the microstructural response that has a significant impact on the accuracy of coarse-scale predictions –-filtering out, thus, the “inessentials”. The advent of increasingly fast computers, and the concomitant advancement of numerical methods to solve differential equations, progressively fostered a shift towards the development of homogenization techniques that rely less on analytical results and more on numerical solutions, widening thereby their scope; for comprehensive surveys of these semi-analytical homogenization methods, the reader is referred to Refs. [8,9,10]. The approach termed by some authors [11,12] computational homogenization (or direct computational homogenization ) can be regarded as the culmination of this shift towards purely numerical methods: in this approach, the microscopic boundary value problem at each coarse-scale point is attacked using no other approximation than the spatial discretization of the pertinent solution strategy (finite element method, for instance), thus, circumventing the need for simplifying assumptions regarding the topological arrangement of the micro-phases and/or their collective constitutive behavior.

1.1.4 Infeasibility of direct computational homogenization methods

Although no doubt the most versatile and accurate homogenization technique, with no other limitation in scope than the imposed by the aforementioned hypotheses of statistical homogeneity and scale separation, the direct computational homogenization approach violates squarely the modeling precept outlined at the outset –-it does not discriminate between essential and irrelevant features in solving the fine-scale BPVs–-, making the accuracy/parsimony balance to tilt unduly towards the accuracy side and far from the parsimony one. The consequence is its enormous computational cost (in comparison with analytical and semi-analytical homogenization techniques). For instance, when the Finite Element (FE) method is the strategy of choice for both scales –-the commonly known as multilevel finite element method, abbreviated FE method [13]–-, one has to solve, at every increment and every Newton-Raphson iteration of the global, coarse-scale FE analysis, and for each Gauss point of the coarse-scale mesh, a local, non-linear finite element problem which, in turn, may involve several thousand of degrees of freedom. To put it metaphorically, in the FE method, the complexity of the overall analysis is submitted to the “tyranny of scales” [14,15,16]–- it depends on the resolution of both coarse- and fine- scale grids. This explains why, presently, almost five decades after R.Hill [17] laid the foundations of non-linear continuum micro-mechanics, and despite the dizzying speed of today's computers, the routine application of this general and versatile homogenization method to model the inelastic behavior of large structural systems featuring complex micro-/meso-structures is still considered intolerably costly, especially when the system has to be analyzed for various configurations, as in design optimization or inverse analysis.

1.1.5 Shortcomings of semi-analytical approaches

The foregoing review and critical appraisal of how things have evolved in the field of continuum micromechanics clearly teaches us that, to defeat the tyranny of scales, and develop a successful homogenization method, one should strive to adhere to Ashby's modeling principle and introduce –-as actually done in analytical and semi-analytical approaches–- appropriate simplifications in dealing with the fine-scale BVPs. Negating the need for such simplifications in the hope that the upcoming generations of peta-, exa- (and so forth) flops computers will eventually come to the rescue and cope with the resulting complexity, is, in the authors' opinion, a mistaken view, at odds with the true spirit of physical modeling, and the culprit for the paradoxical trend alluded to earlier.

Yet finding, for a given, arbitrarily complex microstructure, a suitable set of simplifying assumptions, let alone incorporating in a consistent manner such assumptions in the formulation of the local BVPs, is in general a formidably challenging endeavor. The admittedly brilliant idea underlying many advanced semi-analytical homogenization methods, such as the Transformation Field Analysis (TFA) [18] and variants thereof [19,20,21,22], of pre-computing certain characteristic operators (strain localization and influence tensors) by solving a carefully chosen battery of fine-scale BPVs has only partially relieved modelers from this burden: these methods are still predicated, to a lesser or greater extent, on ad-hoc assumptions connected with the constitutive description of the involved phases. Consideration of new materials with unstudied compositions will thereby require additional research efforts by specialists in the field and eventual modifications of the corresponding mathematical and numerical formulations –-in contrast to direct computational homogenization approaches, such as the FE method, in which the formulation is “material-independent”, and hence more versatile.

1.2 Goal

The current state of affairs in the field of two-scale homogenization seems to call, thus, for a unified homogenization approach that combines somewhat the advantages of direct computational homogenization and semi-analytical techniques. It would be desirable to have a homogenization method with a computational cost virtually independent of the geometric complexity of the considered representative volume, as in semi-analytical techniques –-i.e., one that defies the tyranny of scales. At the same time, it would be also interesting to arrive at a method whose mathematical formulation dispenses with ad-hoc, simplifying assumptions related with the composition of the heterogeneous material; i.e, one enjoying the versatility, unrestricted applicability and “user-friendliness” –-insofar as it would totally relieve the modeler from the often exceedingly difficult task of visualizing such assumptions –- of direct computational homogenization methods. The goal of the present work is to show that these desirable, apparently conflicting attributes can be conceivably achieved, for arbitrarily complex heterogeneous materials well into the inelastic range, by using the so-called [23] Reduced-Basis (RB) approximation in the solution of the cell BVPs.

1.3 Reduced-basis approach

1.3.1 Essence of the approach

Generally speaking, the reduced-basis approximation is simply a class of Galerkin approximation procedure that employs, as opposed to the FE method, but similarly to classical Rayleigh-Ritz solution techniques [24], globally supported basis functions. The main difference with respect to classical Rayleigh-Ritz schemes is that these basis functions or modes are not constructed from globally supported polynomials or transcendental functions (sines, cosines ...), but rather are determined from a larger set of previously computed, using the finite element (FE) method or other classical solution techniques, solutions of the BVP at appropriately selected values of the input of interest. These functions are commonly termed empirical basis functions [25], the qualifier empirical meaning “derived from computational experiments”.

As noted earlier, the input of interest or “loading” parameter in the fine-scale cell problem is the macro-scale strain tensor. Accordingly, the starting point for constructing the basis functions in the case under study would consist in solving a battery of cell BVPs for various, judiciously chosen macro-strain values. In the linear elastic regime, for instance, the displacement solution depends linearly on the prescribed macro-strain tensor, and hence it would suffice to perform 6 linear FE analysis (stretch in three directions and three shears); the corresponding modes would simply arise from orthonormalizing the displacement solutions of these problems. By constraining the cell to deform only into these 6 pre-defined, shape functions, one automatically obtains a genuine reduced-order model (ROM) of the cell. Note that the dimension of this ROM is totally independent of the spatial discretization (and, therefore, of the geometric complexity of the cell) used in the preliminary or offline FE analyses.

1.3.2 Dimensionality reduction

Things get a little bit more complicated in the inelastic range. The solution of the problem not only ceases to bear a linear relation to the prescribed macro-strain tensor: it also depends in general on the entire history of this coarse-scale kinematic variable. As a consequence, instead of single linear FE analyses, it becomes necessary to perform nonlinear FE studies on the cell subjected to various, representative macro-strain histories. The outcome of these FE calculations is a data set comprising an ensemble of hundred or even thousand (depending on the number of time steps into which the strain histories are discretized) displacement field solutions (also called snapshots). Were all these snapshots barely correlated with each other, the dimension of the manifold spanned by them would prove overly high, rendering the entire approach impractical –-it would no longer qualify as a truly reduced basis method. Fortunately, as we show in the present work, in general, most of these snapshots do display strong linear correlations between each other –-i.e., they have redundant information–-, and, in addition, contain deformation modes that are irrelevant to the quality of coarse-scale predictions –-in the sense that their associated stress fields have vanishing or negligible average volumes. Accordingly, all that is required to obtain a much lower dimensional representation of the solution data set, and therewith the desired reduced basis, is an automatic means to identify and remove this redundant and irrelevant information, while preserving, as much as possible, its essential features. This problem of removing unnecessary complexity from huge data sets so as to uncover dominant patterns is the central concern of disciplines such as digital image and video compression [26], and patter recognition [27], to name but a few, and thereby many efficient dimensionality reduction (or data compression, in more common parlance) algorithms already exist to deal with it. In the present work, we employ the arguably simplest and most popular of such algorithms: the Proper Orthogonal Decomposition (POD).

It becomes clear from the above discussion that, in the reduced basis approach, the inescapable task of discriminating what is essential and what is not is automatically carried out by these dimensionality reduction methods. In other words, the “burden” of simplification of the cell BVP in the RB approach is entirely borne by the computer, and not by the modeler, as it occurs in analytical and, to a lesser extent, in semi-analytical homogenization methods. It is precisely this feature that confers the advantages of versatility and “user-friendliness” alluded to earlier.

1.3.3 Numerical integration

Once the global shape functions have been computed, the next step in the construction of the reduced-order model of the cell is to introduce an efficient method for numerically evaluating the integrals appearing in the weak form of the cell BVP. Of course one can simply use the same Gauss quadrature formulae and the same sampling points (a total number of , being the number of mesh nodes) as the underlying finite element model. But this would be akin to integrating, say, a third-order polynomial function using thousand of sampling points–-a profligate waste of computational resources. Since displacement solutions for the cell BVP are constrained to lie in a reduced-order space of dimension , it is reasonable to expect that the corresponding stresses, internal forces and Jacobians will also reside in reduced-order spaces of dimensions of order , and consequently, only sampling points would suffice in principle to accurately evaluate the corresponding integrals. The challenging questions that have to be confronted are where to locate these sampling points and, loosely speaking, how to determine their associated weighting functions so that maximum accuracy in the integration is attained.

Approaches found in the model reduction literature that, directly or indirectly, deal with these fundamental questions can be broadly classified either as interpolatory approaches [28,29,30,31,32] or Gauss-type quadrature approaches [33,34], the former having a wider scope, for they also serve to reduce models derived from finite difference approximations [32,31]. The starting point for both is to collect, during the alluded to earlier finite element calculations, snapshots of the integrand or part of the integrand. In interpolatory approaches, the resulting ensemble of snapshots is submitted to a dimensionality reduction process, in the manner described previously for the solution basis functions, in order to compute a reduced set of dominant, orthogonal modes. Such orthogonal modes are employed to construct an interpolant of the integrand, using as interpolation points (the desired sampling points) those minimizing the interpolation error over the finite element snapshots. In the spirit of classical, interpolatory quadrature schemes such as Newton-Cotes [35], the resulting quadrature formula, and therefore, the weighting functions, emerges from approximating the integrand by this reduced-order interpolant. In Gauss-type quadrature procedures [33,34], by contrast, the selection of sampling points and the calculation of the accompanying weighting factors are simultaneously carried out, guided by a criterion of minimum integration error over the snapshots–-in a vein, in turn, similar to that used in classical Gauss-Legendre quadrature rules [35]. A more comprehensive review on both type of integration methods can be found in Ref. [36].

In the BVP under consideration, the output of interest is the volume average of the stresses over the cell domain and, therefore, accuracy is required not only in the integration of the equilibrium equation, but also on the approximation of the stresses themselves. This is the reason why, notwithstanding the presumably higher efficiency of Gauss-type quadrature (less integration error for the same number of sample points), attention is focused in the present work on interpolatory integration strategies, the variable subject to spatial interpolation being precisely the stresses.

1.4 Originality of this work

The idea of exploiting the synergistic combination of multiscale modeling and reduced basis approximation is admittedly not new. In the specific context of two-scale homogenization, it has been recently explored by Boyaval [37], Yvonnet et al. [38], and Monteiro et al. [39]. Traces of this idea can also be found in articles dealing with more general hierarchical multiscale techniques –-that do not presuppose either scale separation or periodicity/statistical homogeneity, or both–-, namely, in the multiscale finite element method [40,41,42] and in the heterogeneous multiscale method [43,44]. However, it should be noted that none of the above cited papers confronts the previously described, crucial question of how to efficiently integrate the resulting reduced-order equations, simply because, in most of them [37,40,41,42,43,44], integration is not an issue –- the fine-scale BVPs addressed in these works bear an affine relation with the corresponding coarse-scale, input parameter, as in linear elasticity, and, consequently, all integrals can be pre-computed, i.e., evaluated offline, with no impact in the online computational cost. Thus, the development of cell reduced-order models endowed with efficient, mesh-size independent integration schemes –-able to handle any material composition–- is a research area that, to the best of the authors' knowledge, still remains uncharted.

1.4.1 Main original contribution

The theory underlying ROMs that incorporate efficient interpolatory integration schemes, henceforth termed High-Performance ROMs (HP-ROMs), is still at its embryonic stage of development –-the first general proposal for parametrized BVPs dates back to 2004 [28]–- and many fundamental issues remain to be addressed. Foremost among these is the crucial question of well-posedness of the resulting system of algebraic equations: does the replacement of the integrand, or nonaffine term in the integrand, by a reduced-order interpolant always lead to a well-posed, discrete problem ? Examination of the reduced basis literature indicates that apparently no researcher has so far been confronted with ill-posed reduced-order equations, a fact that might certainly promote the view that uniqueness of solution can be taken for granted whenever the full-order model is well-posed. Unfortunately, this is not always so: we demonstrate in this work that the choice of the reduced-order space in which the interpolant of the integrand resides has a profound impact on the well-posedness of the discrete problem. In particular, we show that, in the case of the cell boundary-value problem, the widely adopted [29] approach of determining the basis functions for this space from (converged) FE snapshots leads invariably to ill-posed, discrete formulations. The main original contribution of the present work to the field of reduced-order modeling is the development of an interpolatory integration method that safely overcomes this type of ill-posedness. The gist of the method is to expand the interpolation space so that it embraces, aside from the span of the POD stress basis functions, the space generated –-and herein lies the novelty–- by the gradient of the (reduced-order) shape functions.

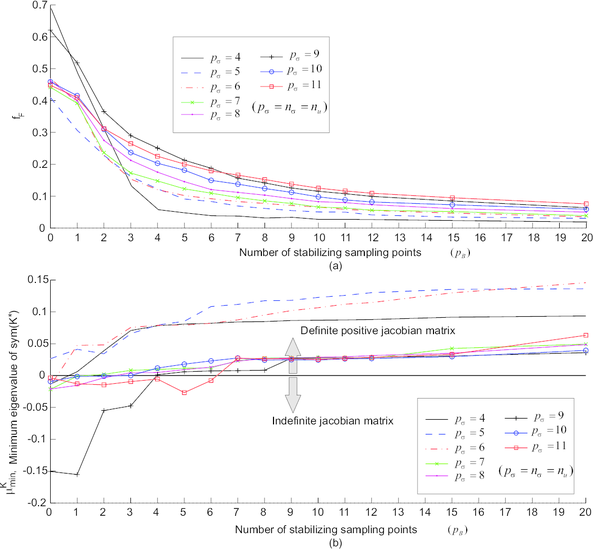

1.4.2 Other original contributions

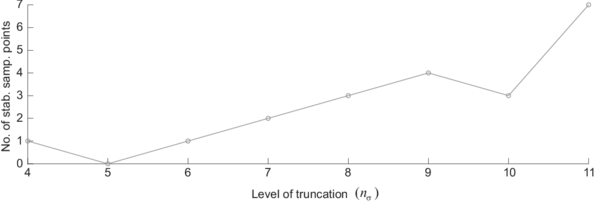

An inevitable consequence of adopting the aforementioned expanded space approach is that, in contrast to the situation encountered when using standard interpolatory schemes in other parametrized BVPs [29], in the proposed method, the number and particular placement of sampling points within the integration domain influence notably the spectral properties (positive definiteness) of the Jacobian matrix of the governing equation, and therefore, the convergence characteristics of the accompanying Newton-Raphson solution algorithm. Another innovative ingredient of the present work is a points selection algorithm that does acknowledge this peculiarity and chooses the desired sampling points guided, not only by accuracy requirements (minimization of the interpolation error over the FE stress snapshot), but also by stability considerations.

Lastly, a further original contribution of the present work is the strategy for computing the global shape functions and stress basis functions. Instead of directly applying the POD over the snapshots to obtain the dominant modes, we first decompose these snapshots into (mutually orthogonal) elastic and inelastic components, and then apply separately the standard POD to the resulting elastic and inelastic snapshots. In so doing, the resulting reduced-order model is guaranteed to deliver linear elastic solutions with the same accuracy as the underlying (full-order) finite element model.

1.5 Organization of the document

The remaining of this document is organized as follows. Chapter 2is devoted to the formulation and finite element implementation of the cell equilibrium problem. Chapter 3, on the other hand, is concerned with the issue of the offline computation of the POD reduced basis. In Section 3.2, the Galerkin projection of the cell equilibrium equation onto the space spanned by the POD basis is presented. Chapter 4 outlines the procedure for efficiently integrating the reduced-order equilibrium equation. In Section 4.3, we discuss the crucial issue of where the low-dimensional approximation of the stress field should lie in order to obtain an accurate and at the same time well-posed reduced-order problem; in Section 4.3.3, the original proposal of expanding the stress basis with the gradient of the (reduced-order) shape functions is put forward. Section 4.4 delineates the derivation of the modal coefficients in the approximation of the stress field in terms of the stress values computed at the set of pre-specified sampling points. The determination of the optimal location of these sampling points is addressed in Section 4.5. For the reader's convenience and easy reference, in Section 4.6, both the offline and online steps leading to the proposed reduced-order model are conveniently summarized. Chapter 5 is dedicated to numerically assess the efficiency of the proposed model reduction strategy. Finally, in Chapter 6, some concluding remarks are presented.

In order to preserve the continuity of the presentation, details concerning the algorithmic implementation of the computation of the reduced basis and the selection of sampling points are relegated to the appendices.

2 First-order homogenization

2.1 Basic assumptions

The fundamental assumptions upon which the homogenization approach followed in this work rests are presented below. For a more in-depth description of the underlying axiomatic framework, the reader is referred to Refs. [45,46,47,48].

2.1.1 Existence of a representative subvolume

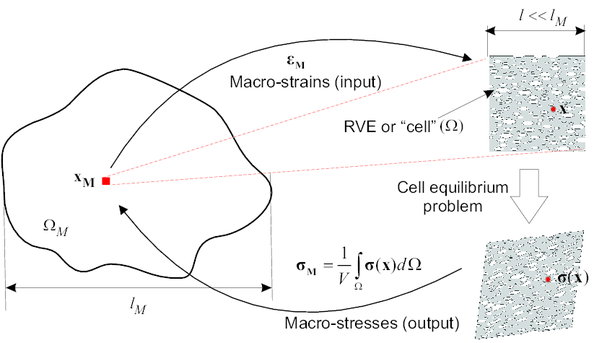

The homogenization approach employed in this work –-commonly known as first-order homogenization–- is only valid for materials that either display statistical homogeneity or spatial periodicity [45]. In both type of materials, it is possible to identify a subvolume , of characteristic length , that is representative, in a sense that will be properly defined later, of the heterogeneous material as a whole. Furthermore, this subvolume has to be small enough that it can approximately be regarded as a point at the coarse-scale level [4] (i.e, , being the characteristic length of the macro-continuum , see Figure 1). This is the so-called scale separation hypothesis. In micro-structures that exhibit statistical homogeneity, this domain receives the name of Representative Volume Element (RVE), whereas in micro-structures that display periodicity, it is commonly known as repeating unit cell (RUC), or simply unit cell [45]. In the sequel, both the acronym RVE and the more generic term “cell” will be used interchangeably to refer to .

|

| Figure 1: First-order homogenization. |

2.1.2 Decomposition into macroscopic and microscopic contributions

The displacement at any point is assumed to be decomposed into macroscopic and microscopic parts; under the hypothesis of infinitesimal deformations, this decomposition can be written as:

|

|

(2.1) |

where stands1 for the macroscopic strain tensor (the input parameter in the BVP we wish to efficiently solve) and denotes the so-called displacement fluctuation field (in turn, the basic unknown of this BVP). The macroscopic term represents the displacements that would have been observed had the material been homogeneous, whereas the fluctuating contribution accounts for the deviations from this homogeneous state due to the presence of heterogeneities [49].

The decomposition of the microscopic strain tensor follows from simply differentiating Eq.(2.1):

|

|

(2.2) |

Notice that, in writing Eq.(2.2), one is tacitly assuming that the macroscopic strain tensor is uniform over the spatial length associated to the cell size . This proviso renders the employed homogenization approach –-commonly termed first-order homogenization [12,48]–- not suitable for appropriately handling localization problems. For this reason, in the present work, we shall presuppose that localization of strains does not take place within the deforming cell.

Implicit in the scale separation assumption is the fact that fine-scale deformations only influence coarse-scale behavior through its volume average over the RVE; this implies that

|

|

(2.3) |

where stands for the volume of the RVE:

|

|

(2.4) |

By virtue of Eq.(2.2), this condition is equivalent to impose the volume average of the fluctuation contribution to vanish:

|

|

(2.5) |

(1) Some remarks concerning notation are in order here. Firstly, macroscopic variables will be identified by appending a subscript “M”, while variables associated to the fine scale will be designated by bare symbols. For instance, we shall write and to denote the macroscopic strain tensor and the fine-scale strain field, respectively. Secondly, readers accustomed to classical continuum mechanics notation are reminded that, in this work, the symbol does not represent the displacement field, but rather the fluctuating part –-due to the presence of heterogeneities in the concerned RVE–- of such a field.

2.1.3 Hill-Mandel principle of macro-homogeneity

The scale bridging is completed by the Hill-Mandel principle of macro-homogeneity, that states that the stress power at any point of the macro-continuum must equal the volume average (over the RVE) of the corresponding fine-scale, stress power field (making, thus, both coarse- and fine-scale, continuum description energetically equivalent [8]). The variational statement of this principle is as follows [47]: let be a statically admissible, fine-scale stress field, and the associated macroscopic stress tensor, then, the identity

|

|

(2.6) |

must hold for any kinematically admissible strain rate . Inserting the rate form of Eq.(2.2) into the above equation, and by virtue of Eq.(2.3), it follows that, for identity (2.6) to hold, the macroscopic stress tensor must be the volume average of the microscopic stress field :

|

|

(2.7) |

Another necessary condition for Eq.(2.6) to hold is that:

|

|

(2.8) |

for any kinematically admissible displacement fluctuation field . It is shown in Ref. [47] that this condition amounts to requiring that the external surface traction and body force field in the RVE be purely reactive –-i.e., a reaction to the kinematic constraints imposed upon the RVE. This is why the upcoming cell equilibrium equation does not contain neither external boundary traction nor body force terms.

2.2 RVE equilibrium problem

2.2.1 Boundary conditions

To address the issue of boundary conditions (BCs), it proves first convenient to, using Gauss' theorem, recast equation (2.5) in terms of boundary displacement fluctuations:

|

|

(2.9) |

where represents the boundary of , is the outer unit normal vector to and the symbol denotes the symmetrized tensor product. Any type of boundary conditions prescribed on the cell must obey this condition.

The natural choice for a repeating unit cell (RUC) –-defined as the smallest volume that allows one to generate, by periodic repetition, the entire microstructure of a periodic media [49]–- is to employ periodic boundary conditions for the displacement fluctuations. By definition, the boundary of an RUC comprises pairs of identical –-appropriately shifted in space–- surfaces and (), with the property that for every , , being, loosely speaking, the “counterpart” of in . Periodicity of displacement fluctuations implies that for every , . (See Refs. [8,49] for more details on this type of BCs).

In statistically homogeneous micro-structures, by contrast, the situation is not so clear-cut. The concept of RVE admits various, alternative interpretations and, as a consequence, there is a certain latitude in the choice of boundary conditions (vanishing fluctuations, uniform tractions, quasi-periodic conditions …); the reader interested in this topic is urged to consult Refs. [45,50,51]. Arguably, the most practical choice –-from an implementational point of view–- in a strain-driven finite element context (and the one adopted here) is to use vanishing boundary conditions for the displacement fluctuations (), and correspondingly determine the RVE as the smallest subvolume of the statistically homogeneous microstructure whose mechanical response is, under this type of boundary conditions, indistinguishable from that of the material-at-large.

2.2.2 Trial and test function spaces

It is not hard to see that both periodic and vanishing BCs are just particular instances of the more general case of homogeneous boundary conditions [52], i.e, conditions of the form:

|

|

(2.10) |

being the corresponding linear operator. An immediate corollary of this observation is that the set of all kinematically admissible displacement fluctuation fields, henceforth denoted by , will form for both type of boundary conditions a vector space; this space is defined formally as:

|

|

(2.11) |

Here, stands for the Sobolev space of functions possessing square integrable derivatives over . Since the test functions are kinematically admissible variations, we can write

|

|

(2.12) |

from which it follows that , i.e., the spaces of trial and test functions coincide.

Observation 1: As will be further explained later, having structure of linear space confers a unique advantage –-not enjoyed by ROMs of BVPs with general inhomogeneous boundary conditions [25]–- for model reduction purposes: reduced-order responses will invariably and automatically conform to the imposed boundary conditions, regardless of the level of approximation.

2.2.3 Variational statement

Consider a time discretization of the interval of interest . The current value of the microscopic stress tensor at each is presumed to be entirely determined by, on the one hand, the current value of the microscopic strain tensor , and, on the other hand, a set of microscopic internal variables –-that encapsulate the history of microscopic deformations. The relationship between these variables is established by (phenomenological) rate constitutive equations; these equations may vary from point to point within the cell (multiphase materials). Likewise, the considered RVE may contain also voids distributed all over the domain. The (incremental) RVE equilibrium problem at time can be stated as follows (see Ref. [47]): given the initial data and the prescribed macroscopic strain tensor , find such that

|

|

(2.13) |

for all . The actual output of interest in this fine-scale BVP is not the displacement fluctuation field per se, but rather the macroscopic stress tensor :

|

|

(2.14) |

In order to keep the notation uncluttered, the superindex “n+1” will be hereafter dropped out and all quantities will be assumed to be evaluated at time ; only when confusion is apt to show up, the pertinent distinction will be introduced.

2.3 Finite element formulation

Let be a finite element discretization of the cell. It will be assumed that this discretization is fine enough to consider the exact and FE approximated solutions indistinguishable at the accuracy level of interest. Let ( denotes the number of nodes of the discretization) be a set of shape functions associated to this discretization such that

|

|

(2.15) |

In the case of vanishing displacement fluctuations, this can be achieved by simply ensuring that:

|

|

(2.16) |

As for periodic boundary conditions, let us suppose, for simplicity, that the spatial grid is such that every node () has a “counterpart” node (and vice versa), and that, in addition, no nodes are placed at the intersection of adjoining surfaces. Such being the case, it can be readily shown that the conditions that the shape functions of a given node and its counterpart have to satisfy for proviso (2.15) to hold are:

|

|

(2.17) |

|

|

(2.18) |

The reader is referred to Refs. [49,53] for guidelines on how to enforce periodicity conditions in a more general scenario.

Now we approximate and as:

|

|

(2.19) |

|

|

(2.20) |

where and () denote the nodal values of the displacement fluctuations and test functions, respectively. Inserting these approximations in Eq.(2.13), and exploiting the arbitrariness of coefficients (), one arrives at the following set of discrete equilibrium equations (repeated indices implies summation):

|

|

(2.21) |

Introducing Voigt's notation1, the above equation can be expressed in matrix format as:

|

|

(2.22) |

where represents, with a slight abuse of notation2, the column matrix form of the stress tensor ( and for plane and 3D problems, respectively), and is the classical, global ``-matrix" connecting strains at a given point with the vector containing all nodal displacements:

|

|

(2.23) |

As usual, numerical evaluation of the integral in Eq.(2.22) is carried out by Gaussian quadrature:

|

|

(2.24) |

Here, stands for the total number of Gauss points of the mesh; denotes the weight associated to the Gauss point (this weight includes both the quadrature weight itself and the corresponding Jacobian determinant.); and and stand for the B-matrix and the stress vector at Gauss point , respectively.

(1) Here, it is convenient to use the so-called modified Voigt's notation rather than the standard one. In the modified Voigt's notation, both stress and strain tensors are represented as column vectors ( and , respectively ) in which the shear components are multiplied by . The advantage of this notation over the conventional, engineering Voigt's notation is the equivalence between norms; viz., . The reader is urged to consult [54] for further details on this notation.

(2) The same symbol is used to denote the stress tensor and its counterpart in Voigt's notation.

3 Reduced-order model of the RVE

3.1 Computation of reduced basis

A basic, intuitive picture of the strategy for computing the reduced basis onto which to project the cell equilibrium equation (2.13) was already given in the introductory Chapter. In the following, we put the idea behind this strategy on a more rigorous footing. We begin by noting that, from a functional analysis standpoint, the term model reduction is conceptually akin to the more common term model discretization, since both connote transitions from higher-dimensional to lower-dimensional solution spaces. Indeed, whereas model discretization is used to refer to the (classical) passage from the infinite dimensional space to the finite element subspace , model reduction denotes a transition from this finite dimensional space to a significantly smaller manifold –-the reduced-order space. This latter transition is not carried out directly, but in two sequential steps, namely, sampling of the parameter space and dimensionality reduction. The precise meaning of these terms is explained below.

3.1.1 Sampling of the input parameter space

In constructing the finite element space of kinematically admissible functions , the only restrictions placed on the motion of the mesh nodes are those imposed at the boundaries through conditions (2.16) (for RVEs) and (2.17), (2.18) (for RUCs). The finite element solution space, thus, does not presuppose any constraint on the motion of the interior nodes of the mesh.

However, in actuality, interior nodes cannot fluctuate freely, independently from each other, but they rather move according to deformational patterns dictated by the constitutive laws that govern the mechanical behavior of the distinct phases in the cell –-as noted by Lubliner [55], constitutive laws can be regarded as internal restrictions on the kinds of deformation a body can suffer. This means that the solution of the finite element equilibrium equation (2.13) for given values of the macro-strain tensor actually lives in a smaller subspace (in the parlance of model reduction [23,56], is the manifold induced by the parametric dependence of the BVP on the input variables).

Yet, in general, this manifold cannot be precisely determined –-such a task would require finite element analyses of the cell under all conceivable strain paths. Rather, one has to be content to construct an approximation of it as the span of the displacement fluctuation solutions obtained for a judiciously chosen set of input strain histories . Suppose, for simplicity, that each of these strain histories is discretized into equal number of steps , and let

|

|

(3.1) |

denote the displacement fluctuation solution at the time step of the strain history (, ). The approximating space for , henceforth called the snapshots space, is then defined as:

|

|

(3.2) |

being the total number of snapshots. Likewise, the matrix containing, in columns, the nodal values of these displacement fluctuations solutions:

|

|

(3.3) |

will correspondingly be termed the (displacement fluctuations) snapshot matrix.

Remark 1: The first error incurred in solving the cell equilibrium equations using the reduced basis approach arises in approximating by this space of snapshots . In order to keep this error to a minimum, one should strive to select the set of strain histories in such a way that the span of the corresponding displacement fluctuation solutions cover as much as possible the space (or at least, the region or regions of particular interest), while, at the same time, trying to keep the total number of snapshots in check –-the computational cost of the subsequent dimensionality reduction process grows considerably with the size of the snapshot matrix. In this respect, it may be interesting to note that this task of sampling the input parameter space (also known as “training”1) is somehow akin to the experimental process whereby material parameters of standard phenomenological models are calibrated in a laboratory. In this analogy, the RVE plays the role of the corresponding experimental specimen, whereas the macro-strain training trajectories represent the loading paths of the pertinent calibration tests. As opposed to the situation encountered in standard laboratory experiments, however, in the training process, one has “privileged” information regarding the phenomenological behavior of the constituents. Hindsight and elementary physical considerations can therefore aid in restricting the number of strain histories (and hence of snapshots) necessary to characterize the response. For instance, if the behavior of the materials that compose the cell is governed by rate-independent constitutive models, we know beforehand that it is not necessary to study the response under varying rates of deformation. Strategies for efficiently sampling the input parameter space in general model reduction contexts can be found in Refs. [58,59,60,61].

(1) The term “training”, which, incidentally, is borrowed from the neural network literature [57], is used throughout the text to refer to the offline generation of snapshots.

3.1.2 Dimensionality reduction

The next and definitive step in the transition from the high-dimensional finite element space to the desired reduced-order space –-in which the cell BVP is to be finally posed–- is the dimensionality reduction process, in which, as pointed out in the introductory Chapter, the dominant deformational patterns of the cell response are identified and unveiled by washing out the “inessentials”.

3.1.2.1 Proper Orthogonal Decomposition

To accomplish this central task, we employ here the Proper Orthogonal Decomposition (POD). The formal statement of the POD problem goes as follows: given the ensemble of snapshots , find a set of orthogonal basis functions () such that the error defined as

|

|

(3.4) |

is minimized. Here, represents the projection of onto the subspace spanned by the basis functions , and symbolizes the norm. It is shown in Ref. [62] that the solution of this optimization problem can be obtained by first solving the following eigenvalue problem:

|

|

(3.5) |

where is a symmetric matrix defined as:

|

|

(3.6) |

i.e., is the inner product between snapshots and . In statistical terms, can be interpreted as a covariance matrix: the off-diagonal entries captures the degree of linear correlation or redundancy between pair of snapshots (the covariance), whereas the diagonal terms are the variances [63]. The goal in diagonalizing by solving the eigenvalue problem (3.5) is to re-express the snapshots data in axes that filter out the redundancies and reveals the actual dominant displacement fluctuation patterns.

Since , expression (3.6) for the covariance matrix can be rewritten in terms of the snapshot matrix as follows:

|

|

(3.7) |

or in matrix form:

|

|

(3.8) |

where

|

|

(3.9) |

Note that, except for the density factor, this matrix is similar to the “mass matrix” appearing in finite element implementations of dynamical problems.

Once the eigenvalues problem (3.5) has been solved, the desired reduced basis is calculated from the largest eigenvalues and associated eigenvectors through the following expression:

|

|

(3.10) |

(modes are judged to be essential or dominant, and hence worthy of being included in the reduced basis set, if their associated eigenvalues have relatively large magnitudes). Substitution of into the above equation yields:

|

|

(3.11) |

where1 is given by

|

|

(3.12) |

and stands for the value of the basis function at the node of the finite element grid. The matrix defined by the above equation will be hereafter called the reduced basis matrix. Each column () of this matrix can be compactly expressed in terms of the snapshot matrix as follows:

|

|

(3.13) |

3.1.2.2 Singular value decomposition

Instead of solving the eigenvalue problem (3.5), and then obtaining the reduced basis matrix from expression (3.12), one can alternatively compute this basis matrix using the Singular Value Decomposition. Indeed, let be the Cholesky decomposition of , and let denote the matrix defined as:

|

|

(3.14) |

It can be shown (see Appendix A) that the column of the reduced basis matrix is related to the left singular vector of , denoted by , through expression

|

|

(3.15) |

3.1.2.3 Elastic/Inelastic reduced basis functions

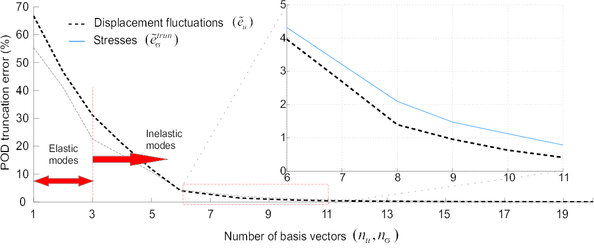

The POD can be viewed as a multidimensional data fitting procedure intended to obtain a sequence of orthogonal basis functions whose span best approximate the space of snapshots. As such, the POD is a purely data-driven process, “agnostic” to the physical origin of the data [64,63]. For instance, for POD basis construction purposes, it is completely immaterial whether a given snapshot corresponds to a purely linear elastic solution or to a solution well into the inelastic regime. The task of discriminating which features of the cell response are essential and which are not is exclusively guided by statistical considerations: if the elastic response happens to be poorly represented within the snapshot ensemble, the POD may regard as unimportant the contribution of these snapshots, and, as a consequence, the basis functions with largest associated eigenvalues –-i.e., the essential modes–- would hardly contain any information of this range. To accurately replicate the apparently trivial linear elastic behavior, thus, one may be forced to take a relatively large number of basis functions, and this may translate into a significant increase in the overall online computational cost. This fact certainly places the POD-based reduced basis approach at a competitive disadvantage compared with semi-analytical homogenization approaches such as the Nonlinear Transformation Field Analysis [20], which do capture exactly (and effortlessly) the linear elastic response of the cell.

To eliminate this shortcoming, we propose here a slightly different strategy for constructing the reduced basis. The essence of the proposal is to partition the space of snapshots into elastic () and inelastic () subspaces:

|

|

(3.16) |

( symbolizes direct sum of subspaces [65]) and then obtain the reduced basis as the union of the bases for both subspaces. Below, we describe this strategy more in detail.

The first step is to determine an orthogonal basis for . One can do this by simply performing independent, linear elastic finite element analysis of the cell ( for 3D problems, and for plane strain), and then orthonormalizing the resulting displacement fluctuation fields. These elastic modes will be considered as the first basis functions of the reduced basis:

|

|

(3.17) |

Once we have at our disposal this set of elastic basis functions, we compute the (orthogonal) projection of each snapshot onto the orthogonal complement of (which is precisely the aforementioned inelastic space ):

|

|

(3.18) |

It is now on this ensemble of inelastic snapshots that the previously described POD is applied to obtain the remaining basis functions. Thus, we finally have:

|

|

(3.19) |

for 3D problems, and

|

|

(3.20) |

for plane strain.

Observation 2: In placing the elastic modes within the first positions, the reduced-order model is guaranteed to deliver linear elastic solutions with the same accuracy as the underlying (full-order) finite element model (obviously, provided that ).

Further details concerning the numerical implementation of this apparently novel –-to the best of the authors' knowledge–- basis construction strategy can be found in Appendix B.

(1) It is necessary at this point to further clarify the notation employed for referring to the basis functions. A bold italic “phi” symbol with one subscript is employed to denote the basis function itself, i.e., (). Bold normal “phi” symbols, on the other hand, are employed to represent values of such basis functions at the nodes of the underlying finite element mesh. For instance, the value of the basis functions at the node is symbolized as . When accompanied by only one (lowercase) subscript, the bold normal “phi” symbol denotes a column vector containing the nodal values of the pertinent basis functions at all gauss points: (). Lastly, when no subscript is attached, represents the reduced basis matrix: .

3.2 Galerkin projection onto the reduced subspace

We now seek to pose the boundary-value problem represented by Eq.(2.13) in the reduced-order space spanned by the basis functions . To this end, we approximate both test and trial functions by the following linear expansions:

|

|

(3.21) |

|

|

(3.22) |

and being the low-dimensional approximations of trial and test functions, respectively (hereafter, asterisked symbols will be used to denote low-dimensional approximations of the associated variables). Inserting Eqs. (3.21) and (3.22) into Eq.(2.13), and exploiting the arbitrariness of coefficients (), we arrive at the following set of equilibrium equations:

|

|

(3.23) |

Expressing now the reduced basis functions in the above equation in terms of finite element shape functions (through expression ), we get (in Voigt's notation):

|

|

(3.24) |

or more compactly:

|

|

(3.25) |

Here, denotes the vector containing the reduced displacement fluctuations –-the basic unknowns of the reduced-order problem:

|

|

(3.26) |

and stands for the reduced “B-matrix”, defined as:

|

|

(3.27) |

and that connects the gradient of the displacement fluctuation field with the vector of reduced displacement fluctuations, i.e.:

|

|

(3.28) |

For implementational purposes, it is more expedient to express Eq.(3.27) in terms of elemental matrices. To this end, we write:

|

|

(3.29) |

where denotes the local -matrix of element (, in turn, is the number of nodes in ). Thus,

|

|

(3.30) |

In the above equation, represents the block matrix of corresponding to the nodes of finite element ().

4 Numerical integration

4.1 Classical Gauss quadrature: the standard ROM

A straightforward –-but, as already mentioned, ostensibly inefficient–- route for numerically evaluating the integral appearing in the reduced-order equilibrium equation (3.24) is to simply use the same Gauss quadrature formulae and the same set of Gauss points as the underlying finite element model (see Eq.(2.24)):

|

|

(4.1) |

Low-rank approximations that employ the underlying finite element Gauss points for numerically evaluating integrals in the weak statement of the problem are commonly known as standard reduced-order models [66].

4.2 Efficient numerical integration: the High-Performance ROM (HP-ROM)

4.2.1 Overview

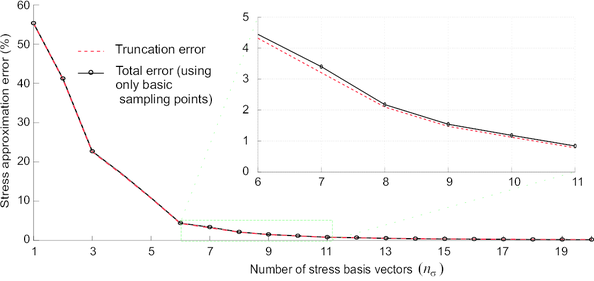

As outlined in the introductory Chapter, approaches found in the model reduction literature that, directly or indirectly, deal with the still underexplored question of how to efficiently –-at an online computational cost independent of the dimension of the underlying finite element model–- integrate reduced-order equations can be broadly classified either as interpolatory approaches [28,29,30,31,32] or Gauss-type quadrature methods [33,34]. The integration strategy proposed in the present work falls into the former category of interpolatory approaches. Recall that the basic idea in such approaches is to replace the nonaffine term in the BVP by a low-dimensional approximation. In our case, a glance at the reduced-order equilibrium equation (3.24) reveals that such “offending”, nonaffine term is the stress field –-the reduced -matrix is independent of the input parameter and hence need not be subject to approximation. The proposed integration scheme, thus, is predicated on the assumption that, not only the displacement fluctuations, but also the stress field over the cell admits an accurate, low-dimensional approximation. Numerical experiments confirm (see Chapter 5) that, luckily, this premise generally holds whenever the displacement fluctuations field itself lives in a low-dimensional space.

Let () denote a set of orthogonal basis functions for such low-dimensional, stress approximation space. Then, the reduced-order approximation of the stress field may be written as:

|

|

(4.2) |

(notice that, in keeping with the notational convention introduced in Section 3.2, the low-dimensional approximation of the stress field is represented by attaching an asterisk to the stress symbol). In the spirit of classical polynomial quadrature (such as Newton-Cotes formulae [35]), coefficients () in Eq.(4.2) are calculated by fitting this linear expansion to the stress values computed at a set of pre-specified sampling points:

|

|

(4.3) |

Approximation (4.2) becomes therefore expressible as:

|

|

(4.4) |

where () stands for the interpolation or, more generally, reconstruction operator1 at sampling point , whereas

|

|

(4.5) |

represents the stress vector evaluated at sampling point through the pertinent constitutive relation .

Substitution of the above approximation into equation Eq.(3.25) leads to:

|

|

(4.6) |

The bracketed integral (denoted by ) in the above equation is independent of the input parameter –-the macroscopic strain –-, and, hence, it can be entirely pre-computed offline, using, for instance, the full set of finite element Gauss points:

|

|

(4.7) |

Introducing the above definition into Eq.(4.6) finally yields the quadrature formula for the reduced equilibrium equation:

|

|

(4.8) |

It is noteworthy that this quadrature formula requires evaluation of stresses at only sampling points within the cell domain (in contrast to the standard ROM (4.1), that needs Gauss points). Furthermore, inserting Eq.(4.4) into Eq.(2.14), we get:

|

|

(4.9) |

where

|

|

(4.10) |

Note that () can also be pre-computed offline.

In summary, in projecting the cell equilibrium equation onto the displacement fluctuations, reduced space, and in additionally adopting the above described integration scheme, one automatically arrives at a reduced-order model in which the operation count –-the complexity–- in both solving the cell equilibrium equation and calculating the macroscopic stress tensor depends exclusively on the dimension of the reduced basis. We shall refer to this model as the High-Performance, Reduced-Order Model (HP-ROM), to highlight the tremendous gains in performance that affords this model over the previously described standard ROM, let alone over the full-order, finite model, discussed in Section 2.3–-in the numerical example shown in Chapter 5, we report speedup factors of above three order of magnitudes.

(1) The term interpolation usually connotes that the fit is exact at the sampling points, and this only occurs when .

4.3 Stress approximation space

Two crucial aspects of the integration scheme sketched in the foregoing remains to be addressed, namely, the determination of the vector space (hereafter denoted by ) in which the low-dimensional approximation of the stress field should lie in order to obtain an accurate and at the same time well-posed HP-ROM; and the calculation of the optimal location of the sampling or integration points at which the stress tensor is to be evaluated. Attention here and in the next Section is confined to the aspect related to the stress approximation space, while the discussion of the issue related to the selection of sampling points is deferred to Section 4.5.

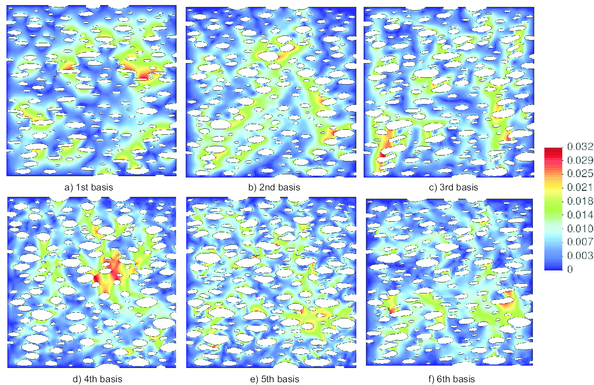

4.3.1 The reduced-order subspace of statically admissible stresses (Vσ*)

Similarly to the problem addressed in Chapter 3 concerning the reduced basis for the displacement fluctuations, the problem of constructing a -dimensional representation of the stress field reduces, in principle, to finding a set of orthogonal basis functions () such that its span accurately approximates the set of all possible stress solutions –-that is, the set of all statically admissible stresses. Accordingly, the procedure to compute the reduced basis for the stress field would be, mutatis mutandis, formally identical to that explained earlier for the displacement fluctuations. Firstly, finite element, stress distributions over the cell are computed for representative, input macro-strain histories (the most practical and somehow consistent choice regarding these strain trajectories is to use the same as in the computation of the displacement fluctuations snapshots). Then, the elastic/inelastic dimensionality reduction process set forth in Section 3.1.2 is applied to the resulting ensemble of stress solutions , in order to identify both the elastic and the essential inelastic stress modes. The space spanned by these modes will be denoted hereafter by and termed the reduced-order subspace of statically admissible stresses:

|

|

(4.11) |

4.3.2 Ill-posedness of the HP-ROM

At first sight, it appears reasonable to simply construct the low-dimensional approximation required in the proposed integration method as a linear combination of the above described stress reduced basis–- hence making –-; i.e.,

|

|

(4.12) |

where (). This strategy of approximating the offending, nonaffine term in the BVP by a linear combination of pre-computed basis functions –-obtained, in turn, from samples of the nonaffine term evaluated at the solution–- has been successfully applied by several authors, with no apparent –-or at least not reported–- computational pitfalls, to a wide gamut of problems: nonlinear monotonic elliptic and nonlinear parabolic BPVs [67,29], nonlinear miscible viscous fingering in porous media [68,31], uncertainty quantification in inverse problems [69], and nonlinear heat conduction problems [32,66], to cite but a few.

However, a closer examination of the the cell equilibrium problem reveals that, in this case, this “standard” strategy proves completely fruitless, for it leads to patently ill-posed reduced-order equations. To show this, let us first substitute approximation (4.12) into Eq.(3.24):

|

|

(4.13) |

By virtue of Eq.(3.27), the bracketed integral in the preceding equation can be rephrased as:

|

|

(4.14) |

Each basis function () is, by construction (see Chapter 3), a linear combination of the stress snapshots collected during the offline, finite element analysis; thus, we can write:

|

|

(4.15) |

being the corresponding coefficients in the linear combination. Inserting the above equation into Eq.(4.14) and considering that () are finite element stress solutions –-and therefore fulfill the finite element equilibrium equation (2.22)–-, we finally arrive at:

|

|

(4.16) |

that is, the integral (4.14) appearing in the equilibrium equation (4.13), and hence, the left-hand side of the equation itself, vanishes identically regardless of the value of the modal coefficients (), and therefore, regardless of the value of the reduced displacement fluctuations –-hence the ill-posedness.

4.3.3 Proposed remedy: the expanded space approach

It is clear from the foregoing discussion that the root cause of the ill-posedness lies in the fact that the set of all admissible stress fields () forms a vector space, and, consequently, the POD stress modes () –-and any linear combination of them–- turn out to be self-equilibrated fields. Thus, for the reduced-order problem to be well-posed, the approximation space cannot be only formed by statically admissible stresses, but it must also include statically inadmissible fields –-i.e. stress functions that do not satisfy the reduced-order equilibrium equation (3.24).

One plausible route for determining a low-dimensional approximation space that embraces both statically admissible and statically inadmissible stresses might be to collect, during the offline finite element calculations, not only converged stresses, but also the unconverged ones –-i.e., those generated during the corresponding iterative algorithm–-, and then perform the POD-based dimensionality reduction over the whole ensemble of snapshots1. In the present work, however, we pursue an approach that precludes the necessity of undertaking this computationally laborious and in some aspects objectionable –-there is no guarantee that the span of selected, unconverged stress snapshots covers the entire space of statically inadmissible stresses–-process. The idea behind the employed approach was originally conceived, but not fully developed, by the authors in a recent report [36]. Here, the theory underlying such an idea is further elaborated and cast into the formalisms of functional analysis.

(1) Incidentally, this way of proceeding has the flavor of the nonlinear model reduction strategy advocated by Carlberg and co-workers [78,80], in which the reduction is carried over the linearized form of the pertinent governing equation

4.3.3.1 Continuum formulation

To originate our considerations from a general standpoint, it proves convenient first to rephrase the left-hand side of the reduced-order equilibrium equation Eq.(3.24) as the action of a certain linear operator on the stress field over the cell:

|

|

(4.17) |

Invoking now the orthogonal decomposition of induced by this operator, one obtains:

|

|

(4.18) |

where stands for the nullspace of . Since the cell equilibrium equation has a vanishing right-hand side term, it follows that is actually the space of statically admissible stress fields. Its orthogonal complement, , can be therefore construed as the aforementioned space of statically inadmissible stresses. The key fact here is that such a space is inherently -dimensional and, thus, there is no need to perform any dimensionality reduction whatsoever over unconverged snapshots to arrive at the desired basis: the strain-displacement functions themselves are linearly independent (albeit not orthogonal) and can thereby serve this very purpose.

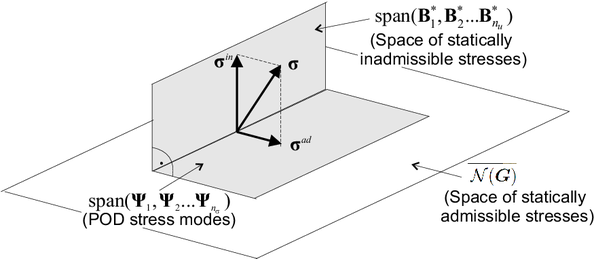

According to the preceding decomposition, any can be resolved as (see Figure 2):

|

|

(4.19) |

where and stand for the statically admissible and statically inadmissible components of , respectively. Following the standard approach, the statically admissible component –-i.e., the stress solution we wish to calculate for a given input –- is forced to lie in the span of the POD modes () obtained from converged snapshots:

|

|

(4.20) |

() being the corresponding modal coefficients. The non-equilibrated component , on the other hand, resides naturally in the span of the reduced strain-displacement functions, so we can directly write–-i.e., without introducing further approximations–-:

|

|

(4.21) |

with (). The low-dimensional approximation required in the proposed integration method, denoted in what follows by (the appended superscript “ex” means ``stress approximated in the expanded space), is finally obtained as the sum of Eq.(4.20) and Eq.(4.21) :

|

|

(4.22) |

Substituting the above approximation into the equilibrium equation, one gets:

|

|

(4.23) |

Since are linearly independent functions, it becomes immediately clear that the above equations holds only if:

|

|

(4.24) |

i.e., if the coefficients multiplying () are identically zero. In adopting the proposed integration approach, thus, the reduced-order cell equilibrium problem (3.24) is transformed into the problem of finding, for a given input macroscopic strain tensor , the reduced displacement fluctuations vector that makes the non-equilibrated component (defined in Eq.(4.21)) to vanish.

In a nutshell, the ill-posedness exhibited by the discrete problem when adopting the standard approach of using only POD modes is eliminated by expanding the stress approximation space so that it embraces also the span of the reduced strain-displacement functions (or strain modes2) ():

|

|

(4.25) |

4.3.3.2 Discrete formulation

In typical finite element implementations, both stresses and gradients of shape functions are only calculated and stored at the Gauss points of the underlying spatial discretization. For practical reasons, thus, it proves imperative to reformulate the above explained expanded space strategy and treat both magnitudes as spatially discrete variables, defined only at such Gauss points.

The discrete counterparts of the continuously defined fields and () will be denoted by and , and termed the global stress vector, and the global matrix of strain modes, respectively. The global stress vector is constructed by stacking the stress vectors () at the Gauss points of the finite element grid into a single column vector:

|

|

(4.26) |

Similarly, the global matrix of strain modes is constructed as:

|

|

(4.27) |

With definitions (4.26) and (4.27) at hand, the standard ROM equilibrium equation (4.1) can be readily rephrased in the following compact, matrix form:

|

|

(4.28) |

where is a diagonal matrix containing the weights at each Gauss point:

|

|

(4.29) |

(here, denotes the identity matrix). Assuming that () –-Gauss quadrature rules with negative weights are excluded from our considerations–-, one can reexpress Eq.(4.28) as:

|

|

(4.30) |

where symbolizes the following inner product:

|

|

(4.31) |

Equation (4.30) reveals that any statically admissible global stress vector is orthogonal to the global strain modes vectors () in the sense of the inner product induced by . In other words, in approximating the integral of the internal forces by Gauss quadrature, the orthogonality condition translates into orthogonality in the sense of Eq.(4.31). From the point of view of numerical implementation, however, it is preferable to cast the equilibrium condition and the subsequent developments in terms of the standard euclidean scalar product in –-working with the inner product defined by Eq.(4.31) is somehow a nuisance and complicates unnecessarily the involved algebra. This can be achieved by inserting the Cholesky decomposition of the weights matrix

|

|

(4.32) |

into Eq.(4.26):

|

|

(4.33) |

Defining now the weighted global stress vector and weighted matrix of strain modes as

|

|

(4.34) |

and

|

|

(4.35) |

respectively, and inserting these definitions into Eq.(4.26), one finally arrives at:

|

|

(4.36) |

or equivalently:

|

|

(4.37) |

which shows that any statically admissible weighted stress vector is orthogonal, in the sense of the standard euclidean inner product, to the weighted strain modes ().

Comparing Eq.(4.36) with Eq.(4.17), it becomes clear that plays the same role as operator in Eq.(4.17). In analogy with Eq.(4.18), thus, we can write

|

|

(4.38) |

where and denote the null space and the range (or column space) of and , respectively, and consequently decompose any as

|

|

(4.39) |

with and . As in the continuous case (see Eq.(4.20)), the statically admissible component is now approximated by a linear combination of POD basis vectors obtained from converged stress snapshots (the methodology for obtaining these modes using the SVD is thoroughly explained in Appendix B.2):

|

|

(4.40) |

where

|

|

(4.41) |

denotes the (weighted) stress basis matrix and stands for the vector of modal coefficients associated to such a basis matrix. Likewise, since the non-equilibrated component pertains to the column space of , we can directly write

|

|

(4.42) |

where . The low-dimensional (weighted) stress vector required in the proposed integration method is finally obtained as the sum of Eq.(4.42) and Eq.(4.40).

|

|

(4.43) |

or in a more compact format:

|

|

(4.44) |

where

|

|

(4.45) |

and

|

|

(4.46) |

The matrix defined by Eq.(4.45) will be hereafter called the expanded basis matrix for the (weighted) stresses, whereas will be correspondingly termed the expanded vector of modal coefficients. Inserting approximation (4.43) into Eq.(4.36), and considering that and that is a full rank matrix, one finally arrives at the same equilibrium condition derived in the continuum case (see Eq. 4.24):

|

|

(4.47) |

Once the above equation is solved for , the desired equilibrated stress vector is obtained by evaluating Eq.(4.40):

|

|

(4.48) |

(2) Indeed, functions () can be viewed as fluctuating strain modes, since they are the symmetric gradient of the displacement fluctuation modes, see Eq. 3.27.

4.4 Determination of modal coefficients

The next step in the development of the proposed integration scheme is to deduce closed-form expressions for the vectors of modal coefficients and in terms of the stress values computed at a set of pre-specified sampling points (to be chosen among the set of Gauss points of the underlying finite element mesh). To this end, we need first to introduce some notation and terminology.

4.4.1 Gappy vectors

Let denote the set of indices of sampling points. Notationally, we write to designate the subvector of containing the rows associated to these sampling points; viz.:

|

|

(4.49) |

(When confusion is not apt to arise, the parenthetical subscript indicating the set of sampling indices will be dropped, and we shall simply write ). It proves conceptually advantageous to regard this restricted or “gappy” –-a terminology that goes back to the work of Everson et al. [70]–- stress vector as the result of the application of a certain boolean operator over the full vector :

|

|

(4.50) |

We call the selection operator associated to sampling indices . This operator can be of course applied to any (). For instance, the restricted matrix of weighted strain modes would be defined as:

|

|

(4.51) |

Furhtermore, it is straighforward to show that

|

|

(4.52) |

(here is the x identity matrix) and that

|

|

(4.53) |

for any and .

4.4.2 Least-squares fit

In the spirit of classical polynomial quadrature, such as Newton-Cotes formulae [35], the modal coefficients and are determined by fitting the low-dimensional approximation (4.43) to the weighted stresses calculated at the pre-specified sampling points. It should be noticed that, the variable subject to approximation –-the stress–- being a vector-valued function, the total number of discrete points to be fitted does not coincide with the number of spatial sampling points (), but rather is equal to the product of such a number times the number of stress components (). The well-posedness of the fitting problem, thus, demands that:

|

|

(4.54) |

i.e., the number of discrete points must be equal or greater than the number of parameters to be adjusted. For the equality to hold, both and have to be multiple of ; thus, an exact fit is in general not possible for arbitrary values of and , and recourse to an approximate fit is to be made. In this respect, we follow here the standard approach of using a least-squares, best-fit criterion, i.e., minimization of the squares of the deviations between “observed” () and fitted () values (in our context, “observed” signifies “calculated through the pertinent constitutive equation”). This minimization problem can be stated as:

|

|

(4.55) |

where stands for the standard euclidean norm. Let be the gappy expanded basis matrix, and suppose that the sampling indices have been chosen so that has full rank, i.e.:

|

|

(4.56) |

Then, it can be shown (see, for instance, Ref. [71]) that the solution of this standard, least-squares problem is provided by the following vector of coefficients:

|

|

(4.57) |

where

|

|

(4.58) |

is the so-called pseudo-inverse of matrix .

Recall that our ultimate aim is to derive closed-form expressions for and as functions of . Thus, it remains to extricate these two sub-vectors from expression (4.57). This can be done by first partitioning both and in terms of the gappy stress basis matrix and the gappy matrix of strain modes :

|

|

(4.59) |

Invoking the blockwise inverse formula for x block symmetric matrices [72], and upon tedious algebra –-that has been relegated to Appendix C–-, one finally arrives at the following expressions for and

|

|

(4.60) |

|

|

(4.61) |

where denotes the pseudoinverse of the gappy stress basis matrix :

|

|

(4.62) |

and is the so-called Schur complement [72] of in :

|

|

(4.63) |

(note that this matrix is invertible by virtue of the hypothesis represented by Eq.(4.56)).

Reconstruction matrix

Let us first examine expression (4.60) for the modal coefficients –-those that multiply the statically admissible component of the global stress vector. Since, at the solution, , we have that:

|

|

(4.64) |

(Notice that this result can also be obtained by directly solving minimization problem (4.55) with ). Substitution of this equation into Eq.(4.48) yields:

|

|

(4.65) |

where

|

|

(4.66) |

Inspection of Eq.(4.65) reveals that the matrix defined above is the operator that allows one to reconstruct the (weighted) statically admissible stress vector using only the (weighted) stress values () calculated at the pre-selected sampling points . For this reason, we shall use the term weighted reconstruction matrix (or simply reconstruction matrix) to refer to this operator. It must be emphasized here that this matrix only depends on the POD stress basis matrix and on the selected sampling indices –-i.e., it is independent of the input parameter, the macro-strain –-and, therefore, it can be pre-computed offline.

4.4.3 “Hyperreduced” cell equilibrium equation

As for the expression for the set of “statically inadmissible” coefficients , we know that, at the solution, these coefficients must vanish; thus, from Eq.(4.61), we have

|

|

(4.67) |

Since is a nonsingular matrix, the above condition is equivalent to

|

|

(4.68) |

Furthermore, examination of Eq.(4.66) and Eq.(4.68) readily shows that the bracketed term in Eq.(4.68) is nothing but the submatrix of the reconstruction matrix formed by the rows associated to sampling points , i.e.:

|

|

(4.69) |

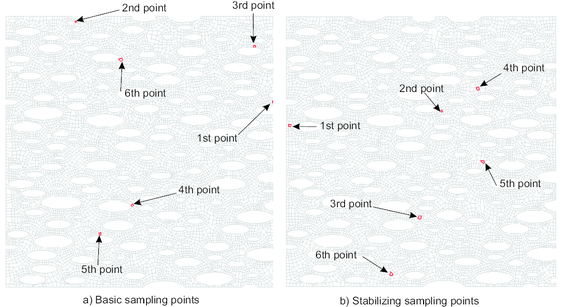

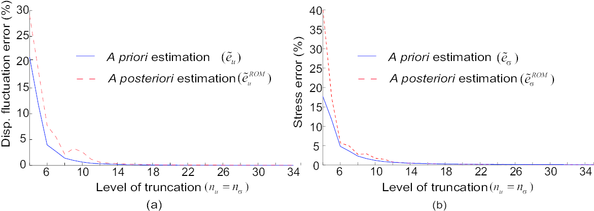

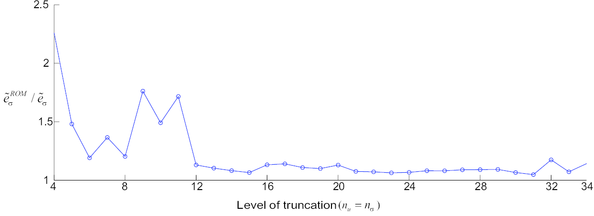

Substitution of expression (4.69) into Eq.(4.68) finally leads to: